Month: May 2020

MMS • Lin Sun Neeraj Poddar

Article originally posted on InfoQ.

Transcript

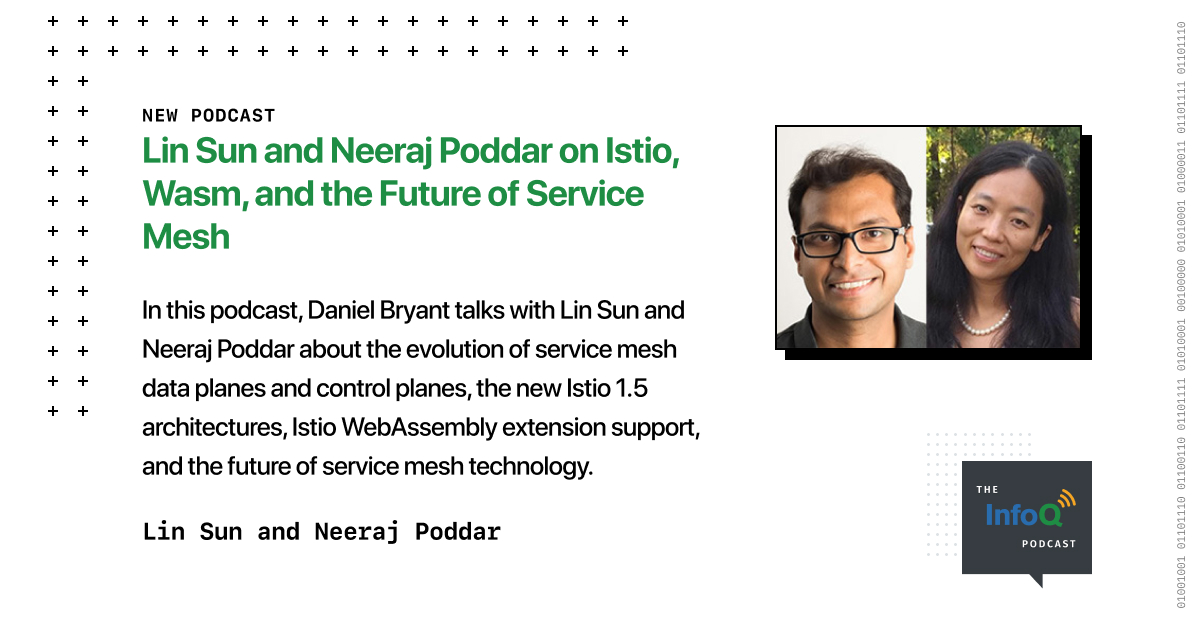

Hello. Welcome to “The InfoQ Podcast.” I’m Daniel Bryant, the News Manager at InfoQ, and Product Architect at Datawire. I recently had the pleasure of sitting down with Lin Sun, Senior Technical Staff Member and Master Inventor at IBM, and Neeraj Poddar, Engineering Lead and Architect at Aspen Mesh. Recently, the InfoQ team and I published an ultimate guide on the topic of service meshes, which you can find at infoq.com. As a result of this, Neeraj and I had exchanged a few emails discussing the use cases for service mesh, and also exploring what the future holds in this space. When he referenced some of the things that he’d learned from Lin and her very interesting work around Istio, the idea was born for us all to get together and discuss this topic in more depth.

Istio is a service mesh that was originally created by Google, IBM, and Lyft, but now has a very large community of people and organizations contributing to it. Both Lin and Neeraj are very active within this space. Although other service meshes do of course exist, I was keen to dive into the recent developments within Istio, particularly the 1.5 release which included several architectural changes, for example, moving to a monolithic control plane, and also, the addition of WebAssembly, or wasm support for creating plugins at the data plane.

Hello, Lin. Hello, Neeraj. Welcome to “The InfoQ Podcast.”

Sun: Hi guys, super excited to be here.

Poddar: Hi, Daniel. It’s nice to be here.

Background

Bryant: Could you briefly introduce yourself please and share a recent career highlight or background.

Poddar: I’m the Co-founder and Chief Architect at Aspen Mesh. I’ve been working in the service mesh and Istio space for the past two-and-a-half, three years with Lin and a bunch of other good community folks. As far as the highlights go, I think one of the best parts of co-founding a company is seeing the company grow. I’ve seen it grow from 3 people to now 25-plus. It’s amazing to see how people can take on hard challenges and come out the other end with flying colors. I really enjoy that part. I’m still enjoying it as our team is growing. Working with the Istio community and helping folks from our company integrate with it has been really satisfying. That has been a really good highlight for me.

Sun: Hi, my name is Lin Sun. I’m a Senior Technical Staff Member and Master Inventor at IBM. I’ve been on the Istio project for a long time now, almost three years now. Looking back at the highlight, last year, me and my co-author, Dan Berg, actually produced a book about Istio, “Istio Explained.” It took a lot of nights and weekend effort. It’s interesting to be able to put some documents together for our user from different types of scenarios, how a user can adopt Istio, how a user can look at the landscape of service mesh. That’s a small highlight. We’re really committed to this project.

Problem Spaces and Motivations for Service Mesh

Bryant: I know starting a company and writing a book are both amazing achievements, so kudos to you both there. Obviously, we’ve been talking about service mesh here on and off for many years now, but I have to admit that for many folks this is still new. I’d like to get your take on what the problem spaces or the motivations for service mesh are.

Sun: We were seeing a trend of people moving from monoliths to microservices. As part of that trend, as people go into microservices and cloud native, we’ve observed that there’s a common set of problems among these microservices that many people start trying to solve. For example, how am I going to connect to my microservices? How am I going to do retries? How am I going to observe my microservices? How am I going to secure the communication of my microservices? How am I going to enforce policies on my microservices? We’ve seen a set of problems, and people started to solve them for each individual language, like Netflix OSS did. I would call that a first generation of service mesh, specific to a particular programming language. Then we noticed the trend that people are not always going to write in one language. They’re going to work with multiple different languages. I think that’s when the service mesh notion was more formally born, around using a sidecar proxy to take care of all that for microservices, and then having a control plane to allow a user to intelligently program their sidecar proxies.

Poddar: What Lin said makes a lot of sense. I think for me, another thing to add on that will be, as companies have been moving or traditional enterprises are trying to compete with innovators in this space, they are realizing that the real competitive advantage is their developers and the ability to add applications at a far greater pace than their competitors who are trying to disrupt this space. In that sense, as people are moving towards microservices, they realize that a lot of the work in microservices from developers gets tangled with the work with operators. That’s what a service mesh gives you, in addition to what Lin said. It gives you a way of basically separating those layers where each of those personas can do their own work, and still be successful, and give the best outcome for the company. Operators try to enforce organizational policies. They were able to do that in monoliths through creating load balancers and network devices like BIG-IPs and whatnot. You want the same policies, but you don’t want to push that burden on developers. That’s what service mesh gives you, that decoupling between the operators and the developers, in my mind.

The Control Plane and Data Plane

Bryant: Another problem that I see folks getting their heads around is the distinction between a control plane and a data plane. Lin, you already mentioned the sidecar proxies there. Neeraj, you mentioned some of these topics as well. Would one of you mind breaking down how you see the control plane and the data plane, please?

Poddar: Whenever you have application data flowing through your system, you want that application data to flow through as little as possible so that it’s performant, but you still want to apply those policies. In Istio land, wherever your application data flows through is what constitutes the data plane. This is mostly the gateways and the sidecar proxies, which collectively make up the mesh. As an operator and a user of the mesh, you have to configure things. You have to configure things in the language of the platform that you’re comfortable with. That’s where the control plane comes in. The control plane takes in the user-provided configuration, whether it’s platform configuration from Kubernetes or Istio-specific configuration, provides an abstraction layer, and converts it to data plane-specific configuration. It gives you the right separation, and at the same time it makes the data plane more pluggable. In the future we have the flexibility of switching out the proxy if we want. I really like the way Istio was started with this flexibility in mind, and this nice separation fits very well for people who are used to this paradigm in traditional networking.

Sun: I totally agree with that, Neeraj. One thing I would add is that, from a user perspective, the abstraction layer provided by the control plane has been providing tremendous value to our users. One time I actually did an exercise myself: I deployed the simple Bookinfo sample of Istio and took a peek at the proxy configuration of one of the microservices. There were thousands of lines of Envoy configuration, and I wasn’t even doing anything fancy. It was round robin routing, and maybe header-based routing. That’s it. What Istio really provides, through Istio resources, is a way for users to program against an abstraction layer of virtual services and destination rules and interact with the control plane. That configuration is then translated into the more complicated Envoy configuration, or whatever proxy configuration it is. That’s real value to our users, who don’t need to understand all the details of the proxy configuration.
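
To make the abstraction-layer point concrete, here is a toy sketch in Python of what a control plane does in spirit: it takes a few lines of user intent (a default subset plus one header-based match) and expands them into a more verbose per-proxy structure. Every name here (`RouteRule`, `expand_to_proxy_config`, the dictionary keys, the cluster naming) is hypothetical and chosen only for illustration; this is not Istio's or Envoy's actual schema.

```python
from dataclasses import dataclass, field


@dataclass
class RouteRule:
    """Hypothetical, simplified user-facing routing intent."""
    host: str
    # Maps (header name, exact value) -> subset that should receive the traffic.
    header_matches: dict = field(default_factory=dict)
    default_subset: str = "v1"


def expand_to_proxy_config(rule, endpoints):
    """Expand a small RouteRule into a verbose, proxy-style config dict.

    `endpoints` maps a subset name to a list of "ip:port" strings.
    The output layout is illustrative only.
    """
    # One upstream cluster per subset, each with round robin balancing.
    clusters = [
        {
            "name": f"{rule.host}-{subset}",
            "lb_policy": "ROUND_ROBIN",
            "endpoints": [{"address": addr} for addr in addrs],
        }
        for subset, addrs in sorted(endpoints.items())
    ]

    # Header-based routes come first so they win over the catch-all route.
    routes = [
        {
            "match": {"prefix": "/", "headers": [{"name": name, "exact": value}]},
            "route": {"cluster": f"{rule.host}-{subset}"},
        }
        for (name, value), subset in rule.header_matches.items()
    ]
    routes.append({
        "match": {"prefix": "/"},
        "route": {"cluster": f"{rule.host}-{rule.default_subset}"},
    })

    return {
        "virtual_hosts": [{"domains": [rule.host], "routes": routes}],
        "clusters": clusters,
    }


rule = RouteRule(host="reviews", header_matches={("end-user", "jason"): "v2"})
config = expand_to_proxy_config(
    rule, {"v1": ["10.0.0.1:9080"], "v2": ["10.0.0.2:9080"]}
)
```

Even in this toy version, a two-field rule fans out into several nested structures, which is the gap between user intent and proxy configuration that Lin describes.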

Istio 1.5

Bryant: I love Envoy. I obviously work with Envoy a lot. I don’t think anyone would deny that the configuration language, the YAML, the JSON, is designed to be machine written. It’s very verbose. I totally get your picture around the control planes. You’ve already mentioned that both of you are big Istio advocates. I would say, just for neutrality purposes, that other service meshes do exist. Istio has been coming along in leaps and bounds. I keep an eye on the project. I notice over the last year in particular, it looked really nice, 1.5, the release, really good stuff. For folks who have heard of Istio but maybe haven’t been paying too much attention over the last six months, could you share some of the highlights in the project, please?

Sun: For Istio 1.5 particularly, I think one of the major highlights is really making sure a user can deploy and operate Istio a lot more easily, because prior to 1.5, a user would have to worry about multiple components of the control plane. That means they would have to worry about checking the logs for multiple control plane components, making sure each of them is upgraded correctly, making sure each of them has the right scaling policy, and making sure they are healthy and up and running. With 1.5, there’s a big change in our control plane: we are actually merging all these control plane components back into one single control plane component called Istiod, the Istio daemon. That really simplifies things for operators and administrators, who can install, deploy, upgrade, and manage that one single thing. We’re also seeing in multi-cluster land, which is where I spend most of my time in the project now, that when you have multiple clusters with Istio, it’s actually much easier to configure the remote cluster to talk to the primary cluster, per se, the other cluster, because of that one single Istio daemon. It has tremendously simplified the configuration for that.

Poddar: An interesting point here is that in Istio, and obviously Lin knows this, we try to come up with themes for every release or themes for the year. The theme for the past few releases has been operational simplicity and improved user experience. What Lin said totally falls in that camp. I just want to give you an anecdotal story: if someone is hearing this for the first time, they might be thinking it’s counterintuitive. People want us to make microservices and then use Istio to simplify the problem. Then why are you going from microservices to monolith? I totally get that. That’s why I want to put some statements out there that make sense to people who are trying to deal with this dichotomy in a way.

I think, in my opinion, what’s happening here is we went down the route of microservices assuming there are lots of different personas who will be managing different pieces of Istio. That’s why the microservices paradigm made sense. You can have Citadel, which is issuing certs, managed by security ops, and Pilot managed by somebody else, and Gateway managed by somebody else. What we found in reality is that most of the time there is one operator who is doing all the work, and adding burden on them to do upgrades and look at logs, like Lin said, for 20 different things doesn’t make sense. From the developer’s point of view, we still have that logical separation in the code. From the point of view of development in the community, of the working groups, we are still microservices. In the deployment model, we are a monolith.

Reacting is a Positive Sign of a Healthy Community

Bryant: I saw a very nice blog by Christian Posta talking about this stuff, actually. I think one of the things we can all agree on as engineers is that it’s better to look at something and go, “If we can change that, do it now,” rather than punt it a few years and then wish you’d done it. I’m not saying it was a mistake, but admitting that things change and reacting is actually a very positive sign of a healthy community, I think.

Sun: That’s very true. Yes.

Poddar: Yes. I think we both just said the same thing. It’s just so nice to recognize what your users are suffering from and what their needs are, and then to go back and look at some of the additions we have made. That’s definitely a sign of growth and maturity.

WebAssembly Support in Istio 1.5

Bryant: It’s a bit of a hot topic, a bit of a buzzword, but another thing that caught my eye in 1.5 is the WebAssembly support, the wasm support. I wouldn’t mind to get a few thoughts on what you both think of that. The potential, maybe the drawbacks with this approach with wasm as well.

Sun: I think WebAssembly support is really interesting. For Mixer, you guys all know that when Mixer was a single component, it was on the path of the data plane. Neeraj talked about the data plane as the path of the customer traffic as a request travels through their microservices. Sometimes requests have to go to the Mixer component to check if the call is even allowed. That has been an issue for many of our users. We actually don’t like that extra hop to Mixer. What if Mixer goes down? What if Mixer is deployed in a different region, or different zone? There was a lot of concern about that. I’m very excited to see the WebAssembly support in the community. I hope to see more documentation on this. Honestly, I haven’t got my hands on this a lot myself, other than the sample the Solo team put out, a really simple sample to replace the headers. I see it as a tremendous value. I really think of it as being like Docker images, but for extensions to the proxy, which in our case is the Envoy proxy. I do expect to see a lot more movement toward moving Mixer plugins into the WebAssembly runtime, and being able to distribute them through a particular hub, like WebAssembly Hub. Then other people can easily grab them and consume them in their proxy. I think that’s a really tremendous value for the community.

Poddar: That’s the immediate tactical advantage, which is super important for the users. A little bit on my background. I have worked in proxies and load balancers for the past three, four companies. Data plane programmability is a big thing for customers. Aspen Mesh is part of F5 Networks. I was part of a startup called LineRate Systems, which was acquired by F5 Networks. The reason we were acquired was we had Node.JS programmability. BIG-IP is sticky for everyone because a lot of our customers come back and say, “We had Black Friday sale, and I was able to write an iRules LX and save my site.” I think that’s the power that WebAssembly gives you. It enables users to dynamically program with a much enhanced user experience. I’m really excited for where this lands. I think this can be a game changer, not just for Istio, but the entire proxy landscape.

Envoy

Nginx has had data plane programmability through filters for quite a while now. Envoy had C++ filters, but they were really hard. Wasm on the other hand gives you a much easier user experience and developer experience. As a company and as a vendor, we have dual advantages. As a vendor, now I can make extensions for our customers without making a lot of custom changes within Envoy. Similarly, our customers can make some specific changes for what they need, which we might not be able to provide. It’s really nice in terms of performance and security, because the security paradigm here is really nice. Because of the VM isolation, the risk is low. You can’t crash your Envoy even if you have a semi-faulty filter. I wouldn’t say it is totally foolproof just yet. On the performance side, it’s very close to native machine code. Overall, I feel this is a big thing. I see a lot of good trends emerging from here in the coming months and years.

Sun: The language is a huge thing. A lot of people are having trouble with EnvoyFilter today because it’s only in C++, or Lua. What if I don’t know those two languages? I’m stuck. I don’t want to pick up a new language just to write my own filter.

Poddar: Exactly. Look at Nginx, for example OpenResty. There are so many companies which are just born out of OpenResty. As a co-founder, I just see opportunities here.

Discouraging Engineers from Putting Too Much Into a Plugin

Bryant: I totally agree with everything said here, but it’s my job to play devil’s advocate today. My background is very much in the Java space, and I did a lot of work on Netflix’s Zuul back in the day. The beauty of Zuul as an API gateway was that you could dynamically inject Groovy scripts at runtime to change functionality. As you mentioned, Neeraj, you could definitely do denial of service rejections by dropping in a script that would reject certain requests, and so forth. The flip side was, I made quite a bit of money as a consultant fixing lots of these gateways where people had put business logic in the gateway, where they’d tightly coupled their gateway and the services. I’m a little bit concerned we might see the same thing with Wasm, and Istio, and Envoy. I don’t know if either of you have got any thoughts on how we might discourage engineers from putting too much into a plugin?

Poddar: That separation is not very obvious and clear when you just start out. I think the community and all the developers need a bit of maturity to actually understand what should be in the application code and what should be a dynamic plugin, or a semi hack that you put in infrastructure code. You want your infrastructure to be stable. These should be well thought out plugins. This shouldn’t be, “I woke up at 12:00 in the night and I wrote something so that I don’t have to change my code.” If I put on my operations hat, or a security ops person’s, I think this is as critical as any other infrastructure. You can’t just drop plugins in. Will we get there? We will. It will take some time, I think. I’m totally with you that there is a concern. I think people like Lin and I should start educating now and get ahead of the curve a little bit.

Sun: In fact, we’re having similar challenges in the community here. I believe, Neeraj, we had a discussion maybe a week or two ago about some of these Envoy filters in the community: should we actually standardize and provide proper APIs in our control plane, so a user doesn’t have to build their own customized EnvoyFilter?

Poddar: Exactly. It’s hard to differentiate. When there are easy bailout things that you can do, or easy cop-out things, that’s what users tend to choose. We just need to provide some guidance and guardrails, and then, hopefully the best thing emerges.

Bryant: I should say, I’ve definitely made the mistake as well. I was sounding a bit judgmental. As you all know, I’ve definitely built my own filters, which are not a good idea as well. We’ve all done it.

Poddar: If you look at iRules repository for BIG-IP, you will see some crazy things. They’re scary.

Tips On How People Might Learn, Share, and Build Up Knowledge

Bryant: The thing is, often if you can do it, someone will do it. I’ve been there. I’ve done it early on in my career. It’s all about knowledge. That’s an interesting question in general. I’m guessing a big part of what you all do in the community with Istio is sharing this knowledge. Have you got any tips on how folks might learn or how they might contribute perhaps to sharing this and building up this body of knowledge?

Sun: I do find that we’re making this easier for our users. With Istio, at least the old perception from a lot of people was that there were a tremendous number of pieces to learn. What we have been doing, as work in progress in the community, is making sure our documents are easier to consume, and making sure people can adopt it based on their needs without having to learn everything. We’ve been trying to educate people that you don’t have to learn an Istio-specific API if you just want to get certain features like telemetry and observability of your microservices. You only need to learn a minimal Istio API if you just want to secure your microservices. We had a lot of users having a hard time with the Istio API, mainly because of the networking API, which is actually very mature now. People were having a hard time understanding the gateway API, virtual services, destination rules, and ServiceEntry. Also, I think, most importantly, how to have these APIs work together: how do I combine these resources to do what I want? That was a little bit more of a learning curve for people.

In my book, I try to educate people that when you don’t have multiple versions, you don’t really need to look at the networking API. We provide default retries for you. We have a little bit of default configuration to keep you plugging along. I would say, start based on your use cases, and focus there. Don’t try to learn everything in Istio all together, because it could overwhelm you, given the rich features the community provides.

Improving Istio, and also Taking Advantage of It

Poddar: If you try to do everything in Istio, it will take a very long time. The other thing I would like to say about this learning is that there are two sides. There are users who are trying to use Istio to their advantage. Then there are people who are trying to invest their time and improve Istio by being a developer or being a community member. I think we need to do better on both fronts. Our community should be more receptive to diverse people coming in and actually making contributions. By that, I mean we need to make sure our working groups are more open, the meetings are more open. We have to make sure that they are at a time that is appropriate for people from Asia and other regions, including the UK and Europe, to join. We are doing that. I think we are learning. It’s really good to see more and more contributions, both from developers and also from users coming back and saying, “I wanted to do this, but I actually couldn’t do it,” and then asking questions and going to GitHub and fixing it. I think we’re seeing the entire spectrum as the community is maturing more. I think both the user experience and the developer experience will be better.

How Istio Will Interoperate With the Service Mesh Interface

Bryant: I wanted to dive into a couple of deeper topics now. The first one is around interoperability. I’ve found some really interesting conversations of late around the service mesh interface, the SMI spec. I’d love to get both your thoughts on where you think the value is of that. How Istio is going to interoperate with SMI, for example.

Poddar: Here’s my thinking in general about APIs and abstractions. Any developer, when given a chance, will try to create another abstraction, thinking it simplifies life. In some cases, it makes sense. In some cases, it does not. For SMI, I think the benefit here is that the operator can change the service mesh underneath. At face value, it looks like there is a reasonable advantage to it. I have not seen many customers in my day-to-day interactions who are looking to do that on a yearly basis. Like, “I’ll go to SMI, today I will use Istio. Tomorrow, I will use Linkerd. Day after tomorrow I’ll use Consul.” I get the broader principles and why you would want to go towards SMI and maybe create an abstract layer. We have to see if one of these technologies is already emerging as the de facto standard. This is akin to Kubernetes versus Mesos versus Docker, and you know who won in the end. I’m not trying to project anything. I’m not trying to say they’re doing something which is not awesome. It’s just that I think as a user, you should be making sure that you choose it for the right reasons. Are you really in need of changing your underlying infrastructure so often? That’s my take.

Sun: I think I’m in a similar boat to Neeraj. I think it is a little bit too early in the evolution of service mesh solutions and user adoption today to conclude that we actually need a standard API specification to help our community. Think about the service mesh landscape today: we have so many service mesh vendors, and each has their own implementation. Some have more features than others. If you end up using SMI, when you’re troubleshooting problems, you still have to go into the underlying vendors to troubleshoot the problem. At the end of the day, you may end up needing to learn every single vendor that you use underneath your SMI. That’s my struggle with it right now.

Could SMI Provide a Consistent Workflow Across The Board?

Bryant: I think it’s an interesting point about standardization and the lifecycle of a product. Definitely, I did a lot of stuff in Java and the JCP. We were only standardizing the boring stuff, the stuff that we all knew, the stuff that we were building on top of. I also empathize with the SMI folks, whose goals I understand. I think one other thing I’ve heard around SMI is that it’s a bit like using HashiCorp’s Terraform. The underlying cloud infrastructure is all different, but you use a consistent workflow with Terraform.

I wonder, do you think perhaps the SMI could be in that space, in that it provides a consistent way of working? I can imagine that, to pick a number, 70% or 80% of users in service mesh land really just want to do routing, observability, mTLS, the basic stuff. Do you think the SMI could provide that consistent workflow when maybe your estate has got a bit of Consul here, Linkerd here, Kuma here, Istio here? Any big organization is going to have a few things in the mix. Do you think SMI could provide value there?

Poddar: I think once we know what those 70%, 80% use cases are, maybe. I think as those use cases emerge, and as the space consolidates, like you said, the boring stuff can be standardized. Or, is one API already the standard, because getting users to debug two APIs is definitely worse than one.

Sun: Really, it goes back to your point: how boring can it be? If it’s consistently going to work for every single platform out there, there may be value there. On the other hand, we also have to look at how Kubernetes is evolving in this space. At the last KubeCon in the U.S., I believe people were already talking about the next version of the Ingress API. I’m sure you guys have probably seen it. It also has a Route API, it has a Virtual Gateway API. It’s going to build the standard for a traffic API if that gets adopted by Kubernetes. Everybody already agrees that Kubernetes is the de facto standard for container orchestration, so that may be the API.

Poddar: I totally agree. On top of that, I’m still not convinced if this is the right API to abstract. Most of the enterprise customers that I talk to, they struggle with, I want my application to work like this. Don’t tell me networking APIs. That’s my other struggle with service mesh spec. It’s still trying to abstract networking in more networking terms. It’s not talking to the language that people want to use, which is, tell me my application behavior, and then do whatever you have to do.

Bryant: I think there’s probably a whole podcast on that, and developer experience, and a bunch of things there. There are a bunch of other topics I’d love to cover as well. Just picking another one, which I think, again, folks are asking a lot about at InfoQ, is this notion of multi-cluster support. I’ll fold into that topic as well, and it might be an abuse of powers here, what the Linkerd folks refer to as mesh expansion too. You’ve got multiple Kubernetes clusters, but you might then have some VMs, or some old mainframe stuff running out of band on your COBOL, or whatever. We’re hearing a lot about COBOL at the moment. How do you see Istio and service meshes dealing with that, where there’s not just one Kubernetes cluster? There may be many, and there may be other things in the mix too.

How Istio and Service Meshes Deal with Multi-cluster Support

Sun: We already have pretty rich support, I would say, around multi-clusters. We have official documentation around how you can set up homogeneous multi-clusters of Istio, and how you can have Istio running in one cluster for the xDS serving while on the other cluster you have a lightweight Istio running that does no xDS serving and just manages certificates and sidecar injection. In the future, we’re actually going to evolve a little bit more in this space. I’m currently working on developing a little bit more configuration to have a central Istiod notion, where people could have the remote cluster fall back totally to the primary cluster’s Istiod. That’s coming.

Multi-cluster Pattern In istio.io

We also have replicated control plane, a multi-cluster pattern in istio.io, where people can configure heterogeneous clusters, where the clusters don’t have to be the same as far as the services deployed within them. They do have a shared single root of trust, but the services don’t have to be the same. You can configure locality load balancing among these clusters, to fully leverage the different functions provided by the mesh. We also have VM expansion support, where you could potentially bring VM workloads to participate in the mesh. You can have part of your services running in VMs and part of your services running in Kube, and you could potentially load balance across them. That’s also a hot space that is evolving in Istio. We already have some guidance for our users today on those.

Poddar: We just added a new API called WorkloadEntry, which gives you a uniform way to use the Kubernetes primitives and say, “I want to add these VMs. I have migrated two of these VMs as pods. Now I want to load balance uniformly across the VMs and pods of the same service.” That’s a really interesting API. I totally agree with all the options that Lin mentioned. I think broadly, the use cases are trying to do locality-aware load balancing, or trying to do failover. I think we are there. We can do a much better job of making sure our documentation and the user experience are much better compared to where they are now.
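
As a rough illustration of the two use cases mentioned here, the following Python sketch treats pod and VM endpoints of one service uniformly, prefers healthy endpoints in the caller's locality, and fails over to other localities when none are available. It is a toy model only: the `Endpoint` type, the locality strings, and both functions are hypothetical, and this is not the WorkloadEntry API or the algorithm Istio or Envoy actually uses.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass(frozen=True)
class Endpoint:
    address: str
    kind: str        # "pod" or "vm"; the balancer deliberately ignores this
    locality: str    # hypothetical locality label, e.g. "zone-a"
    healthy: bool = True


def candidates(endpoints, client_locality):
    """Prefer healthy endpoints in the client's locality; otherwise fail over."""
    healthy = [e for e in endpoints if e.healthy]
    local = [e for e in healthy if e.locality == client_locality]
    return local if local else healthy


def round_robin(endpoints, client_locality, n):
    """Return the addresses of the next n picks, rotating over the candidates."""
    pool = candidates(endpoints, client_locality)
    if not pool:
        raise RuntimeError("no healthy endpoints available")
    picker = cycle(pool)
    return [next(picker).address for _ in range(n)]


service = [
    Endpoint("10.0.0.1", "pod", "zone-a"),
    Endpoint("192.168.1.5", "vm", "zone-a"),
    Endpoint("10.0.1.1", "pod", "zone-b"),
]
picks = round_robin(service, "zone-a", 3)  # alternates between the zone-a pod and VM
```

The design point is that the `kind` field never enters the selection logic, which mirrors the idea of balancing across VMs and pods of the same service uniformly.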

Sun: One thing I would add is troubleshooting. Sometimes if you ever run through a multi-cluster scenario, you will notice that if it fails, it’s a little bit harder to troubleshoot today. That’s an area we definitely want to look into, to provide more guidance and automation to our user.

What the Future Holds For Istio and Service Mesh

Bryant: These distributed systems. Yes, who knew they’re really hard, aren’t they? I hear you. That’s great stuff. That leads nicely into wrapping up now. What does the future hold for Istio and the service mesh space?

Poddar: This is something that I’ve been thinking about for quite a bit, not just as a community member, but also for Aspen Mesh, since our futures are quite tangled. I think there are some tactical future enhancements that I think are going to happen and emerge. Then there are more strategic ones. One tactical thing I would say, multi-cluster support, and multi-cluster being a reality is pretty obvious to us that that’s going to happen. I see more and more users coming and saying, we have these many clusters, they are multi-cluster. Tell me how I can do this versus that, whereas, last year, only Lin and I were talking about it. It’s more real.

The second thing, which is more real, is I think the scale. I constantly see people coming from big companies like Alibaba and a few others who are saying, “We have hundreds or even thousands of nodes on which Istio is deployed. Tell me how I can actually make Pilot work there. Tell me how I can make the control plane scale.” That’s a thing that, again, we have done as an abstract exercise, but now it’s real. Those are the immediate things that we are going to move towards.

The more strategic piece of the future for me is mostly around three things. One, which you’ve already touched on, is WebAssembly. I think WebAssembly will emerge and change a lot of data plane programmability, and might emerge as a proxy standard. I really look forward to the crazy things that customers and developers alike come up with, keeping in mind that that might be troublesome or create a new market for consultants. Who knows?

Second thing, which is very interesting, which I’ve seen, is the application of service mesh in different business verticals. This is around service mesh Kubernetes. They started as a need for enterprises. What we are seeing is the same needs are relevant for telcos and 5G providers. 5G vendors are going to take this space. They’re going to make this a more interesting problem and more interesting solution, similar to how the telco vendors modified how load balancers used to be talked about. I think this is very interesting for me.

Similarly, I have seen some emergence in IoT sectors where IoT folks are saying we need to create an IoT platform. That platform does not look very different from an enterprise platform, just that we are talking about different protocols. I think the long-term vision here should be, how can we make service mesh, or particularly Istio, apply to other verticals, to other protocols, without trying to reinvent the wheel? I see a fair amount of momentum there. I think, as it advances, we will go in directions that we haven’t thought about. Two directions are really interesting. One is how can we make this work in constrained environments? Service mesh right now is deployed as sidecar proxies, which take up quite a few resources. Think about if you have to run a service mesh in a far edge 5G tower. I’m really looking forward to that evolution. Similarly, performance, if you’re trying to get this to work for telcos. You have to be really high performant. Iptables might not work for you. You might have to go to some really advanced technologies. That’s exciting. Really, I think that will push us to new innovation.

Sun: I totally agree with what you said around multi-cluster, performance, and also WebAssembly providing new ways for a user to be able to extend Istio. From our perspective at IBM, we spend a lot of time on multi-cluster, making sure different interesting patterns around multi-cluster can work seamlessly for our users. We want to promote the central Istiod notion where Istiod doesn’t have to be running on the remote plane. That’s something I’m driving in the community. We’re also working on multi-revision in the community. I think that’s super interesting. You guys probably know Red Hat OpenShift already supports multi-tenancy. I’m super excited to see the environment workgroup did a lot of work on multi-revision support in the community that allows a user to run multiple versions, or even the same version, of Istiod on the same cluster, to allow a user to seamlessly upgrade between versions and also for multi-tenancy purposes. That’s a tremendously useful feature for our users, to be able to do a lot more testing before they move to the new version, and also to isolate their projects by different meshes within the single cluster. I feel with innovation in the single cluster space, we’re also innovating in multi-cluster. There’s also a lot of talk on mesh expansion and federated mesh. How are we going to connect two meshes together? Those are super interesting areas. I’m expecting the community to continue to be involved, and hopefully, play a little bit ahead in the service mesh space.

Wrap-up

Bryant: That’s a lovely insight into interesting roadmap items, I think folks can look forward to and perhaps get involved with as well. That’s splendid. The final couple of things, though, if folks want to follow you online, what’s the best way to do that, Twitter, LinkedIn?

Poddar: Both for me. It’s nrjpoddar.

Sun: They can reach me on twitter @linsun_unc. I’m reasonably active on Twitter. I got a notification when Neeraj liked something.

Bryant: There you go. Thanks both of you for your time there. I really enjoyed chatting to you.

Sun: Thanks so much for having us.

Poddar: Thank you. It was really great to be here.


MMS • Dylan Schiemann

Article originally posted on InfoQ. Visit InfoQ

The TypeScript team announced the release of TypeScript 3.9, which includes improvements in inference with Promise.all, compiler checking speed, the @ts-expect-error comment, and more in the final major version before TypeScript 4.0.

By Dylan Schiemann

Windows Terminal 1.0 Released with Support for Profiles, Multiple Panes, and Unicode Characters

MMS • Matt Campbell

Article originally posted on InfoQ. Visit InfoQ

Microsoft has moved Windows Terminal out of preview and announced the release of version 1.0. Windows Terminal includes multiple tabs, panes, customizable shortcuts, support for Unicode and UTF-8 characters, and custom themes and styles. The terminal can support PowerShell, cmd, WSL, and other command-line tools.

By Matt Campbell

GitLab 13.0 Released with AWS ECS for AutoDevops, Gitaly HA Cluster and Vulnerability Management

MMS • Hrishikesh Barua

Article originally posted on InfoQ. Visit InfoQ

GitLab announced their 13.0 release with AWS ECS support for AutoDevops pipelines, Gitaly highly available cluster support, vulnerability management and improvements in viewing Epics. It also adds features in security scanning, support for Terraform state storage, and a reduced memory footprint.

The Gitaly component in GitLab provides high-level RPC access to Git repositories to other GitLab components. Prior to 13.0, highly available (HA) storage was enabled by NFS (Network File System). Gitaly was initially built as a solution to get around the numerous issues with NFS. The 13.0 release adds HA to Gitaly clusters, obviating the need to use NFS.

GitLab’s AutoDevops feature enables users to set up a complete workflow with builds, vulnerability scanning, testing, deployment and monitoring using predefined templates. This release adds support for automatic deployment to AWS ECS clusters in AutoDevops. The previous releases integrated with Kubernetes clusters, with specific support for Google Kubernetes Engine.

Also on the automation tools side, 13.0 enables merge request (MR) reviewers to directly view the output of Terraform plan commands. Terraform ‘plan’ is a pre-run stage when using Terraform for infrastructure automation which shows the list of changes that will be made. The ability to view these changes directly in the MR aims to make it easier to inspect changes that will occur in the infrastructure without running the ‘terraform plan’ command manually. Users can also now store their Terraform state files in GitLab instead of using another backend.

The 13.0 release adds a way to view hierarchies of Epics visually. Epics are a way to track issues and features across projects and milestones within a group. This is available in the non-free versions of GitLab.

The new release adds to the list of security-related features. Static Application Security Testing (SAST) now supports the .NET Framework, and Dynamic Application Security Testing (DAST) now supports REST APIs. The release also introduces software vulnerability objects as first-class entities that other components like issues and wikis can link to and track using a unique URL. The entire commit history can now be scanned for secrets that might have been accidentally committed.

Other features in this release include versioning of snippets, a dark theme for the in-browser editor, and partial cloning for large objects. Puma has replaced Unicorn as the default web server from 13.0 onwards. According to the release notes, Puma reduces the overall memory consumption by about 40%.

The complete release notes can be viewed on the official announcement, and there are some points to note while upgrading. GitLab is available both as a SaaS solution and as a self-hosted software.

MMS • Bruno Couriol

Article originally posted on InfoQ. Visit InfoQ

The Babel team recently released Babel 7.10 with better tree-shaking support for React code. Babel 7.10 additionally supports checking the existence of specific private fields in objects and provides better ergonomics for the optional chaining ?. operator.

Babel 7.10 injects /*#__PURE__*/ annotations in some React function calls to mark them as safe to be tree-shaken away. This addresses the fact that, when building an application with React, React.forwardRef calls prevent unused components from being tree-shaken. Adding /*#__PURE__*/ annotations before many top-level React pure function calls allows such functions to be tree-shaken/dead-code-eliminated by terser and other minifiers.

The following code:

import React from 'react';

const SomeComponent = React.lazy(() => import('./SomeComponent'));

is thus transformed into this code:

import React from 'react';

const SomeComponent = /*#__PURE__*/React.lazy(() => import('./SomeComponent'));

Accessing private fields in an object where those fields are not defined will throw an exception. The new release of Babel also allows developers to statically check if a given object has a specific private field, while eschewing exception handling. An example with the syntax #private_field in object is as follows:

class Person {
  #name;

  hug(other) {
    if (#name in other) console.log(`${this.#name} ${other.#name}`);
    else console.log("It's not a person!");
  }
}
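To make the contrast with exception handling concrete, here is a minimal sketch that is not taken from the Babel release notes. The class shape and the method names `isPerson` and `isPersonLegacy` are assumptions chosen for illustration, and the snippet assumes a runtime (or a Babel setup) that understands the `#field in obj` syntax.

```javascript
// Illustrative sketch: isPerson and isPersonLegacy are hypothetical names.
class Person {
  #name;

  constructor(name) {
    this.#name = name;
  }

  // Static check with the `#field in obj` syntax: no exception handling.
  static isPerson(other) {
    return #name in other;
  }

  // The same check without the new syntax: reading a missing private
  // field throws a TypeError, so the access has to sit in a try/catch.
  static isPersonLegacy(other) {
    try {
      other.#name;
      return true;
    } catch {
      return false;
    }
  }
}

const alice = new Person("Alice");
console.log(Person.isPerson(alice));              // true
console.log(Person.isPerson({ name: "Alice" }));  // false
```

Both methods give the same answer for objects; the first simply states the intent directly instead of relying on a thrown error.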

Babel 7.10 aligns the semantics of some edge cases of optional chaining with those provided by TypeScript 3.9:

foo?.bar!.baz

The previous code will behave similarly to foo?.bar.baz in both TypeScript 3.9 and Babel 7.10. Babel 7.10 also supports mixing optional chaining ?. with private fields as in o?.Foo.#x.
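The short-circuiting behaviour behind this can be sketched in plain JavaScript. The `!` non-null assertion is TypeScript-only syntax, so the snippet below uses the equivalent `foo?.bar.baz` form; the function name `readBaz` is an assumption made for this example.

```javascript
// Illustrative sketch: readBaz is a hypothetical helper name.
function readBaz(foo) {
  // If foo is null or undefined, evaluation stops at `?.` and the whole
  // chain yields undefined; `.bar.baz` is never evaluated.
  return foo?.bar.baz;
}

console.log(readBaz(null));                  // undefined
console.log(readBaz({ bar: { baz: 42 } }));  // 42

// Only `foo` is guarded. A non-null foo without `bar` still throws,
// because `.baz` is then read from undefined.
let threw = false;
try {
  readBaz({});
} catch (error) {
  threw = error instanceof TypeError;
}
console.log(threw);                          // true
```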

The new Babel release adds parser support for the new Stage 1 Module Attributes proposal (import a from "./a.json" with type: "json"). @babel/preset-env now compiles ES2015-style Unicode escapes (\u{Babe1}) to the equivalent legacy syntax (\uDAAA\uDFE1). The Babel team also released in 7.10 the first experimental version of Babel’s new polyfills compatibility architecture.
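The relationship between an ES2015 code point escape and its legacy form is the standard UTF-16 surrogate pair calculation. The sketch below shows that arithmetic for the Babe1 example; the function name `toLegacyEscape` is an assumption, and this is not Babel's own implementation.

```javascript
// Illustrative sketch: toLegacyEscape is a hypothetical helper, not Babel code.
function toLegacyEscape(codePoint) {
  const hex = (n) => n.toString(16).toUpperCase().padStart(4, "0");
  if (codePoint <= 0xffff) {
    // Code points in the Basic Multilingual Plane fit in one \uXXXX escape.
    return "\\u" + hex(codePoint);
  }
  // Above U+FFFF: split the 20-bit offset into a high and a low surrogate.
  const offset = codePoint - 0x10000;
  const high = 0xd800 + (offset >> 10);
  const low = 0xdc00 + (offset & 0x3ff);
  return "\\u" + hex(high) + "\\u" + hex(low);
}

console.log(toLegacyEscape(0xbabe1));          // \uDAAA\uDFE1
console.log("\u{Babe1}" === "\uDAAA\uDFE1");   // true
```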

Babel additionally now has an official babel/rfcs RFC process for discussing changes that significantly impact Babel’s users. The new process has value in particular in case of:

- A new feature that creates a new API surface area, and would require a config option if introduced.

- A breaking change or the removal of features that already shipped.

- The introduction of new idiomatic usage or conventions, even if they do not include code changes to Babel itself.

The whole changelog can be found on GitHub and contains the exhaustive list of changes shipped in Babel 7.10. Babel is available under the MIT open source license. Feedback and questions are welcome and can be logged in a dedicated GitHub Discussions repository. Contributions are welcome via the Babel GitHub organization and should follow Babel’s contribution guidelines and code of conduct. Donations to support the project may also be made via Open Collective.

MMS • Aditya Kulkarni

Article originally posted on InfoQ. Visit InfoQ

Elastic, the search company, has released Elasticsearch 7.7.0. This release introduces asynchronous search, a password-protected keystore, performance improvements on time-sorted queries, two new aggregations, and the first release of packaging for the ARM (non-x86) platform.

Elasticsearch is a part of the Elastic (ELK) Stack – Elasticsearch, Kibana, Beats and Logstash. With asynchronous search, the latest version allows users to retrieve results as they become available, rather than waiting for a final response that arrives only when the query has entirely finished.

This version appreciably reduces the amount of heap memory used, leading to improved search and indexing performance. This also reduces costs by allowing much more data to be stored per node before hitting memory limits.

The keystore can now be password protected for additional security. Elasticsearch uses a custom on-disk keystore for passwords and SSL certificates. The values are obscured by a hash, without any user-specific secret. While this is an optional feature, there won’t be any new prompts if the already existing keystore has no password. Users must choose to password-protect the keystore in order to use this new feature.

With the release of Elasticsearch 7.7, search results will be faster when querying time-based indices. The entire shards will be filtered out if the shard doesn’t contain any documents with relevant timestamps.
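The idea of skipping whole shards can be illustrated with a small range-overlap check. This is a conceptual sketch only, not Elasticsearch's implementation: the shard objects and their `minTimestamp` and `maxTimestamp` fields are hypothetical, as is the function name `shardsToSearch`.

```javascript
// Conceptual sketch: the shard shape and field names are hypothetical.
// A shard is worth searching only if its timestamp range overlaps the query range.
function shardsToSearch(shards, from, to) {
  return shards.filter(
    (shard) => shard.maxTimestamp >= from && shard.minTimestamp <= to
  );
}

const shards = [
  { name: "logs-march", minTimestamp: 300, maxTimestamp: 399 },
  { name: "logs-april", minTimestamp: 400, maxTimestamp: 499 },
  { name: "logs-may", minTimestamp: 500, maxTimestamp: 599 },
];

// A query for timestamps 450..520 never needs to touch the March shard.
const selected = shardsToSearch(shards, 450, 520).map((shard) => shard.name);
console.log(selected); // [ 'logs-april', 'logs-may' ]
```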

Two new aggregations – boxplot and top_metrics – are introduced. For a given dataset, the boxplot aggregation calculates the min, max and median as well as the first and third quartiles. While resembling top_hits, the top_metrics aggregation selects a metric from the document with the largest or smallest sort value on a given, different field.
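What the two aggregations compute can be sketched over an in-memory array. This is an illustration of the statistics only: it uses exact quantiles with linear interpolation, which may differ from the values Elasticsearch returns, and the function names `boxplot` and `topMetric` and the document fields are assumptions made for the example.

```javascript
// Illustrative sketch: exact quantiles by linear interpolation between ranks.
function quantile(sorted, q) {
  const pos = (sorted.length - 1) * q;
  const lower = Math.floor(pos);
  const upper = Math.ceil(pos);
  return sorted[lower] + (sorted[upper] - sorted[lower]) * (pos - lower);
}

// Five-number summary: min, first quartile, median, third quartile, max.
function boxplot(values) {
  const sorted = [...values].sort((a, b) => a - b);
  return {
    min: sorted[0],
    q1: quantile(sorted, 0.25),
    q2: quantile(sorted, 0.5),
    q3: quantile(sorted, 0.75),
    max: sorted[sorted.length - 1],
  };
}

// Pick the metric from the document with the largest (or smallest)
// value of a different field, the sort field.
function topMetric(docs, metricField, sortField, order = "desc") {
  if (docs.length === 0) return undefined;
  const best = docs.reduce((a, b) => {
    const bWins =
      order === "desc" ? b[sortField] > a[sortField] : b[sortField] < a[sortField];
    return bWins ? b : a;
  });
  return best[metricField];
}

console.log(boxplot([5, 1, 4, 2, 3]));
// { min: 1, q1: 2, q2: 3, q3: 4, max: 5 }

const docs = [
  { timestamp: 1, latency: 10 },
  { timestamp: 5, latency: 20 },
  { timestamp: 3, latency: 30 },
];
console.log(topMetric(docs, "latency", "timestamp")); // 20
```

The second call returns the latency of the most recent document rather than the largest latency, which is the distinction the paragraph above draws.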

Painless, a secure scripting language introduced with Elasticsearch 5.0, has been well received by users. The new Painless Lab will be available with Elasticsearch 7.7 in the Dev Tools section of Kibana. Users will be able to easily test out and debug their Painless scripts using the Painless Lab.

Transforms are now generally available, which include support for cross-cluster search. This allows users to create their destination index on a separate cluster from the source indices.

The Elasticsearch 7.7 release now supports users working on non-x86 platforms, with packages available for AArch64. For those running on a Kubernetes cluster, official Helm charts for Elasticsearch are generally available.

Elasticsearch 7.7 is available for download and deployment via Elastic Service on Elastic Cloud. For additional information, there are release highlights with a complete list of updates.

Article: Well-being with Dr O’Sullivan, Part 2: Tech-Ing Care of Your Own Mental Health

MMS • Michelle OSullivan

Article originally posted on InfoQ. Visit InfoQ

Dr Michelle O’Sullivan, clinical psychologist, provides mental wellbeing advice for technology people, particularly in these difficult pandemic conditions where remote work is the norm. The article offers practical, researched tips to help you keep performing at your best.

By Michelle O’Sullivan

MMS • Helen Beal

Article originally posted on InfoQ. Visit InfoQ

Datawire, provider of the Kubernetes-native API gateway, Ambassador, has released a new version of Ambassador Edge Stack designed to accelerate the inner development loop. The new Service Preview capability uses Layer 7 (L7) control to allow multiple developers to code locally and preview changes remotely as if the changes were part of the live cluster.

Service Preview addresses deployment and isolation problems by enabling developers to debug their application on their local computer as if it were in their cluster. It uses the L7 routing of the Ambassador Edge Stack to enable users to send test traffic requests into the development cluster through the edge and have those requests routed to and from their local development machine. This enables developers to treat the local version of the microservice they are testing as if it is in the shared cluster, and test the interconnections to adjoining microservices and data stores. Additionally, developers on a team can send individually identifiable test traffic to test changes on the microservice they are working on without affecting the work of others.
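The routing idea described here, sending individually identifiable test traffic to a developer's machine while everything else stays in the cluster, can be sketched as a header-based routing decision. This is a conceptual illustration and not Ambassador Edge Stack's actual mechanism or configuration: the header name `x-service-preview`, the route table, and the function `chooseUpstream` are all hypothetical.

```javascript
// Conceptual sketch: header name, route table and function are hypothetical.
const PREVIEW_HEADER = "x-service-preview";

// Map each developer's token to the address of their local copy of the service.
const previewRoutes = new Map([
  ["dev-alice", "http://alice-laptop:8080"],
  ["dev-bob", "http://bob-laptop:8080"],
]);

// Requests carrying a known token go to that developer's machine;
// all other traffic continues to the shared cluster service.
function chooseUpstream(request, routes, clusterUpstream) {
  const token = request.headers[PREVIEW_HEADER];
  return (token && routes.get(token)) || clusterUpstream;
}

const cluster = "http://orders.default.svc:8080";
console.log(chooseUpstream({ headers: { "x-service-preview": "dev-alice" } }, previewRoutes, cluster));
// http://alice-laptop:8080
console.log(chooseUpstream({ headers: {} }, previewRoutes, cluster));
// http://orders.default.svc:8080
```

Because the decision depends only on a per-request identifier, two developers can test against the same shared cluster without seeing each other's traffic.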

By testing microservices locally, developers can code and iterate their solution while avoiding the time-consuming build, push, and deployment to a remote Kubernetes cluster with every cycle. By routing test versions of live traffic requests to the local copy of the microservice being tested and treating the microservice as part of the live cluster, developers can more efficiently test their microservice and all of its connections.

Ambassador Edge Stack’s Service Preview capability leverages parts of Telepresence, an open-source project created by Datawire and now a Cloud Native Computing Foundation (CNCF) sandbox project.

InfoQ asked Datawire CEO, Richard Li, some questions about the new release:

InfoQ: How can Ambassador Edge Stack support teams rearchitecting applications into microservices?

Richard Li: Moving from monolithic applications to microservices means that the overall system is now composed of more components. Each service often depends on many other services i.e. it has more dependencies. The process of testing modifications in each service, and the impact it will have on its dependencies, before deploying the service to production is quite challenging.

Microservices development therefore often introduces a centralised staging environment for testing. This has many benefits, but lengthens the inner development loop and does not allow for isolated viewing and testing of changes. To overcome this, most organizations clone their clusters in the cloud or create duplicate versions locally so that developers can independently test their microservices. When organisations clone in the cloud there is a risk that costs can increase exponentially.

Ambassador Edge Stack aims to reduce the costs by eliminating the overhead and maintenance needed to duplicate these environments.

InfoQ: How does a developer using Ambassador Edge Stack compare to a developer using feature branching?

Li: Use of the Ambassador Edge Stack and Service Preview functionality is somewhat agnostic to the development branching strategy, in that teams can use their preferred approach to building features, merging code, and releasing. Having said this, the Service Preview functionality does assist with the adoption of modern branching best practices, such as trunk-based development, as developers can work from the same branch but preview their changes in isolation within a production-like environment.

InfoQ: Why is it that containers optimise developer experience for inner loops and microservices outer loops?

Li: The outer development loop involves the coding and release of production-ready changes that impact end users. Moving from monolithic applications to microservices enables smaller teams to own services/product and independently release iterations on a more frequent basis. Rather than being saddled with orchestrated release cycles spanning weeks or months, microservice teams have the agility and speed they need to swiftly respond to the needs of their users.

The inner development loop is the iterative process of writing, building and debugging code that a single developer performs before sharing the code with their team. These inner development loops are completed much more frequently than outer development loops.

Containers, which have become the de facto unit of deployment in cloud systems, introduce a container image build, upload and deploy tax within each development loop. This is a larger problem for inner development loops because every developer runs these multiple times a day, in comparison with, say, once a day for an outer loop build and release.

InfoQ: Is there a case for developing in the cloud rather than on local machines?

Li: It is really a matter of both organisational preference and cost. Larger applications are almost always going to be in the cloud since they are too large to replicate down to someone’s desktop. That is exactly the problem we are helping to solve. We talk to people who ask for ’64 core’ desktops since they need to develop, and they can’t fit their app on their desktop. The ops team is forced to give everyone their own cloud copy which just drives the cloud bill up. Good for the cloud provider, bad for the development team.

Additionally, the overhead of maintaining the individual cloud environments introduces a headache for many teams, especially as the application and teams grow in complexity and size. With Service Preview, teams can keep their shared development cluster in the cloud, but make a local copy of the microservice for development, then route the identifiable test traffic to a local copy. This allows developers to test changes locally as if they are in the shared dev cluster without affecting the work of their peers.

Additionally, using Service Preview allows developers to use the tools and workflows that they prefer. Many debuggers and IDEs have been created for cloud use, but do not compare favourably to the tried-and-tested tools that can be installed locally on development machines.

To view a demonstration of Ambassador Edge Stack Service Preview, please visit here.

MMS • Bruno Couriol

Article originally posted on InfoQ. Visit InfoQ

The mdsvex npm package was recently entirely rewritten to allow Svelte developers to have Markdown content inside a Svelte component and also use Svelte components inside Markdown. Just like Gatsby uses MDX to enhance Markdown with React components, mdsvex allows developers to write Markdown reusing Svelte components, the final result itself being a Svelte component, which can for instance be used to generate a static web page with enhanced interactivity.

mdsvex’s author describes the package as follows:

mdsvex is a markdown preprocessor for Svelte components. Basically MDX for Svelte. This preprocessor allows you to use Svelte components in your markdown, or markdown in your Svelte components.

Developers can thus write code for a Svelte component as follows:

<script>

import { Chart } from "../components/Chart.svelte";

</script>

# Here’s a chart

The chart is rendered inside our MDsveX document.

<Chart />

The Svelte component code should be saved in a .svx file to be recognized as an mdsvex file and processed accordingly; that default extension can be reconfigured. This example shows how Markdown’s limitations in terms of interactivity can be bypassed with an interactive chart component.

mdsvex further enhances the static content by allowing a layout component to wrap the generated Svelte component, and by letting developers pass variables to the layout component through front matter (YAML or TOML syntax), as is customary in static site generators such as Hugo, Hexo or Eleventy. Several layouts can be specified according to the type of document thanks to a named layout functionality.

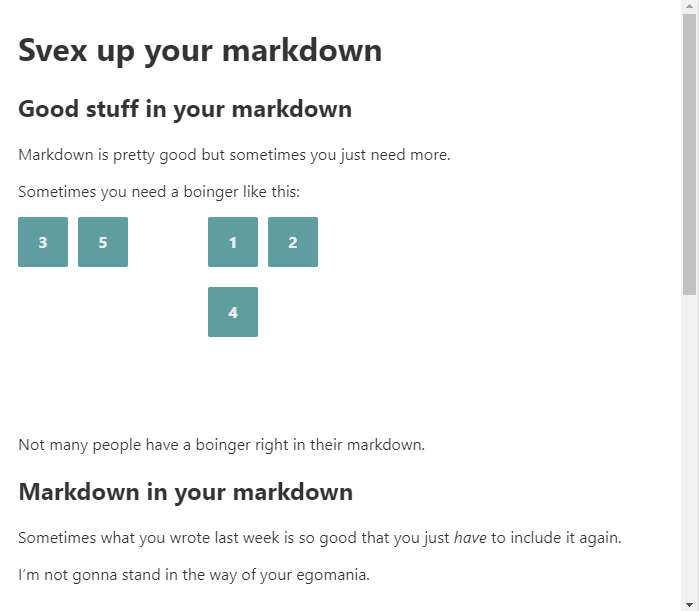

The documentation website provides an online playground with the following example showcasing most of the functionalities offered by mdsvex:

---

title: Svex up your markdown

count: 25

color: cadetblue

list: [1, 2, 3, 4, "boo"]

---

<script>

import Boinger from './Boinger.svelte';

import Section from './Section.svx';

import Count from './Count.svelte';

import Seriously from './Seriously.svelte';

let number = 45;

</script>

# { title }

## Good stuff in your markdown

Markdown is pretty good but sometimes you just need more.

Sometimes you need a boinger like this:

<Boinger color="{ color }"/>

Not many people have a boinger right in their markdown.

## Markdown in your markdown

Sometimes what you wrote last week is so good that you just *have* to include it again.

I'm not gonna stand in the way of your egomania.

>

><Section />

> <Count />

>

>— *Me, May 2019*

Yeah, that's right you can put widgets in markdown (`.svx` files or otherwise). You can put markdown in widgets too.

<Seriously>

### I wasn't joking

This is real life

</Seriously>

Sometimes you need your widgets **inlined** (like this:<Count count="{number}"/>) because why shouldn't you.

Obviously you have access to values defined in YAML (namespaced under `_metadata`) and anything defined in a fenced `js exec` block can be referenced directly.

Normal markdown stuff works too:

- Like

- This

- List

- Here

And *this* and **THIS**. And other stuff. You can't use `each` blocks. Don't try, it won't work.

This produces the following content in the playground:

To use mdsvex, developers need to add it as a preprocessor to a rollup or webpack config, and configure the Svelte plugin or loader so it handles .svx files:

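import svelte from "rollup-plugin-svelte";
import { mdsvex } from "mdsvex";

export default {
  ...boring_config_stuff,
  plugins: [
    svelte({
      // Treat both .svelte and .svx files as Svelte components
      extensions: [".svelte", ".svx"],
      // Run mdsvex over the source before the Svelte compiler sees it
      preprocess: mdsvex()
    })
  ]
};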

The MDsveX project welcomes feedback which may be provided by opening an issue in the GitHub repository.