Month: June 2019

MMS • RSS

Article originally posted on Data Science Central. Visit Data Science Central

AI bias is in the news – and it’s a hard problem to solve

But what about the other way round?

When AI engages with humans – how does AI know what humans really mean?

In other words, why is it hard for AI to detect human bias?

That’s because humans do not say what they really mean due to factors such as cognitive dissonance.

Cognitive dissonance refers to a situation involving conflicting attitudes, beliefs or behaviours. This produces a feeling of mental discomfort leading to an alteration in one of the attitudes, beliefs or behaviours to reduce the discomfort and restore balance. For example, when people smoke (behaviour) and they know that smoking causes cancer (cognition), they are in a state of cognitive dissonance.

From an AI/deep learning standpoint, we are trying to use deep learning to find hidden rules where none may exist.

In future, the same problem may arise when we try to explain our own bias to AI.

In a previous blog, AI and algorithmocracy – what the future will look like, I discussed why it would be so hard to explain religion to AI.

All religion is inherently faith based. An acceptance of faith implies a suspension of reason. From an AI perspective, religion hence does not ‘compute’. Religion is a human choice (bias). But if AI rejects that bias, then AI risks alienating vast swathes of humanity.

The next time we talk of AI bias – let’s spare a thought for the poor AI who has to work with the biggest ‘black box’ system – the human mind.

But not all is lost for AI

Affective computing (sometimes called artificial emotional intelligence, or emotion AI) is the study and development of systems and devices that can recognize, interpret, process, and simulate human affects (emotions). It is an interdisciplinary field spanning computer science, psychology, and cognitive science. The more modern branch of computer science originated with Rosalind Picard’s 1995 paper on affective computing. The difference between sentiment analysis and affective analysis is that the latter detects the different emotions instead of identifying only the polarity of the phrase. (Above adapted from Wikipedia.)

Within affective computing, facial emotion recognition is an important topic in the field of computer vision and artificial intelligence. Facial expressions are one of the main information channels for interpersonal communication. Verbal components only convey 1/3 of human communication – and hence nonverbal components such as facial emotions are important for recognition of emotion.

Facial emotion recognition is based on the fact that humans display subtle but noticeable changes in skin color, caused by blood flow within the face, in order to communicate how we’re feeling. Darwin was the first to suggest that facial expressions are universal and other studies have shown that the same applies to communication in primates.

Independent of AI, there has been work done in facial emotion recognition. Plutchik’s wheel of emotions illustrates the various relationships among the emotions. Ekman and Friesen pioneered the study of emotions and their relation to facial expressions.

AI’s ability to detect emotion from facial expressions better than humans lies in the understanding of microexpressions. Macroexpressions last between 0.5 and 4 seconds (and hence are easy to see). In contrast, microexpressions last as little as 1/30 of a second. AI is better at detecting microexpressions than humans. Haggard & Isaacs (1966) verified the existence of microexpressions while scanning films of psychotherapy sessions in slow motion. Microexpressions occurred when individuals attempted to be deceitful about their emotional expressions.

Thus, while AI lacks the ability to detect human bias due to cognitive dissonance and other aspects, AI does have the ability to detect microexpressions. Over time, this ability could overcome the limitations of AI in understanding aspects of human behaviour such as cognitive dissonance.

Comments welcome

Image source: Emotion research labs


Article originally posted on InfoQ. Visit InfoQ

Microsoft announced that Windows Subsystem for Linux (WSL) 2 is now available through the Windows Insiders program. WSL allows developers to run a Linux environment, including most command line tools and utilities, directly within Windows. WSL 2 presents a new architecture that aims to increase file system performance and provide full system call compatibility.

Available in build 18917 in the Insider Fast ring, Windows will now be shipping with a full Linux kernel. This change allows WSL 2 to run inside a VM and provide full access to Linux system calls. Microsoft will be building the Linux kernel in house from the latest stable branch based on the source code available from kernel.org. The initial builds will ship with version 4.19 of the kernel. The kernel will be specifically tuned for WSL 2 and will be fully open sourced with the full configuration available on GitHub. This change allows for faster turnaround on updating the kernel when new versions become available.

WSL 2 represents a change in architecture from WSL 1 by running inside a virtual machine; however, the development team indicates that the benefits of WSL 1 will still exist. As Craig Loewen, program manager with the Windows Developer Platform, notes:

WSL 2 will NOT be a traditional VM experience. When you think of a VM, you probably think of something that is slow to boot up, exists in a very isolated environment, consumes lots of computer resources and requires your time to manage it. WSL 2 does not have these attributes. It will still give the remarkable benefits of WSL 1: High levels of integration between Windows and Linux, extremely fast boot times, small resource footprint, and best of all will require no VM configuration or management.

Within WSL 1, a translation layer was used to interpret most Linux system calls and allow them to run within the Windows NT kernel. This meant that some Linux applications were not available due to the challenge in implementing the system calls. With the inclusion of the Linux kernel with WSL 2 and full system call compatibility, a number of new applications are now available for use within WSL, including the Linux version of Docker and FUSE.

Benchmarking performed by the development team has shown noticeable improvements with file intensive operations such as git clone, npm install, or apt update. According to Loewen, “Initial tests that we’ve run have WSL 2 running up to 20x faster compared to WSL 1 when unpacking a zipped tarball, and around 2-5x faster when using git clone, npm install and cmake on various projects.”

The team attempted to maintain the user experience of WSL 1 with WSL 2; however, there were some key changes. The largest change is that files that will be frequently accessed should now be put inside the Linux root file system to take advantage of new file performance benefits. This is a change of direction from WSL 1, where it was recommended that these files be put into the C drive. With this change, it is now possible for Windows apps to access the Linux root file system. For example, running explorer.exe . in the home directory of the Linux distro will now open File Explorer at that location.

Since WSL 2 now runs in a VM, you need to use the VM’s IP address to access Linux networking applications from Windows. As well, the Windows Host IP will be needed to access Windows networking applications from within Linux. The team has plans to include the ability to access network applications via localhost in the near future.

Linux distros can be run using either WSL 1 or WSL 2 and can be upgraded and downgraded between the two versions. New commands were added to facilitate setting the version: wsl --set-version <distro> <version>; this command allows you to convert an existing distro to use either the WSL 2 or the WSL 1 architecture (by specifying 1 or 2 for the version). The command wsl --set-default-version <version> will allow you to specify the initial version (either WSL 1 or 2) for new distributions.

WSL 2 is available starting in build 18917 in the Windows Insiders program. The team has provided a document outlining the additional user experience changes between WSL 1 and WSL 2. Users who wish to raise an issue or have feedback for the development team are invited to file an issue on GitHub. Some of the team members are also available via Twitter to help with general questions.


Article originally posted on InfoQ. Visit InfoQ

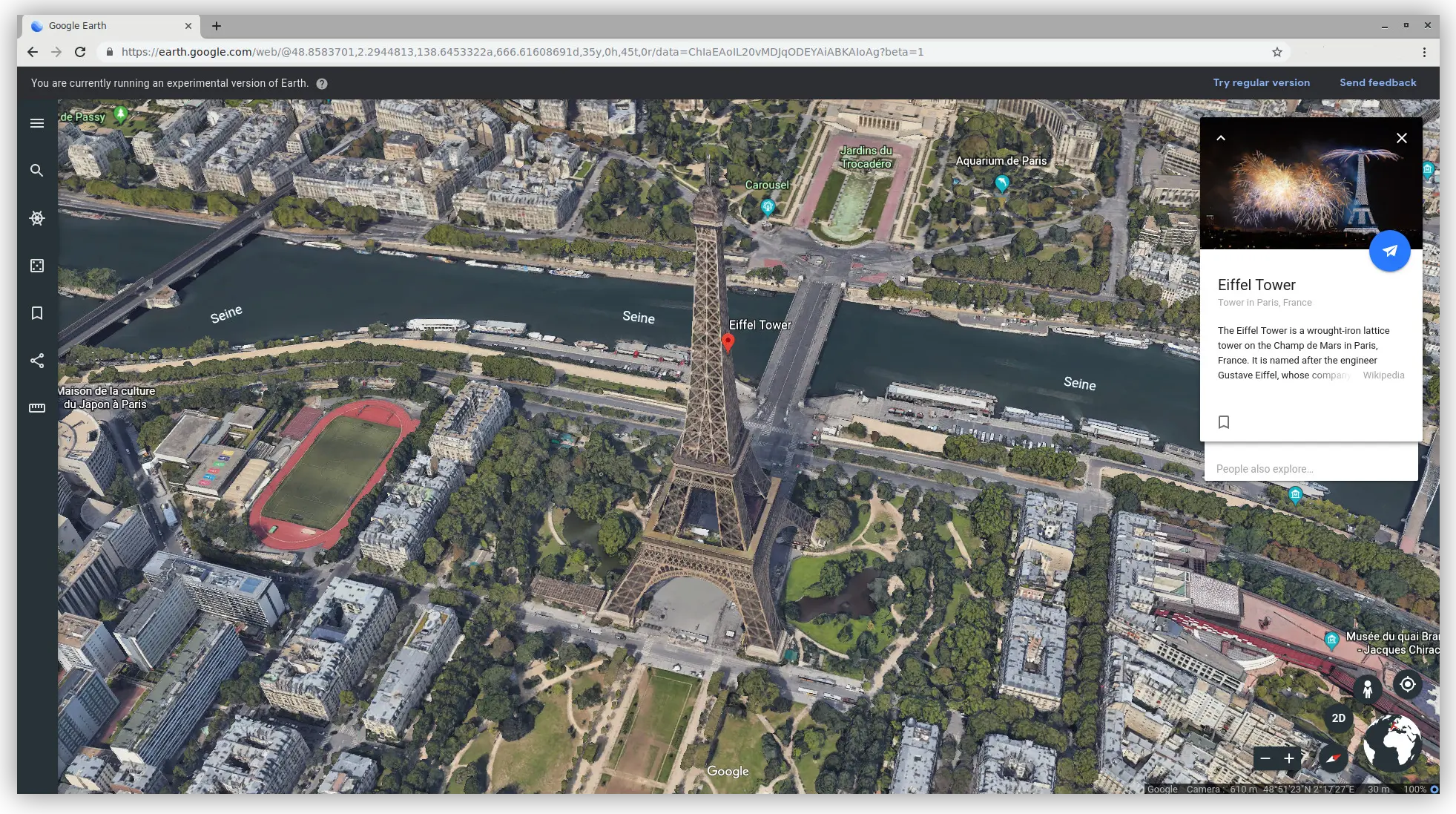

The Google Earth team recently released a beta preview of a WebAssembly port of Google Earth. The new port runs in Chrome and other Chromium-based browsers, including Edge (Canary version) and Opera, as well as Firefox. The port thus brings cross-browser support to the existing Earth For Web version, which uses the native C++ codebase and Chrome’s Native Client (NaCl) technology. Differences in multi-threading support between browsers lead to varying performance.

Google Earth was released 14 years ago and allowed users to explore the earth from the comfort of their homes. This original version of Google Earth was released as a native C++-based application intended for desktop install because rendering the whole world in real time required advanced technologies that weren’t available in the browser. Google Earth was subsequently introduced for Android and iOS smartphones, leveraging the existing C++ codebase through technologies such as NDK and Objective-C++. In 2017, Google Earth was released for the Chrome browser, using Google’s Native Client (NaCl) to compile the C++ code and run it in the browser.

Thomas Nattestad, Product Manager for WebAssembly, V8 and Web Capabilities, explains the drivers behind the WebAssembly port of Google Earth:

As the Web progressed, we wanted Earth to be available on the platform so it could reach as many people as possible and let them experience the entire world at their fingertips. Web apps offer a better user experience because they’re linkable, (…) they’re secure, since users aren’t at risk of viruses that can come with software downloads; and they’re composable, meaning we can embed them in other parts of the web.

(…) Using WebAssembly, we see more possibilities not just for making apps more accessible across browsers, but smoothing out the online experience, as we’ve seen with Google Earth.

With the WebAssembly port, Google Earth is now available in Chrome, Edge (Canary channel) and Opera, as well as Firefox. It is not available however in Safari or the current public version of Edge, as those browsers lack full support for WebGL2.

Further differences between browsers impact Google Earth performance. A key factor is support for multi-threading. Jordon Mears, Tech Lead Manager for Google Earth, explains:

Think of Earth like a huge 3D video game of the real world. As such, we’re constantly streaming data to the browser, decompressing it and making it ready for rendering to the screen. Being able to do this work on a background thread has shown a clear improvement in the performance of Earth in the browser.

Multi-threaded WebAssembly is enabled by a feature called SharedArrayBuffer, which was pulled from browsers due to the Spectre and Meltdown security vulnerabilities. Chrome introduced Site Isolation to remediate the vulnerabilities and re-enabled SharedArrayBuffer for desktop. Other browsers, such as Firefox, still disable SharedArrayBuffer, with a view to bringing back support for multi-threading in future versions. In the meantime, Earth runs single-threaded in those browsers with possibly lower performance.

Future improvements in performance are linked to new features expected to come to WebAssembly, including SIMD support and dynamic linking.


Article originally posted on InfoQ. Visit InfoQ

About the conference

Code Mesh LDN, the Alternative Programming Conference, focuses on promoting useful non-mainstream technologies to the software industry. The underlying theme is “the right tool for the job”, as opposed to automatically choosing the tool at hand.

Bringing Blockchain Developer Tools to the Enterprise, Truffle and Microsoft Announce Partnership


Article originally posted on InfoQ. Visit InfoQ

In a recent blogpost, Microsoft and Truffle announced a partnership to bring blockchain developer tools to the Microsoft Azure ecosystem. The investments the organizations are making include local blockchain nodes for testing, with test data, smart contract authoring, continuous deployment, debugging and testing.

This partnership builds upon a previous engagement between the two companies in April 2018 when they co-authored guidance for using Truffle in consortium DevOps scenarios and included tooling in Microsoft’s Azure Blockchain Development Kit for Ethereum.

Truffle, a spin-off of Brooklyn-based blockchain company ConsenSys, has developed an open source blockchain development environment and testing framework for the Ethereum blockchain. Developers can use Truffle tools to compile, link, test and manage binaries. The tools have over 3 million downloads and are used to expedite smart contract and front-end app development for distributed ledger applications.

Microsoft continues to make investments in its blockchain tools, including Visual Studio Code extensions, connectors for Azure Logic Apps/Microsoft Flow and Azure DevOps pipelines.

Both Microsoft and Truffle acknowledged the need for better blockchain tooling in the enterprise, and this partnership seeks to address those gaps. Tim Coulter, Truffle CEO and founder, explains:

Early on it was very clear Ethereum developers needed professional, modern development tools. With enterprise’s growing adoption of blockchain technology, Truffle recognizes the importance of this opportunity to bring our blockchain-native experience to enterprise developers across the world through our partnership with Microsoft.

As a result of this partnership, Truffle developers can expect deep integration with Azure services like GitHub and the integration of Truffle Teams, which allows for the management and monitoring of blockchain applications, into Azure offerings. In addition, Azure developers can expect rich experiences in the following areas:

- Local nodes that can be used for development and testing.

- Node Test Data that has been forked from an existing blockchain instance so that developers can access a local copy of a blockchain network.

- Smart contract deployments using a repeatable scripted approach to local, private and public chain environments.

- Test Execution using Mocha test framework that can be executed locally, inside an Azure DevOps pipeline or through an interactive console.

- Debug smart contracts using tooling that is on par with .NET and Java developers’ expectations.

This partnership increases the strength of Microsoft’s blockchain ecosystem. Marc Mercuri, principal program manager, Azure Blockchain Engineering, explains:

As partners and as end users, we are big fans of Truffle’s technology and the people behind it. Their customer obsession and open orientation has made them the trusted choice for blockchain developers, and we are eager to see what you will build with Truffle on Azure.

For more information on how Truffle integrates with Microsoft’s blockchain offerings, please visit the Azure Blockchain team’s BlockTalk channel where they highlight the Truffle extension for Visual Studio Code.


Article originally posted on InfoQ. Visit InfoQ

Recently Microsoft open-sourced Try .NET, an interactive documentation generator for .NET Core.

Try .NET is a .NET Core Global Tool that allows the creation of interactive documentation for C#. Similarly to equivalent tools targeting different programming languages (such as Jupyter), it produces documentation that can contain both explanatory text and live, runnable code.

Maria Naggaga, senior program manager at Microsoft, explained the motivation behind the development:

Across multiple languages, developer ecosystems have been providing their communities with interactive documentation where users can read the docs, run code and, edit it all in one place. […] It was essential for us to provide interactive documentation both online and offline. For our offline experience, it was crucial for us to create an experience that plugged into our content writers’ current workflow.

All documentation is composed of markdown files containing a set of instructions and code snippets. In addition, Try .NET uses an extended markdown notation for code blocks that allows referencing a specific region within a source code file. This way, instead of writing down a code sample inside a code block, the writer can simply reference a C# region defined in another code file.

Using the extended markdown notation, a C# code block that would normally be written as:

```cs
Console.WriteLine("Hello World!");
```

can simply be written as:

```cs --region helloworld --source-file ./Snippets/Program.cs
```

Where --region refers to the C# code #region named helloworld in the file Program.cs. More details and examples on the extended markdown notation for code blocks can be found in the tool’s GitHub repository.

Another interesting feature of the tool is that it can be used in conjunction with .NET Core templates. Currently, there is only one public template available for Try .NET, created to demonstrate the markdown extension notation. However, it is possible to use the existing functionalities of .NET Core to create local custom templates for Try .NET.

Scott Hanselman, partner program manager at Microsoft, also mentioned in his personal blog that Try .NET could be used for different purposes, such as creating interactive workshops or online books:

This is not just a gentle on-ramp that teaches .NET without yet installing Visual Studio, but it also is a toolkit for you to light up your own Markdown.

The development initiative behind Try .NET started two years ago, with the release of the interactive feature on docs.microsoft.com. Interactive .NET documents also have IDE-like features such as IntelliSense (for code-completion), which provides a richer user experience. Online examples of documents generated by Try .NET can be found here and here.


Article originally posted on InfoQ. Visit InfoQ

In a series of blog posts, Mathias Verraes describes patterns in distributed systems that he has encountered in his work and has found helpful. His goal is to identify, name and document patterns together with the context in which they can be useful, and he emphasizes that patterns often become anti-patterns when used in the wrong context.

Verraes, who works as a consultant and is the founder of DDD Europe, currently describes 16 patterns in three areas: patterns for decoupling, general messaging patterns and event sourcing patterns. For each pattern he describes the problem and the solution, sometimes with an example or implementation.

Completeness Guarantee is a decoupling pattern whose goal is to define the set of domain events sent from a producer so that a consumer can reproduce the state of the producer. Often, events are created or updated in response to consumers’ needs, and after a while it can be hard to understand the purpose of each event and whether a consumer uses it at all. To achieve completeness, an event must be published whenever state changes in the producer, and ideally the event contains only the changed attributes, nothing more. This way it becomes clear what each event means and what attributes it carries.
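
The idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not code from Verraes' posts: the `CustomerChanged` event, the `Producer` class and the `rebuild` function are hypothetical names. The producer publishes an event for every state change, each event carries only the changed attributes, and a consumer can then fold the stream back into the same state.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass(frozen=True)
class CustomerChanged:
    """Hypothetical domain event carrying only the attributes that changed."""
    customer_id: str
    changes: dict[str, Any]


@dataclass
class Producer:
    """Owns the customer state and publishes an event on every state change."""
    state: dict[str, dict[str, Any]] = field(default_factory=dict)
    published: list[CustomerChanged] = field(default_factory=list)

    def update(self, customer_id: str, **attrs: Any) -> None:
        current = self.state.setdefault(customer_id, {})
        # Keep only the attributes whose value actually differs.
        changes = {k: v for k, v in attrs.items() if current.get(k) != v}
        if not changes:
            return  # nothing changed, so nothing is published
        current.update(changes)
        self.published.append(CustomerChanged(customer_id, changes))


def rebuild(events: list[CustomerChanged]) -> dict[str, dict[str, Any]]:
    """Consumer side: fold the event stream back into the producer's state."""
    state: dict[str, dict[str, Any]] = {}
    for event in events:
        state.setdefault(event.customer_id, {}).update(event.changes)
    return state
```

If every state change goes through `update`, comparing `rebuild(producer.published)` with `producer.state` is a simple way to check that the event set is complete.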

Passage of Time Event is a decoupling pattern that replaces a scheduler calling a service’s API at some rate with a scheduler that emits generic domain events, like DayHasPassed or MonthHasPassed. Interested services can then listen to these events and internally handle any actions needed. For Verraes this is a very reactive approach. Instead of sending a command and expecting a response, a scheduler can now just emit events about time, without caring whether anyone listens to them.
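
A minimal sketch of this pattern, assuming a tiny in-process publish/subscribe bus: `EventBus`, `InvoiceReminders` and `tick` are hypothetical names introduced for illustration, and only the `DayHasPassed` event name comes from the pattern description.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from typing import Callable


@dataclass(frozen=True)
class DayHasPassed:
    day: date


class EventBus:
    """Tiny in-process publish/subscribe bus, for illustration only."""

    def __init__(self) -> None:
        self._handlers: dict[type, list[Callable]] = defaultdict(list)

    def subscribe(self, event_type: type, handler: Callable) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event: object) -> None:
        # Publishing with no subscribers is fine: the loop simply does nothing.
        for handler in self._handlers[type(event)]:
            handler(event)


class InvoiceReminders:
    """Hypothetical service that decides for itself what a passed day means."""

    def __init__(self, due_dates: dict[str, date]) -> None:
        self.due_dates = due_dates
        self.reminded: list[str] = []

    def on_day_passed(self, event: DayHasPassed) -> None:
        for invoice_id, due in self.due_dates.items():
            if due <= event.day and invoice_id not in self.reminded:
                self.reminded.append(invoice_id)


def tick(bus: EventBus, day: date) -> None:
    """The scheduler only announces that time has passed; it calls no service API."""
    bus.publish(DayHasPassed(day))
```

The scheduler knows nothing about invoices. Adding another time-dependent service means adding a subscriber, not changing the scheduler.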

Explicit Public Events is a pattern for separating events into private and public events. Often a service, especially when using event sourcing, should not publish all events to the outside world. One reason is that the external API for the service becomes tightly coupled to the internal structure, and an internal change may require a change both in the API and in other services. This pattern can be implemented by making all events private by default and explicitly marking the events that are public. Private and public events can then be published using separate messaging channels.
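
One possible way to express "private by default, public by explicit marking" is a marker decorator, as in the sketch below. The decorator, the event classes and the two in-memory lists standing in for messaging channels are all assumptions made for illustration.

```python
from dataclasses import dataclass


def public_event(cls):
    """Marker decorator: events stay private unless explicitly marked public."""
    cls.is_public = True
    return cls


@dataclass(frozen=True)
class Event:
    is_public = False  # class-level default, deliberately not a dataclass field


@dataclass(frozen=True)
class CartItemRepriced(Event):  # unmarked, so it stays private
    sku: str


@public_event
@dataclass(frozen=True)
class OrderWasPlaced(Event):  # explicitly part of the external contract
    order_id: str


class Publisher:
    """Routes events to one of two channels (plain lists in this sketch)."""

    def __init__(self) -> None:
        self.private_channel: list[Event] = []
        self.public_channel: list[Event] = []

    def publish(self, event: Event) -> None:
        channel = self.public_channel if event.is_public else self.private_channel
        channel.append(event)
```

Because the default is private, forgetting the marker can only under-expose an event, never leak an internal one.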

Segregated Event Layers is a way to separate private and public events even more. By creating adapters that listen to internal events and emit a stream of new public events, internal events become strictly private. Verraes notes that this is an implementation pattern for building an anti-corruption layer, where the new event stream in practice becomes a new bounded context with its own event types and names.
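
The adapter can be sketched as a translation function over the internal stream. The internal event `PaymentRowUpdated` and its fields are hypothetical; `InvoiceWasPaid` follows the naming style recommended later in the series.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, Optional


@dataclass(frozen=True)
class PaymentRowUpdated:
    """Hypothetical internal event, shaped by the service's storage model."""
    invoice_id: str
    status: str
    ledger_row: int


@dataclass(frozen=True)
class InvoiceWasPaid:
    """Public event with its own name and only the data outsiders need."""
    invoice_id: str


def to_public(event: object) -> Optional[InvoiceWasPaid]:
    """Translate one internal event, or return None if it has no public meaning."""
    if isinstance(event, PaymentRowUpdated) and event.status == "paid":
        return InvoiceWasPaid(event.invoice_id)
    return None


def public_stream(internal_events: Iterable[object]) -> Iterator[InvoiceWasPaid]:
    """Adapter: consumes the internal stream and emits a new public stream."""
    for event in internal_events:
        translated = to_public(event)
        if translated is not None:
            yield translated
```

Internal details such as `ledger_row` never cross the boundary, so the internal event can change shape without affecting consumers of the public stream.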

One pattern that can be used when an event has attributes that should be visible only to some consumers is Forgettable Payloads. Sensitive information in an event is replaced with a URL pointing to a storage with restricted access that contains the sensitive information. A similar pattern is Crypto-Shredding, where sensitive information is encrypted with a different key for each resource. By deleting the key, the information cannot be accessed anymore.
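
A rough sketch of Forgettable Payloads follows, with an in-memory dictionary standing in for the access-restricted storage. `PayloadStore`, the `payloads.example` URL scheme and the event class are hypothetical, and access control is left out entirely. Crypto-Shredding would follow the same shape, with deleting a per-resource key taking the place of deleting the stored payload.

```python
import uuid
from dataclasses import dataclass
from typing import Optional


class PayloadStore:
    """In-memory stand-in for an access-restricted storage service."""

    def __init__(self) -> None:
        self._items: dict[str, dict] = {}

    def put(self, payload: dict) -> str:
        # Hypothetical URL scheme; a real store would also enforce access control.
        ref = f"https://payloads.example/{uuid.uuid4()}"
        self._items[ref] = payload
        return ref

    def get(self, ref: str) -> Optional[dict]:
        return self._items.get(ref)

    def forget(self, ref: str) -> None:
        """Delete the payload; events that reference it stay untouched."""
        self._items.pop(ref, None)


@dataclass(frozen=True)
class CustomerWasRegistered:
    customer_id: str
    personal_data_url: str  # a reference only; the event holds no personal data
```

The event log can remain immutable, since forgetting the payload only turns the reference inside the event into a dangling link.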

Decision Tracking is used to store the outcome of decisions when event sourcing is used. If a rule changes, for example the limits that determine when it applies, and the events are replayed without tracking the rule changes, the outcome may be different. One solution is to store decisions as events together with the events that caused the decision. Verraes notes that this can also be used to mitigate the consequences of a bug, since it’s possible to replay all events and compare the outcome with data from the decision event.
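
The sketch below illustrates the idea with a hypothetical discount rule whose threshold changes over time. The event names and the `decide_discount` and `replay` functions are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PurchaseWasMade:
    order_id: str
    total: int


@dataclass(frozen=True)
class DiscountWasDecided:
    """The decision itself, stored as an event next to its cause."""
    order_id: str
    granted: bool


def decide_discount(purchase: PurchaseWasMade, threshold: int) -> DiscountWasDecided:
    """Apply the rule as it stands at decision time (threshold may change later)."""
    return DiscountWasDecided(purchase.order_id, purchase.total >= threshold)


def replay(events: list) -> dict[str, bool]:
    """Rebuild discounts from recorded decisions, never from the current rule."""
    return {
        e.order_id: e.granted
        for e in events
        if isinstance(e, DiscountWasDecided)
    }
```

Re-running `decide_discount` with today's threshold and comparing the result with the recorded decision is also how the bug-mitigation use mentioned above could work.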

Natural Language Message Names is a pattern recommending that verbs be used in message names to make them more expressive. Using a natural language and embedding it in code and artefacts is a core concept in Domain-Driven Design (DDD). Verraes notes that domain experts don’t use terms like Payment event or Invoice paid; they say the invoice was paid. For events he recommends using names like CustomerWasInvoiced and InvoiceWasPaid. For commands he prefers InvoiceCustomer and FulfilOrder.

Verraes concludes by noting that his series of patterns is a start, and he asks about other patterns and experiences other developers have found or had. His contact information can be found on his website.

Chris Richardson has created a pattern language for microservices, with patterns about deployment, communication styles, data management and other areas.


Article originally posted on Data Science Central. Visit Data Science Central

Monday newsletter published by Data Science Central. Previous editions can be found here. The contribution flagged with a + is our selection for the picture of the week. To subscribe, follow this link.

Featured Resources and Technical Contributions

- Online Encyclopedia of Statistical Science (Free)

- Model evaluation techniques in one picture +

- Bayesian Machine Learning

- A Comprehensive Guide to Data Science With Python

- Recognizing Animals in Photos – Building an AI model for Object Recognition

- Running Peta-Scale Spark Jobs on Object Storage Using S3 Select

- 29 Statistical Concepts Explained in Simple English – Part 16

- Writing/Reading Large R dataframes/datatables

- Power Regression: New Least Square Method

- Question: Spatio-temporal clustering ?

- How to Build an NLP Engine that Won’t Screw Up

- How To build an app like Uber

Featured Articles

- The Importance of Community in Data Science

- Design Thinking: Is it a Floor Wax or a Dessert Topping?

- Big Data Transformation in the age of IOT

- Digital Transformation and the AI Advantage

- The Catch 22 problem holding back #AI application adoption …

- Four Powerful People Skills For Data Scientists

- What Business Really Wants from AI – The Future of AI Platforms

- Testing traditional Software vs. ML application

- How to Monetize the Sports Digital Transformation

- Top 8 Data Science Use Cases in Construction

- Face Recognition is the future Revolution

- How Data Masking is Driving Power to Organizations?

- What is NLP & How Does it Benefit a Business?

Picture of the Week

Source: article flagged with a +



Article originally posted on InfoQ. Visit InfoQ

At QCon New York 2019, Kate Sills, a software engineer at Agoric, discussed some of the security challenges in building composable smart contract components with JavaScript. Two emerging TC39 JavaScript proposals, realms and Secure ECMAScript (SES), were presented as solutions to security risks with the npm installation process.

Today when running the npm install command, a module and all of its dependencies have access to many native operations including file system and network access. The main security risk is that a rogue dependency of an otherwise trusted module could get compromised and replaced with logic intended to access private information on a local machine such as a cryptocurrency wallet, and then upload that information to a remote server via an HTTP connection.

TC39, the technical committee responsible for future versions of the JavaScript standard, is considering two proposals. The first, realms, currently in stage 2 of the approval process, makes it easy to isolate source code, restricting access to compartments in which code lives. The realms proposal solves the problem of limiting access to a sandbox, by restricting access to self, fetch, and other APIs outside the sandbox. Realms have many potential use cases beyond security isolation, including plugins, in-browser code editors, server-side rendering, testing/mocking, and in-browser transpilation. A realms shim is available to leverage the current draft proposal of realms today.

Another potential attack vector is prototype poisoning, where the prototype of an object gets changed unexpectedly. The proposal to fight this attack vector is Secure ECMAScript (SES), currently in stage 1 of the TC39 approval process, which combines Realms with transitive freezing. npm install ses provides access to the SES shim.

Realms and SES successfully lock down JavaScript, but many applications do need access to APIs like the file system and network. During her talk, Sills highlighted the principle of least authority: grant only what is needed and no more.

Sills provided an example of a command line todo application which relies on a common dependency in the JavaScript ecosystem to modify the styling of the console. This module requires access to the operating system object, but only to work around a color glitch found on specific operating systems. With SES, it is possible to attenuate access so that the dependency can reach only the capabilities on the os object that are necessary to fix the color glitch.
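
Attenuation itself is independent of SES and can be illustrated in any language. The Python sketch below is not SES and is not from the talk: it only shows the shape of handing a dependency a narrowed view instead of a whole module. `AttenuatedOS` and `pick_color_mode` are hypothetical names, the Windows check is an invented stand-in for the color workaround, and plain Python cannot enforce this boundary the way an SES environment is meant to.

```python
import platform


class AttenuatedOS:
    """Exposes a single read-only capability instead of the whole os module."""

    __slots__ = ()  # no instance attributes can be attached later

    @staticmethod
    def system_name() -> str:
        return platform.system()


def pick_color_mode(os_view) -> str:
    """Hypothetical dependency code: all it needs is the OS name."""
    # Assumption for illustration: one platform needs a reduced color palette.
    return "basic" if os_view.system_name() == "Windows" else "full"
```

The caller passes `AttenuatedOS()` where it might otherwise pass the full `os` module, so the dependency is never handed file deletion or process control in the first place.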

During the presentation, Sills also highlighted Moddable XS, which has full support for ES2018 as well as support for SES, making it possible to allow users to install applications on IoT devices safely. Other examples of current SES implementations include an Ethereum wallet with all dependencies in an SES environment and the Salesforce Locker Service.

SES and Realms have a promising future, but there are currently some limitations, performance challenges, and developer ergonomics issues (e.g., modules today need to get converted to strings) to solve before these proposals become an official part of the JavaScript language.