Month: October 2019

MMS • Filippo Valsorda

Article originally posted on InfoQ. Visit InfoQ

Transcript

Valsorda: Today I’m here to tell you about the Go cryptography libraries. I’m Filippo [Valsorda] and my main job is that I’m the security coordinator for the Go project. I usually start with that because even if most of my time is spent maintaining the crypto libraries, I like to point out that the crypto libraries are just a way to guarantee the security of the Go ecosystem. The job is making sure that Go applications are secured and that job includes, for example, responding to reports to security at golang.org. There’s a whole group behind that email but I’m usually the person that will do triage.

Cryptography Is Hard

As for cryptography, you might already know that it’s hard – capital H Hard. These can mean different things depending on who you’re talking to because cryptography itself can mean a few different things. Today, we’re not going to talk about cryptography design, we’re not talking about what academics do when they design the next AES or the next SHA and they have to think about the next hash function and they have to think about things like differential task or anything like that. We’re going to talk about what people like us do, we’re going to talk about cryptography engineering.

Cryptography engineering is, of course, more fraught than other kinds of engineering. That makes it even more important to always keep an eye on complexity when developing. Cryptography comes with complexity in itself because the subject matter is complex, you are mixing math with dangerous security requirements. What I want to tell you with the talk today is that cryptography engineering, like most engineering though, is an exercise in managing that complexity.

Cryptography engineering, indeed, is special mostly because a single mistake can make your entire system useless. Cryptography is a tool to achieve security but if you get anything wrong in the cryptography implementation, that’s going to not deliver the security it was meant to deliver, and it will become useless. This is a bit like saying that if you get the lighting wrong on a bridge, it’s going to collapse.

When we have that level of assurance requirements in software engineering, we usually focused on testing as a way to gain confidence in the implementations. Cryptography engineering is particularly tricky there too because you can test everything that your users will do, you can test everything that your application will do, you can test everything that any reasonable member of your ecosystem will do, and still not deliver any security with your cryptographic implementation. The attacker will be more than happy to figure out what things you didn’t test because nobody in their right mind would send those inputs and proceed to use those to break whatever security property you are trying to deliver.

The most important tool in cryptography engineering is managing complexity and this complexity can be above your API surface where you’re managing the complexity in your APIs, in your documentation, in what you provide to users so that they use your libraries safely and correctly, and below the API surface where you don’t want to implement things that will bring in so much complexity that you can effectively guarantee the security of what you’re implementing.

These two have a little different dynamics and the failure mode in both is different. If you fail in managing complexity above your API surface, what you get is that users will roll their own crypto.

We’ve all heard “Don’t roll your own crypto” as gospel and while it’s a right advice, I often feel like cryptographers like us use it to gain a moral standing over developers that are just trying to roll their own crypto, not because they want to, but because what we provide wasn’t easy enough. I don’t believe any developer starts the day thinking, “It would be really nice to figure out my own composition of ciphers today.” They open maybe the docs of whatever library they have available, decide that this is all way too much, that it’s not clear how to use this. Then they search on Stack Overflow, and say, “It seems easy enough to bang together AES and HMAC, it will work out.”

Yes, you shouldn’t roll your own crypto but in the first place, you shouldn’t feel the need to. This is about cryptography library maintainers managing the complexity we expose above the API surface. Below the API surface, the same refrain, “Don’t roll your own crypto,” sometimes lead to, “But you’re not supposed to understand cryptography code. If you read my code and it’s all too complex to understand, it’s just because you’re not a cryptographer, ok?” That’s not how it works. Cryptography code can be readable. The cryptography code actually should be more readable than any other code base exactly because the complexity is intrinsic in what we are implementing, we need to strive even more to manage our complexity below the API surface. The job is harder, so we need to do a better job at making it manageable.

The Go Cryptography Libraries

From this understanding of cryptography engineering is how I maintain the Go cryptography libraries. For context, Go has made a different choice compared to most languages, which usually come with links or wrappers for OpenSSL or simply don’t provide any cryptography in the standard library. Rust doesn’t have standard library cryptography, it has very good implementations in the ecosystem. JavaScript only has web crypto, but it depends on what platform you’re running on. Python doesn’t come with a crypto standard library. Go does; you’ll find anything from a TLS stack implementation for HTTPS servers and clients all the way to HMAC or any other primitive that you might need to make signatures to verify hashes, encrypt messages.

Outside of the Go standard library, we have what we call the supplemental repositories. We usually call them the X repos because you fetch them from golanguage.org/x/crypto, for example, the cryptography one. This brings additional implementations and it has all sorts of things that usually are a bit more modern or a little less often used. The only main difference these days, it used to be that these were allowed to be less stable or less things. That’s not true anymore, I honestly gave up on that about a year ago. The distinction these days is just that the standard library gets released along with the language, Go 1.13 will come out soon, it will bring out new rotation of these packages.

These packages are just a Go module that you install an update just like you installed an update on your normal dependencies. I think that the decision to implement cryptography libraries in Go has a number of reasons, some of them are very specific to Go, like cross-compilation requirements. What I want to talk more about is how in the other direction, Go is a good language to implement cryptography. If you know a bit about Go, you might imagine the standard reasons for that. Go is memory safe, so you don’t risk buffer overflows and vulnerabilities like that. Heartbleed, the vulnerability in OpenSSL, could not have happened in Go because there is no way for other to run buffer.

Performance, of course – Go is not as performance as your C compiled by LLVM might be, but it can still deliver a level of performance that is usable. While writing the core of your cryptography in Python might not deliver something that you can actually use in production, writing it in Go, many times is sufficient. Go binaries are reproducible, which is a property that I really care about because it means that every time you build the same program in Go twice, even on two different machines, as long as you’re targeting the same architecture and operating system, the binary will be identical even if your cross-compiling. It will keep being identical forever, so it doesn’t matter when you compile it, as long as you use the same version of the compiler, the same version of all the dependencies. This is important in security because it means that you can verify that a binary comes, indeed, from the source it says it comes from.

Finally, Go is fairly easy to statistically analyze. These are the reasonably obvious reasons for which Go is good for cryptography but the ones I found are more important are the same reasons that Go makes for good language for a number of different users. Again, implementing cryptography is inheriting the early complex, so the fact that Go is a clear language is explicit, it always has explicit control flow, you can always tell what is going on in the language, there is no operator overloading.

When you look at some code, you can be sure what it does. When I look at some signing verification, I don’t have to worry that an exception will happen and then get quote somewhere else and skip a verification check or anything like that. Documentation is extremely easy and very well built into the culture. In Go, you just document things by adding comments to the export and symbols, which you might be familiar with if you use tools like Oxygen, but there’s also very good centralized tooling for showing this documentation.

Finally, there’s something that you might be confused about, “Wasn’t this about cryptography, why are we talking about code for matters?” One of the reasons I enjoy writing cryptography in Go is that there is a standard code for matter, go fmt. Basically, everyone in the community formats their code the exact same way, tabs instead of spaces, which might be controversial. It might not even be what I used to do but at least it’s always the same. When you’re looking at two pieces of code and trying to work out with the difference is, trying to figure out why one piece of code is working and that one isn’t, you always feel you’re in unfamiliar territory. All of these things reduce the overhead and the complexity of programming in the language, which is important when the baseline complexity is already so high because you’re implementing something complex.

All of this works out, the Go cryptography library has been solid for years now from before my tenure too and it’s been used in production in a number of places, it’s used in Kubernetes, it’s used in Docker. When I was a co-author, I used it to implement the TLS 1.3 prototype that run on the whole CDN for all the clients. There was no NGIX in the middle, there was no OpenSSL, we run Go straight on the edge. This helps the language succeed because some of the most common use case of the Go language. Making microservices and having an easy to use HTTP, TLS, HTTPS library, made it very easy to make self-contained services that work securely on your network.

However, it’s important to keep in mind what the goal of implementing this library was. The Go project is not in the business of building a cryptography library. The goal is keeping the ecosystem secured, the goal is providing security for Go programs for Go developers. The cryptography libraries are just a tool for that Goal, so my role is to develop a good cryptography library but that’s because I am trying to keep the ecosystem secured. It’s important in this to manage complexity on our side so that developers that are implementing something that requires HTTPS, something that requires signing, something that is requires encryption, don’t have to manage that complexity themselves.

This is important for anyone depending on the code they make. Any complexity you don’t address in your own work is complexity that you are going to delegate to your users. In open source project, it’s clear: if I don’t take care of complexity and just push it outwards out of the API surface, the millions of Go developers will have to handle that complexity. It scales all the way to work projects where someone will use your library. That someone might be you later, it might be the team next to you, it might be your team. Anytime a library does not actively manage the complexity it’s exposing, it’s delegating that complexity downstream to whoever using it.

This is, of course, hard to action. This is maybe a lofty goal, but some of the challenges that I encountered when I joined is that the crypto libraries were developed by Adam Langley. He did a fantastic job at building them and I liked that they’re not complex, I like that they are safe into how do I decide every day what to do and what not to do.

Until last year, I worked on defining the principles that defined this philosophy more explicitly and I came to a conclusion that the Go crypto library is trying to be secure, safe, practical, and modern. I say them in order because I think it’s important to know what the priorities of where you’re going with your project are. Secure is obvious, we don’t want to have vulnerabilities. Decisions on it are easy; if there’s vulnerability, we fix it – easy. Not easy to do maybe, but easy to decide, easy to think about. I like to think of secure also meaning as if we can’t guarantee that something is secure, if we can’t figure out how to test that, we should not merge it.

Equally important and often overlooked is that they should be safe. Safe here means that they are hard to use incorrectly, that they’re not just possible to use securely but that developers will use it securely. You will say, “You can’t go and make people use your libraries correctly.” That’s true, but I also can ask them to learn cryptography to use my libraries. If people need to understand cryptography to use the library, I failed. Safe means that default should be safe, it means that documentation should be clear about what you can and can’t do and what security it provides them and what it doesn’t. It means that if there is an insecure option, for example, if you really want to turn off certificate validation in TLS, it should be obvious that you’re doing that so that you or your code reviewers can spot it. Something that I am applying as a hard rule now is that anything insecure needs to shout it from the API.

I like short names, Go has a tendency to make shorter names than Java or other languages, but I will go out of my way to put insecure, legacy, superfluous even, in the exported APIs when I think you should make an active decision to opt into something that will be in your code. I want everybody who uses a weird version of CATX that was never standardized to have to put a comment about it that says, “Yes, I know it says legacy but I’m sorry, this happened, we have to.” Cool, we’re all adults, we’re all developers, we can make our decisions but I want these decision to be explicit and opt-in. The option to disable TLS certificate validation is called InsecureSkipVerify.

Next, practical, because at some point, you have to acknowledge that you have a job to do, you can’t just make the perfectly secure, perfectly safe empty library. Zero lines of code, zero vulnerabilities. I call it dangerous because it’s easy to call everything useful or everything practical. If someone comes with a feature request, it’s because they need it. Sometimes they might be misguided, and they might need something else but oftentimes when a developer comes to you and wants to implement something, that is a valid use case. That’s why they’re in order, they’re in order because I want to be able to say, “Yes, this sounds useful, but I don’t think we can implement it securely with the resources we had,” or, “Yes, this sounds useful but it will not at all be safe because people would turn it on without realizing” or “People would have to turn it off to be secured and that’s not an option, defaults have to be secured.”

We picked practical instead of useful because you have to decide what your use case is at some point and what you’re trying to enable. The job is to let Go developers develop secure applications effectively but at some point, there will be something that is too niche. I’m a big fan of providing hooks, callbacks, ways for people to plug in their own weird things when it’s safe to do so but in general, you have to decide what your scope is and this will come up again because it’s critical to managing complexity.

Finally, modern, I wanted to finish it on a note up because you can keep it very secure and very safe by never moving and stay in the exact same implementations as 15 years ago and then Libsodium happens, as it should. Libsodium is a C library that I truly recommend if you are working in the language that has bindings for Libsodium, which came out as a safer, more modern, more opinionated C cryptography library. I consider my job to make sure that Go never needs Libsodium, that we can keep adding the new things that people need, the more modern, the better alternatives: TLS 1.3, SHA-3 – controversial. Adding the things that people need in order to stay up to date with the state of the art.

This is also, by the way, why the Go cryptography library is not FIPS validated. FIPS is a certification that moves necessarily slowly because it’s a government certification and sometimes the cryptography engineering community figure out a way to do something better. For example, ECDSA signatures – it turns out you shouldn’t really do them with random IDs with random values. We learned that lesson painfully, lots of bitcoins were stolen, but now we know that it’s better to do it differently. FIPS requires a certain way of generating randomness. The problem is that you can’t stay modern when you also have to move at the pace of certification which, of course, it’s not their fault, certifying something is hard.

How the Go Cryptography Libraries Are Different

You might be noticing a pattern of trying to do something a little different from other cryptography libraries. All the principal sound intrinsically good, nobody will come in and argue, “No, you should not make a safe library,” or, “No, security is not that important,” or, “I don’t know, modern sounds bad to me.”

However, in practice, where we are different is how we prioritize things. You might have noticed that nowhere on the principles there was performance, for example. Performance should be sufficient such that it will be practical, because if it’s too slow to use it, you’re going to roll your own or you’re not going to use it, so it’s not being practical, so it’s not being useful. As long as performance is sufficient, I don’t care if we’re 20% lower than OpenSSL, that’s not a priority of the Go crypto libraries. Universal support is not a target, I don’t care if there are some ciphers that we don’t support as long as in practice, you get to talk with most of the TLS servers in the world. Yes, you don’t get to choose all the different ways to talk to the servers but as long as you can get your job done, once that’s taken care of, which, of course, is about practical, it’s not a priority to having implemented everything, just like it’s not a priority to implement uncommon use cases. Go has good support for modules these days, we’re rolling it out. It has a very good ecosystem of really modern software, so you can go and use something more tailored if you have a long tail use case.

Instead, priorities are readability, because the thing we’re implementing is complex, so we need to keep the code as readable as possible. We have to reduce the overhead complexity to be able to fight this fight and truly, this is not just about cryptography. You might have noticed by now, this talk is a ruse to convince you to manage complexity in your own code bases because I don’t think cryptographers are the only ones that have to implement something complex. Sometimes you have complex rules to interact with legacy clients, sometimes you have complex database algorithms to deliver the performance you need, sometimes you have complex interactions with hardware. Sometimes you just have complexity from the platform you’re running on, I find programming for the web extremely complex myself. It’s important to fight that complexity by managing it everywhere you can, removing the complexity of how the code reads so that you can focus on what the code is saying.

Safe defaults are important in and out of security context. Then, good guidance, documentation examples. People want to copy-paste, everybody will copy-paste, everybody will copy-paste how to use the crypto libraries and it will either be from the examples I write or from Stack Overflow. That will happen also for your libraries because as long as you are not going to be the only one who ever uses your libraries, somebody will want to copy-paste an example of how to do it. Examples are extremely important.

Now, let me show you an example of what I mean with not implementing everything. This is the list of OpenSSL supported ciphers. There are things in there like Camellia, Idea. There’s more that I don’t actually remember the names of. Those are names of ciphers that you probably never heard. Who had ever heard of Camellia? One hand? Yes. Right there, implemented, negotiable, when you try to look to a server that has all ciphers enabled, that’s in that. These are the cipher suites that crypto TLS implements and six of those are not enabled by default and you have to explicitly go out of your way to get them to your program. Essentially, you just use three variants with a bit of combinations of certificates.

This is to say that most of the value of the Go cryptography libraries is in what we don’t ship. We have good implementations, there are people that contributed really fast implementation of a number of things, I’m really happy about it but what I’m really happy about is all the things that are not in there. All the configuration options that are not in there, all the decision you don’t have to make when you use the library. Again, any library can benefit from this. A library that when you use it doesn’t ask you many questions and does the thing well enough that you can carry on with your day is a delight to use.

In particular, I always call out that I never want to add knobs. We are developers, we always want to solve the problem by adding another argument to that function, add another value to that enum, add another option to that interface. It’s an easy way to solve a problem on the spot and if feels good because we are problem solvers. That’s what feels good about programming, there’s a task and then we get it done. Someone comes and they need a semantic that is slightly different from the one you already have, “Can you just add another option to enable that semantic?” You go in there and you know how to do it, so you just write 10 lines and you’re happy and you satisfied the ticket, fixed.

You do it once, twice, thrice, you do it 10 times and then you have a legacy piece of software and now you want to rewrite it because you want to just bring all of that together. Refusing knobs is, I think, the most important baseline for decisions I make in the crypto libraries.

Then, making a curated selection of features. When I implement a cipher, I want it to be the best cipher for you because I don’t think you care what cipher you encrypt something with, you care that it’s encrypted security. And that it will interoperate well with other things, of course, and that it will be performance. These are decisions that you have to spend a lot of time learning what’s in my brain to then make them yourself if I gave you all the options and no guidance.

Instead, I want that if you see something in the libraries, “Ok, if it’s not marked as deprecated, I don’t have to worry about reading papers to find out if this was broken in the last five years or not.” I think that the most value we deliver is by curating. We deliver value by resisting adding things, by implementing 80% of the features with 20%, 10%, 5% of the complexity. To do that, you have to know your community, you have to know your use case, you have to know your downstream. That’s true both of, of course, open source projects that need to know who their community are, but also of your work projects, you need to know who is using your library and what for.

How I would like to word it is that maintaining a cryptographic library is an exercise in resisting complexity. Complexity always trends up, new features are added, things get broken and need workarounds. New research is done, new, better, modern things are added. Clients come out that don’t really interoperate with your thing, unfortunately. Complexity always turns up, the job of an owner of a library that’s used by many downstreams is to resist that complexity.

I like to think in terms of complexity multipliers. Every time I accept some complexity into the libraries, I am removing a bit of complexity from whoever is asking me for that feature that would have had to work around it in Slack or implement it themselves, and I am placing a little complexity on everybody else. This, of course, changes depending how wide everybody else is. For the Go standard library, it’s 2 million developers, so every time I take even a little bit of complexity, I am adding complexity below the API surface maybe, but for everybody that uses the library.

How the Go Cryptography Libraries Stay Different

Finally, I want to talk about how we make sure that the crypto libraries stay like they are. I told you what the principles are, I told you what my goal is, I told you how I want to resist complexity and how you should do. Now I’m going to talk a bit about how in practice we fight that. The cool thing we’re up against is that there’s a fundamental symmetry. It can take 10 times more time to review, maintain, verify all of that, and periodically fix a piece of code than it takes to write.

I’ll be honest, I love writing cryptography code because you get to do a thing that, intuitively, you’re not even that convinced it should work. There are things in cryptography that I’m still not entirely convinced are real, like zero-knowledge proofs, you can’t prove something about a statement without saying it. Ok, I don’t entirely have an intuition for the fact that it works but then you write it and it interoperates with the thing, it does the thing and you know from the paper that it has some properties and you can see it and that’s a thrill. I’m sure different people like writing different code, but writing code is fun and it’s the easy part of it because it has a clear success result, it runs, it passes the tests, it does the thing.

Most of the job is after, most of the job is getting it through code review, most of the job is reviewing it yourself or getting other people to review it and their time in reviewing it, maintaining it later, carrying that complexity for the rest of the life of the project, or until you get to make the much harder push to remove it.

This is especially true for things like assembly code. Sometimes, the Go compiler is not smart enough for delivering the performance we would want. For example, the Go compiler does not have auto-vectorizer and what a vectorizer does is just recognize that you’re doing something in a loop over, say, four integers and realize that you could just pack those four integers into a wider register and then just do the thing one quarter of the times over that wider register instead, vectorization.

It gets wider and wider, the newer CPUs make these registers more and more wide and they take more and more power and gets more and more complex to decide whether to use them, but the core point is that they can make your things six times faster. This is a PR to make ECDSA, elliptical implementation six times faster on a specific architecture by rewriting it in Assembly. It’s 2,500 lines of handwritten Aassembly. The reason is that when you write in Assembly, you have to do things like unroll loops yourself. Remember that loop we said we can do one quarter of the times? Yes, but you have to write it manual each time because there’s no compiler to do that for you when it delivers the performance gain. Frankly, we don’t have the maintainer resources to review 2,500 lines of Assembly, or at least not on a regular basis. If it happens once a year, that’s doable, but that’s not sustainable.

Here is a lesson that is hard to admit because all of us have an idea of what we want our project, our service, our system to look like in our head and we know what we would want it to be if we had unlimited resources. We don’t want to admit that it’s not perfect, that it’s the best it could possibly be because we don’t have the resources to make it that good. That PR could probably be made secure if we had more maintainers to review it, if we had people that could specialize in PowerPC architectures to verify it but the reality is that what secure is, is relative to your maintainer resources. Complexity that you can take is relative to the maintainer resources.

What we can secure is not an absolute, a PR is not secure or insecure, there are some MLT or there is not. It depends on whether we have the resources to keep it secure in the future to other than now, to improve it over time so that we fight that trend of complexity that goes up, to fix bugs when they come out and to apply new testing techniques later.

To fight this, I’ve been working with policies and making, for example, a policy that if you write Assembly, I need you to write as few as you can and explain why you wrote Assembly and to comment it well and make tests. This was a failure, this didn’t work. That’s because it was not commensurate to our maintainer resources. This made it harder to write Assembly, but it didn’t make it easy enough that we could review it confidently.

There is a new version of the Assembly policy that is going to come out after we discuss it with the contributors that do make that work but I’m going to give you an advanced peek. It says things like, “You have to code generate this.” I don’t want to see just handwritten Assembly, I want to see a tool that generates Assembly so that if you want to write the same thing 15 times, changing only one thing at a time, you can write a program that does that and then I can review the program and say, “Yes, it always prints the same thing and it just changes this register, it sounds good to me.” I wanted to have small units so that we can aggressively fuzz each operation.

For example, there was once a bug in an elliptical implementation in Go where subtraction was broken – subtracting two numbers. It took forever to find it because to hit the right inputs to break that function from the way larger dual complete elliptic curve operation function, it would take 2 to 40 operations. We eventually found it, but it would have been found much faster if we had 50 lines for each units to test, so that’s going to be a requirement. There’s going to be a requirement of reference Go implementation for two reasons: because I want to understand what you are trying to do and, of course, it’s easier to read Go than it is to read Assembly, and because it’s where you document, “Here is where to Go compiler does not do the thing that I do manually in Assembly.”

This is, of course, a lot more work but this is a way to fight complexity and it’s a policy which is commensurate to the maintainer resources. Just like what is secure and what is not is relative to the maintainer resources, policies need to be relative to maintainer sources too. Policies are not the only way to approach this. You can often make core changes that reduce complexity in everything else that can use them.

For example, one reason that a lot of implementations were using Assembly was not to do weird vectorization things, it was to multiply two uint64. When you multiply two uint64, you get a uint128, double wide. The Go language does not have a uint128 because our architectures don’t have it and so, it’s lower to do it in Go in two house and two parts than to do it in Assembly. This is a new intrinsic that is implemented by the Go compiler that just tells it, “Multiply these two uint64 and put in this two output of uint64.” That made a bunch of Assembly implementations be not that competitive against the Go implementations that use these.

Then there is tooling; fuzzing, mutation testing, we are working on that soon where since you can’t really tell with constant-time Assembly what is covered – code coverage is nice. The problem is that constant-time Assembly, by design, all runs all the time, so you don’t know which operations are being done or skipped because they all execute. Instead, mutation testing, you take an Add with Carry, you change it into an Add, and if your tests don’t break, you’re not testing the Carry.

Then, things like reusable tests, I’m a big fan. If you are the maintainer of a project that requires an interface, defines an interface, like the crypto.Signer. You can write tests that just test the interface behavior and then anybody that implements that interface will have very good tests and you will have a cleaner ecosystem.

The last part about this effort is that this is a community management job. Whether your community is your users, colleagues, customers, or anybody who has requests for your code. In Go, we have a proposal process; you just open an issue and you call it proposal column and it says, “I would like the crypto libraries to do this and that.” What’s that about is delegating complexity, someone is asking you to take complexity of them into the project. The problem is that now you’re taking up that complexity and every other user will suffer at the same time. This is why I like to say that everyone wants their proposal accepted and everybody else’s rejected because they understand that their proposal being accepted will reduce their complexity but everyone else’s proposal that gets accepted will increase the complexity of what they depend on.

This is always a judgment call, it’s extremely hard to make clear cut policies for what goes in and what does not. At the end of the day, a lot of the job is also understanding your community, understanding your users or customers, and understanding what things you do need to take up from them and what things you can justify not doing and justify it, explain to them you do like low complexity, so here’s how we maintain it.

Finally, the most satisfying kind of software engineer – removing things. In Go, we can’t remove things per se because we have a compatibility promise. When you update Go, nothing breaks, that’s our promise. We don’t remove APIs, we don’t change behaviors of exported APIs. Instead, we deprecate, deprecation are very formal things where you add that comment that is machine readable and a common line in linter tools will warn anybody who is importing this package, “You are using something deprecated.” In case of cryptography, I went on a deprecating spree and went to remove a bunch of things that you don’t want to use for one reason or the other, whether they are not safe, not secure, or not modern.

Sometimes you can’t just deprecate, it’s a matter of deprecating and replacing. The curve25519 API we had did not return an error because in most cases, you don’t care about malformed inputs, except sometimes you do and that’s not safe, that’s not a safe default, it’s leaving edge case in which it’s not secured to use the library. We are deprecating the old API and replacing it with a new one that always returns an error so that by default, it’s secured.

There are things that we get to remove like SSLv3 which has been completely broken for 5 years and been deprecated for about 20. This is something that we will remove but we’ll remove in stages, it will be first deprecated for a release and then six months later we’ll remove after getting that feedback from the community. In its place, you get things like TLS 1.3 because when you recover complexity budget by removing things, you get to spend that on more modern things.

Conclusion

In conclusion, what I want everybody to take away from this talk aside from the fact that Go has a cool set of cryptography libraries, is that every project has a complexity budget. It’s how much complexity you are taking up before it becomes an issue whether you acknowledge it or not because, yes, cryptography libraries start with a very complex baseline, but every project has a budget. Our budget is smaller, because we have to spend so much of it on the actual algorithms. Even if you get more slack because you have more understandable things to implement, it’s important to make sure that you don’t let complexity that trends up overshoot your budget.

You should actively manage your complexity budget, you should know what it is, you should know how much you have left, and you should know how everything that you implement is going to cost you. That famous mythical prototype that we will replace in three months does not exist.

Questions and Answers

Participant 1: As someone who dibble in open source, I mean, [inaudible 00:46:37] Go libraries myself, how much time do you actually spend, because as programmers, we like to learn new things and write them, so what’s the ratio or number in actually talking and comment other people’s pull request and accepting or rejecting them versus writing your own fixes or new implementation inside the libraries?

Valsorda: The question was, if I understand it correctly, what’s the ratio between how much time you spend discussing other people’s changes or reviewing versus making your own changes. I think cryptography is a bit particular because I spend a lot of time talking about other people’s requirements but not really their code. Assembly implementations – yes, a lot, but aside from that, I write a lot of the code that eventually lands. That’s different in other open source projects I manage because they have wildly different maintainer resource budgets and complexity budgets. They can spend more complexity, because they don’t have the maintainer resources to reimplement everything or discuss everything at that much length.

Participant 1: Just spend more time at other people’s pull request?

Valsorda: Yes.

Participant 1: They can’t have that because they don’t have their own…

Valsorda: Mostly because they will come with a PR more often than on the crypto libraries, but this is really depends on your project. Something that you might have noticed is that I did not really discuss what complexity is because that’s subjective. What I want people to take away is that it’s something you should be actively managing, so what I want you to do is ask that question even if I don’t have the answer. I want you to think, “Should I be spending more or less time on this or that? Should I be pushing back more or less? Should I be taking these changes?”

Participant 2: Has anyone built a PKI in your language?

Valsorda: What do you mean a PKI?

Participant 2: Like a CA.

Valsorda: TLS in x509 packages support the web PKI, that’s their main goal. Let’s Encrypt CA, the free automated one, their software called Boulder is written in Go, so that’s what powers the biggest CA on the internet. They have HSM for the actual keys but everything built around that is in Go. There are also other PKIs that run on Go, I think that we often see issues with the Estonian ID cards because I think Go is involved in the Estonian election process, which is terrifying. But I’m very happy that it is and I don’t have a reason to think it’s a bad choice at all, just my job.

See more presentations with transcripts

MMS • Joe Duffy

Article originally posted on InfoQ. Visit InfoQ

Transcript

Duffy: I’m really excited to talk about our approach to infrastructure as code and some of the things that we’ve learned over the last couple of years. One of the things I’m going to try to convince you all of is that infrastructure is not as boring as one might think. In fact, learning how to program infrastructure is actually one of the most empowering and exciting things as developers we can learn how to do especially in the modern cloud era. I’ll start by telling you a little bit about my journey, why I came to infrastructure as code, why I’m so excited about it. I’ll talk a little bit about current state of affairs, and I’ll talk about some of the work that we’ve been doing to try to improve the state of affairs.

First, why infrastructure as code? When I see the phrase “infrastructure as code,” I’ll admit I don’t get super excited about it even though that is an important technology to have in your tool belt. The reason why, when I break it apart, my background is as a developer. I came to the cloud through very different routes than I think a lot of infrastructure engineers and so, that gave me a different perspective on the space. I started my career and I did a few things before going to Microsoft, but I think the most exciting time in my career was at Microsoft where I was an early engineer on the .NET framework and the C# programming language, so back in the early 2000s.

I did a lot of work on multi-core programming and concurrency, created the team that did the Task framework and Async and ultimately led to Await. Then I went off and worked on a distributed operating system, which was actually eye-opening when I came to cloud because a lot of what we did foreshadowed surface meshes and containers and how to manage these systems at scale and how to program them. Then before I left Microsoft, I was managing all the languages team, so C#, C++, F# language services and the IDE. What really got me into cloud was taking .NET open source and cross-platform. The reason Microsoft did that was actually to make Azure a more welcoming place for .NET customers wanting to run workloads in Linux in particularly containerized workloads.

That might not have been obvious from the outside that that was the motivation but today, that’s really working well with .NET Core and Azure. In any case, that got me hands-on with server lists and containers and I got really excited. I knew for a while I wanted to do a startup and every year I wonder, “Is this the year?” I saw all everything with cloud is changing the way that we do software development and so I saw a huge opportunity. I didn’t know it was going to be infrastructure as code until I got knee-deep into it, but the initial premise was all developers are or soon will become cloud developers.

When you think of Word processors in the 90s, we don’t build that software anymore. Even if it’s a Word processor, it’s connecting to the cloud in some manner to do spell checking or AI, machine learning, storage, cloud storage. Basically, if you’re a developer today, you need to understand how to leverage the cloud, no matter what context. Even if you’re primarily on-prem, a lot of people are looking to using cloud technologies on-premises to innovate faster.

As a developer, if I’m coming to the cloud space, why do I care about this infrastructure stuff? If I go back 15 years, I would go file a ticket and my IT team would take care of it and I didn’t have to think about how virtual machines worked or how networking worked or anything like this. It was convenient, but those are simpler times.

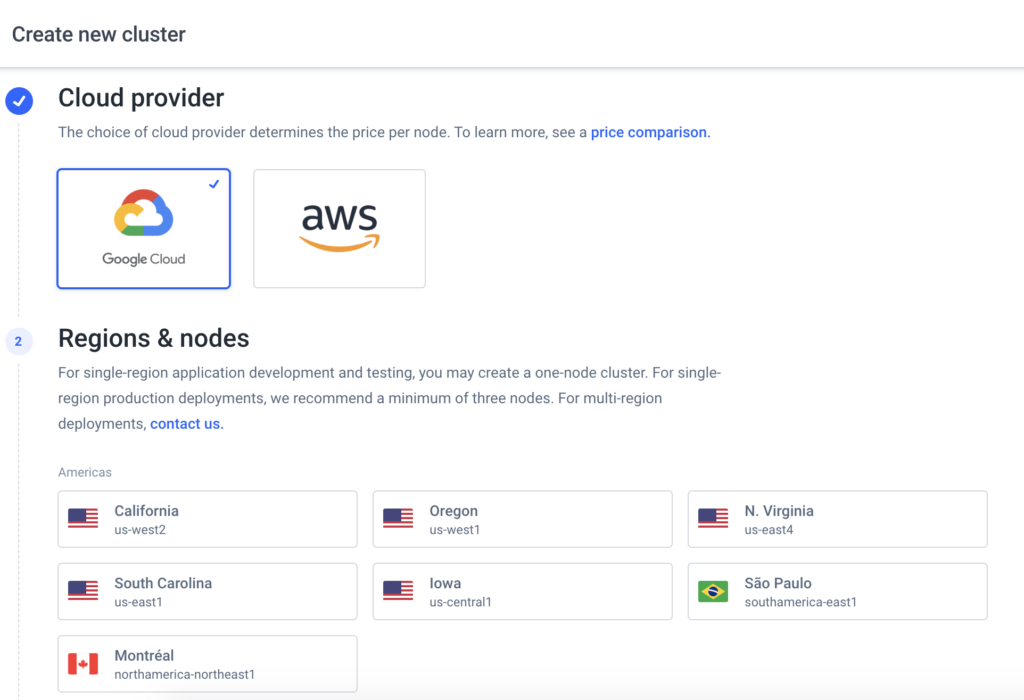

Google modern cloud architecture in AWS on the left and in Azure on the right and the thing you’ll notice is there’s a lot of building blocks. All these building blocks are programmable resources in the cloud. If you can tap into how to wire those things together and really understand how to build systems out of them, you can really supercharge your ability to deliver innovative software in a modern cloud environment.

Modern Cloud Architectures

I’ll talk a little bit about the current state of affairs. I spent a lot of time contemplating, “How do we get to where we are today where we’re knee-deep in YAML?” For every serverless function you write, you’ve got an equivalent number of lines of YAML to write that are so hard to remember that you always have to copy and paste them from somewhere or you have to rely on somebody else to do it. I think how we got here was, if you fast forward to the 2000s and virtualization was a big thing, we started to virtualize applications, we took our end tier applications, we virtualized them usually by asking our operations and IT folks that help configure these things. It was black box, you didn’t have to think about it. It was ok because there were too many of them, maybe you had an application in a MySQL back end or maybe you had a front end, a mid-tier, and a database.

Things are usually fixed, they’re pretty static, so you would say, “I think I need three servers for the next five years,” and then you buy those servers, you pay 2,500 bucks amortized over three years for each of those servers and that’s the way IT worked. I’d say the Cloud 2.0, to me, that’s really the shift in mindset from static workloads to dynamic workloads, where instead of provisioning things and thinking it’s going to stay fixed for five years – things change – suddenly, your applications a lot more popular than you thought it was going to be or maybe it’s less popular. Maybe with some major IT initiative for a new HR system and then suddenly, they decided to go with Google Hire or something and suddenly that thing wasn’t necessary, now you’ve got three VMs just sitting there costing money that are unused.

We moved to this model of more hosted services, more dynamic, flexible infrastructure. We go from 1 to 10 resources in the Cloud 1.0 world to maybe a couple dozen in the 2.0 world. Now, when you think of like a modern AWS application, you’re managing IM roles, you’re managing data resources, whether that’s like hosted NoSQL databases or RDS databases. You’re doing serverless functions, you’re doing containers, you’re doing container orchestrator if you’re using Kubernetes, diagnostics, monitoring. There’s a lot of stuff to think about and each one of these things needs to be managed and has a lifetime of its own and is ideally not fixed in scale.

You want to be able to scale up and down basically dynamically as your workloads change. I think the old approach of throw things over the wall and have them configured, we’ve tried to apply that to this new world and it’s just led to this YAML explosion that is driving everybody bonkers.

We used to think of the cloud as an afterthought, it was, “We’ll develop our software and then we’ll figure out how to run it later.” These days, the cloud is just woven into every aspect of your application’s architecture. You’re going to use an API gateway, you need to think about how routes work and how does middleware work, how you’re going to do authentication. There’s so many aspects that just deeply embedded within your application and you want to remain a little bit flexible there, especially if you’re thinking of things like multi-cloud or if you’re thinking of potentially changing your architecture in the future. You don’t want to take hard dependencies necessarily, but honestly, for a lot of people that are just trying to move fast and deliver innovation, that is the right approach is just embrace the cloud and use it throughout the application.

The Cloud Operating System

There’s another area where maybe I’m crazy, I think of things a little bit differently than most people, but I think of the cloud as really it’s a new operating system.

Over 10 years ago, when they kicked off the Azure effort inside Microsoft, it was called Red-Dog at the time. Dave Cutler, who was the chief architect of Windows NT used to describe it as a cloud operating system and everybody thought he was nuts, nobody knew what he meant. I think he really saw it as you think of what an operating system does. It manages hardware resources, whether it’s CPU network, memory disk. It manages lots of competing demands and figures out how to schedule them. It secures access to those resources and most importantly, for application developers, it gives you the primitives you need to build your applications.

It turns out if you just replace the phrase operating system in that phrase, in all of that, with cloud, it’s basically what the cloud does. It’s just operating at different granularity. The granularity of a traditional operating system is a single machine. Of course, there are distributed operating systems but most of those never really broke out of research, they’re fascinating to go study, but most operating systems we think of, whether it’s Linux or Mac or Windows is really single machine, whereas now we’re moving to multiple machines. Instead of the kernel calling all the shots. If you remember the Master Control Program from “Tron,” it used to be the kernel, well, now it’s the cloud control plane.

The perimeter is different, now you’re thinking of a virtual private cloud instead of just a single machine with a firewall running on it. It goes on and on, processors and threads, or processes and threads versus VM containers and functions. That was another perspective when coming to the space from a languages background, you look at Async programming and all the things we can do with thread pools and then you look at serverless functions. There’s definitely an interesting analogy there where, “Wouldn’t it be great if serverless functions were as easy to program as threads and thread pools are in AsyncTask in your favorite language?”

Managing Infrastructure

That starts to get me into the topic of infrastructure as code. Basically, you move from a world of thinking in your programs of allocating kernel objects. Maybe you’re allocating a file or a thread or whatever the objects are in your programming language that you’re doing up. Now you’re thinking about, “How do I stitch together lots of infrastructure resources to create applications?” Now you’re thinking of networks and virtual machines and clusters and Docker containers and all the data storages required to power your application.

I think when you get here, when you say to yourself, “I want to build a cloud application,” the initial approach for most people is, “I’ll go into AWS, Azure, Google Cloud, DigitalOcean,” whatever your favorite cloud provider is, go into the console, point and click to say, “Give me a Kubernetes cluster, give me some serverless functions,” all the things you need. First of all, it’s really easy to get up and running. If you don’t know the things you want in advance, you can go click around, there’s good documentation and there’s visualizations that guide you in the right direction, but the problem with that is it’s not repeatable. If you go build up your entire infrastructure by pointing and clicking in the UI, what happens when you want to provision a staging instance of that? Or maybe you want to go geo-replicated, now you have to have multiple instances of your production environment. Or what happens if something gets destroyed?

Somebody accidentally goes into the UI and thinks things are in the testing account and he says, “Delete everything,” and then realizes, “That was actually in production.” That happens more often than you would think. It’s also not reviewable. You can’t go into a team and code review that, you can’t say, “Am I doing this correctly?” unless somebody stands over your shoulder. It’s not versionable, so if you need to evolve things going forward, you’re going in and doing unrepeatable state changes from some unknown state to another unknown state.

What most people will do as the next step is, they’ll adapt scripts. All these cloud providers have SDKs, they have CLI, so you can always Bash script your way out of anything and that’s slightly more repeatable. Now you’ve got a script – and I’ll show in a few slides the difference here, but it’s not as reliable. If you’re provisioning infrastructure and you’ve got 30 different commands you have to run to get from not having an infrastructure set up to having a full environment, think about all the possible points of failure in there and how do you recover from them? If it fails at step 33, is that the same as if it fails at step 17? Is that automatable? Can you recover from that? Networks have problems from time to time, the cloud providers have problems from time to time, so this is not a resilient way of doing things.

Usually, the maturity lifecycle of an organization starts from point and click, moves to scripts, and then eventually lands on infrastructure as code. In infrastructure as code, you’re probably familiar with some technologies here, like AWS. Most of the cloud providers have their own, so AWS has Cloud Formation, Azure has Resource Manager templates, Google has Deployment Manager, and HashiCorp has Terraform which is a general-purpose one that cuts across all of these. I’ll talk about Kubernetes a little bit in the talk, it’s not a Kubernetes talk, but I’ll mention a few things.

Kubernetes itself also uses an infrastructure as code model in the way that it applies configuration, so infrastructure as code is pretty much the agreed-upon way of doing robust infrastructure for applications.

What really is infrastructure as code? Infrastructure as code allows you to basically declare your cloud resources – any cloud resources: clusters, databases – that your application needs using code. Infrastructure as code is not a new thing, it’s been around for a while. Chef and Puppet used infrastructure as code to do configuration of virtual machines, that’s more of like the Cloud 1.0, 2.0 period of time if you go back to my earlier slides.

Declarative infrastructure as code, which all the ones I just mentioned, are in that category, not Chef and Puppet, the Cloud Formation, Terraform, etc. The idea is to take those declarations and basically use that to say, “This is a goal state, this is a state that the developer wants to exist in the cloud.” They want a virtual machine, a Kubernetes cluster, 10 services on that Kubernetes cluster, a hosted database, and maybe some DNS records. That would be a configuration that you then apply, so you basically give that to your infrastructure as code engine, it says, “Ok, this is what the developer wants, here’s what actually exists in production and then we’ll just go make it happen.” It’s basically a declarative approach to stating what you’d like.

The nice thing about that is if it ever drifts, if anything ever changes, you always have a record of what you thought existed in your cloud and this will be a lot clearer as we get into a few more examples.

Let’s say we want to spin up a virtual machine in Amazon and then secure access to it, so only allow access on port 80, HTTP access. The left side is super simple script, if we just wanted to write something using the Amazon CLI, it would work. You notice we have to take the ID that returned from one and pass it to another command and, again, these are only three individual commands so it’s not terribly complicated, it’s a reasonable thing to do.

As your team grows, as more people contribute, as you have 100 resources instead of just 3, you can imagine how this starts to breakdown, you end up with lots and lots of bash scripts. To my previous point, if something fails, it’s not always evident how to resume where you left off. On the right side, this is Terraform and this is basically effectively accomplishing the same thing, but you’ll notice it’s declarative. Instead of passing the region for every command, we’re saying, “For AWS, let’s use US East 1 region.” For the web server, here’s the image name, I want to teach you micro which is super cheap and small. I’m going to use this script from here.

Notice here that we’re starting to get a little bit into programming languages, but it’s not really a full programming language because we’re basically saying, “Declare that we’re reading in this file.” You can read it, it’s reasonable. Then, you take this and you give it to Terraform and Terraform chugs away and provisions all the resources for you.

Hot It Works

I’ll mention this because effectively all the tools I mentioned for declarative infrastructure as code work the same way. In fact, the same way the Kubernetes controllers work in terms of applying configuration also. The idea is you take a program written in some code, whether it’s YAML, whether it’s JSON, whether it’s Terraform HCl, or we’ll see later what Pulumi brings to the table is the ability to use any programming language.

You feed that code to an infrastructure as code engine and that’s going to do everything I mentioned earlier, so it’s going to say, “What do we think the current state of the cloud is and what is the desired state that the code is telling us at once?” Maybe the state is empty because we’ve never deployed it before, and so everything the code wants is going to be a new resource. Maybe it’s not the first time, so we’re just adding one server, so it’s going to say, “All the other resources run changed and we’re just going to provision a new server.”

Then, it comes up with a plan and the plan is effectively a sequence of operations that are going to be carried out to make your desired state happen, “We’re going to create a server, we’re going to configure the server,” all the things that have to go behind the scenes into making that reality. If you think of the way you’re allocating operating system objects, just to tie it back to some of the earlier points, you generally don’t think about the fact that there’s a kernel handle table that needs to be updated by the kernel when you allocate a new file handle. You’re usually just in Node,js saying, “Give me a file,” and then there’s something magic that makes that happen.

Here, it’s a similar idea, it’s just stretched over a longer period of time. In a traditional operating system model, you run a program, it runs and then it exits. In this model, you run a program and it never really exits, it’s declaring the state. Some fun things, if you ever look at some projects – Brendan Burns has this metaparticle project which takes this idea to the extreme, which is, “What if we thought of infrastructure as code programs is actually just programs that use the cloud to execute and just ran for a very long time?” It’s a thought provoking exercise.

Because we’re declaring this and this gets to the scripting versus infrastructure as code, we have a plan, so actually, we can see what’s going to happen before it actually happens and that gives us confidence to allow us to review things. If we’re in a team setting, we can put that in a pull request and actually say, “That looks like it’s going to result in downtime,” or, “That’s going to delete the database, was that what you meant to do?” You actually have a plan that is quite useful. Once we’ve got a plan, then usually the developers say, “Yep, that’s what I want, let’s carry it out.” Then, from that point, the infrastructure as code engines driving a bunch of updates to create, read, update, and delete the resources. That’s how it works behind the scenes. In general, you don’t actually have to know that’s exactly what’s happening but that’s basically how Cloud Formation works, that’s how Terraform works, that’s how Pulumi works. All these systems effectively work the same way.

Days 1, 2, and Beyond

This works for day one, but another area where comparing it to point and click and scripting where it’s important is actually being able to diff the states and carry out the minimal set of edits necessary to deploy your changes. This takes many forms. Deploying applications, constantly deploying changes like new Docker SHA hashes that you want to go and roll out in your cluster, maybe from Kubernetes or whatever container orchestrator you’ve chosen. Upgrading to new versions of things, like new versions of MySQL, new versions of Kubernetes itself. That’s another evolution that you’re probably going to do.

Adding new microservices or scaling out existing services. Leveraging new data services, maybe you want to use the latest and greatest machine learning service that Google is shipping. Anytime you want to leverage something like that, you actually have to provision a resource in the cloud. You have to provision some machine learning data lake or whatever the resource is. Then, also evolving to adopt best practices. Sometimes, everything you’ve done, at some point, you might realize it was all wrong and you have to go and change things. For example, maybe you set up your entire Kubernetes server and cluster and everything and then you realize, “We’ve got to properly authenticate everything,” and now it’s open to the Internet and anybody can come play with our Kubernetes server. It’s probably not a great thing but it happens to a lot of people. As you evolve those sorts of things and fix them, you need something that can incrementally apply deltas.

I mentioned earlier, scaling up a new environment. If you’re standing up another production environment, one thing that we find a lot nowadays is every developer on the team has an entire copy of the cloud infrastructure for their service and that makes it really easy to go try out new changes, test changes. We even have people that spin up entirely ephemeral environments in pull requests. Maybe you’re going to make a change to your application, you submit a pull request, it spins up an entire copy, runs a battery of tests, then scales it all down as though it never existed and in doing that, you get a lot more confidence than if you’re trying to simulate those environments on your desktop.

Of course, sometimes you want to do that, running Docker on your desktop is a great way to just really spot check things but at some point, before you do your actual integration, you’re going to want to run these more serious tests.

Just One Problem

In any case, the one problem with all of this is infrastructure as code is often not actually code, it’s like the big lie here. It’s very disappointing once you realize this and what it means is, a lot of times what we’re calling code is actually YAML or often YAML with Go templates embedded within them, or you look at Cloud Formation, Cloud Formation is like YAML or JSON with a mini DSL embedded within the strings, and so you can put in the strings, referencing variables. The more complex our cloud infrastructure gets, the more this just starts to fundamentally break down.

The thing that’s so disappointing for me when I came to this space is, I had heard of all these technologies, I never actually used these for months at a time in anger. That was the first thing I did when I started Pulumi, it was, “Ok, for the next three months, I’m just going to build modern cloud applications using best of breed technologies,” and it was very quick that I was just swimming in YEML. It’s not just YAML, I think YAML is fine, YAML is good at what it was designed for. I think the problem is we’re using it for what it wasn’t designed for, we’re actually trying to embed application architecture inside of YAML and for that, I’ll take a programming language any day. You get abstractions and reuse, functions and classes, you get expressive constructs like loops, list comprehension, tooling, IDEs, refactoring, linting, static analysis and most of all, productivity – all the things I spent my last 15 years basically working on.

It would be great if we could actually use those things and I think when we work with organizations to adopt newer technologies, there’s still a divide between developers and infrastructure engineers but at the same time, there’s this desire to break down those walls and work better together. I think it’s not to say every developer is going to become an expert in networking, certainly I am not, but we should have the tools and techniques to collaborate across those boundaries so that if I want to go stand up a serverless function, I should be able to do that.

So long as my infrastructure team trusts that I’m doing it within the right guidelines and if I want to go create a new API gateway-based application instead of doing Express.js which is going to be more difficult to scale and secure, I should be empowered to do that. We thought that that was the way to go. This is an example from my home chart where the thing that’s craziest about all of this is you actually have to put indentation clauses in here because that’s the YAM. This is nested so you need to indent eight spaces to the right, otherwise, it’s not a well-formed YAML. You’ve got this mixing of crazy concerns in here which nobody loves.

Remember this slide that I said, “The cloud should not be an afterthought?” It really is still an afterthought for a lot of us. For applications, we’re writing in Go, JavaScript, Rust, TypeScript, you name it, but for infrastructure, we’re using Bash, YAML, JSON. HCL is the best technology out there that’s not a full blown programming language, it gets close but it’s still different. Then for application infrastructure in the Kubernetes realm, everything is YAML to the extreme. These little Bash things because often, we’re using different tools, we’re using different languages for applications, multiple bits of infrastructure so, oftentimes, we have to do infrastructure in AWS but then we’re doing Kubernetes infrastructure and we’re using different tools, we’re using different languages, slapping them together with Bash. Hepdio have this phrase, “Walls of YAML and mountains of Bash.” I think that is the state of affairs today.

Why Infrastructure as Code?

That leads me to the next section, which is, “What if we use real languages for infrastructure also?” Would that help us blur this boundary and actually make the cloud more programmable and arm developers to be more self-sufficient, while also arming infrastructure engineers to empower their teams and themselves to be more productive and really stand on the shoulders of giants? I love that phrase because we’ve been doing languages stuff for over half a decade. Wouldn’t it be great to benefit from all the evolution that we’ve seen there?

That’s what led us to the Pulumi idea, which was let’s use real languages, we’ll get everything we know about languages. I mentioned a lot of these earlier, we’ll eliminate a lot of the copy and paste and this jamming template in languages inside of YAML. At the same time, it has to be done delicately because it needs to be married with this idea of infrastructure as code. I think when I came to the space, my initial idea was, “We should write code, why are we writing on the CYAML?” I quickly soon appreciated why infrastructure as code is just so essential to the way that we actually manage and evolve these environments.

I think one thing Terraform did really well, which was have one approach across multiple cloud providers, we talked about a lot of people that are trying to do AWS and Azure and on-prem and Kubernetes and having to do in different tools and languages clearly does not scale.

Demo

With that, I’ll jump into a quick code demo and we’ll see what some of this looks like. First, this is a pretty basic Getting Started example here. This is a very simple Pulumi program. What it’s going to do is create an S3 bucket and then populate that bucket with a bunch of files on disk. Let me see if I can pull this back up.

If I go over here, I’ll see that it’s basically a simple Node.js project and, of course, it could be Python, it could be Go, and the first thing we’ll see is we’re actually just using Node.js packages. In a lot of these other systems, the extensibility model is just not clear. Actually, most of them are walled gardens because they’re specific to the cloud provider but here, we’re actually just using Node packages. Notice we’ve got Pulumi packages, so it’s AWS, there’s one for Azure and Kubernetes. We’re also using just normal Node packages. We’re using typical libraries that we know. We could be using our own private JFrog registry if we’re an enterprise that has JFrog, for example.

Another thing that is so easy to take for granted is, I get documentation like examples. I’m benefiting from all the things that we know about IDEs, if I mistype something, it’s going to say, “Did you mean ‘bucket’? You seem to have mistyped that.” Oftentimes in these YAML dialects, you don’t find that out until it’s too late. You’ve tried to apply it, now you’re maybe partway through a production deployment and you’re finding an error. Actually, you are bringing a lot of this insight into the inner loop.

We’re basically saying, “Give us an S3 bucket, we’re configuring it to serve a static page.” Then, here is where it gets a little bit interesting where we’re actually just using a Node API, so fs.readdir, and we’re basically saying for every file, create an S3 object and upload that to the cloud. Then, we set the policy to enable public access and then we print out the URL. Usually, if you were to do this in Cloud Formation, you’re talking 500 lines of YAML or something like that. What we’re going to do here is we’ll just run pulumi up. Remember that diagram I showed earlier? This is doing what that diagram said, where it ran the program and said, “If you were to deploy this, you’re going to create these four objects.”

If I want to, I can get a lot of details about it but pretty standard stuff. If I say yes, now it’s actually going out to Amazon and it’s deploying my S3 bucket. It uploaded these two objects, notice here it’s saying it’s uploading from the www directory. It’s actually uploading this in a Favicon, but it could be any arbitrary things. This is an extremely inexpensive way to host any static content on S3 but notice at the end, it pointed out the URL. If I want a custom URL, all the cloud providers are just amazing with all the building blocks they provide, so if I want to go and add a nice vanity URL like duffyscontent.com or something, that’s just another 10 lines of code to go configure the DNS for this. You can start simple and get complex when you need to. Here, notice that it printed the URL so I’m actually just going to open the URL and then we’ll see that it deployed.

Notice here, interesting thing, I didn’t actually update the infrastructure. I updated some of the files but it’s going to detect that, it’s going to say, “This object changed, do you want to update it?” Yes, I want to update it. The flow is the same if I’m adding or removing resources. Now I can go and open this up and we’ll see, “New content.” That’s a pretty simple demo.

If I want to get rid of everything and just clean up everything that I just did so Amazon doesn’t bill me for the miniscule amount of storage there, probably one cent a year or something like that, I can go ahead and delete it and it’s done. I should mention also, I talked earlier about standing up lots of different environments. We have this notion of a stack and so if you wanted to create your own private dev instance, you can just go create a new stack and then start deploying into it, so pretty straightforward.

This is a more interesting example, where this is building and deploying a Docker container to Amazon Fargate, which is their hosted clustering service. It’s not Kubernetes but it’s pretty close. What we did here was we took the Docker getting started guide, so if we go to Docker and download Docker, it walks you through how to stand up a load balancer service using Docker Compose, this is the moral equivalent but using Fargate. It’s got an elastic load balancer on it, listening on port 80. It’s actually going to build our Docker image automatically. If I look here, we’ve got just a normal Docker file. What this is going to do is, it’s going to actually do everything. It’s going to stand up the ECS resources, it’s going to build and publish the Docker container to a private registry within Docker itself. It’s also creating a virtual private cloud, so it’s secure.

Notice this is doing a fair bit more than our previous example and I’m going to say, “Yes.” This is the power of abstraction and reuse, we can actually have components that hide a lot of the messy details that frankly, none of us want to be experts in. If you were to write this out by hand, look, here this is almost 30 lines of code. Each one of these resources, if you’re doing Cloud Formation or some of the other tech that I mentioned earlier, you’d have to manually configure every single one in excruciating detail down to the property level whereas here, we can benefit from reuse what you know and love about application programming and know that in doing so, we’re benefiting from well-known best practices.

This may not be obvious, that’s actually the Docker build and push. Depending on the bandwidth here, the push may take a little bit longer, so I’m going to jump back to the slides and then we’ll come back in just a minute.

I mentioned multiple languages, so this is basically accomplishing the same thing infrastructure as code gives you just with any language. We have others on the roadmap like .NET and actually, there’s somebody who’s working on Rust right now which will be super cool or somebody who did F# as a prototype which is really actually a cool fit for infrastructure as code because it feels more declarative and pipeline-y. More language is on the way but right now, these are some great options.

I mentioned lots of different cloud providers, so every single cloud provider is available as a package. This is a stretched picture of turtles because it turtles all the way down. The magic of languages, you can build abstractions. Then, Kubernetes – I hinted at Kubernetes along the way, but what we see is to actually do Kubernetes, you you have to think about security so you have to think about IAM roles, you have to think about the network perimeter, you have to set up virtual private clouds. You think about, “If I’m in Amazon, I want to this CloudWatch, I don’t want to do this Prometheus thing maybe.”

You really have to think holistically about how you’re integrating with the infrastructure in whatever cloud providers going to. It’s not as simple as, “Give me an EKS cluster or GKE or AKS,” all those things are really important. Also, if you’re starting with Kubernetes, did you do that because you just wanted to deploy containers and scale up container-based workloads? Probably. You probably didn’t do it because you wanted to manage your own Mongo database with persistent volumes and think about the backups and all that or MySQL when you’re coming from a world of hosted databases. Most customers we talk with are actually using RDS databases, S3 buckets, Cosmos database if you’re an Azure, but not managing the data services inside of the cluster itself but actually leveraging the cloud services that are hosted and managed.

What this shows is, you can actually say in this example, the one on the left is basic provisioning a new GKE cluster like an entire cluster, and then deploying a canary deployment in Kubernetes to that cluster. Then it exports the config so you can go and access your new cluster, all in one program that fits on a page. The one on the right is showing ladder thing I was just talking about where Azure Cosmos DB has a MongoDB compatibility mode, so rather than host your own MongoDB instance, you can spin up some containers and just leverage that. Here, we’re basically creating new Cosmos DB instance, sending up some geo-replication capabilities of it, then here’s where it starts to get interesting, we’re actually taking the authentication information for that instance and putting it in a Kubernetes secret in this program.

Usually, you’d have to take that, export it, store it somewhere secure, fetch it later on, whereas here, we can actually just take it, put it in a secret, and then consume it from a home chart. Now the home chart is going to spin up some application that accesses that database.

If I go back, my application here should be done, this is the Fargate example. If I do this, saying, “Hello, world,” so it’s just the application up and running which is, again, just our application here. I should mention, just like I showed updating the file for the S3 bucket, if I want to deploy a new version of the container, I just go and change the Docker file, run pulimi up, it’ll deployed the dif and now I’ve got the new image up and running.

This is the Kubernetes example, which is effectively just a very basic NGINX deployment object followed by a service, so it’s a standard Kubernetes thing. If I go and deploy it, it’s going to look very much like I had shown before with the caveat. This is neat, it’s actually going to show me the status of the deployment as it’s happening. I don’t know if people have ever experienced deploying something with cubectrl in the Kubernetes. You’re often found tailing logs and trying to figure out, “Why is this thing coming up? It turns out I mistyped the container image name,” or any number of other things. Whereas here we’re actually saying, “Why are we sitting here waiting? Well, it turns out GCP is now allocating the IP address because we use the load balance service,” and so we can see those rich status updates.

Even cooler than that is if we go back here, we can actually just delete all that code and replace it with something like this. Again, the magic of abstraction here. This is, “I encountered this pattern hundreds of times in my applications, I’m sick of typing it out manually by hand, so I’m going to create a class and abstraction for it.” It turns out services and deployments often go hand in hand and they have a lot of duplicative information in each of them, so why not create a class to encapsulate all that and give it a nice little interface to say, “An image, replicas, ports.” That’s just showing you a little bit of a taste of what having a language gives you, instead of copy and pasting the YAML and then editing the slight edits everywhere, you can use abstraction.

Obviously, it’s easy to go overboard with abstraction but what we find is there’s a level of abstraction that makes sense. Some people use abstraction to make multi-cloud easier, some people just do it to make some common task in whatever cloud you’re going to itself much easier.

Automated Delivery

I should mention in terms of that maturity lifecycle of going from scripting to infrastructure as code, usually, the next step is continuous delivery, so using something like GitOps where you want to actually trigger deployments anytime you check in a change. That allows you to use pull requests and all of the typical code review practices you would use for your applications, you can now use it for infrastructure and it’s just code so it’s similar, you can apply the same style guide, you can apply the same pre-commit checks that you would use for your application code.