Month: February 2020

MMS • Sergio De Simone

Article originally posted on InfoQ. Visit InfoQ

Google has released Android 11 as a preview for developers, who can now adapt their apps to the upcoming Android release and provide early feedback to help Google improve the release robustness. Android 11 includes indeed many behavioural changes that could affect existing apps, as well as new feature and API and new privacy options.

Android 11 will support a number of new APIs for media management, connectivity, data sharing, machine learning, and more.

The MediaStore API supports now performing batch operation on media files, including granting write access to files, creating “favorite” files, and trash files or delete them immediately. Apps can also use raw paths to access files to simplify working with third-party libraries. To improve debug performance, developers can load GLES and Vulkan graphics layers into native application code.

The new ResourceLoader and ResourceProvider APIs enable apps to extend the way resources are searched and loaded. This is intended for custom asset loading, e.g. using a specific directory instead of the application APK. Additionally, C/C++ developers will be able to decode image directly using the NDK ImageDecoder API.

Machine learning support is another area where Android 11 brings new features, specifically adding support for TensorFlow Lite’s new quantization scheme, new ML controls for QoS, and streamlined data management across components to reduce data redundancy. Biometrics support is also extended with the new `BiometricManager.Authenticators interface.

Android 11 also introduces several changes to privacy, which include scoped storage enforcement, background location access, and a new one-time permission model that should make it easier for users to grant access to location, microphone, and camera in a single step.

All privacy changes listed above affect existing apps and Google explicitly requires developers to check their apps are compatible with them. But Android 11 introduces a few other behavioural changes that could have an impact on existing apps. For example, the JobScheduler API enforces now call limits to identify potential performance issues; furthermore, when the user denies twice a spcecific permission, then the OS assumes that means “don’t ask again”; and last but not least, the ACTION_MANAGE_OVERLAY_PERMISSION now always brings up the top-level Settings screen, whereas it used to bring the user to an app-specific screen.

The number of changes brought by Android 11 is too long to be fully covered here, so do not miss the official documentation for full detail. Also keep in mind that Android 11 development is not completed yet and Google plans to share new preview versions over the coming months.

MMS • Matt Campbell

Article originally posted on InfoQ. Visit InfoQ

NGINX announced the release of versions 1.13 and 1.14 of NGINX Unit, its open-source web and application server. These releases include support for reverse proxying and address-based routing by both the connected client’s IP address and the target address of the request.

NGINX Unit is able to run web applications in multiple language versions simultaneously. Languages supported include Go, Perl, PHP, Python, Node.JS, Java, and Ruby. The server does not rely on a static configuration file, instead allowing for configuration via a REST API using JSON. Configuration is stored in memory allowing for changes to happen without a restart.

With these latest releases, NGINX Unit now offers support for reverse proxying. A reverse proxy sits in front of the web servers and forwards client requests to those servers. The new proxy option has been added to the general routing framework and allows for proxying of requests to a specified address. At this time, the proxy address configuration has support for IPv4, IPv6, and Unix socket addresses.

For example, the routes object below will proxy the request to 127.0.0.1:8000 in the event that the incoming request matches the match condition:

{

"routes": [

{

"match": {

"host": "v1.example.com"

},

"action": {

"proxy": "http://127.0.0.1:8000"

}

}

]

}

The proxy option joins the previously added pass and share actions for deciding how to route traffic. The pass option, added in version 1.8.0, allows for internal routing of traffic by either passing requests to an application or a route. Internal routing is beneficial in cases where certain requests should be handled by separate applications, such as incorrect requests being passed off to a seperate application for handling to minimize impact on the main application.

The share action, added in version 1.11.0, allows for the sharing of static files. This includes support for encoded symbols in URIs, a number of built-in MiME types, and the ability to add additional types.

Address based routing was added with the 1.14 release. It enables address matching against two new match options: source and destination. The source match option allows for matching based on the connected client’s IP address, while the destination option matches the target address of the request.

Also with this release, the routing engine can now match address values against IPv4- and IPv6-based patterns and arrays of patterns. These patterns can be wildcards with port numbers, exact addresses, or CIDR notation. In the example below, requests with a source an address that matches the CIDR notation will provide access to the resources within the share path.

{

"routes": [

{

"match": {

"source": [

"10.0.0.0/8",

"10.0.0.0/7:1000",

"10.0.0.0/32:8080-8090"

]

},

"action": {

"share": "/www/data/static/"

}

}

]

}

If the /www/data/static/ directory has the following structure:

/www/data/static/

├── stylesheet.css

├── html

│ └──index.html

└── js files

└──page.js

Then a request such as curl 10.0.0.168:1000/html/index.html will serve the index.html file.

Artem Konev, Senior Technical Writer for F5 Networks, indicates that future releases of NGINX Unit should include round-robin load balancing, rootfs support to further enhance application isolation, improved logic for handling static assets, and memory performance improvements.

NGINX Unit is available for download on GitHub. Support for NGINX Unit is provided with NGINX Plus. For more details on changes in the release, please review the NGINX Unit changelog.

MMS • Uday Tatiraju

Article originally posted on InfoQ. Visit InfoQ

Github shipped an updated version of good first issues feature which uses a combination of both a machine learning (ML) model that identifies easy issues, and a hand curated list of issues that have been labeled “easy” by project maintainers. New and seasoned open source contributors can use this feature to find and tackle easy issues in a project.

In order to eliminate the challenging and tedious task of labelling and building a training set for a supervised ML model, Github has opted to use a weakly supervised model. The process starts by automatically inferring labels for hundreds of thousands of candidate samples from existing issues across Github repositories. Multiple criteria are used to filter out potentially negative training samples. These criteria include matching against a 300 odd curated list of labels, issues that were closed by a pull request submitted by a new contributor, and issues that were closed by pull requests that had tiny diffs in a single file. Further processing is done to remove duplicate issues and to split the samples into training, validation, and test sets across repositories.

At the moment, Github is using preprocessed issue titles and bodies that are one-hot encoded as features to train the model. TensorFlow framework was chosen to implement the model. The common practise of regularization along with additional text data augmentation and early stopping techniques are used to train the model.

To rank and present good first issues to the user, the model is run to classify all open issues. If the probability score of the classifier for an issue is above a designated threshold, the issue, along with its probability score, is added to the bucket of issues slated for recommendation. Next, all open issues with labels that match any of the curated list of labels are inserted to the same bucket. These label based issues are assigned higher scores. Finally, all issues in the bucket are ranked based on their scores with a penalty based on issue age.

Currently, Github trains the model and runs inference on open issues offline. The machine learning pipeline is scheduled and managed using Argo workflows.

Compared to the first release of good first issues feature back in May 2019, Github saw the percent of recommended repositories that have easy issues increase from 40 percent to 70 percent. Moreover, the burden on project maintainers to triage and label open issues has been reduced.

In the future, Github plans to improve the issue recommendations by iterating on the training data and the ML model. In addition, project maintainers will be provided with an interface to enable or remove machine learning based recommendations.

MMS • Bruno Couriol

Article originally posted on InfoQ. Visit InfoQ

Endre Simo, senior software developer and open-source contributor to a few popular image-processing projects, ported the Pigo face-detection library from Go to browsers with WebAssembly. The port illustrates the performance potential of WebAssembly today to run heavy-weight desktop applications in a browser context. InfoQ interviewed Simo on the benefits of the port and the technical challenges encountered. Answers have been edited for clarity.

InfoQ: You have authored or contributed to a number of open-source projects, mostly tackling image processing and image generation problems. Triangle, for instance, for the most artistic people among developers, takes an image and converts it to computer-generated art using Delaunay triangulation. Caire resizes images in a way that respects the main content of the image.

|

|

What brought you to machine learning and live face detection?

Endre Simo: I have a long-time interest in face detection and optical flow in general, which, in turn, awoke a researcher and data-analyst side in me. Because in the last couple of years I was pretty much involved in image processing, computer vision and all this sort of things and since I am also an active contributor in the Go community, I thought that it was the proper time to undertake a project bringing about something which the Go programmers were really missing: a very lightweight, platform-agnostic, pure Go face-detection library, which does not require any third-party dependency. At the time when I started to think about the idea of developing a face-detection library in Go, the only existing library for face-detection and optical flow targeting the Go language was GoCV, a Go (C++) binding for OpenCV, but many can acknowledge that working with OpenCV is sometimes daunting, since it requires a lot of dependencies and there are major differences between versions which could break existing code.

InfoQ: BSD-licensed OpenCV also provides facial recognition capabilities and wrappers for a number of other languages, including JavaScript. What drove you to write Pigo, what features does it provide and what differentiates it from OpenCV?

Simo: First of all, I do not really like wrappers or bindings around an existing library, even though it might help in some circumstances to interoperate with some low-level code (like C for example) without the need to reimplement the code base in the targeted language. Let me explain why:

- first of all it forces you to dig deeper into the library own architecture in order to transpose it to the desired language and

- second, which is more important, it costs you with slower build times since it needs to transpose a C code to the targeted language. Not to mention that the deployment is getting way more complicated and you can forget about a single static binary file like it is the case with the Go binaries.

So the major takeaway in my decision to start working on a simple computer vision library suitable specifically for face-detection was the huge time needed by GoCV at the first compilation. The Pigo face-detection library (which by the way is based on the Object Detection with Pixel Intensity Comparisons Organized in Decision Trees paper) is very lightweight, it has zero dependencies, exposes a very simple and elegant API, and more importantly is very fast, since there is no need for image preprocessing prior to detection. One of the most important features of Go is the generation of cross-build executables. Being a library 100% developed in Go thus means that it is very easy to upload the binary file to small platforms like Raspberry Pi, where space constraints are important. This is not the case with OpenCV (GoCV) which requires a lot of resources and produces slower build times.

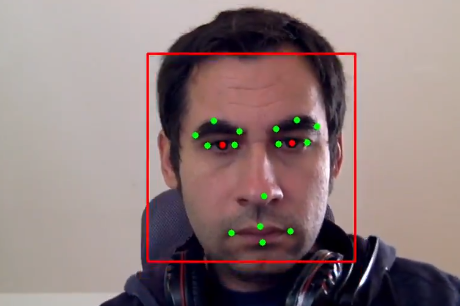

In terms of features it might not cover all the functionalities of OpenCV since the latter is a huge library with a big amount of functions included for numerical analysis and geometrical transformations, but Pigo does very well what it has been purposed to, i.e. detecting faces. The first version of the library could only do face detection but during the development new features have been added like pupils/eyes detection and facial landmark points detection. My desire is to develop it even further and have it do gesture recognition. This will be a major takeaway and also a heavy task since it implies to work with pre-trained data adapted to the binary data structure required by the library, or to put it otherwise to train a data set which is adaptable to the data structure of a binary cascade classification.

InfoQ: Why porting Pigo to WebAssembly?

Simo: The idea of porting Pigo to WebAssembly originated from the simple fact that the Go ecosystem has missing terribly a well-founded and generally available library for accessing the webcam. The only library I found targeted the Linux environment only, which obviously was not an option. So in order to prove the library real-time face-detection capabilities, I opted to create the demos in Python and communicate with the Go code (the detection part has been written in Go) through shared object (.so) libraries. I did not obtain the desired results, the frame rates were pretty bad, so I thought that I will try integrating/porting to WebAssembly.

InfoQ: Can you tell us about the process and technical challenges of porting Pigo to WebAssembly? How easy is it to port a Go program to Wasm?

Simo: Porting Pigo to WebAssembly was a delightful experience. The implementation went smoothly without any major drawbacks. This is probably due to the well written

syscall/jsGo API. Possibly, the only thing which you need to be aware if you are working with thesyscallAPI is that the JavaScript callback functions should always be invoked inside a goroutine, otherwise you are getting a deadlock. However, if you have enough experience with Go’s concurrency mechanism, that shouldn’t pose any problems. Another aspect is related to how you should invoke the Javascript functions as Go functions, since thesyscall/jspackage has been developed I think having the Javascript coder in mind. In the end, this is only a matter of acquaintance.

Another important aspect a Wasm integrator should keep in mind is that as WebAssembly runs in the browser, it is no longer possible to access a file from the persistent storage. This means that the only option for accessing the files required by an application is through somehttpcalls supported by the JavaScript language, like thefetchmethod. This can be considered a drawback since it imposes some kind of limitations. First, you need to have an internet connection for accessing some external assets. Second, it could introduce some latency between the request and response. It is much faster to access a file located on the running system than to access a file through a web connection. This can pose noticeable problems (memory consumption in particular) when you have to deal with a lot of external assets: either you load all the assets prior to running the application, or you need to fetch the new assets on the fly – which can suspend the application ocasionally.

InfoQ: What performance improvements did you notice, if any?

Simo: The Wasm integration has proved that the library is capable of real-time face detection. The registered time frames were well above 50 FPS, which was not the case with the Python integration. I notified some small drops in FPS when I enabled the facial landmark points detection functions, but this is somehow obvious since it needs to run the same detection algorithm over the 15 facial points in total.

[Example of facial landmarks detection as performed by Pigo]

InfoQ: You now have face detection running in the browser. How do you see that being used in connection with other web applications?

Simo: Running a non-JavaScript face detection library in the web browser gives you a lot of satisfaction not because it is running in the browser, since there are many other face-detection libraries targeting the Javascript language, but because you know that it was specifically designed for the Go community. That means someone familiar with the Go language can pick up the implementation details and understand the API more easily. The Wasm port of the library is a proof of concept that Go libraries could be easily transposed to WebAssembly. I see a tremendous potential in this port because it opens the door to a lot of creative development. Furthermore, I’ve presented a few possible use cases as Python demos (I might transpose them to Wasm at some time), for example a Snapchat-like face masquerade, face blurring, blink and talk detection, face triangulation etc. I have also integrated it into Caire, where it has been used to avoid face deformation on images with dense content. With the face detection activated, the algorithm tries to avoid cropping the pixels inside the detected faces, retaining the face zone unaltered.

InfoQ: How long did it take you to have a working wasm port? Did you enjoy the experience? Do you encourage developers to target WebAssembly today or do you assess that it wiser to wait for the technology to mature (in bundle size, features, tooling, ecosystem, etc.)?

Simo: Since I worked on the Wasm implementation part-time, I haven’t really counted how many hours it took to have a working solution, but it went pretty smooth. I really encourage developers to target WebAssembly because it has a great potential and it’s getting to have a wide adoption among many programmers. Many languages already offer support for WebAssembly, so I think it will have a bright feature in the following years, considering that WASI (WebAssembly System Interface) which is a subgroup of Wasm is also getting the interest of systems programmers.

MMS • Brittany Postnikoff

Article originally posted on InfoQ. Visit InfoQ

In this podcast, Daniel Bryant sat down with Brittany Postnikoff, a computer systems analyst specialising on the topics of robotics, embedded systems, and human-robot interaction. Topics discussed included: the rise of robotics and human-robot interaction within modern life, the security and privacy risks of robots used within this context, and the potential for robots to be used to socially engineer people.

Key Takeaways

- Physical robots are becoming increasingly common in everyday life, for example, offering directions in airports, cleaning the floor in peoples’ homes, and acting as toys for children.

- People often imbue these robots with human qualities, and they trust the authority granted to a robot.

- Social engineering can involve the psychological manipulation of people into performing actions or divulging confidential information. This can be stereotyped by the traditional “con”.

- As people are interacting with robots in a more human-like way, this can mean that robots can be used for social engineering.

- A key takeaway for creators of robots and the associated software is the need to develop a deeper awareness of security and privacy issues.

- Software included within robots should be patched to the latest version, and any data that is being stored or transmitted should be encrypted.

- Creators should also take care when thinking about the human-robot UX, and explore the potential for unintended consequences if the robot is co-opted into doing bad things.

Subscribe on:

Show Notes

Could you introduce yourself? –

- 01:05 My name is Brittany Postnikoff, or Straithe on Twitter

- 01:10 My current work is researching whether robots can social-engineer people, how they can do it, and what sort of defences we can put in place against those attacks.

Could you provide an overview of your “Robot social engineering” QCon New York 2019 talk? –

- 01:35 Some of the key themes of the talk include talking about how people interact with robots on a social level.

- 01:45 A lot of the research that has been done on human-robots interactions are things like robots holding authority over people, especially if the robot looks to have been given authority.

- 02:05 For example, when we do experiments, there’s usually a researcher in the room, and you generally trust the researcher.

- 02:15 What would happen is the researcher that would explain that the robot is going to say how the work needs to be performed, and the participant needs to listen.

- 02:30 In that case, the researcher is delegating authority to the robot, and people will interact with it appropriately.

- 02:40 I also talked about empathy for robots, whether they can bribe humans and similar experiments.

- 02:55 I introduced social engineering, and talked about robot social engineering attacks that I can see happening soon.

Robots are becoming prevalent within our society – can you give any examples? –

- 03:10 The robots in my talk are very much physical robots – nothing like a Twitter bot that you might interact with.

- 03:20 Roombas are usually the base case when I’m talking about robot social engineering.

- 03:25 There are also robots in airports that help navigating between gates.

- 03:40 There are robots in stores helping to sell things; one called Pepper that helps sells cellphones in malls.

Can you share how security, privacy and ethics differ in the domain of robotics? –

- 04:00 One of my favourite phrases: bugs and vulnerabilities become walking, talking vulnerabilities.

- 04:15 The aspect of physical embodiment that makes the domain of robotics interesting.

- 04:25 If you have a camera or microphone in one of your rooms, and you want to have a private conversation that’s not listened to by Amazon or Google, you might go to another room.

- 04:40 If you have a robot, and a malicious actor has worked their way into that robot, then it could follow you to a new room – all of a sudden there’s new privacy and security issues.

- 04:50 If a malicious person can get into your robot, they have eyes and ears into your home in places that they shouldn’t.

- 05:00 Having a camera that can move into a child’s room or bedroom – you might not notice it if it’s your robot and you’re used to it moving around all the time.

- 05:10 People don’t understand that when a malicious actor takes over your robot, it’s usually indistinguishable from when the robot is acting on its own.

How can we encourage engineers to think about security and privacy when they are designing these kinds of systems? –

- 05:30 I think a big part of it is awareness, which is why I like giving this talk and exposing people to this topic.

- 05:40 If you don’t know that certain attacks can happen, or certain design decisions make these kind of attacks more likely, why would you defend against it?

- 05:50 Building a culture of more security and privacy is something I try and do with these talks.

Are blackbox ML and AI algorithms helping or hindering what’s going on in robotics? –

- 06:20 My research typically avoids things ML and AI, because when you do social robotics work, there’s a concept called Wizard-of-Oz-ing.

- 06:30 It’s like in the movie, when you don’t know until the last moment that (spoiler alert) there’s a man behind the curtain controlling everything.

- 06:40 We use that in research as well, because it’s been shown that people can’t tell the difference between an autonomous robot that is acting on its own, and one that is being controlled by someone.

- 06:55 The important part of my research is the physicality of the robots, so how they gesture or look at people.

- 07:05 If it’s two feet tall, do you interact with it differently if it’s six feet tall?

- 07:10 So we spoof the ML and AI because results show they’ll interact with it the same way whether someone is controlling it or not if they perceive the robot to be capable of interacting on its own.

Can you introduce the topics of social engineering? –

- 07:40 It is a term that is used by the security community, but other terms that people might be familiar with are things like scams, conmen etc.

- 07:55 One of my favourite examples is from the early 2000s, when there was a gentleman who bought large amounts of cheap wine and rebottled it as more expensive types of wine (or relabelled it).

- 08:30 He did the rebottling, but it wasn’t enough – he had to convince people that the wine he had was worth buying.

- 08:45 He had tailor-made suits and fancy cars – he lived the lifestyle of someone who would own expensive bottles of wine – and had a background story prepared.

- 09:10 All that blends into one technique called ‘pretexting’ – there’s information gathering, knowing what you want to talk about, and why.

- 09:30 A lot of it is interpersonal playing on people’s cognitive dissonance between what they expect and what is real.

- 09:40 People often suspend their disbelief if people are believable.

How can robots be used for social engineering? –

- 09:50 Robots have different social abilities that they can use; empathy, authority, bribing, building a trust relationship, and these are a lot of the same things used in social engineering.

- 10:05 Robots can use these techniques is the same way.

- 10:10 We had robots build rapport with people, build a story about how the robot really liked working in the lab but was feeling sick lately and have a virus.

- 10:30 If you watch the video you can see the participants having empathy towards the robot and how bad they felt for it when it was sick.

- 10:40 The robot would say things like: “the experimenters are going to come and reset me – oh no!” and because it was an experiment, the researchers would do that.

- 10:50 People were visibility upset when that happen.

- 10:55 This is the kind of scammers rely on when there’s been a big disaster; con people will be asking for donations to this charity or saying that they’ve lost their home.

- 11:05 There have been experiments where robots have been pan-handling to get money – and people were thinking that the robot needs a home too.

- 11:15 It’s amazing how similar we are treating robots who are able to move as similar to humans.

- 11:20 My goal for the next few years is to try and recreate social engineering experiments but with robots instead.

How do engineers in general mitigate some of the risks you have mentioned? –

- 11:50 You can put security on the robots.

- 11:55 A lot of the robots I have interacted with have had dismal security.

- 12:05 The server software is from 2008, a beta version, has had no updates – and there’s dozens of open CVEs on it – and they are broken when you buy the robot.

- 12:20 That’s something to think about – make sure you can update the software, and someone is checking the security of robots in their home.

- 12:35 One robot I was playing with has a very usable portal when you go to the robot’s web server.

- 12:45 A lot of the robots have their own servers on them.

- 12:50 You can log in, but a lot of people don’t change the default password, so it is easy to get in.

- 12:55 It was easy for me to get in and make the robot do dangerous things.

- 13:00 It’s important to let users know or help them do proper set up.

- 13:10 Engineers could develop processes for people to do the proper set up and change passwords.

What can I do, as a user, to educate myself on these risks? –

- 13:30 Awareness is a good place to start; For example, I like to put my Roomba in a closet when it’s done.

- 13:35 That way, if someone was able to get control of it, they couldn’t do much because it was in a closet.

- 13:40 A lot of Roomba-like robots are now being sold as home guards, and they have 1080 HD cameras on them, and see if anyone is home or when they were there last.

- 14:00 If you have vacations on public calendars in your home, and a controlled robot can see that – it’s a great time to case your home.

- 14:15 By putting the robot in a closet, at least you are protecting yourself from some of those things.

- 14:20 It’s always a good step to reset passwords for all of your devices when you get something new.

- 14:25 Trying to run updates whenever you can is also a good idea.

- 14:30 Specifically when it comes to robot social engineering, if the robot is in your space, there are things you can do to contain it.

- 14:40 If the robot is in a public space, it’s not great to try and contain it because you can get in trouble for messing with someone else’s property.

- 14:50 If you have robots wandering around taking pictures of people’s faces or number-plates, people can be uncomfortable with that.

- 15:00 Having a way to obscure your face can be useful too.

What is the state of interaction between academia and industry? –

- 15:15 As far as I know, I am one of two people in the world looking at this topic, and the first to actively write about joining social engineering and robots together that I can find.

- 15:30 There are small overlaps with other places because this is so inter-disciplinary; academia and industry are doing well on collaboration and interacting with robots.

- 15:45 Interaction between robots has been adopted by industry and is being researched by academia – that space is going well.

- 15:50 Security and robots in general are going quite well – companies are showing up and presenting at conferences, so there is overlap between those spaces.

- 16:05 I haven’t seen as much overlap between academia and industry; I’ve tried to talk to some companies, and they’re not as concerned with things that may happen.

- 16:15 I think it will take an act of attack for things to happen before people start paying attention.

- 16:25 When it comes to robot social engineering specifically, it’s a very new space and I’m looking forward to seeing what will happen.

- 17:05 Because my topic is so inter-disciplinary, there are different groups who have different thoughts about how it should be done.

- 17:15 I feel that it makes my research stronger, because there are so many differing opinions and lack of understanding between groups.

- 17:25 I see my research as being an opportunity to be able to bring multiple groups together and give a nexus to talk in social situations that we otherwise might not have.

What do you think the future is for robotics and software engineering from a sci-fi perspective? –

- 18:00 I don’t think it has to be either software or hardware driven.

- 18:05 That’s one thing that usually gets me in sci-fi; there’s usually only focus on one technology where multiple vulnerabilities probably exist in the same space.

- 18:15 Star wars does it well; you have multiple different types of robots, throughout the whole franchise, who do a variety of different things.

- 18:25 If you look at C3P0, he’s pretty terrible at walking but is very smart.

- 18:30 Then you have R2D2, who doesn’t talk much – there’s some inflection in the voice which gives personality but his communication skills aren’t on the same par as C3P0.

- 18:45 R2D2 is great for flying around in space, using tools specifically.

- 18:55 Then you have some of the newer robots which are fantastic ninja fighters, which are obviously very good at movement, so I think Star Wars has that flexibility.

- 19:05 At the same time, there’s so many different formats for sharing information and processing data, in the Star Wars universe too.

- 19:15 When you think about Matrix, Snowcrash, Westworld, iRobot – there’s probably examples from each one of those things that we’re going to go towards in the future.

- 19:30 That’s one way that industry affects academia is that there’s so much effort people put in to create their robots that they grew up with in movies and make them real.

- 19:45 Star Trek has inspired so much technology since its initial release; it’s a feedback loop, for sure, where our imagination inspires what’s happening in tech.

What’s the best way to learn more about robotics or your topic? –

- 20:15 A lot of what I do is social robots, and less about robotics – if you had me in a room with a lot of roboticists, we wouldn’t have much to talk about other than design of outward appearance.

- 20:30 For hard robotics, it’s a lot of maths – so taking a lot of maths classes; learning how to use ROS, the Robot Operating System; getting experienced with hardware boards.

- 20:45 There’s a lot of books by “No Starch Press” which would help there.

- 20:50 Going to conferences and attending different workshops is helpful too – there’s a few conferences I go to that have workshops that bring you up to base level.

- 21:00 For university courses, trying a human computer interaction course is very helpful because it teaches you how people interact with machines like phones.

- 21:15 A lot of those principles can also be applied to robots.

- 21:20 I would recommend a robotics course if you wanted to get into physical and electrical robot design.

- 21:35 For human computer interaction there’s only a few schools that offer those courses, so choose one of them.

- 21:40 I always recommend an ethics course – take as many of these as you can.

What’s the best way to follow your work? –

About QCon

QCon is a practitioner-driven conference designed for technical team leads, architects, and project managers who influence software innovation in their teams. QCon takes place 8 times per year in London, New York, Munich, San Francisco, Sao Paolo, Beijing, Guangzhou & Shanghai. QCon London is at its 14th Edition and will take place Mar 2-5, 2020. 140+ expert practitioner speakers, 1600+ attendees and 18 tracks will cover topics driving the evolution of software development today. Visit qconlondon.com to get more details.

More about our podcasts

You can keep up-to-date with the podcasts via our RSS Feed, and they are available via SoundCloud, Apple Podcasts, Spotify, Overcast and the Google Podcast. From this page you also have access to our recorded show notes. They all have clickable links that will take you directly to that part of the audio.

Previous podcasts

MMS • Helen Beal

Article originally posted on InfoQ. Visit InfoQ

Algorithmia, an AI model management automation platform for data scientists and machine learning (ML) engineers, now integrates with GitHub.

The new integration integrates machine learning initiatives into organisations’s DevOps lifecycles. Users can access centralised code repository capabilities such as portability, compliance and security. The integration follows DevOps best practices for managing machine learning models in production with the goal of shortening time-to-value for data science initiatives.

Algorithmia’s company history began with an AI algorithm marketplace for data scientists and ML engineers to find and share pre-trained models and utility functions. Launched in 2017, the Algorithmia platform uses a serverless AI layer for scaled deployment of machine and deep learning models. There is also an enterprise offering for those wanting to deploy, iterate, and scale their models on their own stack or Algorithmia’s managed cloud.

GitHub brings together a developers community to discover, share and build software and is a major repository for software developers and data science teams. By integrating with GitHub, Algorithmia enables data science deployment processes to follow the same software development lifecycle (SDLC) organisations already use, via an integration. With this Algorithmia-GitHub integration, users can store their code on GitHub and deploy it directly to Algorithmia and Algorithmia Enterprise. This means that teams working on ML can participate in their organisation’s software development lifecycle (SDLC) and multiple users can contribute and collaborate on a centralised code base, ensuring code quality with best practices like code reviews through pull requests and issue tracking. Users can also take advantage of GitHub’s governance applications around dependency management to reduce the risk of their models utilising package versions with deprecated features.

InfoQ asked Algorithmia CEO, Diego Oppenheimer to tell us more about the new integration.

InfoQ: Why are teams increasingly looking to machine learning as part of their applications?

Oppenheimer: As enterprises accumulate vast amounts of data, the ability to manually process it rapidly becomes infeasible. The rate at which machine learning models can produce actionable outputs is exponentially greater than traditional applications. A healthy elastic machine learning lifecycle requires little overhead, which could cut down on expensive headcount. As companies grow in size, workflow can become siloed (across departments, offices, continents, etc). A centralised ML repository helps with cross-company alignment. As a use case example: Instantaneous customer service or product function is a requisite for any business. If a customer has a negative experience, they are unlikely to continue doing business with you. To that end, ML opens doors to instantaneous, repeatable, scalable service features to ensure positive customer experience, and models can iterate quickly, meaning improvements can happen in far less time than going through a traditional review and update process.

InfoQ: Why would people want to use Algorithmia?

Oppenheimer: Algorithmia seeks to empower every organisation to achieve its full potential through the use of AI and machine learning. Algorithmia focuses on ML model deployment, serving, and management elements of the ML lifecycle. Users can connect any of their data sources, orchestration engines, and step functions to deploy their models from all major frameworks, languages, platforms, and tools. Algorithmia Enterprise offers custom, centralised model repositories for all stages of the MLOps and management lifecycle, enabling data management, ML deployment, model management, and operations teams to work concurrently on the same project without affecting business operations. Data scientists are not DevOps experts, and DevOps engineers are not data scientists. Time is wasted when a data scientist is tasked to build and manage complex infrastructure, make crucial decisions on how to scale ML deployments efficiently and securely, and all without incurring excessive costs. Algorithmia Enterprise customers don’t have to worry about wasting resources getting to deployment. We make it easy for both teams to work together.

InfoQ: Do you see data scientists and ML engineers regularly embedded into product teams? Or are software engineers in these teams upskilling to these roles?

Oppenheimer: The short answer is yes, data scientists and ML engineers are regularly embedded into product teams, though the configuration of teams is still in early stages so it’s not yet a definitive migratory pattern. The most successful ML teams are ones directly tied to a product or business unit because of how direct the impact can be. We see more and more centres of excellence in data science and ML in which ML teams are assigned to a product for a period of time to develop capabilities.

InfoQ: Will you also be announcing a GitLab integration?

Oppenheimer: Algorithmia’s source code management (SCM) system provides flexibility and options for ML practitioners. We have created a flexible architecture that allows for the integration of other SCMs in the future. Our latest integration with GitHub opens doors for a lot of models sitting in GitHub repositories to go directly into production. Algorithmia will be releasing other integrations in upcoming product releases.

InfoQ: What are the most common ML patterns you see today?

Oppenheimer: Data science teams are rapidly growing across all industries as companies rush to get ahead of the AI/ML curve. Top business use cases for machine learning are models to reduce costs, models to generate customer insights, and models that improve customer experience. Most ML-minded companies are within the first year of model development and only 9 percent of organisations rate themselves as having sophisticated ML programs. Half of companies doing ML spend between 8 and 90 days deploying one model. For companies with tens or hundreds of models, this timeline can drastically delay ROI. The biggest challenges reported during ML development are with scaling models up, model versioning and reproducibility, and getting organisational alignment on end goals. AI and ML budgets are growing across all industries, with financial services, manufacturing, and IT leading the charge of increasing their budgets by 26-50 percent. Determining what constitutes ML success varies by job role. Executives and C-level directors deem a return on investment the key success indicator according to our latest State of Enterprise Machine Learning Report.

InfoQ: Are there any particular industries that are leading adoption in this space?

Oppenheimer: We see ML growth across all industries, with ML market growth from $7.3bn in 2020 to $30.6bn in 2024 [43% CAGR] according to Forbes. However, the financial, manufacturing, and IT sectors do seem to be leading the way with increased budgets, well-defined use cases, and models in deployment.

InfoQ: What is your vision for your company in the next 12 to 24 months?

Oppenheimer: Algorithmia is focused on enabling organisations to take advantage of ML and AI to improve their businesses. AI is the most significant technological advancement in our lifetime and it will quickly become a core part of almost every business in the same way that the internet played a huge transformational role since their inception. Our organisation is focused on solving the last mile to delivering these capabilities to line-of-business applications in a scalable and manageable way. Integration with current organisational software development lifecycles and IT infrastructure is key so we will continue building and delivering flexibility at the pace that technology is advancing. We aim to partner with data science, DevOps, product, and executive team to reduce the time to market and allow them to create a competitive advantage with their ML investments.

The GitHub integration is now available to algorithmia.com public users and for existing Algorithmia Enterprise customers. The 2020 State of Enterprise Machine Learning Report is available here.

MMS • RSS

Article originally posted on InfoQ. Visit InfoQ

Transcripts

Vidstedt: I’m Mikael Vidstedt. I work for Oracle, and I run the JVM team, and I’ve been working with JVMs my whole career.

Stoodley: I’m Mark Stoodley, I work for IBM. I am also the project lead for Eclipse OpenJ9, which is another open-source Java virtual machine. I’ve also spent my whole career working on JVMs, but primarily in the JIT compiler area.

Tene: I’m Gil Tene. I’m the CTO at Azul Systems, where we make Zing and Zulu, which are JVMs. I have been working on JVMs, not for my whole career, just the last 19 years.

Kumar: I’m Anil Kumar from Intel Corporation. I can say I’ve been working at on the JVMs for the last 17 or 18 years, but not in the way these guys on the left side are talking. I’m more in showcasing how good they are. I also chair the committee at SPEC which made the OSGjava benchmark. If you heard about SPECjbb2005, SPECjbb2015, SPECjvm, SPECjEnterprise, they all had come out of the company Monica [Beckwith] and I have worked on. Anything related to how to improve these benchmarks better, how you can use them or what issue you see, perfectly happy to answer those things. We are working on a new one, so if you have any suggestions…

Kuksenko: I am Sergey Kuksenko. I am from Oracle, from Java performance team. My job is making Java JDK, JVM, whatever is faster, probably for the last 15 years.

GC and C4

Moderator: One of the things that we have in common in this panel is startup and JVM futures and responsiveness. Everybody has contributed in some way or form to improving the responsiveness of your application if it runs on the JVM. If you have any questions in that field, with respect to, say, futures, then I think talking to Mark [Stoodley] about that would be a good option, or you can just start talking to Gil [Tene] as well, where he can tell you everything about LTS, MTS, and anything in between. There’s an interesting thing that I wanted to mention about ZGC and as well as C4. Maybe you want to talk about C4. Do you want to start talking about C4 and how it changed the landscape for garbage collectors?

Tene: I did a talk yesterday about garbage collection in general. I’ve been doing talks about garbage collection for almost 10 years now. The talk I did yesterday was actually a sponsored talk, but I like to put just educational stuff in those talks too. I used to do a GC talk that was very popular. I stopped doing it four or five years ago, because I got sick of doing it, because it’s the only talk anybody wanted me to do. The way the talk started is I was trying to explain to the world what C4 is, and it turned out that you needed to educate people on what GC is and how it works. We basically ended up with a one-hour introduction to GC, and that’s popular. What I really like in the updated thing is, when I went back and looked at what I used to talk about and how we talk about it now, I used to explain how GCs work right now and how this cool, different way of doing it that’s solving new problems, that is needed, which is C4 and Zing, does its thing and how it’s very different and how I’m surprised we were the only ones doing it.

Yesterday I was able to segment the talk differently and talk about the legacy collectors that still stopped the world and the fact that all future collectors in Java are going to be concurrent compacting collectors. There are three different implementations racing for that crown now. I expect there to be three more, probably. I wouldn’t be surprised if there’s a lot of academic thing. There’s finally a recognition that concurrent compaction is the only way forward for Java to keep actually having the same GC in the next decade. It should have been for this decade, but I’ll take next decade. C4 probably is the first real one that did that. We’ve been shipping it for nine years. We did a full academic peer review paper on it in 2011. It is a fundamental algorithm for how to do it, but there’s probably 10 other ways to skin the cat. The cat is concurrent compaction. Everybody needs to skin it.

Vidstedt: I think you’re absolutely right. I think the insight has been that, for the longest time, throughput was basically the metric. That’s what we wanted Java to be really fast on the throughput side, and then, maybe 10, 15 years ago was when we started realizing that, “Yes, we’ve done a lot of that.” It’s not like we can sit back and just relax and not work on it, but I will say that the obvious next step there was to make everything concurrent and get those pause-times down to zero. Yes, lots of cool stuff happening in that area.

Stoodley: That’s actually a broader trend, I think, across all JVMs. Throughput did use to be the only thing that people really were focused in carrying about. The other metrics like responsiveness, like startup time, like memory footprint, and all of these kinds of metrics are all now coming to the floor, and everybody’s carrying about a lot of different things. It introduces a lot of interesting challenges in JVMs, and how do you balance what you do against all of those metrics, how do you understand, how do you capture what it is that the person running a Java application wants from their JVM in terms of footprint, in terms of startup, in terms of throughput, in terms of responsiveness, etc. It’s a very interesting time to be a JVM developer, I think.

Tene: I think the reason you’ve heard how many years we’re all at it is because it’s very interesting to work on JVMs.

Different Implementations of Compacting Concurrent Collectors

Participant 1: In the same way that the legacy collectors let your split and try it off between latency and throughput, I was curious see what the panel thinks about the different implementations of compacting concurrent collectors these days and how you would say they’re differentiating themselves in their runtime profiles? For a little bit of context, I’ve been using Java for 20 years. I haven’t been on the JVM side. I’ve been on the other side. I’ve been causing problems for you guys for 20 years. By the way, not everyone’s just interested in the GC talks. We did coordinate in the mission talks. That was remarkable. I was just curious to see if you could reflect on that, how do you see the different collectors differentiating themselves in that low cost, time, low latency space.

Tene: It’s hard to tell you. I think they’re still very evolving, and where they were aligned and whether there will differentiation on throughput versus responsiveness is still a question. I think there are multiple possible implementations that all could be good at both latency and throughput, that all myth about you have to trade them off, it’s false. There’s simple math that shows a concurrent collector will beat a stop-the-world collector on efficiency. There is no trade-off. Forget about the trade-off. There are implementation choices and focuses, for example, memory elasticity, focusing on small footprint versus large, is fragmentation a problem or not, which battles do you want to pick and compare it. We might find that there’s some rounded way of handling all of them, or maybe there’s specialization. I think there’s a lot for us to learn going forward.

For example, for us, with Zing, we probably started from the high performance, high throughput, low latency world, and we’ve been slowing coming towards the small. Today, you can run it in containers with a couple cores and a couple gigabytes in it. It’s great. For us, 200-megabyte seems really, really small, like tiny. Then you have these guys, they’re starting there and actually trying to compact from there. Those are very different concerns. I don’t know exactly what line, size of heap, size of footprint, maybe even throughput the crossovers are. They’re not architectural. They’re more about the focus of that implementation. I think that over the next three, four, five years, we’re going to see multiple implementations evolving, and they’ll either have an excellence in one field or wide breadth or whatever it is. Obviously, I think that the one we have now in production is the best, but I fully expect to sit here with a panel of five other guys comparing what we do well.

Stoodley: I would agree with that. I think, for us, it’s still early days. We’re still building out our technologies in this area. There’s still a lot to do just to get to state of the art in the area. There’s lots of interesting things to do, and we’ll see what it looks like when we get there. In the end, how successful we’ll be and whether there will be differentiation is going to come down to people like yourselves asking us questions and showing us examples, giving us things to prove how well it works or how well it doesn’t work. That will drive how well-developed these things are going forward. We need your help.

Realising New Features

Participant 2: Can I ask a follow-up question? When are we going to deprecate or take out CMS and G1 from the options?

Vidstedt: I have good news for you. Eight hours ago, I think, now, approximately, CMS was finally actually removed from the mainline codebase.

Tene: This is for 15 or 14?

Vidstedt: We never promise anything in terms of releases, but this is mainline JDK. The next release that comes out is 14, in March. That much I can say.

Participant 2: [inaudible 00:11:54]

Vidstedt: The reason why I’m hesitating here is that the only time to tell when a feature actually is either shipped or not in the release is when the release has likely been made. We can always change our minds. Basically, I will say that this is unlikely for CMS, but if people come around and say, “Hey, CMS, what’s actually the greatest thing since sliced bread?” On top of that, we’re willing to help maintain it because that’s the challenge for us.

Tene: That one’s the big one. Ok, all you guys, let’s say it great. Are you going to maintain it?

Vidstedt: Exactly. We’re not hesitating that to our use cases where CMS is very great but the fact is that it has a very high maintenance cost associated with it. That’s the reason why we’ve looked at deprecating and now finally removing it. Again, you’ll know on March, whichever day it is, if it’s in 14 or not, but, I’d bet on it not being there.

Tene: The other part of that question was the really interesting one. When are we going to deprecate G1?

Vidstedt: I think this is back to how things are evolving over time. What I know about myself is that I’m lousy in predicting the future. What we typically do is we listen to people that come around, and they say “We have this product and it’s not working as well as we want it to,” be it that on the throughput side or on the pause-time side, or I’m not as efficient as I could, or footprint, as Gil [Tene] mentioned. All of those things, those help drive us. We make educated guesses, obviously, but in the end, we do need the help from you figuring out what the next steps really are. G1 may, at some point, well be deprecated. I’m actually hoping that it will be, because that means that we’re innovating and creating new things. Much like CMS was brilliant when it was introduced 20 years ago or so, it has served its purpose, and now we’re looking into the future.

Tene: Let me make a prediction, and then we’ll look at the recording in the future. I want people to remember that yes, I’m that Zing guy and C4 and all that, but we also make Zulu, which is straight OpenJDK with all the collectors that are in it, including CMS that’s about to go away. I think G1 is a fine collector in Zulu. It’s probably the best collector in the Zulu thing, especially in Java 11 and above, as you’ve said. I think it’s around for a while. If I had to predict, I think we’ll see maturation of the concurrent compacting collector that can handle throughput with full generational capability in OpenJDK somewhere in the next three to five years to a point where it handles the stuff, it’s not experimental, maybe not default yet but can take it. At that point, you’re going to have this overlap period between that and existing default one, which is G1. I think that will last for another five-plus years, at least, before any kind of deprecation. If I had to guess, G1’s not going away for at least a decade, probably 15 years. If it goes away in 15 years, we’ll have really good things to replace it with.

Vidstedt: Exactly. Thanks for saying that. We try to be at least very responsible when it comes to deprecating and removing things, because we, again, realize that old things have value in their respective context, let’s say. CMS, for example, I remember the first time I mentioned that we were going to deprecate it. It was back in 2011 at JavaOne. I know that people had been talking about it before, but that was the first time I told people in public. Then we actually deprecated it in, I want to say 2000-something, a few years ago. We’ve given people some amount of, at least, heads up that it’s going away. We like to think that the G1 collector is a good replacement, and there are other alternatives coming as well. We do spend significant time on removing things. We realized that that comes with a cost as well.

Stoodley: Interestingly, in OpenJ9, we have not been removing collectors so much. We do have some legacy collectors that are from 15 years ago, things called optavgpause, which nobody really believes that it actually does optimize for average pause anymore. Our default collector is a generational concurrent similar to what CMS is, and we’ve kept that. We have a region-based GC. It’s called Balanced. We are working on pause-less GC. The irony is not lost on me that I have to pause while saying pause-less, because it’s very different than saying pauseless. Anyway, we have all of these things. one of the interesting features about OpenJ9 is we build the same source code base into every JDK release. JDK 8, 11, and 13 right now, all have the same version of OpenJ9 in them, in their most recent J9 release. Deprecating some of the things like GC policies are a little bit harder for us, but, on the plus side, it forces us to keep investing in those things and making sure they work really well in providing lots of options for people, depending on what it is that they want from their collector.

Tene: Actually, picking up on what you just said, the same JVM across the releases, we do the same on our Zing product. The same exact JVM is going for Java 7, 8, 9, 11, 13. HotSpot had this at some point. For a very short period of time, it was actually our domain thing, the HotSpot Express release model, which I personally really like. I really liked having a JVM that can be used across multiples. There’s goodness about the cool good things that have worked on for Java 14 bringing speed to Java 11 and that stuff. I would love to see a transition, a way to do that again, and it does have this little “deprecation is harder” problem, but only at the JVM level, not at the class library level. HotSpot Express, I think, was a great idea in the OpenJDK world. I don’t know what you think about it.

Vidstedt: I think there are definitely pros and cons. Obviously, you can get more of the investment and the innovation that is happening in mainline or more recent releases, let’s say, all the way to the old releases as well. What I think we found was that it came into existence at a time where JDKs were not actually being shipped. JDK 7 was effectively stalled for I think it was 5.5 years or something like that. We needed to get the innovation into people’s hands, so, therefore, we had to deliver the JVM into the only existing release at the time, which was JDK 6. Once the JDK started coming more rapidly and more predictively, I’m going to say that the reason for backporting things was less of an issue, let’s say, and also, the cost again of making sure that everything works. There’s a lot of testing and stuff that needs to happen to make sure that the new innovation not only works on the mainline version but on all the other versions in the past as well. There are trade-offs, some are good, are more challenging.

Tene: I would say that the community benefit that I would most put on this is time-to-market for performance improvement. As it stands today, you invest usually in the latest. They just get better. The GCs get better. The runtime gets better, whatever it is. Lots of things get better, but then those better things are only available as people transition and adopt. When we’re able to bring them to the current dominant version, you get a two-year time-to-market improvement. It’s exactly the same thing, but people get to use it for real a couple of years earlier. That’s really, I think, what’s driving us in the product, and probably you guys have been in the same way. I’d love to see the community pipeline do that too, so Java 11 and 13 could benefit from the work on 15 and 17 at the performance side.

Stoodley: It’s also from platform angle too so improvements in platforms. Containers weren’t really a thing when JDK 8 came out, but they become the thing now. If most of the ecosystem is still stuck on JDK 8, as a lot of the stats say, then it forces you to backport a whole bunch of stuff, and it’s extra work to do that backporting in order to bring that support for modern platforms into the place where everyone is. From our perspective, it’s just an easier way to provide that support for modern platforms, modern paradigms that would otherwise have to take a change in API perhaps. You have to jump the modularity bound, the hurdle, or whatever it is that’s holding you back from moving from 8 to 11. That’s getting easier and easier for a variety of reasons, and the advantage of doing that is getting greater and greater. Don’t think that I’m trying to discourage anyone from upgrading to the latest version of the JDK. I want people running all modern stuff. I recognize that it’s a reality for a lot of our customers, certainly from an IBM standpoint and stakeholders from the point of view of our open-source project. It’s just the reality that they’re on JDK 8. If we want to address their immediate requirements, JDK 8 is where you have to deliver it.

Vidstedt: This is not going to be a huge dataset, I guess, but how many people in here have tried something after 8, so 9 and later? For how many did that work?

Participant 3: We’re running 11. We’re running a custom build of 11 in production with an experimental collector. It’s a small sample set, highly biased.

Vidstedt: Ok. Out of curiosity, what happened? Ok, didn’t work.

Tene: Actually, when I look around, I start with that question, “How many have tried,” and then I say, “How many have tried in production?” Ok. How many are in production? Same number, good. How many don’t have aid in production anymore? There you go. That’s a great trend, by the way, because across our customer base for Zulu, for example, which we’ve been tracking for a while. In the last two months, we’ve started seeing people convert their entire production to running on 11. Running on 11 doesn’t mean coding to 11. It means running on 11. Because that’s the first step. I’m very encouraged to see that in real things. We have the first people that are doing that across large deployments, and I think that’s a great sign. Thank you, because you’re the one who makes it good for everybody else, because you end up with a custom build.

Challenges in Migrating

Participant 4: I was just going to share that the challenges for us getting from 8 to 9 was actually the ecosystem. The code didn’t compile, but that was just Java 9’s modules. I completely understand the reason why modules exist. I totally understand the ability to deprecate and removing things. Used it, loved it, many years ago. Can’t believe it’s still in the JDK. Our biggest challenge was literally just getting the module system working from 8 to 9. We couldn’t migrate because the ecosystem wasn’t there. We had to wait for ByteBuddy, for example, to get up on to 9. Going from 9 to 10 and 10 to 11 was literally IntelliJ migrate to the next language version. I have lots of challenges doing that. I tried going from 8 to 11. That was a complete abject failure. It was just so complicated to get there. Went 8 to 9, got everything working on 8 to 9, and then just went 9 to 10 and 10 to 11. 9 to 10, 10 to 11 was like a day’s work, 8 to 9 was about 3 months, because we had to wait for the ecosystem. It was simply not possible, but that was a long time ago.

Tene: I think your experience is probably not going to be the typical, because a lot of the ecosystem took a while, and it went straight to 11. As a result, you won’t have it on 9. There’s a bunch of people that are doing it now rather than when you did it. The 9 and 10 might actually be harder to jumping to 11 because there’s things that only started supporting in 11. For most people, I think we’re going to see a jump straight from 8 to 11. They’re both out there.

Participant 4: Yes, we started our 11 migration basically the day that 11 was out, and we tried to migrate. We’re very early.

Vidstedt: I think what we’re also seeing is that the release cadence that we introduced started with 9, but 10 was obviously the first one that then came out 6 months later. It takes time to go to 9, to some extent. There are a few things that you do need to adjust. ByteBuddy is a good example of that. What I think we saw was that the ecosystem then caught on to the release cadence and realized that there isn’t a whole lot of work moving between releases. As a matter of fact, what we’re seeing more now is that older relevant libraries and frameworks are proactively looking at mainline changes and making sure that once the releases do come out, it may not be the first day, but it’s at least not all that long after the release that they ship support versions. I think it’s going to be easier for people to upgrade going forward, not just because the big change really was between 8 and 9, but also because the libraries are more active in keeping up.

Tene: I like to ask the people for support and voice, and a good example of one that I’d like to voice, please move faster, is Gradle. I really wish Gradle was 13-ready the day 13 came out, and I’ll ask them to do it when 14 comes out so it’ll be ahead of time. Please, wherever it is that you can put your voice to issues and stuff, make it heard.

Efficiency of GCs

Kumar: After one question on the part of you guys talking a lot on the GC side, one of the trend we start seeing is many of them deploying in the container, and the next part, in the interaction with the customer, I’m seeing the use of the function as a service. That use case I wanted to check, is anyone of you here planning to deploy that yet? Because I do see some use. When that will happen, I don’t think right now the GCs are considering that case of just being up 500 milliseconds.

Stoodley: Epsilon GC is built for exactly that use case.

Tene: Don’t do GC, because there’s no point, because we’re going to be deprecating.

Kuksenko: It wasn’t built for that use case, it was a different purpose, but it’s extensively used for that.

Stoodley: It works well there.

Kuksenko: I’d rather say, it doesn’t work well.

Tene: It does nothing, which is exactly what you need to do for 500 milliseconds. It does not work very well, yes.

Kumar: Any thoughts about adding those testing for what GC might be good for function as a service situations? The reason I’m asking this is that 10, 15 years ago, when the Java came [inaudible 00:27:55], people were seeing the same issue where it’s C-program, you get the same reputability, it takes that many microseconds to do Java. It can be anywhere from one millisecond to one minute. Function as a service, when in the cloud, people are looking for the guarantees, what is your variability for that function within that range. I feel, right now, the GC will be in trouble at the rate we saw [inaudible 00:28:20].

Tene: I think we’re measuring the wrong things, honestly, and I think that as we evolve, the benchmarks were going to need to follow with the actual uses will play. If you look at function as a service right now, there’s a lot of really cool things out there, but the fundamental difference is between measuring the zero-to-one and the one-to-many behaviors. Actual function as a service deployments out there, the real practical ones are all one-to-many. None of them are zero-to-one. There are experiments of people playing with. Maybe soon, we can get to a practical zero-to-one thing, but measuring zero-to-one is not where it is, because it takes five seconds to get a function as a service started. It’s not about the GC, it’s not about the 500 milliseconds, microseconds, or whatever it is. The reason nobody will stick around for just 500 milliseconds is it costs you 5 seconds to start the damn thing.

Now, over time, we might get that down, and the infrastructure might get that down. It might start being interesting. I fundamentally believe that for function as a service to be real, it’s not about short-lived, it’s about elastic start and stop when you need to. The ability to go to zero is very useful, so the speed from zero is very important, but it’s actually the long-lasting throughput of a function that is elastic that matters. Looking at the transition from start to first operation is important, and then how quickly do you get quick is the next one, and then how fast are you once you’ve been around for a while. Most function as a service will be running for hours, repeating the same function and the same thing very efficiently. Or, to say it in a very web-centric way, we’re not going to see CGI-bin functions around for a long time. CGI-bin is inefficient. Some of you are old enough to know what I’m talking about. We’re not going to see the same things. The reason we have servlet engines is because CGI-bin is not a practical way to run, and I wish the Hadoop people knew that too.

The behaviors that we’re seeing now that I think are really intriguing is that the AOT/quickstart/decide what to perform first at the edge, the eventual optimization in the trade-off between those, can you do this and that, rather than this or that. Then GC within this is the smallest concern for me, because I think the JIT compilers and the code quality are much more dramatic, and the CPU spent on them at the start, which you guys do some interesting stuff around, I think is very important. GCs, they can adapt pretty quickly. We probably are seeing just weird nobody planned for these cases, but it’s very easy to work them out of the way. They don’t have a fundamental problem in the first two seconds, and all we have to do is just tweak around the heuristics so that it will get out of the way. It’s the JIT compilers and the code quality that’ll dominate, I think.

Kumar: The other cases I’m seeing, like the health care or others where you could have a large image or a directory comes in, they want to shut it down. They don’t want the warm instant something due to security and other things. You have that in your heap or something where you don’t end up doing the GC immediately. You can’t imagine to analyze and GC, and so you’re not there in the responsiveness.

Tene: You see that people want to shut it down because it’s wasteful, but that’s after it’s been up and doing nothing for minutes, I think.

Vidstedt: I completely agree with you. The time to warm or whatever we want to call it is a thing and a lot of that is JIT compilation and getting the performance cold in there. The other thing is class loading and initialization in general. I think that the trend we’re seeing and we’ve been working on is attacking that in two different ways. The first one is by offloading more of that computation, if you so will, to before you actually start up the instance. With JDK 9 and the model system, we also introduced the concept of link time. Using J-Link, you can create something ahead of time before you actually start up the instance where you can bake in some of the state or precompute some of that state so that you don’t have to do it at startup. That’s one way of doing that. Then, we’re also working on language and library level optimizations and improvements that can help make more of that stuff happen, let’s say, ahead of time, like statically compute things instead of having to execute it at runtime.

Tene: OpenJDK 12 and 13 have made great strides in just things like class-data sharing that I think it’s cut it in half.

Vidstedt: Yes. I know, it’s been pretty impressive. We have spent a lot of time. We have especially one guy in Stockholm who spends like 24 hours a day working on just getting one small thing at a time improved when it comes to startups. He picks something for the day or the week and just goes at it. CDS has been improving a couple of different ways, both on the simplicity side. It’s actually, class-data sharing, for those of you who don’t know it, is basically taking the class metadata. This is not the cold itself, but it’s like all the fields and bytecodes, all the rest of the metadata around classes. Storing that off is something that looks very much like a shared library. Instead of loading the classes JAR files or whatever at startup, you can just map in the shared library, and you have all the relevant data in there.

Simplicity in the sense that it’s been there for quite a while since JDK 5 if I remember correctly, but it’s always been too hard to use. We’ve improved on how you can use it. It isn’t always basically start up the VM and the archive will get created and mapped in next time. The other thing is that we’ve improved on what goes into it, both more sharing, more computations stored in the archive. Also, we’ve started adding some of the objects on the Java heap bin there as well. More and more stuff is going into the archive itself. I think what we’ve seen is that the improvements – I’m forgetting the numbers right now. We’ve talked about this at Oracle Code One earlier this year – the startup time for Hello World and Hello Lambda and other small applications has been improved. It’s not a magnitude, but it’s significant improvements, let’s say.

Kuksenko: I have to add that we work with startup from thought areas. We have AOT right now. We finally did CDS, class data sharing, not for all JDK class, but it’s possible to use it for your application class-data.

Tene: AppCDS.

Kuksenko: AppCDS is our second option. The third option, class and mainland, some of the guys pass through all static initialization of our class libraries using stuff. Nobody cares when JVM started. People cared when the domain is finally executed, but we have to pass all of that static initialization before. It was also reduced.

Tene: The one unfortunate name here is called class data sharing because the initial thing was how desktops don’t have to have multiple copies of this. We probably should call it something like class computation preloading. It’s saving us all the class loading time, all the parsing, all the verification, all the computation of just taking those classes and putting their memory in the right format and initializing stuff. It’s the sharing part, that’s the bit.

Vidstedt: I agree. We’re selling the wrong part of the value at this point. You’re right.

Stoodley: We got caught by that too, because we introduced shared classes cache in Java 5. It’s true that it did share classes when we introduced that technology, but very quickly, after Java 6, we started storing it ahead of time, compiled code in there, and now we’re starting profile data in there and other metadata and hints and all kinds of goodness that dramatically improved the startup time of the JDK, of applications running on the JDK, even if you have big Java EE apps. It’s sharing all of that stuff or storing all of that stuff and making it faster to start things up.

Tene: I think that’s where we’re going to see a lot more improvement and investment too. I think the whole CDS, AppCDS, whatever we call it going forward, and all the other things, like stored profiles, we know how to trigger the JITs already, we don’t have to learn on the back of 10,000 operations before we do them, that stuff. We call that ReadyNow. You guys have a different thing for this. We have JIT stashes or compile caches or whatever we call them. I think they all fall into the same category, and the way to think of the category is we care about how quickly a JVM starts and jumps into fast code. We’re winding this big rubber band, then we store our curled up rubber band with the JVM so it could go, “Poof,” and it starts. That’s what this CDS thing is and all the other things we’re going to throw into it over time, but it’s all about being ready to run really quick. The idea would be we have a mapped file, we jump into the middle of it, and we’re fast. That’s what we all want, but we want to do this and stay Java.

Java as a Top Choice for Function as a Service

Kumar: I think the second part of that one is, ok, we showed that it could be fast, it could be pretty responsive. I have been in Intel and other customers across many environments, not just Java. When it comes to function as a service, which is a rising case in the cloud, I don’t see that Java is still at the top choice. I see Python, I see Node.js, and other languages. Is there anything at the programming level being done so people see Java as the top choice for the function as a service?

Stoodley: Do you think that choice is being made because of performance concerns or because of what they’re trying to do in those functions?

Kumar: What they’re trying to do and how easy it is to be able to set up and do those things.

Tene: I think that function as a service is a rapidly evolving world, and I’ve seen people try to build everything in it. It seems to be very good for Glue right now, for things like occasional event things, not event streaming, but handle triggers, handle conditions. It’s very good for that. Then, when you start running your entire streaming flow through it, you find out a lot. I think that when you do, that’s where you’re going to end up wanting Java. You could do Java, you could probably do, I don’t know, C++ or Rust. Once you start running those things, what matters is not how quickly it starts, it’s how it performs.