Month: August 2018

MMS • RSS

Article originally posted on Data Science Central.

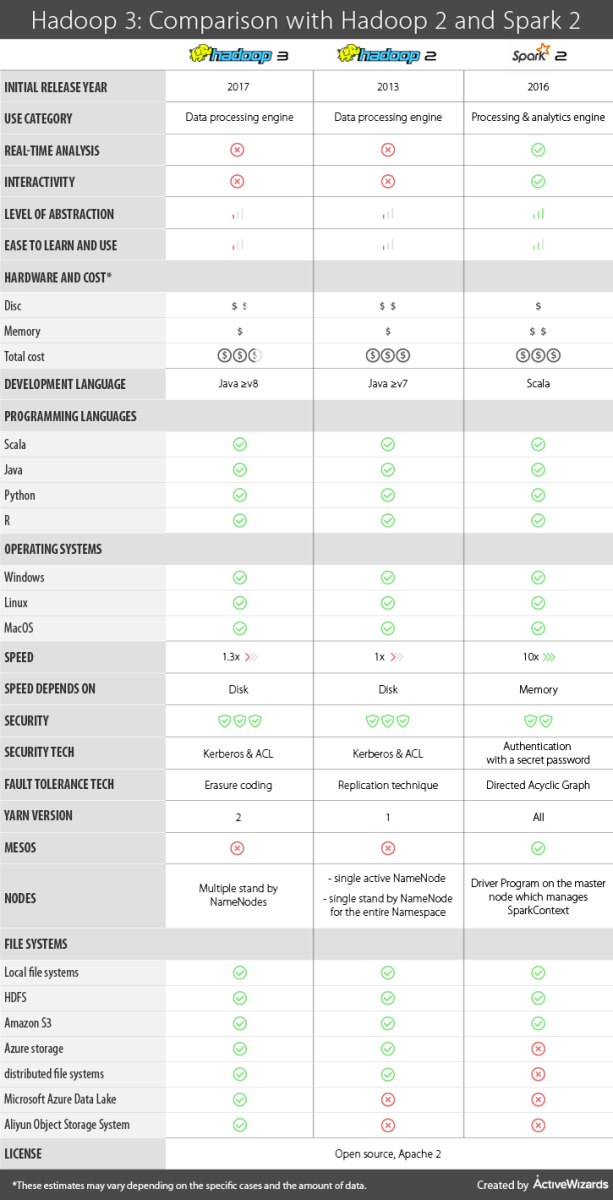

The release of Hadoop 3 in December 2017 marked the beginning of a new era for data science. The Hadoop framework is at the core of the entire Hadoop ecosystem, and various other libraries strongly depend on it.

In this article, we will discuss the major changes in Hadoop 3 when compared to Hadoop 2. We will also explain the differences between Hadoop and Apache Spark, and advise how to choose the best tool for your particular task.

General information

Hadoop 2 and Hadoop 3 are data processing engines developed in Java and released in 2013 and 2017, respectively. Hadoop was created primarily to analyze data stored on disk in large jobs, an approach known as batch processing. Therefore, native Hadoop does not support real-time analytics or interactive use.

Spark 2.X is a processing and analytics engine developed in Scala and released in 2016. Real-time analysis of information was becoming crucial, as many giant internet services relied heavily on the ability to process data immediately. Consequently, Apache Spark was built for live data processing, and it is now popular because it can efficiently handle live streams of information and process data interactively.

Both Hadoop and Spark are open source and released under the Apache License 2.0.

Level of abstraction and difficulty to learn and use

One of the major differences between these frameworks is the level of abstraction, which is low for Hadoop and high for Spark. As a result, Hadoop is more challenging to learn and use, because developers must hand-code many basic operations. Hadoop is only the core engine, so advanced functionality requires plugging in other components, which makes the system more complicated.

Unlike Hadoop, Apache Spark is a complete tool for data analytics. It has many useful built-in high-level functions that operate on the Resilient Distributed Dataset (RDD), the core concept in Spark. Spark also ships with many helpful libraries: for example, MLlib provides machine learning algorithms, and Spark SQL can be used to run SQL queries.

Hardware and cost

Hadoop works from disk, so it does not need much RAM to operate, and disk capacity is generally cheaper than large amounts of RAM. Hadoop 3 can require less disk space than Hadoop 2 because of changes in how fault tolerance is provided.

Spark needs a lot of RAM to operate in in-memory mode, so its total hardware cost can be higher than Hadoop's.

Support of programming languages

Both versions of Hadoop support several programming languages through Hadoop Streaming, but the primary language is Java. Spark 2.X supports Scala, Java, Python, and R.
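To make the Streaming model concrete, here is a minimal Python sketch of a word count. With Hadoop Streaming, the mapper and reducer are ordinary programs that read lines on standard input and write tab-separated key/value lines on standard output, and the framework sorts the mapper output by key before the reducer sees it. The function names and the in-memory `run_locally` driver are hypothetical; the driver only imitates the sort step so the logic can be tried without a cluster.

```python
from itertools import groupby
from typing import Iterable, Iterator, List


def mapper(lines: Iterable[str]) -> Iterator[str]:
    """Emit one 'word<TAB>1' line per word, as a Streaming mapper would print."""
    for line in lines:
        for word in line.split():
            yield f"{word.lower()}\t1"


def reducer(sorted_lines: Iterable[str]) -> Iterator[str]:
    """Sum the counts for each word; input lines must already be sorted by key."""
    pairs = (line.rstrip("\n").split("\t", 1) for line in sorted_lines)
    for word, group in groupby(pairs, key=lambda kv: kv[0]):
        yield f"{word}\t{sum(int(count) for _, count in group)}"


def run_locally(lines: Iterable[str]) -> List[str]:
    """Hypothetical local driver: map, sort (standing in for Hadoop's shuffle), reduce."""
    return list(reducer(sorted(mapper(lines))))


result = run_locally(["the cat", "The dog"])
# result == ['cat\t1', 'dog\t1', 'the\t2']
```

On a real cluster, the mapper and reducer would live in separate scripts that iterate over `sys.stdin` and print their output lines, and you would submit them through the Hadoop Streaming JAR.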

Speed

Generally, Hadoop is slower than Spark because it works from disk and cannot cache data in memory. Hadoop 3 can run shuffle-intensive MapReduce jobs around 30% faster than Hadoop 2, thanks to a native implementation of the map output collector added to MapReduce.

Spark can process data in memory up to 100 times faster than Hadoop, and up to 10 times faster when working from disk.

Security

Hadoop is considered more secure than Spark because it uses Kerberos (a computer network authentication protocol) and supports Access Control Lists (ACLs). Spark, in turn, provides authentication only through a shared secret.

Fault tolerance

In Hadoop 2, fault tolerance is provided by replication: each block of data is copied to create 2 additional replicas, 3 copies in total with the default settings. This means that instead of storing 1 copy of the data, Hadoop 2 stores three, which wastes a lot of disk space.

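In Hadoop 3, fault tolerance can also be provided by erasure coding. This method recovers a lost block from the surviving data blocks and parity blocks. In the simplest scheme, Hadoop 3 creates one parity block for every two data blocks, so either data block can be rebuilt from the other one and the parity block. This requires only 1.5 times the raw disk space, compared with 3 times for replication in Hadoop 2. Hadoop 3's default Reed-Solomon policy (RS-6-3: six data blocks plus three parity blocks) has the same 1.5x overhead while tolerating the loss of any three blocks, so the level of fault tolerance is maintained, or even improved, with much less disk space. Erasure coding is enabled per directory; replication remains the default.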
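The parity idea can be tried out in a few lines of Python. This sketch uses the simplest layout mentioned above, one parity block computed as the byte-wise XOR of two data blocks, and also computes the raw-storage overhead of a layout with a given number of data and parity blocks. It is an illustration only: the block contents and helper names are hypothetical, and HDFS's Reed-Solomon policies use more elaborate arithmetic than plain XOR.

```python
def xor_blocks(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length blocks."""
    if len(a) != len(b):
        raise ValueError("blocks must have the same length")
    return bytes(x ^ y for x, y in zip(a, b))


def storage_overhead(data_blocks: int, parity_blocks: int) -> float:
    """Raw bytes stored per byte of user data for a (data, parity) layout."""
    return (data_blocks + parity_blocks) / data_blocks


# Two data blocks plus one parity block: the 2-data/1-parity layout from the text.
block_a = b"hadoop"
block_b = b"spark!"
parity = xor_blocks(block_a, block_b)

# Suppose block_a is lost: XOR the surviving data block with the parity block.
recovered_a = xor_blocks(block_b, parity)

print(recovered_a == block_a)   # True
print(storage_overhead(2, 1))   # 1.5 (vs. 3.0 for three full replicas)
print(storage_overhead(6, 3))   # 1.5 for an RS-6-3 layout
```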

Spark can recover lost data by recomputing it from the DAG (Directed Acyclic Graph) of operations. A DAG is formed by vertices and edges: vertices represent RDDs, and edges represent the operations applied to the RDDs. If part of the data is lost, Spark can recover it by reapplying the sequence of operations to the RDDs. Note that each time an RDD has to be recomputed, you have to wait until Spark performs all the necessary calculations. Spark can also checkpoint RDDs to reliable storage to protect against failures.
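Lineage-based recovery can be illustrated with a toy model in Python. `ToyRDD` below is a hypothetical class, not Spark's API: each instance remembers its parent (the incoming DAG edge) and the transformation applied along that edge, so a lost result can be rebuilt by replaying the operations from the source data.

```python
from typing import Callable, List, Optional


class ToyRDD:
    """Hypothetical stand-in for an RDD: one vertex in a lineage DAG."""

    def __init__(self, source: Optional[List[int]] = None,
                 parent: Optional["ToyRDD"] = None,
                 op: Optional[Callable[[List[int]], List[int]]] = None):
        self.source = source  # only set for the root (e.g. data read from storage)
        self.parent = parent  # incoming edge in the DAG
        self.op = op          # transformation applied along that edge
        self.cached: Optional[List[int]] = None

    def map(self, fn: Callable[[int], int]) -> "ToyRDD":
        return ToyRDD(parent=self, op=lambda xs: [fn(x) for x in xs])

    def filter(self, pred: Callable[[int], bool]) -> "ToyRDD":
        return ToyRDD(parent=self, op=lambda xs: [x for x in xs if pred(x)])

    def compute(self) -> List[int]:
        """Return cached data, or rebuild it by replaying the lineage."""
        if self.cached is None:
            if self.parent is None:
                self.cached = list(self.source or [])
            else:
                self.cached = self.op(self.parent.compute())
        return self.cached


base = ToyRDD(source=[1, 2, 3, 4, 5])
squared = base.map(lambda x: x * x)
even_squares = squared.filter(lambda x: x % 2 == 0)
before = even_squares.compute()  # [4, 16]

# Simulate losing the results of every derived RDD, then recover them.
squared.cached = None
even_squares.cached = None
after = even_squares.compute()   # replays map, then filter, from the root
```

The longer the lineage, the more work such a replay involves, which is where checkpointing a long chain of transformations can pay off.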

YARN version

Hadoop 2 uses version 1 of the YARN Timeline Service. YARN (Yet Another Resource Negotiator) is Hadoop's resource manager: it manages the available cluster resources, such as CPU and memory, and also performs job scheduling. The Timeline Service stores and serves information about current and past applications.

In Hadoop 3, the YARN Timeline Service was updated to version 2, with several significant changes that improve usability and scalability. Timeline Service v.2 supports flows (logical groups of YARN applications) and aggregates metrics at the level of flows. Separating the collection processes (writing data) from the serving processes (reading data) improves scalability. Timeline Service v.2 also uses Apache HBase as its primary backing storage.

Spark can run in standalone mode, on a YARN cluster, or on Mesos.

Number of NameNodes

Hadoop 2 supports a single active NameNode and a single standby NameNode for the entire namespace, while Hadoop 3 supports multiple standby NameNodes.

Spark runs the driver program, which manages the SparkContext, on the master node.

File systems

The main Hadoop 2 file system is HDFS (Hadoop Distributed File System). The framework is also compatible with several other file systems, including blob stores such as Amazon S3 and Azure Storage, as well as alternative distributed file systems.

Hadoop 3 supports all the file systems that Hadoop 2 supports. In addition, Hadoop 3 is compatible with Microsoft Azure Data Lake and Aliyun Object Storage System.

Spark supports local file systems, Amazon S3 and HDFS.

For your convenience, we created a table that summarises all of the above information and presents a brief comparison of the key parameters of the two versions of Hadoop and Spark 2.X.

Conclusion

The major difference between Hadoop 3 and 2 is that the new version provides better optimization and usability, as well as certain architectural improvements.

Spark and Hadoop differ mainly in the level of abstraction. Hadoop was created as an engine for processing large amounts of existing data. Its low level of abstraction allows complex manipulations but can make it difficult to learn and manage. Spark is easier and faster, with many convenient high-level tools and functions that can simplify your work. Spark can run on top of Hadoop and has many good libraries, such as Spark SQL and the machine learning library MLlib. To summarize, if your work does not require special features, Spark can be the most reasonable choice.

Article originally posted on InfoQ.

The two main features of Go 1.11 are WebAssembly and modules, although both are still experimental.

Modules provide an alternative to GOPATH for locating a project’s dependencies and managing their versions. If the go command is run outside of $GOPATH/src in a directory that contains a go.mod file (or below such a directory), modules are enabled; otherwise, go uses GOPATH. As Google’s Russ Cox explains:

A Go module is a collection of packages sharing a common import path prefix, known as the module path. The module is the unit of versioning, and module versions are written as semantic version strings. When developing using Git, developers will define a new semantic version of a module by adding a tag to the module’s Git repository. Although semantic versions are strongly preferred, referring to specific commits will be supported as well.
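The rule for choosing between module mode and GOPATH mode can be modeled in a few lines of Python. This is a simplified sketch of Go 1.11's default behavior (when the `GO111MODULE` environment variable is unset or set to `auto`), not the go command's actual implementation; the function names are hypothetical. Module mode is used when the working directory is outside `$GOPATH/src` and a `go.mod` file exists in that directory or one of its parents.

```python
from pathlib import Path
from typing import Optional


def find_module_root(start: Path) -> Optional[Path]:
    """Return the nearest directory at or above start containing go.mod, or None."""
    for directory in [start, *start.parents]:
        if (directory / "go.mod").is_file():
            return directory
    return None


def uses_module_mode(cwd: Path, gopath: Path) -> bool:
    """Simplified model of the GO111MODULE=auto decision in Go 1.11."""
    cwd = cwd.resolve()
    gopath_src = (gopath / "src").resolve()
    inside_gopath_src = cwd == gopath_src or gopath_src in cwd.parents
    # Inside $GOPATH/src, GOPATH mode wins even if a go.mod file is present.
    return not inside_gopath_src and find_module_root(cwd) is not None
```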

A module is defined by a go.mod file in the module root, which declares the module path and lists all required modules with their version numbers. In a new project, running go mod init example.com/m creates a go.mod file containing only the module line; require entries are added as dependencies are used. For example, this is a simple module definition file declaring the module path and two dependencies:

module example.com/m

require (
	golang.org/x/text v0.3.0
	gopkg.in/yaml.v2 v2.1.0
)

Once the go.mod file exists, commands like go build, go test, or go list automatically add new dependencies to satisfy imports. For example, if your main package imports rsc.io/quote, executing go run adds require rsc.io/quote v1.5.2 to go.mod. Similarly, the go get command updates go.mod to change the module versions used in a build and may upgrade or downgrade any recursive dependencies.

More details on module syntax can be found by running go help modules. The feature will remain experimental at least until Go 1.12, and the Go team will strive to preserve compatibility. Once modules are stable, support for working with GOPATH will be removed.

Support for WebAssembly aims to make it possible to run Go programs inside Web browsers. You can compile a Go program for the Web by running GOARCH=wasm GOOS=js go build -o test.wasm main.go. This produces test.wasm; together with wasm_exec.html and wasm_exec.js, which ship with Go in $(go env GOROOT)/misc/wasm, it can be deployed to your HTTP server and loaded in a browser. The syscall/js package can be used for DOM manipulation.

For the full list of changes in Go 1.11, see the official release notes. You can also find a list of all available downloads.

Article originally posted on Data Science Central.

Artificial Intelligence with Python

By Prateek Joshi

Build real-world Artificial Intelligence applications with Python to intelligently interact with the world around you

What you will learn:

- Realize different classification and regression techniques

- Understand the concept of clustering and how to use it to automatically segment data

- See how to build an intelligent recommender system

- Understand logic programming and how to use it

- Build automatic speech recognition systems

- Understand the basics of heuristic search and genetic programming

- Develop games using Artificial Intelligence

- Learn how reinforcement learning works

- Discover how to build intelligent applications centered on images, text, and time series data

- See how to use deep learning algorithms and build applications based on it

Click here to get the free eBook.

Python Machine Learning

By Sebastian Raschka

Learn how to build powerful Python machine learning algorithms to generate useful data insights with this data analysis tutorial

What you will learn:

- Find out how different machine learning techniques can be used to ask different data analysis questions

- Learn how to build neural networks using Python libraries and tools such as Keras and Theano

- Write clean and elegant Python code to optimize the strength of your machine learning algorithms

- Discover how to embed your machine learning model in a web application for increased accessibility

- Predict continuous target outcomes using regression analysis

- Uncover hidden patterns and structures in data with clustering

- Organize data using effective pre-processing techniques

- Learn sentiment analysis to delve deeper into textual and social media data

Click here to get the free eBook.

Practical Machine Learning Cookbook

By Atul Tripathi

Building Machine Learning applications with R

What you will learn:

- Get equipped with a deeper understanding of how to apply machine-learning techniques

- Implement each of the advanced machine-learning techniques

- Solve real-life problems that are encountered in order to make your applications produce improved results

- Gain hands-on experience in problem solving for your machine-learning systems

- Understand the methods of collecting data, preparing data for usage, training the model, evaluating the model’s performance, and improving the model’s performance

Click here to get the free eBook.

Practical Game AI Programming

By Micael DaGraca

Jump into the world of Game AI development

What you will learn:

- Get to know the basics of how to create different AI for different types of games

- Know what to do when something interferes with the AI choices and how the AI should behave if that happens

- Plan the interaction between the AI character and the environment using Smart Zones or Triggering Events

- Use animations correctly, blending one animation into another rather than stopping one animation and starting another

- Calculate the best options for the AI to move using Pruning Strategies, Wall Distances, Map Preprocess Implementation, and Forced Neighbours

- Use Theta algorithms to help the AI find short and realistic-looking paths

- Add many characters into the same scene and make them behave like a realistic crowd

Click here to get the free eBook.

Article originally posted on Data Science Central.

This blog gives a brief introduction to Hadoop for those who know next to nothing about this technology. Big Data is at the foundation of all the megatrends happening today, from social media to the cloud to mobile devices to gaming. This blog will help you build the foundation for taking the next step in learning this interesting technology. Let’s get started:

1. What’s Big Data?

As technology has advanced, the amount of data has grown every day. Nearly everyone owns gadgets nowadays, and every smart device generates data. One of the most prominent sources of data is social media: being social animals, we love to share our thoughts and feelings with others, and social media is the right platform for interacting with people all around the world.

The following image shows the data generated by social media users every 60 seconds. These sources generate data at an exponentially growing rate.

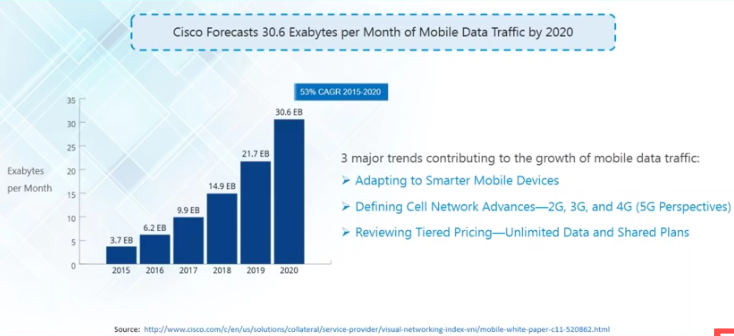

The following image shows Cisco’s prediction of global mobile data traffic through 2020.

Hence, Big Data is:

The term used for a collection of data sets so large and complex that it becomes difficult to process using on-hand database management tools or traditional data processing applications.

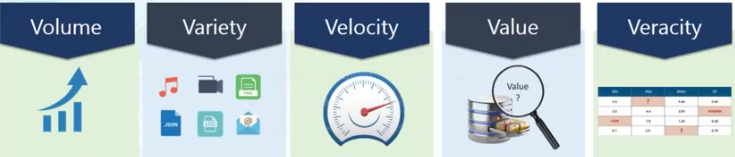

IBM data scientists break big data into four dimensions: Volume (scale of data), Variety (different forms of data), Velocity (analysis of streaming data), and Veracity (uncertainty of data).

Depending on the industry and organization, big data encompasses information from various internal and external sources, such as transactions, social media, enterprise content, sensors, and mobile devices. Companies can leverage this data to meet their customers’ needs and to optimize their products, services, and operations. Companies can also use this massive amount of data to find new sources of revenue.

2. How did Hadoop come into the picture?

These massive amounts of data are difficult to store and process using traditional database systems, which are designed to store and process only relational, structured data. However, today lots of unstructured data is being generated, such as images, audio files, and videos, and traditional systems cannot store and process these kinds of data effectively. An effective solution to this problem is Hadoop.

Hadoop is a framework for processing Big Data: it allows you to store and process large data sets in a parallel and distributed fashion.

Hadoop Core Components:

There are two main components of Hadoop: HDFS and MapReduce.

Hadoop Distributed File System (HDFS) takes care of the storage part of the Hadoop architecture.

MapReduce is a processing model and software framework for writing applications which can run on Hadoop. These programs of MapReduce are capable of processing Big Data in parallel on large clusters of computational nodes.

3. What’s HDFS and what are its core components?

HDFS stores files across many nodes in a cluster.

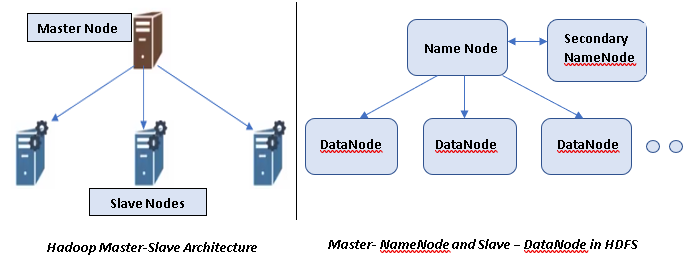

Hadoop follows a master-slave architecture, and HDFS, being one of its core components, follows the same architecture.

NameNode and DataNode are the core components of HDFS:

NameNode:

- Maintains and Manages DataNodes.

- Records metadata, i.e., information about data blocks, such as the location of stored blocks, the size of the files, permissions, hierarchy, etc.

- Receives status updates and block reports from all the DataNodes.

DataNode:

- Runs as a slave daemon that sends signals (heartbeats) to the NameNode.

- Stores the actual data in data blocks.

- Serves read and write requests from the clients.

Secondary NameNode:

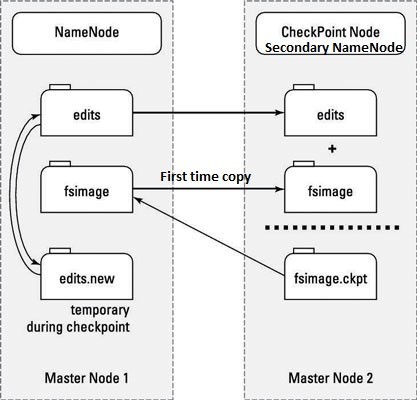

- This is NOT a backup NameNode. Instead, it is a separate service that keeps a copy of both the edit logs (edits) and the filesystem image (fsimage) and merges them to keep the file size reasonable.

- The NameNode’s metadata is managed in two files: fsimage and edit logs.

- Fsimage: This file contains the complete state of the file system namespace as of when the NameNode started (or as of the last checkpoint). It’s stored on the local disk of the NameNode machine.

- Edit logs: This file contains the most recent modifications, made since the last fsimage was written. It’s small compared with the fsimage and is also persisted on the NameNode machine’s local disk, while the NameNode keeps the current namespace in RAM.

- Secondary NameNode performs the task of Checkpointing.

- Checkpointing is the process of combining the edit logs with the fsimage (edit logs + fsimage). The Secondary NameNode copies the edit logs and fsimage from the NameNode and merges them to create the final fsimage, as shown in the figure above.

- Checkpointing happens periodically (by default, every hour).

- Why is the final fsimage file required in the Secondary NameNode?

The final fsimage in the Secondary NameNode allows faster NameNode restarts, because it prevents the edit logs in the NameNode from growing too large. A new edit log file in the NameNode records all the modifications that happen during checkpointing.
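The checkpoint merge can be pictured with a small Python sketch. The metadata model below (a dictionary from file path to replication factor, plus three made-up operation names) is hypothetical and vastly simpler than the real fsimage and edit-log formats; it only shows the idea of replaying logged edits onto the last image and then starting a fresh, empty edit log.

```python
from typing import Dict, List, Tuple

# Hypothetical, highly simplified metadata: file path -> replication factor.
Namespace = Dict[str, int]
# Hypothetical edit record: (operation, path, replication factor).
EditOp = Tuple[str, str, int]


def apply_edit(namespace: Namespace, edit: EditOp) -> None:
    """Replay one logged change onto the namespace."""
    operation, path, replication = edit
    if operation in ("create", "setrep"):
        namespace[path] = replication
    elif operation == "delete":
        namespace.pop(path, None)
    else:
        raise ValueError(f"unknown operation: {operation}")


def checkpoint(fsimage: Namespace, edit_log: List[EditOp]) -> Tuple[Namespace, List[EditOp]]:
    """Merge the edit log into a copy of fsimage; return (new fsimage, empty edit log)."""
    merged = dict(fsimage)
    for edit in edit_log:
        apply_edit(merged, edit)
    return merged, []


old_image = {"/data/a.csv": 3}
edits = [
    ("create", "/data/b.csv", 3),
    ("setrep", "/data/a.csv", 2),
    ("delete", "/data/b.csv", 0),
]
new_image, new_edits = checkpoint(old_image, edits)
print(new_image, new_edits)  # {'/data/a.csv': 2} []
```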

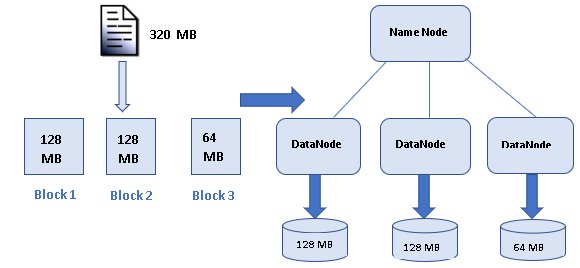

- How is data stored in DataNodes? HDFS data blocks.

Each file is stored in HDFS as blocks. The default size of each block is 128 MB in Apache Hadoop 2.x (64 MB in Apache Hadoop 1.x).

After a file is divided into data blocks, as shown in the figure, these data blocks are distributed across the DataNodes in the Hadoop cluster.
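The block arithmetic is easy to check with a short Python sketch. It splits a file into 128 MB blocks (the Hadoop 2.x default mentioned above) and then spreads three replicas of each block over a list of DataNodes. The placement function is a hypothetical round-robin stand-in used only for illustration; real HDFS uses a rack-aware block placement policy.

```python
from typing import List

BLOCK_SIZE_MB = 128  # Hadoop 2.x default block size
REPLICATION = 3      # HDFS default replication factor


def split_into_blocks(file_size_mb: int, block_size_mb: int = BLOCK_SIZE_MB) -> List[int]:
    """Sizes (in MB) of the blocks a file of file_size_mb occupies in HDFS."""
    if file_size_mb <= 0:
        return []
    full_blocks, remainder = divmod(file_size_mb, block_size_mb)
    return [block_size_mb] * full_blocks + ([remainder] if remainder else [])


def place_round_robin(num_blocks: int, datanodes: List[str],
                      replication: int = REPLICATION) -> List[List[str]]:
    """Hypothetical placement: replicas of block b go to consecutive DataNodes."""
    if replication > len(datanodes):
        raise ValueError("need at least as many DataNodes as replicas")
    return [
        [datanodes[(block + r) % len(datanodes)] for r in range(replication)]
        for block in range(num_blocks)
    ]


blocks = split_into_blocks(500)
print(blocks)  # [128, 128, 128, 116]
print(place_round_robin(len(blocks), ["dn1", "dn2", "dn3", "dn4"]))
```

For a 500 MB file this gives three full blocks and a final 116 MB block; the last block only occupies as much disk as the data it holds.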

- What are the advantages of HDFS, and what makes it ideal for distributed systems?

1> Fault Tolerance – Each data block is replicated three times in the cluster (by default, everything is stored on three machines/DataNodes). This helps to protect the data against DataNode (machine) failure.

2> Space – Just add more DataNodes and rebalance the cluster if you need more disk space.

3> Scalability – Unlike traditional database systems, which can’t scale to process large datasets, HDFS is highly scalable because it can store and distribute very large datasets across many nodes that operate in parallel.

4> Flexibility – It can store any kind of data, whether it’s structured, semi-structured, or unstructured.

5> Cost-effective – HDFS uses direct-attached storage and shares the cost of the network and computers it runs on with MapReduce. It’s also open source software.

In the next blog, we shall discuss MapReduce, another core component of Hadoop. Stay tuned.

Article originally posted on InfoQ.

Key Takeaways

- Hash tables are data structures that manage space, originally for a single application’s memory and later applied to large computing clusters.

- As hash tables are applied to new domains, old methods for resizing hash tables have negative side effects.

- Amazon’s “Dynamo paper” of 2007 applied the consistent hashing technique to a key-value store. Dynamo-style consistent hashing was quickly adopted by Riak & Riak Core and other open source distributed systems.

- Riak Core’s consistent hashing implementation has a number of limits that cause problems when changing cluster sizes.

- Random Slicing is an extremely flexible consistent hashing technique that avoids all of Dynamo-style consistent hashing’s limitations.

This fall, Wallaroo Labs will be releasing a large new feature set to our distributed data stream processing framework, Wallaroo. One of the new features requires a size-adjustable, distributed data structure to support growing & shrinking of compute clusters. It might be a good idea to use a distributed hash table to support the new feature, but … what distributed hash algorithm should we choose?

The consistent hashing technique has been used for over 20 years to create distributed, size-adjustable hash tables. Consistent hashing seems a good match for Wallaroo’s use case. Here’s an outline of this article, exploring if that match really is as good as it seems:

- Briefly explain the problem that consistent hashing tries to solve: resizing hash tables without too much unnecessary work to move data.

- Summarize Riak Core’s consistent hashing implementation

- Point out the limitations of Riak Core’s hashing, both in the context of Riak and in terms of what Wallaroo needs in a consistent hashing implementation.

- Introduce the Random Slicing technique by example and with lots of illustrations

Introduction: The Problem: The Art of Resizing Hash Tables

Most programmers are familiar with hash tables. If hash tables are new to you, then Wikipedia’s article on Hash Tables is a good place to look for more detail. This section will present only a brief summary. If you’re very comfortable with hash tables, I recommend jumping forward to Figure 1 at the end of this section: I will be using several similar diagrams to illustrate consistent hashing scenarios later in this article.

A hash table is a data structure that organizes a computer’s memory. If we have some key called key, what part of memory is responsible for storing a value val that we want to associate with key?

Let’s borrow from that Wikipedia article for some pseudo-code:

let hash = some_hash_function(key) ;; hash is an integer

let index = hash modulo array_size ;; index is an integer

let val = query_bucket(key, index) ;; val may be any data type

Hash tables usually have these three basic steps. The first two steps convert the key (which might be any data type) first into a big number (hash in the example above) and then into a much smaller number (index in the example). The second number is typically called the “bucket index” because hash tables frequently use arrays in their implementation. The index number is often calculated using modulo arithmetic.
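Here is a runnable Python version of the three steps in the pseudo-code above. It keeps the pseudo-code's names where possible; `ModuloHashTable` is a hypothetical class, and SHA-1 stands in for `some_hash_function` because Python's built-in `hash()` for strings is randomized per process, which would make bucket assignments change between runs. Like the simple tables described next, each bucket holds a single value, so a colliding key simply overwrites the previous entry.

```python
import hashlib


def some_hash_function(key: str) -> int:
    """Step 1 helper: map a string key to a big integer (SHA-1 for reproducibility)."""
    return int.from_bytes(hashlib.sha1(key.encode("utf-8")).digest(), "big")


class ModuloHashTable:
    """Hypothetical fixed-size table holding one (key, value) pair per bucket."""

    def __init__(self, array_size: int):
        self.buckets = [None] * array_size

    def bucket_index(self, key: str) -> int:
        hash_value = some_hash_function(key)   # step 1: key -> big integer
        return hash_value % len(self.buckets)  # step 2: big integer -> bucket index

    def put(self, key: str, val) -> None:
        # A colliding key overwrites whatever was stored in the bucket.
        self.buckets[self.bucket_index(key)] = (key, val)

    def query_bucket(self, key: str):
        """Step 3: return the value stored for key, or None if absent."""
        entry = self.buckets[self.bucket_index(key)]
        if entry is not None and entry[0] == key:
            return entry[1]
        return None


table = ModuloHashTable(array_size=8)
table.put("artist", "REM")
print(table.query_bucket("artist"))  # REM
```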

Many types of hash table can store only a single value inside of a bucket. Therefore, the total number of buckets (array_size in the example above) is a very important constant. But if this size is constant, how can you resize a hash table? Well, it is “easy” if we allow array_size to be a variable instead of a constant. But if we continue using modulo arithmetic to calculate the index bucket number, then we have a problem whenever array_size changes.

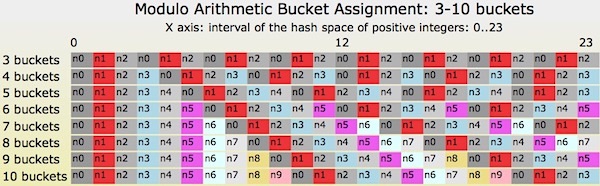

Let’s look at the first 24 hash values, 0 through 23, as we change the number of buckets in our hash table from 3 to 10.

Figure 1: Illustration of modulo arithmetic to assign hash values to buckets

On the far left of Figure 1, the bucket assignments for hashes 0, 1, 2, and 3 do not change. But when the modulo arithmetic “rolls over” back to bucket 0, then everything changes. Can you see a red diagonal line that starts at (hash=4, buckets=3) and continues down and to the right? Most values to the right of that diagonal must move when we change the number of buckets!

The moving problem usually gets worse as the new array_size grows, especially when array_size grows incrementally from N to N+1: only about 1/N of the values will remain in the same bucket. We desire the opposite: we want only about 1/N of the values to move to a new bucket.
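The effect in Figure 1 can be counted directly. This Python sketch (function names hypothetical) checks which hash values keep the same bucket when the table grows from `old_size` to `new_size` buckets under modulo arithmetic. For a growth from N to N+1 buckets, a hash value h stays put only if h mod N equals h mod (N+1), which happens for exactly N out of every N(N+1) consecutive hash values, i.e. a fraction of 1/(N+1), roughly the 1/N quoted above.

```python
from typing import List


def moved_hashes(old_size: int, new_size: int, num_hashes: int) -> List[int]:
    """Hash values in 0..num_hashes-1 whose bucket (hash mod size) changes."""
    return [h for h in range(num_hashes) if h % old_size != h % new_size]


def unmoved_fraction(old_size: int, new_size: int, num_hashes: int) -> float:
    """Fraction of hash values 0..num_hashes-1 that keep their bucket."""
    moved = len(moved_hashes(old_size, new_size, num_hashes))
    return (num_hashes - moved) / num_hashes


# Figure 1's first 24 hash values, growing from 3 to 4 buckets.
print(len(moved_hashes(3, 4, 24)))  # 18 of 24 values move
# Over one full cycle of N*(N+1) values, exactly 1/(N+1) stay put.
print(unmoved_fraction(9, 10, 90))  # 0.1
```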

Consistent hashing is one way to give us very predictable hash table resizing behavior. Let’s look at a specific implementation of consistent hashing and see how it reacts to resizing.

A Tiny Sliver of the History of Consistent Hashing and Riak Core

A traditional hash table is a data structure for organizing space: computer memory. In 1997, staff at Akamai published a paper that recommended using a hash table to organize a different kind of space: a cluster of HTTP cache servers. This widely influential paper was “Consistent Hashing and Random Trees: Distributed Caching Protocols for Relieving Hot Spots on the World Wide Web”.

Amazon published a paper at SOSP 2007 called “Dynamo: Amazon’s Highly Available Key-value Store”. Inspired by this paper, the founders of Basho Technologies, Inc. created the Riak key-value store as an open source implementation of Dynamo. Justin Sheehy made one of the first public presentations about Riak at the NoSQL East 2009 conference in Atlanta. The Riak Core library code was later split out of the Riak database and can be used as a standalone distributed system platform.

Earlier this year (2018), Damian Gryski wrote a blog article that caught my eye and that I recommend for presenting alternative implementations & comparisons of consistent hashing functions: “Consistent Hashing: Algorithmic Tradeoffs”.

Riak Core’s Consistent Hashing algorithm

A lot has been written about the consistent hashing algorithm used by Riak Core. I’ll present a summary-by-example here to prepare for a discussion later of Riak Core’s hashing limitations.

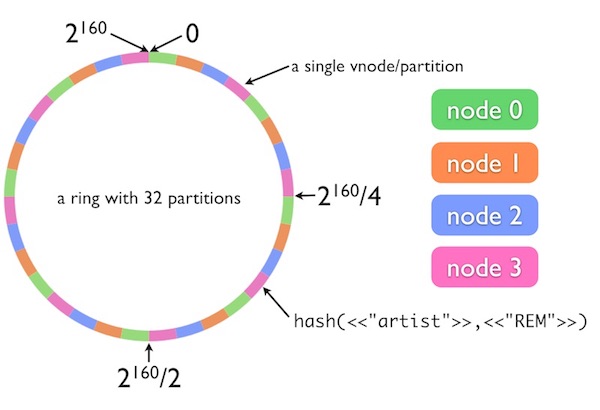

I’ll borrow a couple of diagrams from Justin Sheehy’s original presentation. The first diagram is a hash ring with 32 partitions and 4 nodes assigned to various parts of the ring. Riak Core uses the SHA-1 algorithm for hashing strings to integers. SHA-1’s output size is 160 bits, which can be directly mapped onto the integer range 0..2^160-1.

Figure 2: A Riak Ring with 32 partitions and interval claims by 4 nodes

Riak Core’s data model splits its namespace into “buckets” and “keys”, which are not exactly the same definitions that I used in the last section. This example uses the Riak bucket name “artist” and Riak key name “REM”. Those two strings are concatenated together and used as the input to Riak Core’s consistent hash function.

This example’s hash ring is divided into 32 partitions of equal size. Each of the four server nodes is assigned 8 of the 32 partitions. In this example, the string “artist” + “REM” maps via SHA-1 to a position on the ring at roughly 4 o’clock. The pink node, node3, is assigned to this section of the ring. If we ignore data replication, then we say that node3 is the server responsible for storing the value of the key “artist” + “REM”.
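The lookup in this example can be sketched in Python. The sketch hashes the concatenated bucket and key with SHA-1, divides the 2^160 ring into 32 equal partitions, and assumes a simple round-robin claim in which partition i belongs to node i mod 4, which gives each of the four nodes 8 partitions. The constants and helper names are hypothetical, and the exact bytes Riak Core feeds to SHA-1 may differ, so the partition computed here need not match the figure.

```python
import hashlib

RING_SIZE = 2 ** 160   # SHA-1 output space: 0..2^160-1
NUM_PARTITIONS = 32    # the example ring; Riak Core requires a power of 2
NODES = ["node0", "node1", "node2", "node3"]
PARTITION_SIZE = RING_SIZE // NUM_PARTITIONS


def ring_position(bucket: str, key: str) -> int:
    """SHA-1 of the concatenated bucket and key, read as a 160-bit integer."""
    digest = hashlib.sha1((bucket + key).encode("utf-8")).digest()
    return int.from_bytes(digest, "big")


def partition_of(position: int) -> int:
    """Index of the equal-sized partition containing this ring position."""
    return position // PARTITION_SIZE


def owner(partition: int) -> str:
    """Assumed round-robin claim: partition i belongs to node i mod 4."""
    return NODES[partition % len(NODES)]


position = ring_position("artist", "REM")
partition = partition_of(position)
print(partition, owner(partition))
```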

Our second example adds data replication. What happens if node3 has crashed? How can we locate other replicas that store our key?

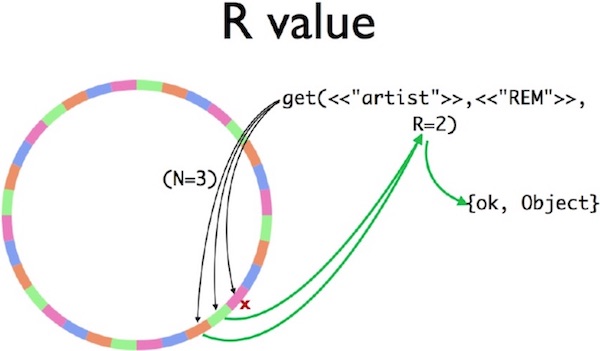

Figure 3: A Riak get query on a 32 partition ring

Riak Core uses several constants to help describe where data replicas are stored. Two of these constants are N, the total number of value replicas per key, and R, the minimum number of successful server queries needed to fetch a key from the database. To calculate the set of all servers that are responsible for a single key, the hash algorithm “walks around the ring clockwise” until N unique servers have been found. In this example, the replica set is handled by pink, green, and orange servers (i.e., node3, node0, and node1).

This second diagram shows that the pink server (node3) has crashed. A Riak Core client will send its query to N=3 servers. No reply is sent by node3. But the replies from node0 and node1 are sufficient to meet the R=2 constraint; the client responds with an {ok,Object} successful reply.
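The replica walk and the R check can be sketched the same way. Again assuming a hypothetical round-robin claim on a 32-partition ring with four nodes, the Python code below walks clockwise from a key's partition until it has collected N unique nodes, then checks whether enough of them reply to satisfy R. It models only the counting logic, not Riak's messaging or its `{ok,Object}` reply format.

```python
from typing import List, Set

NUM_PARTITIONS = 32
NODES = ["node0", "node1", "node2", "node3"]


def owner(partition: int) -> str:
    """Assumed round-robin claim: partition i belongs to node i mod 4."""
    return NODES[partition % len(NODES)]


def preference_list(start_partition: int, n: int) -> List[str]:
    """Walk clockwise from the key's partition until n unique nodes are found."""
    found: List[str] = []
    for step in range(NUM_PARTITIONS):
        node = owner((start_partition + step) % NUM_PARTITIONS)
        if node not in found:
            found.append(node)
            if len(found) == n:
                break
    return found


def quorum_met(replicas: List[str], down: Set[str], r: int) -> bool:
    """True if at least r replica nodes are up and able to reply."""
    replies = [node for node in replicas if node not in down]
    return len(replies) >= r


replicas = preference_list(start_partition=3, n=3)
print(replicas)                            # ['node3', 'node0', 'node1']
print(quorum_met(replicas, {"node3"}, 2))  # True: node0 and node1 reply
```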

The Constraints and Limits of Riak Core’s Consistent Hashing algorithm

I worked for Basho Technologies, Inc. for six years as a senior software engineer. My intent in this section is a gentle critique, informed by hindsight and discussion with many former Basho colleagues. While at Basho, I supported keeping what I would now call limits or even flaws in Riak Core: they were all sensible tradeoffs back then. Today, we have the benefit of hindsight and time to reflect on new discoveries by academia and industry. Basho was liquidated in 2017, and its source code assets were sold to UK-based Bet365. Riak Core’s maintenance continues under Bet365’s ownership and by the Riak open source community.

Here’s a list of the assumptions and limits of Riak Core’s implementation of the Dynamo-style consistent hashing algorithm. They are listed in roughly increasing order of severity, hassle, and triggers for Customer Support tickets and escalations at Basho Technologies, Inc.

- The only string-to-integer hash algorithm is SHA-1.

- The hash ring’s integer interval is the range 0 to 2^160-1.

- The number of partitions is fixed.

- The number of partitions must be a power of 2.

- The size of each partition is fixed.

- Historically, the “claim assignment” algorithm used to assign servers to intervals on the ring was buggy and naive and frequently created imbalanced workloads across nodes.

- Server capacity adjustment by “weighting” did not exist: all servers were assumed equal.

- No support for segregating extremely “hot” keys, for example, Twitter’s “Justin Bieber” account.

- No effective support for “rack-aware” or “fault domain aware” replica placement policy.

Limits 1 and 2 are quite minor, both being tied to the use of the SHA-1 hash algorithm. The effective hash range of 160 bits was sufficient for all Riak use cases that I’m aware of. There were a handful of Basho customers who wanted to replace SHA-1 with another algorithm. The official answer by Basho’s Customer Support staff was always, “We strongly recommend that you use SHA-1.”

I believe that limits 3-5 made sense, once upon a time, but they should have been eliminated long ago. The original reason to keep those limits was, “Dynamo did it that way.” Later, the reason was, “The original Riak code did it that way.” After the creation of the Riak Core library, the reason turned into, “It is legacy code that nobody wants to touch.” Explaining why in detail would be a long diversion from this article, my apologies.

Limits 6-9 caused a lot of problems for Basho’s excellent Customer Support Engineers as well as for Basho’s developers, and eventually spilled over into Basho’s marketing and business development. Buggy “claim assignment” (limit #6) could cause terrible data migration problems when moving from X servers to Y servers, for some unlucky pairs of X and Y. Migrating old Riak clusters from older & slower hardware to newer & faster machines (limit #7) was difficult when the ring assignments assumed that all machines had the same CPU & disk capacity; manual workarounds were possible, but Basho’s support staff were almost always involved in each such migration.

Riak Core is definitely vulnerable to the “Justin Bieber” phenomenon (limit #8): a “hot” key that gets several orders of magnitude more queries than other keys. The size of a Riak Core partition is fixed: any other key in the same partition as a super-hot key would suffer from degraded performance. The only mitigation was to migrate to a ring with more partitions (which violated constraint #3 but was actually feasible via a manual procedure, with help from Basho support) and then also manually reassign the hot key’s partition (and the servers clockwise around the ring) to larger & faster machines.

Limit #9 was a long-term marketing and business expansion problem. Riak Core usually had little problem maintaining N replicas of each object. As an eventually-consistent database, Riak would err conservatively and make more than N replicas of an object, then copy and merge together replicas in an automatic “handoff” procedure.

However, the claim assignment had no support for “rack awareness” or “fault domain awareness”, where a failure of a common power supply, network router, or other common infrastructure could cause multiple replicas to fail simultaneously. A replica choice strategy of “walk clockwise around the ring” could sometimes be created manually to support a single static data center configuration, but as soon as the cluster was changed again (or if a single server failed), then the desired replica placement constraints would be violated.

Dynamo’s and Riak Core’s consistent hashing: a retrospective

The 2007 Dynamo paper from Amazon was very influential. Riak adopted its consistent hashing technique and still uses it today. The Cassandra database uses a close variation. Other systems have also adopted the Dynamo scheme. As an industry practitioner, it is a fantastic feeling to read a paper and think, “That’s a great idea,” and “I can easily write some code to do that.” But it is also a good idea to look into the rear view mirror of time and experience and ask, “Is it still a good idea?”

While at Basho Technologies, I maintained, supported, and extended many of Riak’s subsystems. I think Riak did a lot of things right in 2009 and still does a lot of things right in 2018. But Riak’s consistent hashing is not one of them. The limits discussed in the previous section caused a lot of real problems that are (with the benefit of hindsight!) avoidable. Many of those limits have their roots in the original Dynamo paper. As an industry, we now have lots of experience from Basho and from other distributed systems in the open source world. We also have a decade of academic research that improves upon or supersedes Dynamo entirely.

Wallaroo Labs decided to look for an alternative hashing technique for Wallaroo. The option we’re currently using is Random Slicing, which is introduced in the second half of this article.

Random Slicing: a Different Consistent Hashing Technique

An alternative to Riak Core’s consistent hashing technique is Random Slicing, described in “Random Slicing: Efficient and Scalable Data Placement for Large-Scale Storage Systems” by Miranda et al. in 2014. The important differences from Riak Core’s technique are:

- Any hash function that maps strings (or byte lists or byte arrays) to integers or floating point numbers may be used.

- The visual model of the hash space as a ring is not needed: replica placement is not determined by examining neighboring partitions of the hash space.

- The hash space may have any number of partitions.

- A partition of the hash space may be any size greater than zero.

These differences are sufficient to eliminate all of the weaknesses of Riak Core’s consistent hashing algorithm, in my opinion. Let’s look at several example diagrams to get familiar with Random Slicing, then we will re-examine Riak Core’s major pain points that I described in the previous section.

Let’s first look at some possible Random Slicing map illustrations that involve only 3 nodes.

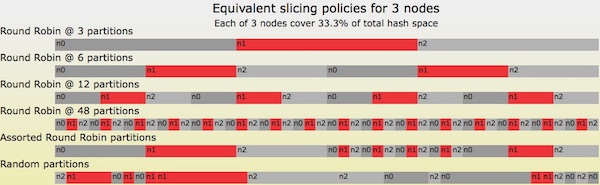

Figure 4: Six equivalent Random Slicing policies for a 3 node map

In all six cases in Figure 4, the amount of hash space assigned to n0, n1, and n2 is equal to 33.3%. The top case is simplest: each node is assigned a single contiguous space. Cases 2-4 look very similar to Riak Core’s initial ring claim policy, though without Riak Core’s power-of-2 partition restriction. Case 5, “Assorted Round Robin Partitions”, is actually a combination of earlier cases: case 2 for intervals in 0%-50%, case 4 for intervals in 50%-75%, and case 3 for intervals in 75%-100%. The bottom case is a random sorting of case 4’s intervals: note that there are three cases where nodes are assigned adjacent intervals!
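To make the idea concrete, here is a minimal Python sketch (not Wallaroo’s or the paper’s code) that models a Random Slicing map as a sorted list of (lower, upper, node) slices over a normalized hash space [0, 1), using exact fractions. It builds layouts similar in spirit to the top, round-robin, and shuffled cases of Figure 4; the slice counts are assumptions.

```python
import random
from collections import defaultdict
from fractions import Fraction

def shares(slices):
    """Fraction of the hash space assigned to each node in a slice list."""
    total = defaultdict(Fraction)
    for lo, hi, node in slices:
        total[node] += hi - lo
    return dict(total)

def round_robin(nodes, parts):
    """Split [0, 1) into `parts` equal slices dealt out to nodes in turn."""
    width = Fraction(1, parts)
    return [(i * width, (i + 1) * width, nodes[i % len(nodes)]) for i in range(parts)]

nodes = ["n0", "n1", "n2"]
contiguous = round_robin(nodes, 3)    # one contiguous slice per node
interleaved = round_robin(nodes, 12)  # 12 slices: not a power of 2

# Shuffle which node owns which slice, as in the bottom case of Figure 4.
owners = [node for _, _, node in interleaved]
random.Random(7).shuffle(owners)
shuffled = [(lo, hi, owner) for (lo, hi, _), owner in zip(interleaved, owners)]

for layout in (contiguous, interleaved, shuffled):
    print(shares(layout))  # each node holds Fraction(1, 3) in every layout
```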

Evolving Random Slicing Maps Over Time

All of the cases in Figure 4 are valid Random Slicing policies for a single point in time for a hash map. Let’s now look at an example of re-sizing a map over time (downward on the Y-axis) in Figure 5.

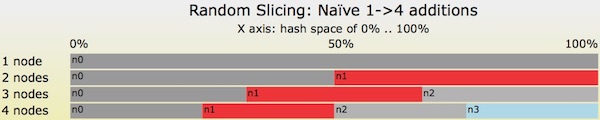

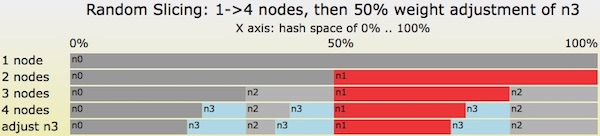

Figure 5: A naïve example of re-sizing a Random Slicing map from 1-> 4 nodes.

Figure 5 is terrible. I don’t recommend that anybody use Random Slicing in this way. I’ve included this example to make a point: there are sub-optimal ways to use Random Slicing. In this example, when moving from 1->2 nodes, 2->3 nodes, and 3->4 nodes, the total amount of keys moved in the hash space is equal to about 50.0 + (16.7 + 33.3) + (8.3 + 16.6 + 25.0) = 150%. That’s far more data movement than an optimal solution would provide.
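The 150% figure can be checked with a short Python sketch, assuming the naïve strategy gives node i the single slice [i/n, (i+1)/n) after each resize. The moved fraction is measured by comparing owners on every sub-interval between the old and new maps’ boundaries.

```python
from fractions import Fraction

def contiguous(n):
    """Naive map: node i owns the single slice [i/n, (i+1)/n)."""
    return [(Fraction(i, n), Fraction(i + 1, n), f"n{i}") for i in range(n)]

def owner_at(slices, x):
    """Node whose slice contains hash position x."""
    for lo, hi, node in slices:
        if lo <= x < hi:
            return node
    raise ValueError(f"{x} is not covered by the map")

def moved(old, new):
    """Fraction of the hash space whose owner differs between two maps."""
    cuts = sorted({bound for s in old + new for bound in s[:2]})
    total = Fraction(0)
    for lo, hi in zip(cuts, cuts[1:]):
        # Both maps are constant between consecutive cut points,
        # so checking the left edge is enough.
        if owner_at(old, lo) != owner_at(new, lo):
            total += hi - lo
    return total

steps = [moved(contiguous(k), contiguous(k + 1)) for k in (1, 2, 3)]
print([float(s) for s in steps])  # [0.5, 0.5, 0.5]
print(float(sum(steps)))          # 1.5, i.e. 150% of the hash space
```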

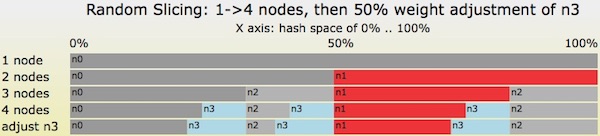

Let’s look at Figure 6 for an optimal transition from 1->4 nodes and then go one step further with a re-weighting operation.

1. Start with a single node, n0.

2. Add node n1 with weight=1.0.

3. Add node n2 with weight=1.0.

4. Add node n3 with weight=1.0.

5. Change n3’s weight from 1.0 -> 1.5. For example, node n3 got a disk drive upgrade with 50% larger capacity than the other nodes.

Figure 6: A series of Random Slicing map transitions as 1->4 nodes are added then n3’s weight is increased by 50%

After step 4, when all 4 nodes have been added with equal weight, the total interval assigned to each node is 25%: ‘n0’ is assigned a single range of 25%, and ‘n3’ is assigned three ranges of 8.33% each, which add up to 25%. The amount of data migrated by each step individually and in total is optimal: 50.0% + 33.3% + 25.0% = 108.3%. When compared to the bad example of Figure 5, the steps in Figure 6 avoid a substantial amount of data movement!
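One way to reach these optimal numbers is sketched below in Python, assuming all nodes have equal weight: when a node joins, each existing node gives up exactly its surplus above the new equal share, carved from the top of its highest slices. This is a simple greedy illustration, not the algorithm from the Random Slicing paper or from Wallaroo, but on this input it has the properties described above: n0 keeps one 25% range, n3 receives three 8.33% ranges, and 108.3% of the hash space moves in total.

```python
from fractions import Fraction

def shares(slices):
    """Fraction of the hash space owned by each node."""
    out = {}
    for lo, hi, node in slices:
        out[node] = out.get(node, Fraction(0)) + (hi - lo)
    return out

def add_node(slices, new_node):
    """Give new_node an equal share (all weights 1.0). Each existing node
    gives up exactly its surplus, carved off the top of its highest slices,
    so no hash space moves between existing nodes."""
    current = shares(slices)
    target = Fraction(1, len(current) + 1)
    surplus = {node: share - target for node, share in current.items()}
    result = []
    for lo, hi, node in sorted(slices, key=lambda s: s[0], reverse=True):
        take = min(surplus[node], hi - lo)
        surplus[node] -= take
        if take > 0:
            result.append((hi - take, hi, new_node))
        if hi - take > lo:
            result.append((lo, hi - take, node))
    return sorted(result, key=lambda s: s[0])

m = [(Fraction(0), Fraction(1), "n0")]
total_moved = Fraction(0)
for new in ("n1", "n2", "n3"):
    m = add_node(m, new)
    total_moved += shares(m)[new]  # only the newcomer's slices changed owner
print(float(total_moved))          # 1.0833..., i.e. 50% + 33.3% + 25%
```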

After step 5, which changes the weighting factor of node n3 from 1.0 to 1.5, the amount of hash space assigned to ‘n3’ grows from 25.0% to 33.3%. The other three nodes must give up some hash space: each shrinks from 25.0% to 22.2%. The ratio of assigned hash space is exactly what we desire: 33.3% / 22.2% = 1.5.
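The step 5 numbers follow if each node’s share s_i is its weight w_i divided by the total weight (an assumption consistent with the ratio quoted above). The hash space that changes owner is only the 8.3% that n3 gains:

```latex
\begin{aligned}
s_i &= \frac{w_i}{\sum_j w_j}, \qquad \sum_j w_j = 1.0 + 1.0 + 1.0 + 1.5 = 4.5 \\
s_{n3} &= \frac{1.5}{4.5} = \frac{1}{3} \approx 33.3\%, \qquad
s_{n0} = s_{n1} = s_{n2} = \frac{1.0}{4.5} = \frac{2}{9} \approx 22.2\% \\
\text{moved} &= 3\left(\frac{1}{4} - \frac{2}{9}\right) = \frac{3}{36} = \frac{1}{12} = \frac{1}{3} - \frac{1}{4} \approx 8.3\%
\end{aligned}
```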

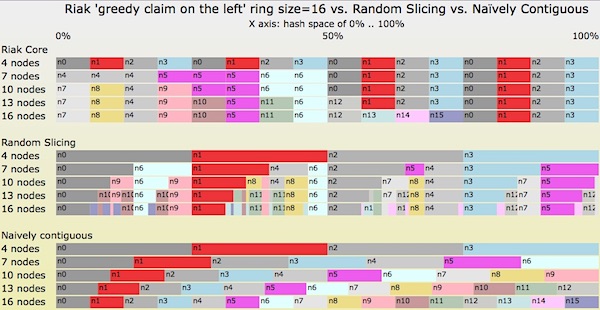

Finally, let’s look at a transition diagram in Figure 7. It starts with 4 nodes in the Random Slicing map. We then add 3 servers at a time, four times in a row. At the end, the Random Slicing maps will contain 16 nodes. Let’s see what the maps look like using three different techniques: Riak Core’s consistent hashing, Random Slicing with an optimal slicing strategy, and the naïve contiguous strategy.

Figure 7: Transitions from 4->7->10->13->16 nodes by three different slicing strategies

Some notes about Figure 7:

- The “Naïvely Contiguous” strategy at the bottom ends each transition with perfectly balanced assignments of hash space to each node. However, it causes a huge amount of unnecessary data movement during each transition.

- The Riak Core strategy at the top is optimal in terms of data migration during each transition, but the amount of hash space assigned to individual nodes is often unbalanced.

- Two cases of balance: in 4 & 16 node cases, all nodes are assigned an equal amount of hash space, 25.0% and 6.25%, respectively.

- Three cases of imbalance: 7, 10, and 13 nodes.

- In the 7 node case, nodes n4 and n5 are assigned 3 partitions when the other nodes are assigned 2 partitions. The imbalance factor is 1.5.

- In the 10 & 13 node cases, nodes n0, n1, n2, and n3 are assigned 2 partitions when some other nodes are assigned 1 partition. The imbalance factor is 2.0.

- The strategy in the middle is optimal both in terms of node balance after each transition and also the amount of data moved during each transition.
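The imbalance factors can be reproduced with a back-of-the-envelope Python calculation, assuming the ring in Figure 7 has 16 equal partitions (consistent with 6.25% per node at 16 nodes and the 3-vs-2 split at 7 nodes) and that those partitions are spread as evenly as possible. Random Slicing has no such floor, because slices can be any size and each of N nodes can receive exactly 1/N of the hash space.

```python
from fractions import Fraction

def ring_imbalance(ring_size, nodes):
    """Busiest-to-idlest partition ratio when ring_size equal partitions are
    spread as evenly as possible over `nodes` nodes (requires nodes <= ring_size)."""
    most = -(-ring_size // nodes)  # ceiling division
    least = ring_size // nodes
    return Fraction(most, least)

for n in (4, 7, 10, 13, 16):
    print(n, float(ring_imbalance(16, n)))
# 4 1.0 / 7 1.5 / 10 2.0 / 13 2.0 / 16 1.0
```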

Random Slicing and Super-Hot “Justin Bieber Keys”

Let’s start with the optimal Random Slicing strategy in the middle of Figure 7, with 4 nodes in the map. Then let’s assume that the super-hot key for our Justin Bieber customer hashes to exactly 6.00000% in the hash space. In all phases of Figure 7, 6% is mapped to node n0. We know that n0 cannot handle all of the load for the Justin Bieber key as well as all other keys assigned to it.

However, random slicing allows us the flexibility to create a new, very thin partition from 6.00000% to 6.00001% and assign it to node n11, which is the fastest Cray 11 super-ultra-computer that money can buy. Problem solved!

Maybe we solved it, but maybe we didn’t. In the real world, the Cray 11 does not exist. But if we can use a flexible placement policy, we can solve our Justin Bieber problem.
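A thin slice for a hot key is just one more interval in the map. The Python sketch below assumes a simple 4-node map in which n0 owns [0%, 25%) (the exact layout of Figure 7 is not reproduced) and carves out [6.00000%, 6.00001%) for the hypothetical node n11; only keys hashing into that sliver change owner.

```python
from fractions import Fraction

def carve(slices, lo, hi, node):
    """Reassign [lo, hi) to `node`, splitting any slice it overlaps.
    Keys outside [lo, hi) keep their current owner."""
    out = []
    for a, b, owner in slices:
        if b <= lo or a >= hi:   # no overlap: keep the slice unchanged
            out.append((a, b, owner))
            continue
        if a < lo:               # piece below the carved range
            out.append((a, lo, owner))
        if b > hi:               # piece above the carved range
            out.append((hi, b, owner))
    out.append((lo, hi, node))
    return sorted(out, key=lambda s: s[0])

def lookup(slices, x):
    """Owner of hash position x in [0, 1)."""
    return next(owner for a, b, owner in slices if a <= x < b)

# Hypothetical 4-node map; n0 owns [0%, 25%).
quarter = Fraction(1, 4)
m = [(i * quarter, (i + 1) * quarter, f"n{i}") for i in range(4)]

hot = Fraction(6, 100)                              # 6.00000%
m = carve(m, hot, hot + Fraction(1, 10**7), "n11")  # 6.00001% upper bound
print(lookup(m, hot), lookup(m, Fraction(5, 100)), lookup(m, Fraction(7, 100)))
# n11 n0 n0
```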

Random Slicing and Placement Policy Flexibility

There is one weakness in Riak Core’s consistent hashing that hasn’t been addressed yet by the Random Slicing technique: placement policy. But that is easy to fix. We’ll just add a level of indirection! (Credit to David Wheeler, see Wikipedia’s “Indirection” topic.)

Figure 6 (again): A series of Random Slicing map transitions as 1->4 nodes are added then n3’s weight is increased by 50%

Let’s look at Figure 6 again. Figure 6 gives us a mapping from a hash space interval to a single node or single machine. Instead, we can introduce a level of indirection at this point, mapping instead from a hash space interval to a replica placement policy. Then we can define any placement policy that we wish. For example:

- Node n0 -> Policy 0: store with Paxos replicated state machines on nodes 82, 17, and 22.

- Node n1 -> Policy 1: store with Chain Replication state machines on nodes 77 and 23.

- Node n2 -> Policy 2: use 7+2 Reed-Solomon erasure coding striped across nodes 31-39.

- Node n3 -> Policy 3: send the data to the color printer in San Francisco.

- Node n11 -> Policy 11: use a special cluster of 81 primary/secondary replica machines: the 1 primary to handle updates as Justin changes stuff, and 80 read-only cache machines to handle the workload caused by Justin’s enthusiastic fans.
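The indirection can be sketched in Python by storing a policy ID per slice and keeping the policy descriptions in a separate table. The Policy class, the scheme strings, and the 81 node IDs for policy 11 are hypothetical placeholders; a real system would need code behind each scheme.

```python
import bisect
from dataclasses import dataclass
from fractions import Fraction

@dataclass(frozen=True)
class Policy:
    """Hypothetical placement-policy descriptor."""
    scheme: str
    nodes: tuple

# Each slice stores a policy ID rather than a node name.
lower_bounds = [Fraction(0), Fraction(6, 100), Fraction(600001, 10**7),
                Fraction(1, 4), Fraction(1, 2), Fraction(3, 4)]
policy_ids = [0, 11, 0, 1, 2, 3]

policies = {
    0: Policy("paxos", (82, 17, 22)),
    1: Policy("chain-replication", (77, 23)),
    2: Policy("reed-solomon-7+2", tuple(range(31, 40))),
    3: Policy("color-printer", ("San Francisco",)),
    11: Policy("1-primary-80-read-caches", tuple(range(1000, 1081))),
}

def placement(h):
    """Placement policy for a normalized hash value h in [0, 1)."""
    i = bisect.bisect_right(lower_bounds, h) - 1
    return policies[policy_ids[i]]

print(placement(Fraction(6, 100)).scheme)  # 1-primary-80-read-caches
print(placement(Fraction(1, 10)).nodes)    # (82, 17, 22)
```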

What Does a Random Slicing Implementation Look Like?

Let’s take a look at the data structure(s) needed for Random Slicing, considerations for partition policies, and what Random Slicing cannot do on its own.

Random Slicing implementation: data structures

If you needed to write a Random Slicing library today, one way to look at the problem is to consider major constraints. Some constraints to consider might be:

- Constraint A: How quickly do I need to execute a single range query, i.e., start with a key such as a string or a byte array and end with a Random Slicing partition?

- Constraint B (related): How many partitions do I expect the Random Slicing map to hold?

- Constraint C: How much RAM am I willing to trade for that speed?

- Constraint D: What kind of information do I need to store with the Random Slicing partition? Am I mapping to a single node name (as a string, an IP address, or something else?), or to a more general placement policy description?

Constraint A will drive your choice of a string/byte array -> integer hash function as well as the data structure used for range queries. For the former, will a crypto hash function like SHA-1 be fast enough? For the latter, will you need a search speed of O(1) in the partition table, or is something like O(log(N)) alright, where N is the number of partitions in the table?

Together with constraint B, you’re most of the way to deciding what data structure to use for the range query. The goal is: given an integer V (the output value from my standard hash function, e.g., SHA-1), what is the Random Slicing partition (I,J) such that I <= V < J? If you have easy access to an interval tree library, your work is nearly done for you. The same is true if you have a balanced tree library that can perform an “equal or less than” query: insert all of the lower bound values I into the tree, then perform an equal or less than query for V. Some trie libraries also include this type of query and may have a good space vs. time trade-off for your application.

If you need to write your own code, one strategy is to put all of the lower bound values I into a sorted array. To perform the equal or less than query, use a binary search of the array to find the desired lower bound. This is the approach that we’re prototyping now with Wallaroo, written in the Pony language.

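```pony
use "collections"

class val HashPartitions
  // Sorted lower bounds of the partition intervals
  let _lower_bounds: Array[U128] = _lower_bounds.create()
  // Lower bound -> name of the responsible worker
  let _lb_to_c: Map[U128, String] = _lb_to_c.create()
```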

- The initial hash function is MD5, which is fast enough for our purposes and has a convenient 128-bit output value. Pony has native support for unsigned 128-bit integer data and arithmetic.

- The data structures shown above (code link) use an array of U128 unsigned 128-bit integers to store the lower bound of each partition interval.

- The lower bound is found with a binary search (code link) over the _lower_bounds array.

- The lower bound integer is used as a key to a Pony map of type Map[U128, String], which uses a U128-typed integer as its key and returns a String-typed value.

In the end, we get a String data type, which is the name of the Wallaroo worker process that is responsible for the input key. If/when we need to support more generic placement policies, the String type will be changed to a Pony object type that will describe the desired placement policy. Or perhaps it will return an integer, to be used as an index into an array of placement policy objects.
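Putting the pieces above together, a rough Python analogue of this lookup might look like the following. It is a sketch, not Wallaroo’s code: it assumes MD5 keys mapped into a 128-bit integer space, a hypothetical four-way split, and made-up worker names. bisect.bisect_right returns the insertion point to the right of equal values, so subtracting one yields the largest lower bound I with I <= V.

```python
import bisect
import hashlib

class HashPartitions:
    """Partition i covers [lower_bounds[i], lower_bounds[i + 1]) of the
    128-bit MD5 output space; the last partition runs to 2**128."""

    def __init__(self, lower_bounds, owners):
        if not lower_bounds or lower_bounds[0] != 0 or lower_bounds != sorted(lower_bounds):
            raise ValueError("lower bounds must be sorted and start at 0")
        self._lower_bounds = list(lower_bounds)
        self._lb_to_owner = dict(zip(lower_bounds, owners))

    @staticmethod
    def hash_key(key: bytes) -> int:
        # MD5 returns 16 bytes, i.e. an unsigned 128-bit integer.
        return int.from_bytes(hashlib.md5(key).digest(), "big")

    def owner_of_hash(self, v: int) -> str:
        # Binary search for the largest lower bound I with I <= v.
        i = bisect.bisect_right(self._lower_bounds, v) - 1
        return self._lb_to_owner[self._lower_bounds[i]]

    def owner(self, key: bytes) -> str:
        return self.owner_of_hash(self.hash_key(key))

quarter = 2**128 // 4
parts = HashPartitions([0, quarter, 2 * quarter, 3 * quarter],
                       ["worker-a", "worker-b", "worker-c", "worker-d"])
print(parts.owner(b"user:42"))  # one of the four hypothetical workers
```

Swapping the owner strings for policy IDs would give the integer-index variant mentioned above.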

In contrast, Riak Core takes advantage of its limitations, a fixed partition size and a power-of-2 partition count, to simplify its data structure. Given a ring size S, the upper log2(S) bits of the SHA-1 hash value are used as an index into an array of S partition descriptors.
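For comparison, a Python sketch of the Riak Core-style lookup described above: with a power-of-2 ring size, the partition index is a single bit shift of the 160-bit SHA-1 value, an O(1) operation. It follows this article’s description and is not Riak Core’s actual code; in particular, the exact bytes Riak Core hashes are not modeled here.

```python
import hashlib

def ring_partition(key: bytes, ring_size: int) -> int:
    """Index of the partition for `key` in a ring of `ring_size` equal
    partitions: the top log2(ring_size) bits of a 160-bit SHA-1 value."""
    if ring_size <= 0 or ring_size & (ring_size - 1):
        raise ValueError("ring size must be a power of 2")
    bits = ring_size.bit_length() - 1  # log2(ring_size)
    h = int.from_bytes(hashlib.sha1(key).digest(), "big")
    return h >> (160 - bits)           # O(1): one shift, then an array index

print(ring_partition(b"user:42", 64))  # some index in range(64)
```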

Random Slicing implementation: partition placement (where to put the slices?)

The data structures needed for Random Slicing are quite modest. As we saw earlier in the examples in Figure 4 and Figure 7, many of Random Slicing’s benefits come from clever placement & sizing of the hash partitions. What algorithm(s) should we use?

The Random Slicing paper by Miranda and collaborators is an excellent place to start. Some of the algorithms and strategies used to solve “bin packing” problems can be applied to this area, although standard bin packing doesn’t allow you to change the size or shape of the items being packed into the bins.

From a practical point of view, if slices are added to a Random Slicing map often enough, then it’s possible to create partitions so thin that they cannot be represented by the underlying data structure. In code that I’d written a few years ago for Basho (but not used by Riak), the underlying data structure used floating point numbers between 0.0 and 1.0. If nodes are added to such a map one at a time, and that is repeated several dozen times, a partition can become so thin that a double-precision floating point variable can no longer represent the interval.
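The precision limit described above is easy to demonstrate. This Python sketch repeatedly halves a slice of [0.5, 1.0), a simplification of repeatedly adding nodes (which divides slices into other fractions), until the midpoint can no longer be represented as a distinct double. Doubles in [0.5, 1.0) are spaced 2**-53 apart, so only 52 halvings fit.

```python
def count_halvings(lo: float, hi: float) -> int:
    """How many times [lo, hi) can be cut in half (keeping the lower half)
    before the midpoint is no longer a distinct double between lo and hi."""
    splits = 0
    while True:
        mid = lo + (hi - lo) / 2
        if not (lo < mid < hi):
            return splits
        hi = mid
        splits += 1

print(count_halvings(0.5, 1.0))  # 52
```

A 128-bit integer hash space, like the one in the Wallaroo prototype, leaves considerably more room before the same wall is reached.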

When it becomes impossible to slice a partition, then some partitions must be reassigned: coalesce many tiny partitions into a few larger partitions. Any such reassignment requires moving data, for “no good reason” other than to make partition slicing possible again. There’s a rich space for solving optimization problems here, to choose the smallest intervals in such a way as to minimize the total sum of intervals reassigned while maximizing the benefit of creating larger contiguous partitions. Or perhaps you solve this problem another way: upgrade your app to use a different data structure that has a smaller minimum partition size, then deal with the hassle of upgrading everything. Either approach might be the best one for you.
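A deliberately naive Python sketch of the coalescing step: slices thinner than a threshold are handed to the owner of the preceding slice, and adjacent slices with the same owner are then merged. The moved fraction is the cost of the cleanup. It ignores load balance entirely, which a real optimizer would have to weigh against data movement; the threshold and map are hypothetical.

```python
from fractions import Fraction

def coalesce(slices, min_width):
    """Naive cleanup of a sorted (lo, hi, node) slice list covering [0, 1).
    Slices narrower than min_width go to the owner of the preceding slice
    (the following one for the first slice); adjacent slices with the same
    owner are then merged. Returns (new_slices, moved_fraction)."""
    owners = [node for _, _, node in slices]
    moved = Fraction(0)
    for i, (lo, hi, node) in enumerate(slices):
        if hi - lo < min_width and len(slices) > 1:
            new_owner = owners[i - 1] if i > 0 else owners[i + 1]
            if new_owner != node:
                moved += hi - lo  # these keys must be copied elsewhere
            owners[i] = new_owner
    merged = []
    for (lo, hi, _), node in zip(slices, owners):
        if merged and merged[-1][2] == node:
            merged[-1] = (merged[-1][0], hi, node)  # extend previous slice
        else:
            merged.append((lo, hi, node))
    return merged, moved

F = Fraction
m = [(F(0), F(1, 4), "n0"), (F(1, 4), F(251, 1000), "n1"),
     (F(251, 1000), F(1, 2), "n0"), (F(1, 2), F(1), "n1")]
print(coalesce(m, F(1, 100)))  # n0 gets [0, 1/2), n1 gets [1/2, 1); 1/1000 moved
```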

Random Slicing implementation: out-of-bounds topics

If we use the right data structures and write all the code to implement a Random Slicing map, then we’ve solved all our application’s distributed data problems, haven’t we? I’d love to say yes, but the answer is no. Some other things you need to do are:

1. Distribute copies of the Random Slicing map itself, probably with some kind of version numbering or history-preserving scheme.

2. Move your application’s data when the Random Slicing map changes.

3. Deal with concurrent access to your app’s data while it is being moved.

4. Manage all of the data races that problems 1-3 can create.

Random Slicing cannot solve any of these problems. Nor is there a single solution to the equation of your-distributed-application + Random-Slicing = new-resizable-distributed-application. Solutions to these problems are application-specific.

Conclusion: Random Slicing is a Big Step Up From Riak Core’s Hashing

I hope you’ll now believe my claims that Random Slicing is a far more flexible and useful hashing method than Riak Core’s consistent hashing. Random Slicing can provide fine-grained load balancing across machines while minimizing data copying when machines are added, removed, or re-weighted in the Random Slicing map. Random Slicing can be easily extended to support arbitrary placement policies. The problem of extremely hot “Justin Bieber keys” can be solved by adding very thin slices in a Random Slicing map to redirect traffic to a specialized server (or placement policy).

Wallaroo Labs will soon be using Random Slicing for managing processing stages within Wallaroo data processing pipelines. It is very important that Wallaroo be able to adjust workloads across participants without unnecessary data migration. We do not yet need multiple placement policies, but, if/when the time comes, it is reassuring that Random Slicing will make those policies easy to implement.

Online References

All URLs below were accessible in August 2018.

- Bet365. “Riak Core”.

- DeCandia et al. “Dynamo: Amazon’s Highly Available Key-value Store”, 2007.

- Karger et al. “Consistent Hashing and Random Trees: Distributed Caching Protocols for Relieving Hot Spots on the World Wide Web”, 1997.

- Metz, Cade. “How Instagram Solved Its Justin Bieber Problem”, 2015.

- Miranda et al. “Random Slicing: Efficient and Scalable Data Placement for Large-Scale Storage Systems”, 2014.

- Sheehy, Justin. “Riak: Control your data, don’t let it control you”, 2009.

- Wallaroo Labs. “Wallaroo”.

- Wikipedia. “Hash Tables”.

- Wikipedia. “Indirection”.

About the Author

Scott Lystig Fritchie was a UNIX systems administrator for a decade before switching to programming full-time at Sendmail, Inc. in 2000. While at Sendmail, a colleague introduced him to Erlang, and his world hasn’t been the same since. He has had papers published by USENIX, the Erlang User Conference, and the ACM and has given presentations at Code BEAM, Erlang Factory, and Ricon. He is a four-time co-chair of the ACM Erlang Workshop series. Scott lives in Minneapolis, Minnesota, USA and works at Wallaroo Labs on a polyglot distributed system of Pony, Python, Go, and C.

MMS • RSS

Article originally posted on Data Science Central. Visit Data Science Central

In the last part, we saw the basics of artificial intelligence and artificial neural networks (ANNs). As mentioned there, this part focuses on applications of ANNs. ANNs are a very broad concept, and we can find their applications almost anywhere. I have listed some of the major use cases here.

(Source: itnext)

Applications of ANNs

Widrow, Rumelhart and Lehr [1993] argue that most ANN applications fall into the following three categories:

- Pattern classification,

- Prediction and financial analysis, and

- Control and Optimization.

In practice, their categorization is ambiguous since many financial and predictive applications involve pattern classification. A preferred classification that separates applications by method is the following:

- Classification

- Time Series and

- Optimization.

Classification problems involve either binary decisions or multiple-class identification in which observations are separated into categories according to specified characteristics. They typically use cross-sectional data. Solving these problems entails ‘learning’ patterns in a data set and constructing a model that can recognize these patterns. Commercial artificial neural network applications of this nature include:

- Credit card fraud detection reportedly being used by Eurocard Nederland, Mellon Bank, First USA Bank, etc. [Bylinsky 1993];

- Optical character recognition (OCR) utilized by fax software such as Calera Recognition System Fax Grabber and Caere Corporation’s Anyfax OCR engine that is licensed to other products such as the popular WinFax Pro and FaxMaster [Widrow et al. 1993];

- Cursive handwriting recognition being used by Lexicus Corporation’s Longhand program that runs on existing notepads such as NEC Versapad, Toshiba Dynapad etc. [Bylinsky 1993];

- Cervical (Papanicolaou or ‘Pap’) smear screening system called Papnet was developed by Neuromedical Systems Inc. and is currently being used by the US Food and Drug Administration to help cytotechnologists spot cancerous cells [Schwartz 1995, Dybowski et al. 1995, Mango 1994, Boon and Kok 1995, Boon and Kok 1993, Rosenthal et al. 1993];

- Petroleum exploration being used by Texaco and Arco to determine locations of underground oil and gas deposits [Widrow et al. 1993]; and

- Detection of bombs in suitcases using a neural network approach called Thermal Neutron Analysis (TNA), or more commonly, SNOOPE, developed by Science Applications International Corporation (SAIC) [Nelson and Illingworth 1991, Johnson 1989, Doherty 1989 and Schwartz 1989].

In time-series problems, the ANN is required to build a forecasting model from the historical data set to predict future data points. Consequently, these problems require relatively sophisticated ANN techniques, since the sequence of the input data is important in determining the relationship of one pattern of data to the next. This is known as the temporal effect, and more advanced techniques such as finite impulse response (FIR) types of ANN and recurrent ANNs are being developed and explored to deal specifically with this type of problem.
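To illustrate why input order matters, here is a small Python sketch of the tapped-delay-line idea behind FIR-style networks: each training input is the window of preceding values, and the target is the next value. It shows only the data preparation, not a network; the window length and the price series are hypothetical.

```python
def delay_line_windows(series, taps):
    """Pair each value with the `taps` values that precede it, the input a
    tapped delay line (as in FIR-style networks) would present at that step."""
    return [(list(series[i - taps:i]), series[i]) for i in range(taps, len(series))]

prices = [1.00, 1.02, 1.01, 1.05, 1.04]  # hypothetical daily prices
for inputs, target in delay_line_windows(prices, 3):
    print(inputs, "->", target)
```

Shuffling the series would destroy these windows, which is exactly the temporal effect described above.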

Real world examples of time series problems using ANNs include:

- Foreign exchange trading systems: Citibank London [Penrose 1993, Economist 1992, Colin 1991, Colin 1992], Hong Kong Bank of Australia [Blue 1993];

- Portfolio selection and management: LBS Capital Management (US$300m) [Bylinsky 1993] (US$600m) [Elgin 1994], Deere & Co. pension fund (US$100m) [Bylinsky 1993] (US$150m) [Elgin 1994], and Fidelity Disciplined Equity Fund [McGugan 1994];

- Forecasting weather patterns [Takita 1995];

- Speech recognition network being marketed by Asahi Chemical [Nelson and Illingworth 1991];

- Predicting/confirming myocardial infarction, a heart attack, from the output waves of an electrocardiogram (ECG) [Baxt 1995, Edenbrandt et al. 1993, Hu et al. 1993, Bortolan and Willems 1993, Devine et al., Baxt and Skora 1996]. Baxt and Skora reported in their study that the physicians had a diagnostic sensitivity and specificity for myocardial infarction of 73.3% and 81.1%, respectively, while the artificial neural network had a diagnostic sensitivity and specificity of 96.0% and 96.0%, respectively; and

- Identifying dementia from analysis of electrode-electroencephalogram (EEG) patterns [Baxt 1995, Kloppel 1994, Anderer et al. 1994, Jando et al. 1993, Bankman et al. 1992]. Anderer et al. reported that the artificial neural network did better than both Z statistic and discriminant analysis [Baxt 1995].

Optimization problems involve finding solutions for a set of very difficult problems known as NP-complete (nondeterministic polynomial time) problems. Examples of problems of this type include the traveling salesman problem, job-scheduling in manufacturing, and efficient routing problems involving vehicles or telecommunication. The ANNs used to solve such problems are conceptually different from the previous two categories (classification and time-series), in that they require unsupervised networks, whereby the ANN is not provided with any prior solutions and thus has to ‘learn’ by itself without the benefit of known patterns. Statistical methods that are equivalent to this type of ANN fall into the clustering algorithms category.

Conclusion:

These were some of the use cases of artificial neural networks. In the next part, we will see the biological background and the history of the development of artificial neural networks.

MMS • RSS

Article originally posted on Data Science Central. Visit Data Science Central

I wrote about this long ago (see here in 2014), and so did many other practitioners. This new post shows, I think, more maturity: a more coherent view of the various data scientist roles in the industry (now that things are getting clearer for most hiring managers), and of how these scientists interact with each other and with other teams. It is also a short read for the busy professional.

Source for picture: AnalyticsInsight

There are all sorts of data scientists.

- Some are BI analysts, and rarely code (they even use GUIs to access databases, so they don’t even write SQL queries; the tool does that for them. However, they must understand database schemas). But they are the guys who define metrics and work with management to identify data sources, or to create data. They also work on designing data dashboards / visualizations with various end-users in mind, ranging from security, finance, sales, and marketing, to executives.

- Data engineers get the requirements from these BI analysts to set up the data pipelines, and have the data flow throughout the company and outside, with little pieces (usually summarized data) ending up on various employee laptops for analysis or reporting. They work with sys admins to set up data access, customized for each user. They are familiar with data warehousing and the different types of cloud infrastructure (internal, external, hybrid), and they know how to optimize data transfers and storage, balancing speed with cost and security. They are very familiar with how the Internet works, as well as with data integration and standardization. They are good at programming and deploying systems that are designed by the third type of data scientists, described below.

- Machine learning data scientists design and monitor predictive and scoring systems, have an advanced degree, and are experts in all types of data (big, small, real time, unstructured, etc.). They perform a lot of algorithm design, testing, fine-tuning, and maintenance. They know how to select/compare tools and vendors, and how to decide between home-made machine learning and tools (vendor or open source). They usually develop prototypes or proofs of concept that eventually get implemented in production mode by data engineers. Their programming languages of choice are Python and R.

- Data analysts are junior data scientists doing a lot of number crunching, data cleaning, and working on one-time analyses and usually short-term projects. They interact with and support BI or ML data scientists. They sometimes use more advanced statistical modeling techniques.

Depending on the size of the company, these roles can overlap. Many times, an employee is given a job title that does not match what she is doing (typically, “data scientist” for a job that is actually “data analyst”.)

For related articles from the same author, click here or visit www.VincentGranville.com. Follow me on LinkedIn, or visit my old web page here.
