Month: December 2018

MMS • RSS

Article originally posted on Data Science Central. Visit Data Science Central

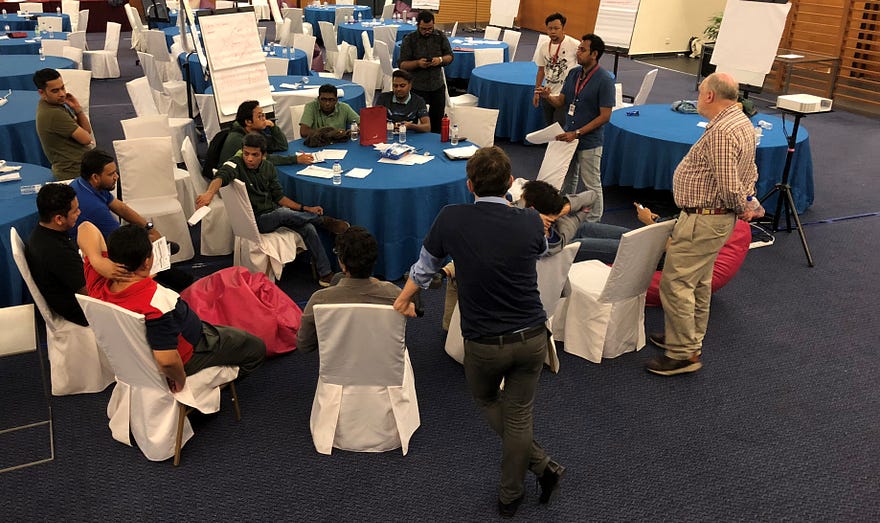

Organizing Data Unchained Malaysia was one of the most rewarding things I have ever done in my career. In November 2018, we invited 100 brilliant data enthusiasts, out of 300 candidates, to a resort in Kuala Lumpur to solve a data problem and to create a suitable business model for it.

We gave participants anonymized sample internet and phone call data, connected car records and some points of interest. We asked them to predict the destinations towards which cars are moving, to create a safe driving index and to build a business plan that uses these two models commercially — all this in just 24 hours. It was crucial for participants to possess a good blend of technical and business skills to make it through the competition.

Participants and judges in the competition

The datathon, or hackathon for data enthusiasts, was a collaboration between:

● A Malaysian digital and telecom conglomerate operating in 11 countries in South Asia and South East Asia, where I oversee Analytics / A.I.

● The Asia School of Business, a joint venture between the Malaysian Central Bank and the Massachusetts Institute of Technology (MIT).

To set up the context, we had three main objectives when we launched this datathon:

● Energizing our organization and accelerating our data-driven cultural journey.

● Strengthening our employer brand and hiring the best available talent.

● Giving back to society and developing a talent pool of data professionals in Malaysia.

I learned as many things organizing this datathon as the participants themselves learned while solving the problem. These are my learnings from the perspective of a Chief Data Analytics Officer organizing a corporate datathon.

CHOOSING PARTICIPANTS:

1) Participants must be both internal and external to your organization. Inviting external participants (up to 70%) will not only contribute to your employer brand and hiring pipeline; it will also energize your internal teams (up to 30% of participants) even more, since external participants are not subject to the biases and groupthink of your organization.

2) Participants must be deeply cross-functional. Around 60% of them should be technical (data scientists, data engineers, full-stack developers, data architects and IT professionals) and 40% should have a background in different areas of business (most importantly marketing, operations, design and customer experience).

Judges in Data Unchained 2018

WORKING WITH PARTNERS:

3) You need a business school as a partner, not a technical school. Technical schools or universities might help you attract technical participants, but analytics is not about math; it is about solving real business problems through math. Find the most reputable business school in your city or country and partner with them. The business school should provide the venue, co-lead marketing activities and supply a significant pool of their own students as participants.

4) You need a TV station or a media company as a partner if branding is one of your objectives. Getting into traditional media will be difficult unless you get a mainstream local politician or a celebrity as a guest speaker at your datathon. You will be able to publish your press release on some specialized websites, but nobody reads press releases anyway.

5) You need industry or start-up partners that are relevant to your industry and to the analytics / A.I. community. Industry partners can market the event through their own channels, which was extremely useful in our case. Partners can also contribute excellent judges or keynote speakers. Additionally, cloud vendors might provide infrastructure services or interesting external datasets for the competition.

6) You might need sponsors, though organizing a datathon is not expensive. Venue, food, prizes sizable enough to attract talent and a few travel expenses are all you need. If your budget is tight, finding sponsors among your industry partners is a very realistic option.

24 teams working on their data and business models

MARKETING YOUR EVENT:

7) Paid Facebook advertising did not work; LinkedIn did, and it was free. Business-oriented channels are more suitable for the kind of profiles you need. Anyway, if they are not on LinkedIn, you are not going to hire them, are you? (Yes… I know your CEO is not on LinkedIn, but that is another story.)

8) You need to bring in 3 to 5 heavyweight judges (including guest speakers) who have an interesting story to tell, ideally from abroad. Reputable analytics professors and analytically oriented C-level executives from your organization or from leading industry players make excellent judges.

CHOOSING THE PROBLEM:

9) You need to work on a real business problem that is open-ended and challenging. It does not matter if participants cannot finish it; what matters is making people think big and out of the box. Narrowly defined questions such as those in Kaggle.com competitions, where participants are asked to maximize a few technical metrics, are not that useful in business, because business is open-ended and ever-changing. It adds a lot of value to the competition if you can bring in a person from your organization or from a partner to explain which of her real business problems she thinks data analytics might help solve. In our case, we were lucky enough to partner with GoCar, a Malaysian online car rental start-up.

10) The problem should not be too specific to your industry (like churn prediction in telecom). Choosing a topic from a new, adjacent area where your business might play in the future is more energizing for internal teams, and it creates better synergies between internal and external participants.

11) Everything can and should be challenged. There are no rules about problem solving to be respected. Participants need to assume that everything can start from scratch.

12) Teams need to have a working prototype which generates actionable insights out of data. This is not an idea pitching competition, but a demo followed by a business case presentation with a clear development path including all technical and business steps required.

Awarding prizes

GETTING THE DATA:

13) You do not need a massive dataset unless you are focusing on deep learning. Keep your data manageable (up to a few GB), since you are looking for creativity rather than algorithm fine-tuning. A smaller dataset can be processed faster (even on a laptop) and transferred faster.

14) Do not limit yourself to internal data. Internal data does not have the potential to change your business. Only external data has that potential. You can get data from industry partners or public sources. You can also scrape the Web or buy data.

15) You need data from dissimilar and unrelated sources. This is obvious to a data scientist: the power of data increases dramatically when you connect information that was never connected before. Working out how to connect it is often difficult, but you can ask participants to solve that. Also, encourage participants to use any external data they can find on their own.

16) It is far easier to manage the whole data life cycle for the datathon inside a cloud environment than to provide copies to participants. However, participants do prefer to have their own copy so that they can analyze it with their own tools.

17) It is mandatory to properly anonymize all information used in the datathon to ensure data confidentiality. Introducing a small amount of random (white) noise into the data might also help.
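
The anonymization step in point 17 can be made concrete with a small sketch. It assumes a hypothetical record layout (`subscriber_id`, `lat`, `lon`, `timestamp`) and uses two standard techniques that are not necessarily what the organizers used: keyed hashing (HMAC-SHA256) to pseudonymize identifiers, so that datasets can still be joined on the resulting token, and zero-mean Gaussian noise, one common form of white noise, to blur GPS positions. The key and the noise level `sigma_deg` are illustrative assumptions.

```python
import hashlib
import hmac
import random

# Hypothetical key: store it outside the datathon environment so that
# participants cannot recompute or reverse the pseudonyms.
SECRET_KEY = b"replace-with-a-securely-stored-key"


def pseudonymize(identifier: str, key: bytes = SECRET_KEY) -> str:
    """Map an identifier to a stable keyed hash (HMAC-SHA256, hex-encoded).

    The same input always yields the same token, so tables can still be
    joined on it, but the original value cannot be recovered without the key.
    """
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def add_white_noise(value: float, sigma: float, rng: random.Random) -> float:
    """Add zero-mean Gaussian noise with standard deviation `sigma`."""
    return value + rng.gauss(0.0, sigma)


def anonymize_record(record: dict, rng: random.Random, sigma_deg: float = 0.001) -> dict:
    """Return an anonymized copy of a (hypothetical) connected-car record.

    sigma_deg = 0.001 degrees is roughly 100 m of jitter; an assumed value.
    """
    return {
        "subscriber": pseudonymize(record["subscriber_id"]),
        "lat": add_white_noise(record["lat"], sigma_deg, rng),
        "lon": add_white_noise(record["lon"], sigma_deg, rng),
        "timestamp": record["timestamp"],
    }


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed only to make the demo reproducible
    raw = {"subscriber_id": "60123456789", "lat": 3.1390, "lon": 101.6869,
           "timestamp": "2018-11-10T08:15:00"}
    print(anonymize_record(raw, rng))
```

A keyed hash is used rather than a plain hash because identifiers such as phone numbers have a small keyspace, and an unkeyed hash of them could be reversed by brute force.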

SETTING THE FORMAT:

18) The competition should be by teams, but it should be possible to identify individual contributions. In the real world we work in teams, but if you are planning to extend an employment offer to some of the participants, you must identify them first. As a result, you need both individual and team prizes. The best way to identify individual contributions is to mingle with participants and see how they work and think. Tell your team to sit with them and chat with them without directly helping them, and to try to gauge whether these participants would be excited to work in the team with us. A datathon is a 24-hour undercover interview.

19) Participants should be allowed to choose their own teams. You could argue that in the real world you cannot choose your colleagues, but the reality is that not being able to choose their team is a big drawback for participants. In our case we had 24 teams of 4 or 5 participants each.

20) The competition must be face-to-face. There can be online sections, and some participants might join only online. However, face-to-face interactions are key if your objectives include branding, hiring or corporate social responsibility.

21) The 24-hour format works very well because it lets you introduce the problem and host a few keynote speakers, have participants solve it in 24 hours, and select the winners and hand out the awards, all in one weekend. However, it requires good facilities that allow participants to stay overnight if they wish to do so.

Judging technical models at 3:00 am

JUDGING:

22) Your team needs to have solved the problem first; that solution is the benchmark against which you measure participants on model performance and creativity.

23) Judging needs to run in two stages: a technical pre-selection phase followed by a business-oriented phase. You and your analytics team, who defined the problem and prepared the data, should also evaluate the submissions. After the technical pre-evaluations, only teams who have a good working model will be allowed to pitch their business model to the judges in the second phase.

24) Judging will be extremely exhausting: in our case, technical deliberations to judge 24 teams took 6 hours after all participants went back home. If the datathon is exhausting for participants, imagine how it is for your team, who is observing and mingling with all participants, helping with logistics and performing the technical pre-selection.

SUSTAINING CULTURAL CHANGE:

25) Develop the content within your team to energize your team. You can use an event organizer and outsource most things to them, but there are three things you should not outsource: format definition, problem definition and data preparation. Doing them in-house is also an excellent opportunity for team-building. It is indeed a lot of fun to organize a datathon.

26) Continue the datathon vibe in your company to keep the mindset change going. A datathon is a spark that can temporarily energize your company around data analytics. But if you do not put in place the right processes to sustain the momentum, that surge of enthusiasm will dissipate. Analytics training programs, internal mini-datathons in different parts of your company, or a “data-first” imperative can help sustain the energy.

27) It is the best thing we have done for our employer brand equity. A datathon provides a very targeted spotlight for your organization among data enthusiasts, who are the people you want to attract as employees or partners. It also gives participants the opportunity to interact with you and your analytics team during the whole event and to get to know your company better. As I said above, at the end of the day, a datathon is a 24-hour interview.

WINNERS:

28) External participants will always do better than internal participants. External participants join because they want to, while internal participants are, in general, told to join by their boss, which is less motivating.

29) The winners will have the right combination of technical and business skills. Technical-only teams will fail.

30) You will never be able to hire the one or two top winners; top winners can go anywhere. But there is so much talent in the competition to build lasting relationships with.

*****

Disclaimer: Opinions in the article do not represent the ones endorsed by the author’s employer.

ABOUT THE AUTHOR

Pedro URIA RECIO is a thought leader in artificial intelligence, data analytics and digital marketing. His career has encompassed building, leading and mentoring diverse high-performing teams, the development of marketing and analytics strategy, commercial leadership with P&L ownership, leadership of transformational programs and management consulting.

Article originally posted on InfoQ. Visit InfoQ

Key Takeaways

- Making governments fit for the internet era will require new public institutions and empowered teams that combine emerging digital skills with traditional government expertise.

- Digital transformation is not an IT programme; it is about applying the culture, practices, processes & technologies of the Internet-era to respond to people’s raised expectations.

- Governments that have successfully applied digital transformation have saved substantial amounts of money while providing services that are simpler, clearer and faster for citizens to use.

- Service standards and spending controls are powerful levers for driving change across large, fragmented organisations.

- The strongest digital governments are those that have radically changed the way they work.

The book Digital Transformation at Scale by Andrew Greenway, Ben Terrett, Mike Bracken and Tom Loosemore, explores what governmental and other large organizations can do to make a digital transformation happen. It is based on the authors’ experience designing and helping to deliver the UK’s Government Digital Service (GDS).

InfoQ interviewed Greenway about digital transformations in governmental organizations.

InfoQ: Why did you write this book?

Andrew Greenway: After we left the UK Government Digital Service (GDS) in 2015, we knew there were lots of stories to tell. We found ourselves telling most of the same tales again and again. Writing a book was our way of capturing the lessons that seem to apply pretty much universally to large, old organisations. It’s not the full GDS story by any stretch; there’s hundreds of those.

And to be honest, I’d always wanted to write a book.

InfoQ: For whom is it intended?

Greenway: Anyone who knows their organisation is out of kilter with the internet era, and is desperate to do something about it. There are so many people emerging from a big programme that has failed, or who are fed up with endless bleatings of ‘change’ from management without seeing any real evidence for it. The book is written for people who know something’s up and want to get things done, as opposed to just talking about them.

We originally thought it would be mostly useful for public servants and officials – government is the backdrop for most of the stories – but we’ve found that much of what’s in the book applies to pretty much any industry that began its life on paper.

InfoQ: How do you define “digital transformation”?

Greenway: An organisation going through the process of applying the culture, practices, processes & technologies of the Internet-era to respond to people’s raised expectations.

Digital transformation has become a tired phrase, which is a pity. Like any bit of language that enjoys some reflected success, it has been bastardised and marketed into semi-obsolescence. Plenty of deeply mediocre work will be going on in the name of ‘digital transformation’ right now.

We agonised a bit over putting it in the book title, but in the end decided it was better to try and claim the term for something positive, rather than invent some new jargon that would confuse people.

InfoQ: What reasons do governmental organizations have to start a digital transformation?

Greenway: Often it comes from a recognition that the present state of affairs is unacceptable – either on the grounds of exorbitant cost, services that are clearly well below the standard people now expect, or a massive, visible programme failure with IT fingerprints on it. More positively, it can come from a leader (not always someone at the very top), who sees it as a lever to radically change their organisation for the better.

The other thing is governments don’t have to start a digital transformation. They rarely go out of business. You could argue the internet is not the existential threat to them it has been to the traditional media, hospitality and music industries, and is rapidly becoming for retail, banking and insurance. That said, voters notice bad public services. When an administration is seen as incompetent, it doesn’t tend to survive very long. That can focus minds.

InfoQ: What’s needed to start a digital transformation?

Greenway: A strong political leader, a bold mission, a realistic but impactful first project, and an excellent, small, multidisciplinary team.

That first team will ship the first digital services, and it will ship the culture of what is to follow.

InfoQ: What things can be done in large organizations to establish agile teams consisting of people with the right skills?

Greenway: For many organisations, agile teams represent a very new way of working. It isn’t really possible to learn that in a classroom, or even be coached towards it. To really establish agile within an organisation, you need to bring in the full team, not just ones and twos (‘the unit of delivery is the team’ was a GDS mantra). That team should be given the conditions that allow them to deliver quickly, work in the open, and become a visible and tangible demonstration of what an agile team is. Some of that is intensely practical – having a decent workspace for them to all sit together, for example. Some of it is more challenging for institutions – moving to governance that is based on show and tells rather than steering boards is a big culture shock for many.

Without that team showing what it means for real, agile is just words on a page for people, and not very clear ones at that.

InfoQ: What are the main challenges of digital transformations in governments? How can we deal with them?

Greenway: Inertia is a difficult thing to wrangle in governments; ‘the way things are done around here’ generates in-built momentum, and is hard to redirect. A lot of the hard yards come down to challenging that, and picking your battles wisely. You can’t fix all the things at once. Complexity is the cousin of inertia – trying new things looks even less appealing when working life is seriously busy and complicated already; and governments are always busy and complicated.

Fighting inertia often comes down to being ruthlessly focused on delivering things that benefit the user, not the whims of the organisation. Tackling complexity is a case of being as enthusiastic about stopping things as you are about creating them. People never get enough credit for stopping things, but doing it well requires courage, innovation and creativity. Bringing simplicity where there was chaos is a good test of whether digital transformation is genuine or not.

InfoQ: How can you measure user satisfaction with digital services provided by governments?

Greenway: With a lot of care, and having your eyes wide open about how much to trust those numbers.

We included user satisfaction as one of the four performance indicators for all new and redesigned digital services. Very quickly we found out that no matter how you measure it, user satisfaction figures actually tell you very little about the actual quality of the service.

Say you’re paying your tax online. No matter how first-rate the digital experience of that public service is, very few people will take real pleasure from it. That service might have a beautifully optimised user experience, but poor user satisfaction numbers.

Over time, we focused much more on things like how many users successfully completed a transaction end-to-end, and reducing the time it took them. Public services are different to the private sector in this respect. The total number of users and the ‘stickiness’ of the service is often irrelevant as a performance measure; when it comes to public services, citizens want to do whatever’s necessary, and get on with their lives.
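
Greenway's shift from satisfaction scores to completion measures can be illustrated with a minimal sketch. The `Transaction` record and its fields are hypothetical, not GDS's actual data model; completion rate is taken here as completed transactions divided by started transactions, and time is summarized with the median so that a few very slow sessions do not dominate.

```python
from dataclasses import dataclass
from statistics import median
from typing import List, Optional


@dataclass
class Transaction:
    """One user's attempt at a service (hypothetical log record)."""
    completed: bool
    duration_s: Optional[float] = None  # time to finish; None if abandoned


def completion_rate(transactions: List[Transaction]) -> float:
    """Share of started transactions that were completed end-to-end."""
    if not transactions:
        return 0.0
    return sum(t.completed for t in transactions) / len(transactions)


def median_completion_time(transactions: List[Transaction]) -> Optional[float]:
    """Median duration (seconds) of completed transactions, or None if none."""
    times = [t.duration_s for t in transactions
             if t.completed and t.duration_s is not None]
    return median(times) if times else None


if __name__ == "__main__":
    log = [
        Transaction(True, 300.0),
        Transaction(True, 420.0),
        Transaction(False),
        Transaction(True, 360.0),
    ]
    print(completion_rate(log))         # 0.75
    print(median_completion_time(log))  # 360.0
```

Unlike a satisfaction score, both numbers move directly when a redesign removes a step or a failure point from the journey.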

InfoQ: How does the GDS service standard look and what purpose does it serve?

Greenway: The standard was a list of criteria that we asked all new or redesigned digital services to meet before they could be launched to the public. These points were not just about the look and feel of the service; they also related to who was on the team, how they worked, what metrics they measured, how they ensured the service was secure, and so on.

The criteria themselves have been iterated a few times (and the idea of a standard has been adopted in lots of other countries), but their intention is the same. Enforcing a standard is a practical way of changing working practices at scale. Enforcement is important. A lot of the time, we found ourselves saying ‘no’ to services from departments where colleagues had wanted to kill them off, but lacked the mandate to do so. Saying no, and being open, honest and consistent about why, is the way to change behaviour. It’s not a popular job, but it protects the people who are willing and able to work in the right way.

About the Book Authors

All four authors are partners in Public Digital, a consultancy that helps large international organisations, governments and senior leaders to deliver digital transformation at scale.

Andrew Greenway worked in five government departments, including the Government Digital Service, where he led the team that delivered the UK’s digital service standard. He also led a government review into applications of the Internet of Things, commissioned from Government’s chief scientific advisor by the UK Prime Minister in 2014.

Ben Terrett was director of design at the Government Digital Service, where he led the multidisciplinary design team for GOV.UK which won the Design of the Year award in 2013. Before working in government, Terrett was design director at Wieden + Kennedy, and co-founder of The Newspaper Club. He is a governor of the University of the Arts London, a member of the HS2 Design Panel, and an advisor to the London Design Festival. He was inducted into the Design Week Hall of Fame in 2017.

Mike Bracken was appointed executive director of digital for the UK government in 2011 and the chief data officer in 2014. He was responsible for overseeing and improving the government’s digital delivery of public services. After government, he sat on the board of the Co-operative Group as chief digital officer. Before joining the civil service, Bracken ran transformations in a variety of sectors in more than a dozen countries, including as digital development director at Guardian News & Media. He was named UK Chief Digital Officer of the year in 2014 and awarded a CBE.

Tom Loosemore wrote the UK’s Government Digital Strategy, and served as the GDS’s deputy director for five years. He led the early development of GOV.UK. Outside government, Loosemore has also worked as the director of digital strategy at the Co-Operative Group, as a senior digital advisor to OFCOM, and was responsible for the BBC’s Internet strategy between 2001 and 2007.

Article originally posted on InfoQ. Visit InfoQ

In a recent blog post, Amazon introduced a new service called AWS Cloud Map, which discovers and tracks AWS application services and cloud resources. With the rise of microservice architectures, it has become increasingly difficult to manage the dynamic resources in these architectures. Using AWS Cloud Map, developers can monitor the health of databases, queues, microservices and other cloud resources, which they register under custom names.

Service maps are nothing new, but the transient nature of modern infrastructure can trip up some traditional tools. Amazon describes the problem AWS Cloud Map is meant to solve as follows:

Previously, you had to manually manage all these resource names and their locations within the application code. This became time consuming and error-prone as the number of dependent infrastructure resources increased or the number of microservices dynamically scaled up and down based on traffic. You could also use third-party service discovery products, but this required installing and managing additional software and infrastructure.

Ensuring resources are dynamically kept up to date is a key feature of AWS Cloud Map. Abby Fuller, a senior technical evangelist at AWS, explains how this is achieved:

AWS Cloud Map keeps track of all your application components, their locations, attributes and health status. Now your applications can simply query AWS Cloud Map using AWS SDK, API or even DNS to discover the locations of its dependencies. That allows your applications to scale dynamically and connect to upstream services directly, increasing the responsiveness of your applications.

Registering your web service and cloud resources in AWS Cloud Map is a matter of describing them using custom attributes including deployment stage and versions. Subsequently, your applications can make discovery calls and AWS Cloud Map will return the locations of resources based upon the parameters passed. Fuller characterizes the benefits of this approach as:

Simplifying your deployments and reducing the operational complexity for your applications.

In addition to resource tracking, AWS Cloud Map also provides proactive health monitoring. Fuller explains:

Integrated health checking for IP-based resources, registered with AWS Cloud Map, automatically stops routing traffic to unhealthy endpoints. Additionally, you have APIs to describe the health status of your services, so that you can learn about potential issues with your infrastructure. That increases the resilience of your applications.

Adding resources to AWS Cloud Map starts with creating a namespace through the AWS console or CLI. When creating the namespace, an administrator decides whether resources can be discovered only through the AWS SDK and API, or also through DNS. DNS discovery requires IP addresses for all the resources you register.

Services such as Amazon Elastic Container Service (ECS) and AWS Fargate are tightly integrated with AWS Cloud Map, which simplifies enabling discovery. Fuller explains:

When you create your service and enable service discovery, all the task instances are automatically registered in AWS Cloud Map on scale up, and deregistered on scale down. ECS also ensures that only healthy task instances are returned on the discovery calls by publishing always up-to-date health information to AWS Cloud Map.
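
To make the discovery model described above concrete, here is a toy, in-memory sketch. It is not the AWS API: `ToyRegistry`, `Instance` and their methods are hypothetical names. It mirrors three ideas from the article: instances are registered with custom attributes such as deployment stage and version, discovery calls filter on those attributes, and unhealthy instances are left out of the results. The register and deregister calls stand in for what ECS does automatically on scale-up and scale-down.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Instance:
    """A registered resource: an ID, an address and custom attributes."""
    instance_id: str
    address: str
    attributes: Dict[str, str] = field(default_factory=dict)
    healthy: bool = True


class ToyRegistry:
    """In-memory stand-in for a service registry (illustrative only)."""

    def __init__(self) -> None:
        self._services: Dict[str, List[Instance]] = {}

    def register(self, service: str, instance: Instance) -> None:
        """Add an instance, e.g. when a new task starts on scale-up."""
        self._services.setdefault(service, []).append(instance)

    def deregister(self, service: str, instance_id: str) -> None:
        """Remove an instance, e.g. when a task stops on scale-down."""
        self._services[service] = [
            i for i in self._services.get(service, []) if i.instance_id != instance_id
        ]

    def set_health(self, service: str, instance_id: str, healthy: bool) -> None:
        """Record the result of a health check for one instance."""
        for i in self._services.get(service, []):
            if i.instance_id == instance_id:
                i.healthy = healthy

    def discover(self, service: str, **query: str) -> List[str]:
        """Return addresses of healthy instances whose attributes match
        every key/value pair in `query`."""
        return [
            i.address
            for i in self._services.get(service, [])
            if i.healthy and all(i.attributes.get(k) == v for k, v in query.items())
        ]


if __name__ == "__main__":
    registry = ToyRegistry()
    registry.register("orders", Instance("i-1", "10.0.0.11:8080", {"stage": "prod", "version": "2"}))
    registry.register("orders", Instance("i-2", "10.0.0.12:8080", {"stage": "prod", "version": "2"}))
    registry.register("orders", Instance("i-3", "10.0.0.13:8080", {"stage": "beta", "version": "3"}))
    registry.set_health("orders", "i-2", False)        # failed health check
    print(registry.discover("orders", stage="prod"))   # ['10.0.0.11:8080']
    registry.deregister("orders", "i-1")               # scale-down
    print(registry.discover("orders", stage="prod"))   # []
```

In the real service, the equivalent query in the AWS SDK for Python (boto3) is the `servicediscovery` client's `discover_instances` call, which takes `NamespaceName`, `ServiceName`, `QueryParameters` for attribute filters and a `HealthStatus` filter.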

AWS Cloud Map is currently available in the following regions: US East (N. Virginia), US East (Ohio), US West (N. California), US West (Oregon), Canada (Central), Europe (Frankfurt), Europe (Ireland), Europe (London), Europe (Paris), Asia Pacific (Singapore), Asia Pacific (Tokyo), Asia Pacific (Sydney), Asia Pacific (Seoul), and Asia Pacific (Mumbai).

Article originally posted on InfoQ. Visit InfoQ

Digital natives are familiar with lean startup and agile practices. They go further by combining agile with the Toyota Production System, which enables them to experiment with ideas, spread innovations, and scale fast.

Fabrice Bernhard, co-founder and CEO of Theodo UK, presented “what lean can learn from digital natives” at Lean Digital Summit 2018. InfoQ is covering this event with summaries, articles, and Q&As.

Digital native companies like Google, Apple, Facebook, and Amazon (GAFAs) share some very effective management practices with Toyota, said Bernhard. He mentioned OKRs, which are similar to Toyota’s Hoshin-Kanri, and agile teams that have a lot of autonomy and use visual management to self-inspect and share their progress with the rest of the organisation.

What digital native companies have, that traditional companies like Toyota do not and which contributes to making them scale faster, is a culture of software and connectedness, he said. Typical outcomes are, for example, continuous product updates over the air, open-sourcing tools to train and attract talent outside the organisation, or using the latest digital technologies to communicate in real-time internally and with their ecosystem. This helps them spread innovation much faster internally and with the outside world.

What lean teaches us is that inspecting the way you build your product at the most granular level is a huge source of innovation, said Bernhard. His advice for a company trying to accelerate innovation is to create that culture, with many (if not most) teams doing kaizen, i.e. teams inspecting the way they work at a very detailed level to come up with ideas, and then experimenting with different ways to improve their work. This should create a flow of innovation coming from the whole organisation, he said.

Once this flow of innovation exists, set up an internal social network and organise regular meetings between “guilds” of people who share similar topics of innovation to ensure innovation is spread effectively across teams that can benefit from it, he advised.

InfoQ interviewed Bernhard about what lean can learn from the digital natives.

InfoQ: Are the agile of digital natives and the lean of Toyota compatible?

Fabrice Bernhard: The successful adoption of some lean management practices by the GAFAs, like Hoshin-Kanri and visual management, shows that digital natives are receptive to lean culture. Similarly, the emphasis on retrospective and daily inspection in the agile methodology, used by most startups, reminds us that agile has deep roots going all the way back to the Toyota Production System. The hardest challenge is therefore not mixing both the agile culture of digital natives and the lean culture of Toyota; the challenge is adopting both individually in your organisation in the first place.

InfoQ: How did you mix the culture of digital natives with a lean culture?

Bernhard: At Theodo, once we had started adopting both agile and lean practices, we quickly experimented with mixing both cultures successfully.

The first initiatives were around putting our weekly client satisfaction survey (the voice of the customer in lean) on the Scrum board, and training Scrum teams to use lean problem-solving frameworks whenever an indicator was red. These initiatives helped teams to be more autonomous and helped us scale faster.

More recent initiatives include, for example, making time for an improvement kaizen alongside the typical Scrum sprint. This allows teams to invest much more time than a traditional agile team in analysing the way they work and improving it. This has led to incredible innovations which, because we have the culture of digital natives, have spread extremely fast across the organisation through communication tools like our internal social network or our shared code repositories on GitHub.

Article originally posted on Data Science Central.

How will AI evolve and what major innovations are on the horizon? What will its impact be on the job market, economy, and society? What is the path toward human-level machine intelligence? What should we be concerned about as artificial intelligence advances?

Architects of Intelligence contains a series of in-depth, one-to-one interviews in which New York Times bestselling author Martin Ford looks for the truth about AI: what we need to know, and how to prepare for its coming impact on our lives.

Martin explores our future by meeting with twenty-three of the most important people in artificial intelligence today, including ‘the Godfather of AI’, Geoffrey Hinton, and Andrew Ng, co-founder of Google Brain, to separate the truth from the hype and learn how we need to prepare for AI.

What You Will Learn:

- The state of modern AI

- How AI will evolve and the breakthroughs we can expect

- Insights into the minds of AI founders and leaders

- How and when we will achieve human-level AI

- The risks associated with AI and its impact on society and the economy

About the Author

Martin Ford is a futurist, the founder of a Silicon Valley-based software development firm, and the author of two books: the New York Times bestseller Rise of the Robots: Technology and the Threat of a Jobless Future (winner of the 2015 Financial Times/McKinsey Business Book of the Year Award and translated into more than 20 languages) and The Lights in the Tunnel: Automation, Accelerating Technology and the Economy of the Future.

Article originally posted on Data Science Central.

The current advice for data scientists is not to become a generalist. You can read recent articles on this topic, for instance here. In this blog, I explain why I believe the opposite. I wrote about this here not long ago, and in this article I provide additional arguments for why it helps to be a generalist.

Of course, it is difficult, and probably impossible, to become a data science generalist just after graduating. It takes years to acquire all the skills, yet you don’t need to master all of them. It might be easier for a physicist, engineer, or biostatistician who is learning data science after years of corporate experience than for a data scientist with no business experience. Possibly the easiest way to become one is to work for start-ups or small companies. There you will wear many hats, since you will probably be the only data scientist in your company, and you will change jobs more frequently than you would at a big company. By contrast, a big company expects you to work in a very specialized area, though it does not hurt to be a generalist, as I will illustrate shortly. Being a specialized data scientist could put you on a very predictable path that limits your career growth and flexibility, especially if you want to start your own company down the line. Let’s start by explaining what a data science generalist is.

The data science generalist

The generalist has experience working in different roles and different environments, for instance, over a period of 15 years, having worked as a

- Business analyst or BI professional, communicating insights to decision makers, mastering tools such as Tableau, SQL and Excel; or maybe being the decision maker herself

- Statistician / data analyst with expertise in predictive modeling

- Expert in algorithm design and optimization

- Researcher in an academic-like setting, or experience in testing / prototyping new data science systems and proofs of concept (POC)

- Builder / architect: designing APIs, dashboards, and databases, and deploying and maintaining some modest production systems yourself

- Programmer (statistical or scientific programmer with exposure to high performance computing and parallel architectures – you might even have designed your own software)

- Consultant, directly working with clients, or adviser

- Manager or director, rather than individual contributor

- Professional with roles in various industries (IT, media, Internet, finance, health care, smart cities) in both big and small companies, in various domains ranging from fraud detection to optimizing sales or marketing, with proven, measurable accomplishments

In short, the generalist has been involved, at one time or another, in all phases of the data science project lifecycle.

The generalist might not command a higher salary, but has more flexibility career-wise. Even in a big company, when downsizing occurs, it is easier for the generalist to make a lateral move (get transferred to a different department), than it is for the “one-trick pony”.

Timing is important too. If you become a generalist at age 50 (as opposed to 45), it might not help, as getting hired becomes more difficult past 45. Still, even at 50 or older, it opens up some possibilities, for instance starting your own business. And if you can prove that you have consistently broadened your skills throughout your career, as generalists do by definition, it will be easier to land a job, especially if your salary expectations are reasonable and your health is not an issue for your future employer.

An example

I consider myself to be a generalist, and here, I explained how I became one, and the benefits that it provides both in small and large companies, and especially as an entrepreneur. It is true that there are many areas that I do not master, but as a generalist, you can hire the right employees to do what you are not so good at, or what you enjoy less.

I started my career in academia, and I was expected to become a university professor. I failed an interview for such a position at Iowa State University around 1997, and realized soon after that there was too much competition for me to succeed in this endeavor. Twenty years later, I think this is the best thing that happened to me. Not only do I continue to do research and publish (independently), but I don’t have the pressure to “publish or perish” and can write articles or books, even state-of-the-art ones, that are accessible to a much larger audience. In the process, I had to unlearn how to write esoteric scientific articles. But I recognize that I did gain strong research training during my PhD and post-doc years.

Later on, between 1998 and 2002, I worked for a number of Internet companies during the volatile bubble years of the Internet. No job was safe, and I had to change jobs multiple times. That was the beginning of my corporate years. Having worked with many smart people in different places, it was also the beginning of building a large network of influential people and of getting noticed. While market volatility scares many people, if you can handle the risks (I was young back then, which makes it easier), it can boost your career and experience as a generalist. I played the stock market back then, sold my home in the Bay Area at the best time, and worked for Visa and Wells Fargo for a while, which further diversified my experience. This would not have been very successful without trusting my gut instincts (what to do, when to sell or buy, or where: activities that involve analyzing data but also intuition).

At Wells Fargo, even though I was hired as a BI analyst, my biggest contribution was identifying a big IT issue that made all the Internet logs completely wrong. I am still remembered at that bank for fixing this multi-million dollar issue. I could not have done that had I been a pure data scientist working exclusively on the mission I was hired for in the first place. My previous experience in failed Internet companies is what helped me, as well as asking for a salary a little below average to increase my chances of being hired.

At NBCi (at their peak they had 1,600 employees), I was the key guy who helped them NOT sign a multi-million dollar deal with Netscape, based on past experience and on analyzing the poor traffic coming from that source. Also, although hired as a BI analyst, I knew how to run scripts (Perl scripts at that time) to extract and summarize data from databases and deliver it to my boss automatically by email. The emails quickly conveyed all the important information he needed about weekly business metrics. Usually, BI people do not know how to code to that level. My boss complained one day that I did not work much (I had automated my job, of course), but he was still happy. I used the time I saved by automating the number crunching to start my own business.

Later, in 2010, I trained BI people to run their own queries through Perl. I coded the low-level part. All they had to do was put their SQL queries in a text file in the right place (on the right server), then enter a simple one-line UNIX command to run their query. It saved them a tremendous amount of time (they used Toad before, a very, very slow process to extract big data out of Oracle databases) and everyone was happy. Note: you need to be very good friends with your IT team to have them approve this process. Again, despite my being perceived as someone not working a lot (that is, working smart rather than hard), everyone was happy: my boss (the CEO), the BI analysts, and myself. I also mentioned some Google and Bing APIs that could download large lists of keywords with pricing and volume information. This was a risky move for me, as it could have killed my job of building these lists manually (it did not). Even the software engineers (in a search company!) were not aware of these APIs. They ended up using my Google/Bing accounts (and even my credit card to pay for the services!) to optimize this process. After initially using my own Perl code to access the APIs, my software engineer colleagues switched to Python.

I took advantage of this free time to further develop my business, and that was pretty much my last experience working for a company. Over those years, I learned enough to run my business, and I was ready to become a full-time entrepreneur working from home, which is what I did. My experience with new business models was more extensive than that of MBA graduates from top schools, and I was no longer afraid of competition. (That said, a good MBA degree is great for all the fantastic lifetime connections you will make during your college years.)

Even in personal life, being a generalist helps. You can buy or sell a home (or invest in general) with better-than-average outcomes, blending your data science experience with intuition to maximize results. When we launched DSC, I knew more about tax, legal issues, advertising, marketing, sales, IT (automating processes), and the editorial process (especially for the specific business in question) than many who have a degree in those fields. This helped a lot in saving costs, as we did not have to hire tons of specialized experts. Along the way, I also significantly improved my negotiation skills. I reached the point of being able to purchase homes that few would qualify for (and reduce the price without sounding aggressive). The most recent one involved a 7-digit loan, even though other home buyers had a stronger position than me in the first place: they were US citizens, not born in poverty, on a payroll, working for some big company, sometimes very well paid, with an official job title. I have none of this, other than a provable, decent, stable and increasing income, as documented by many years of tax returns.
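The query-runner workflow described above (analysts drop a SQL query into a text file, then run a single command) can be sketched as follows. This is a minimal illustration in Python, not the author's original Perl tool. It assumes an SQLite database in place of Oracle. The function name `run_query_file`, the script name `run_query.py` and the CSV output format are hypothetical choices made for the example.

```python
import csv
import sqlite3
import sys
from pathlib import Path


def run_query_file(conn: sqlite3.Connection, sql_path: Path, out=None) -> int:
    """Run the SQL query stored in sql_path and write the result as CSV.

    Hypothetical helper: analysts only maintain the .sql file, while the
    connection handling and output formatting live here.
    Returns the number of data rows written.
    """
    query = sql_path.read_text(encoding="utf-8")
    cursor = conn.execute(query)
    writer = csv.writer(out if out is not None else sys.stdout)
    # Header row built from the column names reported by the cursor.
    writer.writerow([col[0] for col in cursor.description])
    count = 0
    for row in cursor:
        writer.writerow(row)
        count += 1
    return count


if __name__ == "__main__":
    # One-line usage, e.g.: python run_query.py metrics.db weekly_report.sql
    connection = sqlite3.connect(sys.argv[1])
    try:
        run_query_file(connection, Path(sys.argv[2]))
    finally:
        connection.close()
```

Keeping the SQL in plain files means analysts never touch the connection code. The trade-off, as the author notes, is that IT has to approve a shared script that has database access.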

These are some of the benefits of being a generalist.

To not miss this type of content in the future, subscribe to our newsletter. For related articles from the same author, click here or visit www.VincentGranville.com. Follow me on LinkedIn, or visit my old web page here.

Article originally posted on InfoQ.

Deno is a rethink of the server-side JavaScript runtime by original Node.js creator Ryan Dahl, intended to address his regrets about, and the challenges with, Node.js.

Node.js is by far the most widely used server-side and command-line JavaScript runtime. Because of its widespread popularity, there are limits to what can change within Node.js without breaking compatibility.

After leaving Node.js and JavaScript a few years ago, Dahl returned to the JavaScript ecosystem due to his growing interest in machine learning. After initially announcing Node.js at JSConf.eu in 2009, Dahl returned to this year’s JSConf.eu to explain his regrets with Node.js and to introduce Deno, an early attempt to address these concerns.

Dahl’s regrets cover several vital areas of Node.js, including not sticking with promises for its async APIs, the overall Node.js security architecture, the internal build system, package management, and the handling of modules.

In spite of Node.js’s success and popularity, these criticisms are far from new. Many early efforts were made to include alternatives to these approaches, but they were met with resistance from the core Node.js team. Promises and modules have changed within Node.js because of their introduction into the language standard, but the internal workings of Node.js fail to leverage their full capabilities even today.

Deno provides a secure runtime built on the V8 engine. TypeScript is its foundation, for increased code accuracy, and the TypeScript compiler is built into the Deno executable.

Deno strives to better leverage the JavaScript security sandbox and to simplify the module and build system.

Critics of Dahl also note that some of the other challenges with Node.js did not get mentioned in Dahl’s talk, and there is concern that history could repeat itself. For example, Dahl assigned the original copyright of Node.js to Joyent, which led to early conflict and the short-lived IO.js fork. This fork was resolved with the formation of the Node.js Foundation and the current project governance model. Currently, the Deno copyright lists Ryan Dahl as the holder.

Deno shows early promise as an alternative to Node.js but should not yet be considered mature or stable, and as expected, does not yet have a thriving ecosystem. Deno is open source software available under the MIT license. Contributions and feedback are encouraged via the Deno GitHub project.

Article originally posted on InfoQ.

The Grafana team announced an alpha version of Loki, their logging platform that ties in with other Grafana features like metrics query and visualization. Loki adds a new client agent, promtail, and server-side components for log metadata indexing and storage.

Loki aims to add traceability between metrics, logs and tracing, although the initial release targets linking the first two. Logs are aggregated and indexed by a new agent with initial support for Kubernetes pods. It uses the same Kubernetes APIs and relabelling configuration as Prometheus to ensure that the metadata of metrics and logs remains the same for later querying. Prometheus is not a prerequisite for using Loki; however, having Prometheus makes it easier. “Metadata between metrics and logs matching is critical for us and we initially decided to just target Kubernetes”, explained the authors in their article.

According to Loki’s design document, the intent behind Loki’s design is to “minimize the cost of the context switching between logs and metrics”. Here, correlation between logs and time series data applies both to data generated by normal metrics collectors and to custom metrics generated from logs. Public cloud providers like AWS and GCP provide custom metrics extraction, and AWS also lets users navigate from the metrics to the corresponding logs. Each of these providers uses its own query language for log data. Loki also aims to solve the problem of logs being lost from ephemeral sources like Kubernetes pods when they crash.

Loki is made up of the promtail agent on the client side and the distributor and ingester components on the server side. The querying component exposes an API for handling queries. The ingester and distributor components are mostly taken from Cortex’s code, which provides a scalable and HA version of Prometheus-as-a-service. Distributors receive log data from promtail agents, generate a consistent hash from the labels and the user id in the log data, and send it to multiple ingesters. Ingesters receive the entries and build “chunks” – a set of logs for a specific label and a time span – which are compressed using gzip. Ingesters build the indices based on the metadata (labels), not the log content, so that they can be easily queried and correlated with the time series metrics labels. This was done as a trade-off between operational complexity and features. Chunks are periodically flushed into an object store like Amazon S3, and indices to Cassandra, Bigtable or DynamoDB.
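The write path described above can be sketched in a few lines of Python. This is a simplified illustration, not Loki's code: Loki itself is written in Go and reuses Cortex's hash ring. The sketch assumes a ring of hypothetical ingester names, a replication factor of 3, and chunks cut at fixed one-hour spans. The helpers `stream_key`, `token`, `HashRing` and `build_chunks` are made up for the example.

```python
import bisect
import gzip
import hashlib
from collections import defaultdict


def stream_key(tenant_id: str, labels: dict) -> str:
    """Canonical stream identity: tenant (user id) plus the sorted label set."""
    label_str = ",".join(f'{k}="{v}"' for k, v in sorted(labels.items()))
    return f"{tenant_id}/{{{label_str}}}"


def token(value: str) -> int:
    """Stable 32-bit token (Python's built-in hash() is salted per process)."""
    return int.from_bytes(hashlib.sha256(value.encode()).digest()[:4], "big")


class HashRing:
    """Toy consistent-hash ring in which each ingester owns several virtual tokens."""

    def __init__(self, ingesters, vnodes=16):
        self.ring = sorted(
            (token(f"{name}-{i}"), name) for name in ingesters for i in range(vnodes)
        )
        self.tokens = [t for t, _ in self.ring]

    def replicas(self, key: str, n: int = 3):
        """Walk clockwise from the key's token and collect n distinct ingesters."""
        start = bisect.bisect(self.tokens, token(key))
        chosen = []
        for i in range(len(self.ring)):
            name = self.ring[(start + i) % len(self.ring)][1]
            if name not in chosen:
                chosen.append(name)
                if len(chosen) == n:
                    break
        return chosen


def build_chunks(entries, span_seconds=3600):
    """Group (stream_key, unix_ts, line) entries into one gzip-compressed chunk
    per stream and time span. Only the stream key (labels) would be indexed,
    never the log text itself."""
    grouped = defaultdict(list)
    for key, ts, line in entries:
        grouped[(key, ts - ts % span_seconds)].append(line)
    return {k: gzip.compress("\n".join(v).encode()) for k, v in grouped.items()}
```

Hashing the tenant together with the sorted label set sends every entry of one stream to the same ingesters. That is what lets each ingester assemble complete per-label chunks. Sending each stream to several ingesters protects recent data that has not yet been flushed to the object store.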

The querying API takes a time range and label selectors, and checks the index for matching chunks. It also talks to ingesters for recent data that has not been flushed yet. Searches can use regular expressions, but because log content is not indexed, these searches can be slower than they would be against a full-text index. Loki is open source and can be tried out on Grafana’s site.
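The read path can be illustrated the same way. The sketch below assumes a hypothetical in-memory index: a list of (label set, chunks) pairs, where each chunk is a time-span start plus gzip-compressed lines. It selects chunks by label matchers and time range using only the index. It then has to decompress and regex-scan every selected chunk, which is the cost of not indexing log content. For brevity it supports only equality matchers, filters at chunk granularity rather than per entry, and does not model the query to ingesters for unflushed data.

```python
import gzip
import re


def select_chunks(index, matchers, start, end, span_seconds=3600):
    """Index-only step: return chunks whose labels satisfy every equality
    matcher and whose span [chunk_start, chunk_start + span) overlaps [start, end)."""
    hits = []
    for labels, chunks in index:
        if all(labels.get(k) == v for k, v in matchers.items()):
            for chunk_start, blob in chunks:
                if chunk_start < end and chunk_start + span_seconds > start:
                    hits.append(blob)
    return hits


def query(index, matchers, start, end, pattern):
    """Brute-force step: decompress each selected chunk and regex-scan its lines."""
    regex = re.compile(pattern)
    results = []
    for blob in select_chunks(index, matchers, start, end):
        for line in gzip.decompress(blob).decode().splitlines():
            if regex.search(line):
                results.append(line)
    return results
```

A narrow label selector and time range keep the number of decompressed chunks small. A broad selector falls back to scanning most of the stored logs.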

Article originally posted on InfoQ.

In mid-November, around 1,600 attendees descended on the Hyatt Regency in San Francisco for the twelfth annual QCon in the city.

QCon SF attendees (software engineers, architects, and project managers from a wide range of industries, including some prominent Bay Area companies) attended 99 technical sessions across 6 concurrent tracks, 13 “ask me anything” sessions with speakers, 18 in-depth workshops, and 8 facilitated open spaces.

We’ve already started publishing sessions from the conference, along with transcripts for the first time. The full publishing schedule for presentations can be found on the QCon SF website.

The conference opened with a presentation from Jez Humble & Nicole Forsgren, two of the authors of “Accelerate: The Science of Lean Software and DevOps: Building and Scaling High Performing Technology Organizations” – one of InfoQ’s recommended books for 2018.

Some members of InfoQ’s team of practitioner-editors were present and filed a number of stories about the event, but the main focus for this article is the key takeaways and highlights as blogged and tweeted by attendees.

by Grady Booch

Twitter feedback on this keynote included:

@danielbryantuk: “Most of contemporary AI is about pattern matching signals on the edge, and inductive reasoning” @Grady_Booch #QConSF https://t.co/JoXkxfhuyi

@danielbryantuk: “As a developer you can ask yourself what value AI projects will have for you. One answer is that you can plug in these new frameworks in order take advantage and add value within your overall system” @Grady_Booch #QConSF

@TDJensen: Right now AI and deep learning specifically are valuable as parts of other systems vs. as a stand-alone system #QConSF per Grady Booch, IBM

@danielbryantuk: “When using AI within software you will need to get data scientists involved early. You need to think about data usage and also ethics” @Grady_Booch #QConSF https://t.co/kRhPn6RPSF

@eranstiller: “The human brain runs at about 20 Hz, some run slower” — @Grady_Booch, a talk about #AI and computation #QConSF https://t.co/VJXW94l8HN

@randyshoup: Everything is a system @Grady_Booch at #QConSF https://t.co/xckgXOcspB

@danielbryantuk: “The disparity in computational effort required for training AI models versus inference of the models will effect software architectures, particularly around inference at the edge” @Grady_Booch #QConSF https://t.co/ZP1wangWI5

@danielbryantuk: “Everything is a system. In complex systems, malfunctions may not be detectable for long periods” @Grady_Booch #QConSF https://t.co/xq8P054UoP

@cinq: Every line of code represent an ethical and moral decision – @Grady_Booch #QConSF

@danielbryantuk: “We are increasingly seeing social and ethical issues getting more attention when building systems. Modern systems can have a big impact on people” @Grady_Booch #QConSF https://t.co/jJt0COUt5v

@gwenshap: “First question I ask is “Do you have a release process and can release on regular cadence?” If not, fix this first.” <- @Grady_Booch at #QConSF

@danielbryantuk: “When working with a new system I focus on ‘can the system be easily and rapidly deployed’ and ‘is there a sense of architectural vision’. If you have these two thing right you get rid of 80% of your typical issues” @Grady_Booch #QConSF https://t.co/FkXGoowaYa

@Ronald_Verduin: ‘Grow a system through the iterative and incremental release of an executable architecture’ Grady Brooch #QConSf https://t.co/aFjkTYnYVq

@danielbryantuk: “Software whispers stories to be interpreted and realised by the underlying hardware. You [as developers] are the story tellers” @Grady_Booch on the importance of making your work count #QConSF https://t.co/4P3JwANGAr

Bernice Anne W. Chua attended this keynote:

He shared his experience as a creator and a person in tech, who also happens to be a black trans-man. It seemed fitting as a final talk, because even if we are software developers, we are all human beings first and foremost. It’s nice to know that even though each of us has our own specific kinds of struggles and challenges, we are not alone in that all of us are struggling together. One of the things that we as people might find ourselves dealing with is at the end of the day, we want to know that what we build is going towards something meaningful to us.

Twitter feedback on this keynote included:

@tsunamino: Purpose is a force: it drives us, it’s strong, it’s the core of what we are building #QconSF https://t.co/O9PsLeGcyR

@jdkiefer89: Be the force that drives! #QConSF @fakerapper #purpose https://t.co/nfTTeuBmlI

@tsunamino: Being an openly queer black person is difficult, especially in academics #QConSF

@tsunamino: Kortney went to tech because academia wouldn’t give him a job because he was trans, despite being the first person to graduate from his department #QConSF

@tsunamino: There was no space for trans people to get together and make tech to help one another, no community. So he made it #QconSF

@tsunamino: To apply a concept that is so simple (spare change programs) to combat a problem so big (combating cash bail programs) helps people feel they can contribute in real ways #QConSF

@tsunamino: To find your purpose, you can’t do it in isolation. You have to find your community in whatever format makes sense to you #QConSF

@tsunamino: Remain open to growth – continue to study and meet new people #QConSF

@tsunamino: Tell your story – if you don’t document your life, no one else will. Check back in on your journey so you know where you’ve been and where you’ve come from #QConSF

@tsunamino: Helping others will help you figure out your purpose. It’s important to be more open, even if it makes you more vulnerable #qconsf https://t.co/RuU8ardNGG

@danielbryantuk: Great #qconsf closing keynote by @fakerapper! Key takeaways:

– everyone intrinsically provides value

– find your community

– remain open to growth

– tell your story

– help others https://t.co/ETIM0Ma3tp

by Jez Humble & Nicole Forsgren

Twitter feedback on this keynote included:

@bridgetkromhout: Business likes to play golf, and ops doesn’t want change: the classic model of dysfunction. @jezhumble & @nicolefv #qconsf https://t.co/xmAtA7uif2

@tsunamino: Important keynote message at #QConSF: be skeptical of the data you see out there (eg the number of pool deaths are correlated with years Nic Cage movies come out) https://t.co/jZCDCz16J1

@bridgetkromhout: Correlation is not causation; @nicolefv explains how cherry-picking data isn’t enough, as we have logical fallacies and biases. #qconsf https://t.co/lP0q8zeZPW

@danielbryantuk: I always enjoying showing clients these software delivery performance metrics from The DevOps Reports and “Accelerate” — these pics are from the @nicolefv and @jezhumble #qconsf keynote https://t.co/aPY3lXW6qm

@tsunamino: Capabilities that drive performance after 4 years of research #QConSF https://t.co/8GFkapWDJp

@danielbryantuk: “Elite performers are 3.5X more likely to have strong availability practices” @jezhumble #qconsf https://t.co/VPETetLHZh

@danielbryantuk: “Transformational leadership is a key capability that drives high performance” @nicolefv #qconsf https://t.co/WDEOpHTM0n

@tsunamino: At the top is transformational leadership, good product development, and empowered teams and test automation #QConSF

@bridgetkromhout: Invest in capabilities that are predictive of high performance in an IT org. @nicolefv & @jezhumble #qconsf https://t.co/rsBezbUIAk

@neilathotep: Software delivery performance predicts organizational performance. And continuous delivery makes our work better #qconsf https://t.co/eA4hImo1is

@danielbryantuk: “You can drive cultural change in many ways — one way is by changing your technical practices” @nicolefv #qconsf https://t.co/urw8rWBeSv

@neilathotep: You can be using mainframes and achieve the outcomes – @jezhumble @QConSF #qconsf https://t.co/06FwcFjBMN

@tsunamino: Meaning the technical stack isn’t the most important (cough kubernetes) #QConSF

@tsunamino: If you aren’t seeing benefits from moving to *the cloud* you probably aren’t hitting all these points #QConSF https://t.co/nof0mm5rS0

@charleshumble: “High performance is possible you just have to execute.” Dr. Nicole Forsgren, #qconsf

@bridgetkromhout: Cloud is “the illusion of infinite resources” according to @jezhumble. For me, not worrying about data center cooling or rack-n-stack is magic; I think moving up the stack is powerfully freeing. #qconsf https://t.co/98wLXYkSB5

@danielbryantuk: Several great predictive findings from the 2018 State of DevOps Report for high performing teams, via @jezhumble and @nicolefv at #qconsf Architectural outcomes, adopting the cloud properly, and monitoring and observability are core predictors https://t.co/GwezC1Prjo

@tsunamino: How do you actually measure effective automated testing? These three caused the most issues #QConSF https://t.co/SMF1I2Y1r3

@tsunamino: Continuous testing practices that actually work #QConSF https://t.co/8b2mpueEu7

@danielbryantuk: “Continuous testing is not all about automating everything and firing all of the testers” @nicolefv and @jezhumble dropping some testing wisdom at #QConSF https://t.co/c6LiN5qpe6

@tsunamino: Properly scoping projects is key to building features or updating org practices #QConSF https://t.co/5vqCEjVWSi

@randyshoup: Taking big bits of work and splitting them into smaller bits that actually provide value to customers is key to *everything* @jezhumble and @nicolefv #QConSF

@tsunamino: You will not be able to fix your tech stack without also fixing the trust within your culture #QConSF https://t.co/6HmCdKZ6zU

@danielbryantuk: “When something goes wrong, ask yourself that if you had the same information and tools, would you have done the same thing? Don’t automatically fire the person seen to be the cause of the issue” @nicolefv and @jezhumble at #qconsf https://t.co/aIAaUkWlz4

@tsunamino: It’s not the mix of skills that makes the perfect team, it’s psychological safety #QConSF https://t.co/v6d5Q1woWt

@randyshoup: Team *dynamics* are critical; team skills are not. There was *no* correlation at Google between the technical skills of a team and its performance. #psychologicalsafety @nicolefv and @jezhumble #QConSF https://t.co/ikUdZQSzW6

@bridgetkromhout: Leaders set clear goals and direction, then let their teams decide how to implement. @nicolefv & @jezhumble on how high-performing orgs work. #qconsf https://t.co/OHVP0dTyBU

@jessitron: If teams can’t change the incoming requirements, you might be doing fast-waterfall. @jezhumble #QConSF https://t.co/Duhvqa4jTI

@vllry: @nicolefv @jezhumble Stability and velocity aren’t a tradeoff. Easy to change systems, strong testing/monitoring/SRE reduce cost of change management. Architecture and paradigm changes are key, not just tools and policies. #qconsf https://t.co/qIgso0WYNM

@vllry: @nicolefv @jezhumble On the subject of doing DevOps “right” – buy into the goals and not just the checkboxes of key success indicators, like cloud adoption. Using GCP/AWS/Azure and having a test suite don’t inherently mean you’re getting value from those things. #QConSF https://t.co/s3b8P1NRYK

@vllry: @nicolefv @jezhumble Enable autonomy to be agile. Teams (and members) should be able to work independently with little coordination and consultation. To enable this: fast change management and architectural isolation. I strongly recommend watching the talk when posted! #QConSF https://t.co/CJkoYt0gFP

Twitter feedback on this keynote included:

@wesreisz: @hspter Nice visual to represent how to think about working in #dataScience #QConSF https://t.co/szpCSt8SRl

@danielbryantuk: “If data science is an art, why don’t we teach it like an art?” @hspter #QConSF https://t.co/GJJUesYmq3

@chemphill: Explore a problem through solutions #DataScience @hspter @stitchfix #QConSF https://t.co/W3bVOPpBx8

@randyshoup: Lots of things can go wrong in a data analysis @hspter at #QConSF https://t.co/rpULQjlWwi

@randyshoup: … and so is data science, via @hspter at #QConSF https://t.co/UAfZ53vIce

@wesreisz: @hspter love this image… Generative organizations practice blameless postmortems #QConSF https://t.co/PrQNKwK4aV

@randyshoup: Statistics as an academic discipline is problem-focused. Data science as a product-oriented discipline is solution-focused. @hspter at #QConSF

@sangeetan: Data Science – Design, science and art come together @hspter #QConSF https://t.co/EF2yehwQmV

@danielbryantuk: “Blameless postmortems and sprints have a lot in common. They provide structure and rules for playing nice” @hspter #qconsf https://t.co/wPleeJK8c1

@danielbryantuk: “Empathy is a useful skill within life and software development, and it’s something that can be developed with meditation” @hspter #QConSF https://t.co/TXrBUGT4uj

Go – A Key Language in Enterprise Application Development?

by Aarti Parikh

Twitter feedback on this session included:

@ag_dubs: what was fun 10 years ago isn’t fun anymore- are we due for a new programming language paradigm revolution? #21stCenturyLangs #QConSF https://t.co/zFba02IdTg

@ag_dubs: i want to be able to leverage multicore processors from the *language* level #21stCenturyLangs #QConSF https://t.co/DF1UiFq0v3

@ag_dubs: the history of languages and platforms is tending towards simplicity #21stCenturyLangs #qconsf https://t.co/RjTv7Md1J7

@ag_dubs: @golang wants to be the simplicity that both developers and platforms seek #21stCenturyLangs #QConSF https://t.co/mVmNspKFjL

@katfukui: Applying love languages to technology can shape and change how humans use what you build. For ex, use words of affirmation to add love and thought in notification and text-based workflows #QConSF

@ag_dubs: stability really mattered for Java – and @golang is paying attention to that, even as it looks to go 2.0 #QConSF #21stCenturyLangs https://t.co/riqFAHACLn

@ag_dubs: “i like programming in Go because it is bridging the gap between application and systems programming.” – @classyhacker closes us out with some inspiring thoughts about the legacy of @golang #21stCenturyLangs #QConSF https://t.co/b4vmFu3lN7

Kotlin: Write Once, Run (Actually) Everywhere

by Jake Wharton

Twitter feedback on this session included:

@maheshvra: There is a common concern in adopting #kotlin: it is owned by JetBrains, not by a community or an affiliated open source foundation. That said, it offers good value. What is the future? #21stCenturyLangs #QConSF

@ag_dubs: because passing state into a class is such a common pattern, @kotlin gives u syntax sugar for collapsing that data into a property #21stCenturyLangs #QConSF https://t.co/FfR7VJu2vG

@ag_dubs: kotlin has property delegates that make abstracting patterns like lazy loading or null checks easy and expressive #21stCenturyLangs #QConSF https://t.co/p5Cba9xDUK
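Kotlin's standard library provides `by lazy { ... }` as a ready-made delegate. Since the examples added to this recap use Python, here is a rough Python analog rather than Kotlin: a minimal descriptor, `Lazy`, that computes a property on first access and caches it per instance. The names `Lazy` and `Report` are hypothetical, and unlike Kotlin's default `lazy`, which is synchronized, this sketch is not thread-safe.

```python
class Lazy:
    """Descriptor that delegates a property: compute once on first access, then cache."""

    def __init__(self, initializer):
        self.initializer = initializer
        self.name = None

    def __set_name__(self, owner, name):
        # Cache under a separate attribute so the descriptor keeps being consulted.
        self.name = "_lazy_" + name

    def __get__(self, instance, owner):
        if instance is None:
            return self  # accessed on the class itself
        if not hasattr(instance, self.name):
            setattr(instance, self.name, self.initializer(instance))
        return getattr(instance, self.name)


class Report:
    def __init__(self, rows):
        self.rows = rows
        self.computations = 0  # counts how often the expensive work actually ran

    @Lazy
    def total(self):
        self.computations += 1
        return sum(self.rows)
```

Python's standard library also offers `functools.cached_property`, which behaves similarly; the hand-written descriptor is shown only to make the delegation visible.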

@ag_dubs: now to talk about @kotlin on multiple platforms- there’s platform-dependent AND platform-independent kotlin! #21stCenturyLangs #QConSF https://t.co/nWFAvExSBz

@ag_dubs: toolchains are incredibly important for making a multiple language solution work- and kotlin has great solutions for this interop #21stCenturyLangs #QConSF https://t.co/Y0yxMljQ7M

@ag_dubs: an architecture for a multiplatform application- can we rewrite it *all* in kotlin? @JakeWharton says yes! #21stCenturyLangs #QConSF https://t.co/bamiTy6gOg

@ag_dubs: being able to have a small team target multiple platforms with a single #kotlin codebase gives you lots of options for folks to access your application #21stCenturyLangs #QConSF https://t.co/p42phNPRLt

WebAssembly: Neither Web Nor Assembly, But Revolutionary

by Jay Phelps

Twitter feedback on this session included:

@ag_dubs: asm.js was a great frontrunner in leading the #webassembly revolution – check out that bitwise or 0, it’s functionally a type annotation for integer! #QConSF #21stCenturyLangs https://t.co/Ci0UsL5DhS
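In JavaScript, `x | 0` applies the ECMAScript ToInt32 conversion: truncate toward zero, then wrap into the signed 32-bit range, so the result is always an integer. That guarantee is what lets asm.js treat `|0` as an integer annotation. A minimal Python emulation of the conversion, for illustration only (`to_int32` is a hypothetical name):

```python
import math


def to_int32(x: float) -> int:
    """Emulate the ECMAScript ToInt32 conversion that `x | 0` performs."""
    if math.isnan(x) or math.isinf(x):
        return 0  # NaN and +/-Infinity become 0
    n = math.trunc(x) & 0xFFFFFFFF  # truncate toward zero, keep the low 32 bits
    # Reinterpret the unsigned 32-bit value as a signed two's-complement integer.
    return n - 0x1_0000_0000 if n >= 0x8000_0000 else n


print(to_int32(3.7), to_int32(-1.5), to_int32(2**31))  # prints: 3 -1 -2147483648
```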

@ag_dubs: #webassembly is revolutionary because it’s the first open and standardized bytecode #QConSF #21stCenturyLangs https://t.co/pst5mKCB3V

@ag_dubs: is #webassembly trying to kill javascript? NOPE! #QConSF #21stCenturyLangs https://t.co/IDlB3LmYod

@ag_dubs: #webassembly is going to affect language and compiler design as we go forward- and it’s because the web has very interesting and unique constraints! #QConSF #21stCenturyLangs https://t.co/nMUCA1zsCP

@ag_dubs: you may already be using #webassembly ! (and not even know it!) shoutout to @fitzgen’s sourcemap impl in @rustwasm ! #QConSF #21stCenturyLangs https://t.co/xhvEHY468b

Fairness, Transparency, and Privacy in AI @LinkedIn

Bernice Anne W. Chua attended this session:

The most important takeaways from this talk were: “We should think of privacy and fairness by design, at the very beginning, when developing AI models; not just as an afterthought.” and “Privacy and fairness are not just technical challenges. They have huge societal consequences. That’s why it’s our responsibility to include as many and as diverse a group of people as possible to be our stakeholders.”

Airbnb’s Great Migration: From Monolith to Service-Oriented

by Jessica Tai

Twitter feedback on this session included:

@danielbryantuk: Nice overview of the migration and service creation principles from the @AirbnbEng team, via @jessicamtai at #QConSF

– services should own read and write data

– each service addresses a specific concern

– avoid duplicate functionality

– data mutations should publish via standard events https://t.co/QrFJPJIrp7
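The ownership and event-publishing principles can be sketched in a few lines. This is a hypothetical illustration, not Airbnb's code: `ListingService` is the only component that reads or writes listing data, and every write emits an `Event` on an in-memory `EventBus`, which stands in for a real message broker.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Event:
    """A standard, self-describing change event."""
    entity: str
    action: str
    entity_id: str
    payload: dict


class EventBus:
    """In-memory stand-in for a message broker (hypothetical)."""

    def __init__(self) -> None:
        self._subscribers: list[Callable[[Event], None]] = []

    def subscribe(self, handler: Callable[[Event], None]) -> None:
        self._subscribers.append(handler)

    def publish(self, event: Event) -> None:
        for handler in self._subscribers:
            handler(event)


class ListingService:
    """Sole owner of listing data: other services never touch its store directly."""

    def __init__(self, bus: EventBus) -> None:
        self._listings: dict[str, dict] = {}
        self._bus = bus

    def get(self, listing_id: str) -> dict:
        # Return a copy so callers cannot mutate the owned state behind the service's back.
        return dict(self._listings[listing_id])

    def update_price(self, listing_id: str, price: int) -> None:
        self._listings.setdefault(listing_id, {})["price"] = price
        # Every mutation is announced in the same standard event shape.
        self._bus.publish(Event("listing", "updated", listing_id, {"price": price}))
```

Consumers that need listing data either call the service or subscribe to its events; none of them share its database.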

@danielbryantuk: Excellent overview of the @AirbnbEng migration to microservices by @jessicamtai at #QConSF “It’s worked out well for us, but SOA (and distributed systems) isn’t for everyone.” https://t.co/OdseHPnNkf

@danielbryantuk: The @AirbnbEng team have clearly thought about their approach and tooling as they’ve moved to microservices. @jessicamtai at #QConSF giving a shout out to @TwitterEng’s Diffy for comparing migrated endpoints https://t.co/tL7D3EjHcc
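Diffy's core idea is to send the same request to three instances: a primary and a secondary running the existing code, and a candidate running the new code. Fields that differ between primary and secondary are treated as non-deterministic noise; only the remaining primary/candidate differences are reported. The sketch below reproduces that filtering on flat, JSON-like dicts; it is not Diffy's implementation, and the function names are hypothetical.

```python
_MISSING = object()  # sentinel so a missing field differs from a field set to None


def differing_fields(a: dict, b: dict) -> set:
    """Top-level keys whose values differ between two responses."""
    return {k for k in a.keys() | b.keys() if a.get(k, _MISSING) != b.get(k, _MISSING)}


def real_differences(primary: dict, secondary: dict, candidate: dict) -> set:
    # Differences between two copies of the old code are noise (timestamps, IDs, ...).
    noise = differing_fields(primary, secondary)
    # Report only primary/candidate differences that noise does not explain.
    return differing_fields(primary, candidate) - noise
```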

Netflix Play API – An Evolutionary Architecture

Twitter feedback on this session included:

@gburrell_greg: Distributed monoliths are worse than monoliths. @suudhan at #QConSF https://t.co/cCXiLQ9YFn

@gburrell_greg: Beware of data monoliths! @suudhan at #QConSF https://t.co/MaaU9xPWgM

Paying Technical Debt at Scale – Migrations @Stripe

by Will Larson

Twitter feedback on this session included:

@randyshoup: “Cassandra is a Trojan Horse released by Facebook to ruin a generation of startups” @Lethain at #QConSF

@randyshoup: What percentage of the system should be migrated before we declare a migration successful and complete? @Lethain at #QConSF https://t.co/HUpIX2q4pp

Real-World Architecture Panel

by Randy Shoup, David Banyard, Tessa Lau, Jeff Williams, Colin Breck

Twitter feedback on this session included:

@danielbryantuk: Great #QConSF real world architecture panel by @randyshoup

– Automation and robotics are being driven by large orgs. Democratizing the tech is important (esp for small projects)

– People outside the industry have high expectations and think humanoid robots will arrive next year https://t.co/0uyU3sDwUw

@danielbryantuk: Important things to learn and understand when working with real world automation and robotics:

– Systems thinking

– Awareness of security, privacy, and regulation

– UX

– Algorithms

– Mechanics

#QConSF architecture panel, organized by @randyshoup

@tessalau: Robotics doesn’t have all the tools which general software has yet, we need more primitive building blocks like ROS for robotics – @tessalau at #QConSF @rosorg https://t.co/lk6o6lQkWx

Scaling Slack – The Good, the Unexpected, and the Road Ahead

by Mike Demmer

Twitter feedback on this session included:

@danielbryantuk: “Deploying is only the beginning. We’re taking time to migrate data correctly while still running at scale. We’re also conscious of the second system effect where we are tempted to sneak in new updates along with the migration” @mjdemmer #QConSF https://t.co/dA3n1UF9vs

What We Got Wrong: Lessons From the Birth of Microservices

by Ben Sigelman

Twitter feedback on this session included:

@JustinMKitagawa: “Those Sun boxes are so expensive!” and “Those Linux boxes are so unreliable!” Sentiments leading Google to develop disposable commodity hardware infrastructure and their foundational platform constructs circa 2001.

@gwenshap: The only good reason to adopt microservices: scale human communication. @el_bhs at #QConSF

@gwenshap: There is a real cost to making network calls. Keep that in mind when going #serverless. Good for embarrassingly parallel problems, but keep an eye on serial network calls. #QConSF
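To make the cost of serial network calls concrete, the sketch below simulates each call with a fixed 50 ms delay using `asyncio.sleep`, an assumption standing in for a real round trip; `fake_call` is a hypothetical name. Awaiting the calls one after another adds their latencies together, while `asyncio.gather` overlaps them, which is why independent, embarrassingly parallel work suits this style better than long chains of dependent calls.

```python
import asyncio
import time


async def fake_call(i: int, latency: float = 0.05) -> int:
    """Hypothetical remote call: sleeps to simulate one network round trip."""
    await asyncio.sleep(latency)
    return i * 2


async def serial(n: int) -> list:
    # Each call waits for the previous one: total latency is roughly n * latency.
    return [await fake_call(i) for i in range(n)]


async def concurrent(n: int) -> list:
    # All calls are in flight together: total latency is roughly one latency.
    return list(await asyncio.gather(*(fake_call(i) for i in range(n))))


def timed(coro_fn, n: int):
    start = time.perf_counter()
    result = asyncio.run(coro_fn(n))
    return result, time.perf_counter() - start
```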

@gwenshap: Cross-cutting concerns like monitoring and security are delegated to small microservices teams. This is significant overhead. #QConSF

@raimondb: Ben Sigelman: beware of too much independence with microservices #qconsf https://t.co/miU4hzYWIB

@Ronald_Verduin: How is your scrum team independence organized? Ants are independent but there are some rules. #QConSF Ben Sigelman https://t.co/PxO0hlVOOh

@gwenshap: Monitoring is about detecting critical signals and refining the search space for “what’s wrong”. Giant dashboards don’t help refine the search space. #QConSF

@gwenshap: Hardest part about Dapper: political reality of getting something to run as root on every machine at Google. #QConSF

@gwenshap: Biggest mistake in building Dapper? Not analyzing bottlenecks early and writing to disk. #QConSF

@MicroSrvsCity: Optimize for team independence not for computer science #Microservices #QConSF @el_bhs https://t.co/aYdGCydCfp

Evolving Continuous Integration: Applying CI to CI Strategy

Twitter feedback on this session included:

@sangeetan: Operability is an important consideration for internal tools as well. Think how well your entire team will be able to support your users. Elise McCallum @WeAreNetflix #QConSF #DevEx

@sangeetan: You don’t always have to replace your system to address gaps. Incorporate best parts of other tools into your own and customize to your use cases to provide optimal experience. CI evolution @WeAreNetflix #QConSF with Elise McCallum

@sangeetan: Connecting existing tools reduces the need for developers to learn yet another new tool. Incremental evolution and migration FTW. Elise McCallum @WeAreNetflix #QConSF #DevEx

Helping Developers to Help Each Other

by Gail Ollis

Twitter feedback on this session included:

@sangeetan: Automation doesn’t just make things easier, it’s executable documentation that gets tested regularly. @GailOllis #QConSF #DevEx

@danielbryantuk: Exploring the PhD research of @GailOllis at #QConSF, looking at the effect of good and bad software development practices. Some of the top responses from interviewees about the biggest impacts https://t.co/cbzi9pCFyg

@danielbryantuk: The dangers of working in isolation within a software system, via @GailOllis at #QConSF https://t.co/CsSB9jU84E

@sangeetan: The moment anything is written, it becomes legacy and has constraints around it. @GailOllis #QConSF #DevEx https://t.co/uyLyMuTd4v

@neilathotep: “I saw him create a legacy system in 3 weeks” @GailOllis on helping developers to help each other #QConSF

@danielbryantuk: “You see a lot of themes in software variable names, which are not helpful when trying to solve an issue — at least ‘wibble’ is obviously meaningless” @GailOllis #QConSF https://t.co/DwxgLAuhN4

@sangeetan: Reinventing the wheel, adding an elephant to it and a spaceship on top – what others’ code can feel like to people who have to work with it @GailOllis #QConSF

Building a Reliable Cloud Based Bank in Java

by Jason Maude

Twitter feedback on this session included:

@danielbryantuk: “Banking IT has traditionally been about stability and conservatism — the opposite to what modern organizations do. This is driven by the requirement that when you put your money in the bank you don’t expect it to be stolen by child wizards.”

@danielbryantuk: The combination of @scsarchitecture and the DITTO architecture has allowed @StarlingBank to build a resilient online banking system

npm and the Future of JavaScript

by Laurie Voss

Twitter feedback on this session included:

@annthurium: “JavaScript is the most popular language in the world right now…50% of working programmers use JS at least some of the time.” -@seldo #QConSF https://t.co/8Qc9JRRPL5

@tsunamino: Turns out package managers are all about the same speed because OSS means sharing best tips so everyone wins! #QConSF

@annthurium: Did you knowwwwww that “npm ci” will double the speed of your builds? @seldo #QconSF

@tsunamino: Package authors must have 2FA enabled to prevent someone from publishing something under your name #QConSF https://t.co/qHAERy7xVa

@tsunamino: Omg please upgrade your packages 🙊 #QConSF https://t.co/oEMawT76cx

@tsunamino: Or if you want to, let the robots fix their own security vulnerabilities! #QConSF https://t.co/8JzrgSGlU4

@annthurium: Studies show that people pick languages for projects based on what libraries are available, which lines up with npm survey data. @seldo #QConSF https://t.co/ZFSndW2Yok

@tsunamino: Frameworks never really die, they get maintained in legacy products and then people start new products in new frameworks #QConSF https://t.co/DGlUwvlvUx

@annthurium: React isn’t a framework so much as an ecosystem. React solves the view problem, but decouples itself from other related problems such as routing, state management, and data fetching that are solved by other tools. @seldo #QConSF https://t.co/YnamPHoLuz

@annthurium: Only 56% of npm users are using a testing framework. 😱 @seldo #QConSF

@tsunamino: Predictions of the future of JavaScript: learn GraphQL and Typescript and WASM because they aren’t going away #QConSF https://t.co/lAoCqmy1tk

@tsunamino: The best frameworks are the ones that have the most people using it bc you get a community to help #QConSF https://t.co/aAVeBBIP56

Crisis to Calm: Story of Data Validation @ Netflix

Twitter feedback on this session included:

@danielbryantuk: “Adding circuit breakers for mitigating risks related to data propagation added a lot of value, but also a few problems. Genuine scenarios accidentally tripped the circuit. We learned to use knobs to fix this at first” @lavanya_kan #qconsf https://t.co/wGmKEYYI1G

@danielbryantuk: “The Netflix data propagation system is very fast, but this means that bad data is propagated just as rapidly as good data! This can lead to challenges” @lavanya_kan #qconsf https://t.co/k4xm8SNMnf

@danielbryantuk: “Data validation is key to high availability” great thinking points from @lavanya_kan about data changes and safety #qconsf https://t.co/VfzpkPNtDW
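The talk summary does not spell out Netflix's validation rules, so the following is only a hypothetical sketch of the idea: before a new version of a dataset is propagated, compare it with the previous version and refuse to publish if too large a fraction of records changed or disappeared. `max_change_ratio` plays the role of the tunable knob mentioned above, letting operators loosen the check for genuine bulk updates that would otherwise trip it.

```python
from dataclasses import dataclass

_MISSING = object()  # sentinel for records absent from the candidate version


@dataclass
class PropagationGate:
    """Hypothetical pre-publish check comparing a candidate dataset with the last good one."""
    max_change_ratio: float = 0.2  # the "knob": raise it for known, legitimate bulk updates

    def change_ratio(self, previous: dict, candidate: dict) -> float:
        if not previous:
            return 0.0  # nothing to compare against yet
        # Count previous records that were modified or removed in the candidate.
        changed = sum(1 for key, value in previous.items()
                      if candidate.get(key, _MISSING) != value)
        return changed / len(previous)

    def allow(self, previous: dict, candidate: dict) -> bool:
        # Trip the circuit (block propagation) when too much of the data moved at once.
        return self.change_ratio(previous, candidate) <= self.max_change_ratio
```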

From Winning the Microservice War to Keeping the Peace

Twitter feedback on this session included:

@danielbryantuk: Put circuit breaking into a microservices system by default. “This stops a single match creating a system wide fire.” Andrew McVeigh #qconsf https://t.co/BAOca0QqU7
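A minimal sketch of the circuit breaker pattern, not tied to any particular library: after `failure_threshold` consecutive failures the breaker opens and fails fast with `CircuitOpenError`; once `reset_timeout` seconds have passed, it lets a trial call through, closing again on success and reopening on failure. All names are hypothetical, and the clock is injectable so the behaviour can be tested without sleeping.

```python
import time


class CircuitOpenError(Exception):
    """Raised instead of calling the dependency while the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open; failing fast")
            # Timeout elapsed: half-open, so allow a trial call through.
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            # Open on reaching the threshold, or reopen if the trial call failed.
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        # Success closes the circuit and resets the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```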

@danielbryantuk: “Put an API gateway into a microservices system straight away, e.g. something that handles auth and rate limiting” Andrew McVeigh at #qconsf also mentioning the benefits of @EnvoyProxy Can I also subtly plug @getambassadorio which is an open source gateway also built on Envoy 🙂 https://t.co/eD9kHaxI4t
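Gateways such as Envoy provide authentication and rate limiting through configuration; the sketch below only illustrates the logic, using a token bucket for the rate limit. `TokenBucket` and `gateway_check` are hypothetical names, and checking for a bare `Authorization` header stands in for real credential validation.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilling at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill for the time elapsed since the last request, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def gateway_check(headers: dict, bucket: TokenBucket) -> int:
    """Return an HTTP status: 401 without credentials, 429 when over the limit, else 200."""
    if "Authorization" not in headers:
        return 401
    if not bucket.allow():
        return 429
    return 200
```

A real gateway would keep one bucket per client or API key rather than a single shared one.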

Reactive DDD—When Concurrent Waxes Fluent

Twitter feedback on this session included:

@randyshoup: Prefer fluent interfaces: By setting data on an entity, you are not conveying why you are doing that. Define a protocol instead of a pile of setters. @VaughnVernon at #QConSF https://t.co/5ZvsRJRAPZ
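A hypothetical `Order` entity illustrates the point: instead of `set_status` and `set_shipping_address` setters that say nothing about intent, the entity exposes domain operations that check their preconditions, record why the change happened, and return `self` so calls can be chained fluently. This is a sketch of the general idea, not Vaughn Vernon's code.

```python
class Order:
    """Hypothetical entity exposing intention-revealing operations instead of setters."""

    def __init__(self, order_id: str) -> None:
        self.order_id = order_id
        self.status = "draft"
        self.shipping_address = None
        self.events: list = []  # records *why* state changed, not just that it did

    def ship_to(self, address: str) -> "Order":
        if self.status != "draft":
            raise ValueError("shipping address can only change while the order is a draft")
        self.shipping_address = address
        self.events.append(("ShippingAddressChosen", address))
        return self  # returning self enables fluent chaining

    def place(self) -> "Order":
        if self.shipping_address is None:
            raise ValueError("cannot place an order without a shipping address")
        self.status = "placed"
        self.events.append(("OrderPlaced", self.order_id))
        return self


order = Order("o-1").ship_to("1 Main St").place()
```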