Month: December 2018

MMS • RSS

Article originally posted on InfoQ. Visit InfoQ

At the recent Connect() event, Microsoft announced the public preview of Python support in Azure Functions. Developers can build functions using Python 3.6, based upon the open-source Functions 2.0 runtime and publish them to a Consumption plan.

Since the general availability of Azure Function runtime 2.0, reported earlier in October on InfoQ, support for Python has been one of the top requests and was available through a private preview. Now it is generally available, and developers can start building functions useful for data manipulation, machine learning, scripting, and automation scenarios.

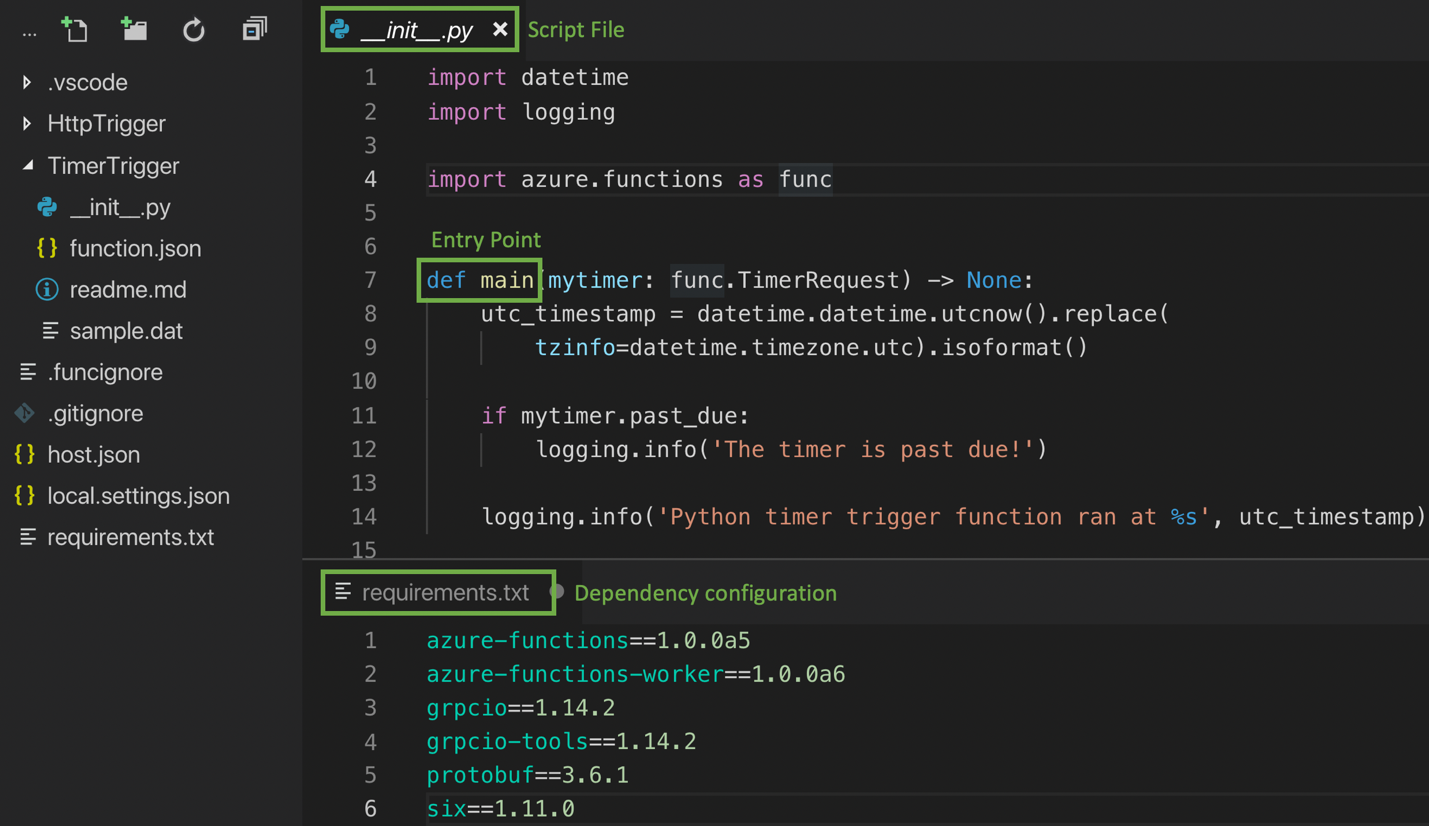

The Azure runtime 2.0 has a language worker model, providing support for non-.NET languages such as Java, and Python. Hence, developers can import existing .py scripts and modules, and start writing functions. Furthermore, with the requirement.txt file developers can configure additional dependencies for pip.

Source: https://azure.microsoft.com/en-us/blog/taking-a-closer-look-at-python-support-for-azure-functions/

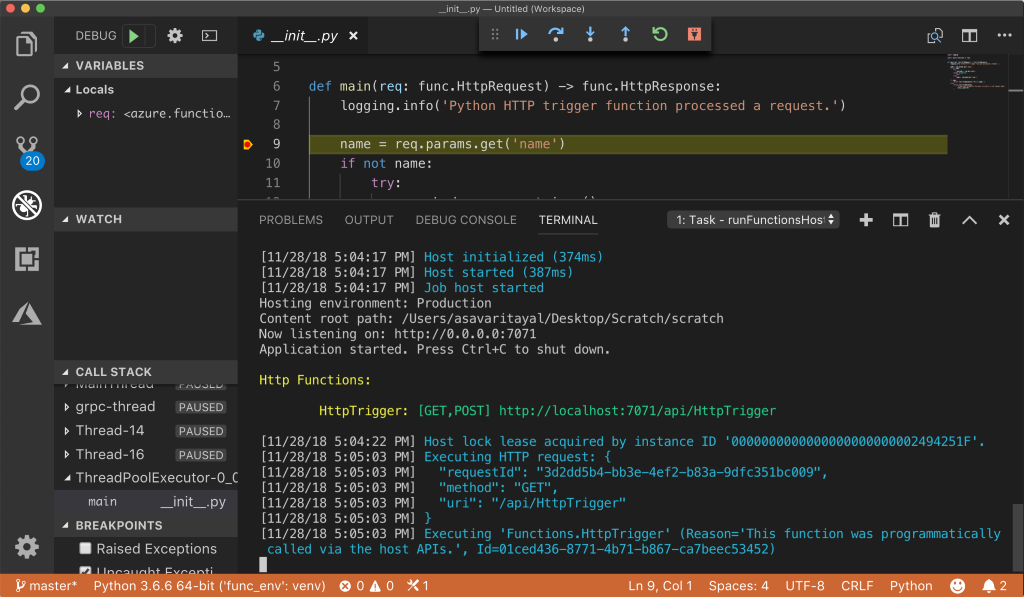

With triggers and bindings available in the Azure Function programming model developers can configure an event that will trigger the function execution and any data sources that the function needs to orchestrate with. According to Asavari Tayal, Program Manager of the Azure Functions team at Microsoft, the preview release will support bindings to HTTP requests, timer events, Azure Storage, Cosmos DB, Service Bus, Event Hubs, and Event Grid. Once configured, developers can quickly retrieve data from these bindings or write back using the method attributes of your entry point function.

Developers familiar with Python do not have to learn any new tooling, they can debug and test functions locally using a Mac, Linux, or Windows machine. With the Azure Functions Core Tools (CLI) developers can get started quickly using trigger templates and publish directly to Azure, while the Azure platform will handle the build and configuration. Furthermore, developers can also use the Azure Functions extension for Visual Studio Code, including a Python extension, to benefit from auto-complete, IntelliSense, linting, and debugging for Python development, on any platform.

Source: https://azure.microsoft.com/en-us/blog/taking-a-closer-look-at-python-support-for-azure-functions/

Hosting of Azure Functions written in Python language can be either through a Consumption Plan or Service App Plan. Tayal explains in the blog post around the Python preview:

Underneath the covers, both hosting plans run your functions in a docker container based on the open source azure-function/python base image. The platform abstracts away the container, so you’re only responsible for providing your Python files and don’t need to worry about managing the underlying Azure Functions and Python runtime.

Lastly, with the support for Python 3.6, Microsoft is following competitor Amazon’s offering AWS Lambda, which already supports this Python version. By promoting more languages for running code on a Cloud platform both Microsoft and Amazon try to reach a wider audience.

Podcast: Charles Humble and Wes Reisz Take a Look Back at 2018 and Speculate on What 2019 Might Have in Store

MMS • RSS

Article originally posted on InfoQ. Visit InfoQ

In this podcast Charles Humble and Wes Reisz talk about autonomous vehicles, GDPR, quantum computing, microservices, AR/VR and more.

Key Takeaways

- Waymo vehicles are now allowed to be on the road in California running fully autonomous; they seem to be a long way ahead in terms of the number of autonomous miles they’ve driven, but there are something like 60 other companies in California approved to test autonomous vehicles.

- It seems reasonable to assume that considerably more regulation around privacy will appear over the next few years, as governments and regulators grapple with not only social media but also who owns the data from technology like AR glasses or self-driving cars.

- We’ve seen a huge amount of interest in the ethical implications of technology this year, with Uber getting into some regulatory trouble, and Facebook being co-opted by foreign governments for nefarious purposes. As software becomes more and more pervasive in people’s lives the ethical impact of what we all do becomes more and more profound.

- Researchers from IBM, the University of Waterloo, Canada, and the Technical University of Munich, Germany, have proved theoretically that quantum computers can solve certain problems faster than classical computers.

- We’re also seeing a lot of interest around human computer interaction – AR, VR, voice, neural interfaces. We had a presentation at QCon San Francisco from CTRL-labs, who are working on neural interfaces – in this case interpreting nerve signals – and they have working prototypes. Much like touch this could open up computing to another whole group of people.

Show Notes

- 02:13 Reisz: How do you keep your pulse on software?

- 02:16 Humble: One of the main methods I use to keep abreast of technology trends is through the software practitioners and news writers who contribute to InfoQ.com, both through their writing and when we meet at QCon conferences throughout the year. In addition to participating in the planning of some of the QCons, I attend developer meetups and other conferences.

- 03:28 Reisz: You’re hosting the Architectures You’ve Always Wondered About track at QCon London in March. What are some of the talks you have in there, so far?

- 03:34 Humble: The BBC is coming to talk about iPlayer, their catch-up service in the UK. A couple years ago, they talked about their microservices journey and moving to AWS. I’m hoping to get them to talk about the iPlayer client, written entirely in Javascript, and it’s a fascinating story.

- 04:06 We’ve got AirBnb coming, talking about their microservices journey and their experience running microservices in production.

- 04:19 John Graham-Cumming from Cloudflare will be talking about Cloudflare Workers, their Javascript-based, serverless execution environment.

- 04:33 There are a few others that aren’t quite confirmed yet, so I can’t discuss them, but it’s shaping up quite well. I’d like to get one more UK or London company, and maybe a Bay Area/Silicon Valley company to round it out.

- 04:48 Reisz: One of the things that stood out for me in 2018 was the pervasiveness of AI and how it’s affecting our lives now, and will be affecting our lives in the near future. One example is autonomous driving cars, and you can’t really talk about that without mentioning the Uber accident last March. It shows there’s lots of room for improvement here, but in 2016 there were approximately 34,000 deaths in North America alone from car accidents. Self-driving cars have a tremendous ability to make an impact on our daily lives.

- 05:48 Humble: I completely agree. The vast majority of accidents are caused by driver error, and eliminating that whole reason for accidents is a good thing. Ubiquitous autonomous vehicles are probably further away than many people imagine, but I do think that autonomy within certain constraints is still a big deal. There’s still a way to go, but there’s definitely a chance for these vehicles to save lives. There are also opportunities to help people with mobility issues, or live where there is a lack of public transportation. There will inevitably be accidents, but that shouldn’t make us blind to the fact that cars are pretty lethal anyway, and autonomous vehicles have the ability to improve that in a significant way.

- 07:18 Reisz: Fully autonomous vehicles catch the major headlines, but it’s going to augmenting people, especially in the short term. The human in the AI loop, where feedback allows the human to make better decisions.

- 07:43 Reisz: Waymo is now the first fully-autonomous car allowed to be on the roads in California, at least around Silicon Valley.

- 08:05 Humble: Waymo has done ten million autonomous miles, and a further seven billion simulated miles, since 2009. They seem to be a long way ahead in the amount of driving they have done, but there are about 60 other manufacturers that are approved to test autonomous vehicles in California. There is a lot of money and effort going on, just in the category of cars, but there is also work being done on trucks and drones.

- 09:04 Reisz: Another subject that’s been in the news, especially in Europe, is GDPR.

- 09:14 Humble: GDPR is the General Data Protection Regulation, announced by the European Union in April 2016, and came into effect a couple years later. There were some high profile companies that weren’t ready, including Pinterest’s Instapaper, Unroll.me, and Stardust. It’s a very complex piece of legislation, with the purpose of giving you the right to understand how your data is being used. We will need case law to understand how well it works in practice. It’s part of a movement by governments all over the western world to tackle the business of consumer data and privacy. We’ve seen bills passed in California and Vermont, and I think we’ll see more and more. Back to what we were just talking about, if you think the data that can be collected from social media records is a problem, imagine how much data we could collect from self-driving cars.

- 10:28 Reisz: Ford’s CEO was recently interviewed, and discussed the analytics they are doing around automobile financing as well as driving characteristics. He said about 100 million people have Ford vehicles, and there’s a huge case for monetizing those insights. If you add that to self-driving cars, it’s amazing the kind of insights we could potentially find from people’s habits.

- 11:04 Humble: I think we’re at a cusp in our industry of seeing a large quantity of legislation. Because we’ve been slow to put our own house in order, such as around ethically implications of software, it’s probably not a bad thing that legislators are starting to call attention to issue.

- 11:25 Humble: Something else that we’ve seen this year on InfoQ and at QCon was the ethical implications of technology. The broader point is, because software is becoming more ubiquitous in more people’s lives, the impacts you have if you’re working on software just become more profound. If one person throws litter out the car window, it doesn’t really matter, but if everyone does it, pretty soon the whole world is covered in rubbish. It’s the scale that is becoming interesting now.

- 12:30 Humble: In March, at QCon London, we had our ethics track, which was the first major conference that I know of that had an ethics track.

- 12:41 Reisz: As far as I know, that’s true. Ann Curry spun up a follow-on conference on ethics after that, but it was following QCon London.

- 13:05 Reisz: There was some news recently about Red Hat that I know you wanted to talk about.

- 13:09 Humble: Essentially IBM is acquiring Red Hat. Other than Oracle, IBM and Red Hat are two of the largest contributors to the Java platform. One thing that seems likely is that IBM will reduce the total number of people allocated to supporting Java. I also think it’s interesting in the general context of public clouds, because I think it’s something we can expect to see a bit more of in the next two or three years. Mergers and acquisitions in the cloud space are quite likely to happen. I wouldn’t be surprised if Oracle we to acquire somebody, or they could exit the public cloud business altogether. I have the sense that their cloud offering isn’t gaining enough traction in the market, and we’ve had some other companies exit the market (AT&T, Cisco, the former Hewlett-Packard), so there’s precedence.

- 14:07 Humble: I could be really contentious and say it’s quite interesting to see what Google does. Obviously, Google is one of the big players in the cloud space, but they’re quite a long way behind AWS, and they seem to be a long way behind Azure, as well. I wonder how much longer they will keep going. My guess is they will always have a bit of a niche with machine learning-type stuff, because of TensorFlow. Google almost invented the whole idea of cloud computing, and yet AWS, to me, seems to be very far ahead.

- 14:48 Reisz: Let’s put a pin in 2018 and look at 2019. What do you see as the major trends going forward into the coming year?

- 14:57 Humble: One of the things we talked about briefly last year was quantum computing. It’s still some way out, but it is still genuinely interesting.

- 15:07 Reisz: Before you go further, let’s level set. What is into quantum computing?

- 15:14 Humble: It’s very hard to describe in a small number of words. We published a series of articles by Holly Cummins of IBM (part one, part two, part three) which are brilliant, and are a much better explanation. Basically, quantum computers were purely theoretical twenty years ago, and they now exist. They exploit certain aspects of quantum mechanics. In particular superposition which has to do with spin and that an observed spin is in one of two states. The other bit has to do with shared properties of entanglement. You can observe one electron, and that tells you the state of the other electron, over long distances, up to 1.3km.

- 15:51 Humble: The key thing to understand is that quantum computers can deal with certain kinds of problems much faster or, in some cases, more effectively, than classical computers. In fact, we had a formal proof of that this year. You tend to hear it talked about a lot in the context of cryptography because we rely on asymmetric keys, and, in theory, quantum computers can break asymmetric keys, so we’ll need new ways of securing stuff. But there are several categories of problems, including financial risk management, logistics, or medical research, which require high levels of computing power.

- 16:55 Humble: There are several companies exploring this space. IBM has their Q machines, which you can access via IBM’s cloud, to run real experiments on real quantum computers. Google launched a Python open library called Cirq, and Microsoft has Q#.

- 17:30 Reisz: Are these all new languages to target quantum processors?

- 17:39 Humble: IBM has a Python library, as does Google. Python seems to be one of the languages being used, but Microsoft has created its own language in Q#. I don’t know how that will go, but I suspect we will need new languages to describe what we’re trying to do, and Python isn’t a bad place to start. I think it’s genuinely exciting that you can go to GitHub, download some code and then run it on real quantum computers in IBM’s data centers. For corporations, it also means you don’t have to invest in building and maintaining these complex machines, because you can just access them through the cloud.

- 19:29 Humble: What are some of the forward-looking things you’ve been thinking about? I know you’ve been thinking a lot about machine learning.

- 19:35 Reisz: What’s old is new might be a good way of phrasing things. Back in 2016 Netflix talked about Open Connect, and how they worked with ISPs to stage these boxes all around the world. Most of the content being served to clients all around the world was via this CDN. This facilitated the Netflix Everywhere deployment when content was made simultaneously available to 190 countries.

- 20:27 Reisz: I see the pattern of pushing logic out to the edge and taking it off the cloud resources. That’s particularly true for ML and AI. Mike Lee Williams of Cloudera, one of our committee members for QCon AI, released a report talking about federated machine learning. It ties some of the privacy concerns of GDPR into edge and machine learning. Federated learning allows you to use your phone or other IOT devices to run a machine learning algorithm using training data specific to that device, and then upload the model to a central server and then aggregate those models together.

- 21:36 Humble: So what does that mean and what are the benefits?

- 21:38 Reisz: It means taking some of the processing power off the centralized servers and pushing it down to edge devices. To handle the privacy implications, instead of uploading personal data, you keep the personal data local and only upload the trained model to the server.

- 22:03 There are some other interesting cases for edge computing outside machine learning. At QCon New York, Chick-Fil-A talked about what they’re doing with edge computing. Statistica said by 2025 there are going to be 75 billion IOT devices connected to the internet. I think it’s very compelling and forward-looking to start leveraging the edge, and being able to push machine learning and different functions down to those devices, and then aggregate them up.

- 22:48 Humble: We’ve known for a while that if you train models using big machines, that you can then push those models down to small devices to run them. Doing that the other way is really fascinating. The most recent Apple devices have a neural engine as part of the A12 chip, which is exactly for this idea of being able to run things and push it back up. In Apple’s case, it’s partly around privacy.

- 23:20 Humble: Something else we’re seeing with machine learning, as we’re putting the tooling into the hands of more developers, the applications we’re seeing are really fascinating. We know about use cases with image processing, such as interpreting radiology scans. Some of the opportunities with wearables are also compelling, like being able to combine wearable health tech with machine learning. We’re really just scratching the surface of what machine learning can do.

- 24:32 Humble: It would be remiss of us to not talk about microservices.

- 24:43 Reisz: Two or three years ago, when we talked about microservices at QCon, we were talking about how to decompose the monolith. Where do we set our bounded contexts? How do we decompose this thing into services? We were still asking, “What is ‘micro’ in a microservice?” Now I hear a lot more of the conversation being around how to operate microservices. How do we properly setup north-south and east-west communication among services? How do we properly secure things? How do we handle observability? When you get to a certain point with microservices, the discussion is now, “How do we enable this?”

- 25:27 Reisz: Kubernetes seems to have won the battle when it comes to orchestration. People are now building on top of Kubernetes as a given piece of infrastructure. We’re seeing a lot of work with service meshes. If cloud removed the undifferentiated heavy lifting of building apps on infrastructure, I think service meshes are starting to remove the undifferentiated heavy lifting in writing applications. It does dynamic routing. It gives you resilience with circuit breakers. It provides a best-in-class observability experience. Some of the names we hear about in that space are Envoy, Hashicorp’s Consul Connect, Bouyant’s Linkerd 2, and Istio. One interesting one is Cilium, which works in the kernel space, instead of the user space.

- 27:35 Humble: We’re also seeing some definite pushback against microservices.

- 27:38 Reisz: Microservices solve the specific problem of developer and deployment velocity, when people are stepping on each other’s toes. If you’re not having those problems, microservices may not be the right answer; monoliths are perfectly fine. You do hear counter-arguments from people now saying, “microservices were wrong for us.”

- 28:11 Reisz: At our heart as developers are languages. What trends are you seeing for languages in 2019, and is there a language of choice?

- 28:24 Humble: Java isn’t going anywhere. C# and .NET isn’t going anywhere, either. There’s still a tremendous amount of interest in those languages on InfoQ, and I think that will continue to be true. In terms of the other languages we’re seeing interest in, Go is one. We had a popular piece recently about Go and microservices at The Economist. We also see a lot of interest in Rust. If you’re doing anything that’s performance-sensitive, Rust is a pretty good alternative to C++. I’m personally very interested in Swift. My ten-year-old is a big fan of the iPad, and he wants to learn Swift, so I’ve been learning it with him. Kotlin, on the JVM space, is similar to Swift because it’s found its niche for doing mobile development. Python has also been growing really rapidly. It’s not new, about the same age as Java, but it’s really taking off, driven mostly by data science.

- 30:02 Reisz: I know you have an interest in augmented reality and virtual reality. What’s coming up in 2019 with AR and VR?

- 30:14 Humble: In AR, we don’t have the form factor, yet. I think it’s likely to be eyeglasses, but I don’t think anyone is quite ready with the tech. I don’t think Apple gets enough credit for building really small computers. If you think of AirPods or the Apple Pencil, they are really discrete computing devices that we don’t really think of as computers. That works well for the idea that if you’re going to build an AR device, it has to be small and discrete. There’s also the whole issue with privacy. We learned from Google Glass that you must have a strong privacy story if this stuff is going to work for you. I think we know where it’s going, but we aren’t there yet.

- 31:13 Humble: In more general terms, there’s so much happening around human-computer interaction, with voice, AR, and neural interfaces. Adam Berenzweig from CTRL-labs gave a presentation at QCon San Francisco talking about their wrist device that picks up nerve signals. I think that’s interesting because the same way that touch opened up computers to a class of people who couldn’t use them before, neural interfaces may be able to do that for people for whom touch isn’t an option. It can also be a benefit for everyday users who start to suffer from repetitive stress injuries, to allow alternative input options. That whole space is a long way out, but I find it all fascinating. In the same way we had the touchpad shift, I think there will be another shift coming.

Resources

Holly Cummins articles on Quantum Computing (part one, part two, part three)

Rethinking HCI with Neural Interfaces @CTRLlabsco

More about our podcasts

You can keep up-to-date with the podcasts via our RSS Feed, and they are available via SoundCloud and iTunes. From this page you also have access to our recorded show notes. They all have clickable links that will take you directly to that part of the audio.

Previous podcasts

Related Editorial

MMS • RSS

Article originally posted on Data Science Central. Visit Data Science Central

The past few years have seen a wave of travel companies member accounts compromised which have rocked the industry.

Cathay Pacific had 9.4M accounts compromised.

British Airways had 380,000 card payments breached.

Raddison hotels had up to 10% loyalty program accounts compromised.

Hyatt twice had malware embedded into payment systems that had a bunch of data compromised.

IHG had s string of hotels data compromised between 2016-2017

Marriot beat them all with 500M loyalty accounts compromised in a recent data breach.

While the worrying trend of personal data being accessible to hackers, unsavoury characters and the dark web continues to grow; there is potentially a much larger, scarier secret these companies are not disclosing to the public in the wake of the data leaks.

Has personally identifiable behavioural or consumer insights data been exposed?

What’s the big deal?

You may have heard the phrase data is the new oil, which implies that what businesses can extract from personal data is so rich and of high value that it’s what underpins the commercial models of technology companies.

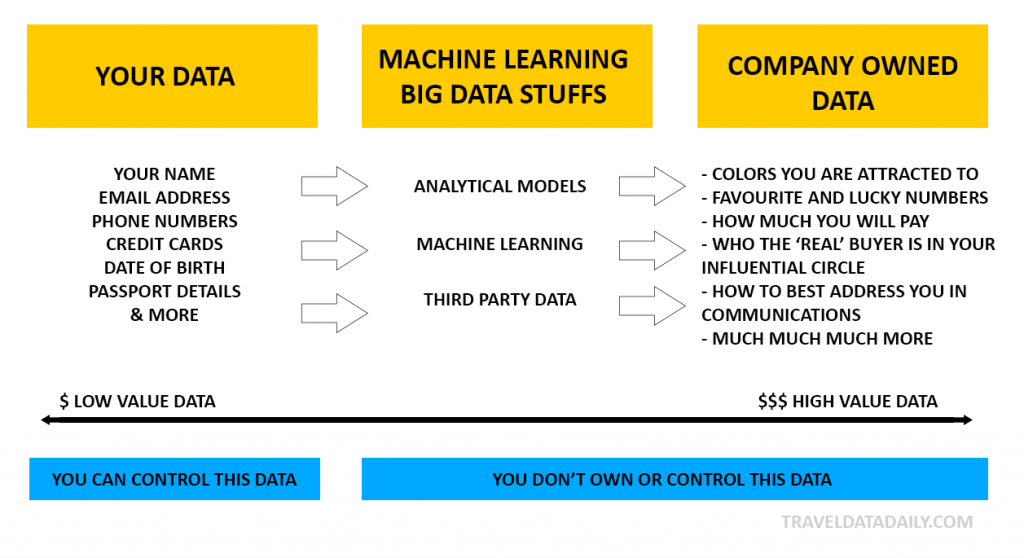

Consumers are lead to believe that it’s their data which businesses are monetising, selling it off to advertisers, emailing you personalised offers, and displaying relevant advertisements. That is not entirely true.

Personal consumer data such as your name, email address, phone number etc. are details which you as an individual, own. The privacy protections around GDPR and other global privacy laws are in place to restrict how companies collect, store and share your personally identifiable information with third parties. The concept is to give you greater perceived control over what happens with your information. That is true.

If businesses are not selling access to your personal information to external parties, and yet data is touted as ‘the new oil’, then how the heck are big organisations generating revenue from data?

The real value of data and how it’s used to make you buy more products.

Data that you input into a website or app that is created by you is your data. That may include your name, email address, gender, date of birth, credit card numbers and so on. Let’s call the data ‘personal ownership’.

Traditional advertising and CRM targeting focus on basics such as – “let’s show advertisement X to all males between 24 and 30 years of age who live in Los Angeles”. Advertising in this way works – but it’s nothing compared to where the real money is in the business.

Next, we have dark data, which is the hidden, and often utilized data which can be how many failed logins a user has on a website, the number of times a user visits a site on any given day, what types of airline tickets they are searching for, what web browser or mobile phone they use, etc.

However, the real cash is in behavioural data. That is – knowing WHY a consumer clicks on a link or transacts with a brand. What was the intent, or the primary driving factor behind the engagement?

Consumers do not own their behavioural insights. These insights are derived from data science teams at the organisations who have invested time creating machine learning models to identify traits, trends and use success metrics to better predict future intent behaviour on similar consumers.

Now armed with the behavioural data, companies can hyper-personalised the content you see, the marketing emails you receive, and the prices displayed. In this sense, companies can provide the right incentive to the right person at the right time. The ‘How Big Data is Changing the way we fly‘ explains how some airlines are feeding in behavioural data into their internet booking engines.

Your personal information, while interesting – is not that important. It’s an identifier of sorts and tells us who you are. As more is learned about who you are, what you click on, what you do and don’t interact with – you are segmented into multiple bucks of user behavioural types. Typically, organisations at this level of data analytics will not provide consumers with any algorithmic transparency.

Why is behavioural data valuable?

If a business knows what your primary drivers are in given situations, it’s possible to exploit your motivations and leverage them to drive a particular agenda.

In the 2015 movie ‘Focus’ starring Will Smith, his character is a clued-up con man who lives and dies by the game. Smith’s character in it for the long haul and keeps his eye on the ultimate long-term goal throughout the entire movie.

[Spoiler Alert] – The long-con involves a big-time gambler who has his day seeded with the ‘lucky’ number 55. Smith’s team position themselves in key areas of the gamblers life holding signs and objects with the number ‘55’ subtly placed everywhere. The number is plastered EVERYWHERE in the gamblers life before the final scenes. The branding for the number 55 is therefore implanted into the gamblers head in the leadup to the big event, and when it comes time to pick a number – guess which number he bets on?

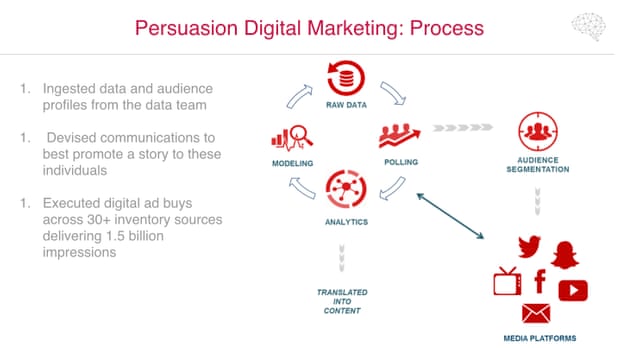

In the 2016 US Federal elections, Cambridge Analytica came under scrutiny for assisting political campaigns by using Facebook behavioural data to drive users to specifically designed landing pages that would appeal to them.

One Facebook member may see “Trump supports the right to arms”, and another user may see “Trump to enforce stricter gun controls”. The entire concept was to garner support for Trump – but the messaging is delivered on an individual basis specifically to what motivates that person to click, engage or share.

Knowing what type (or segment) of user clicked on each link type let Cambridge Analytica know what important interests and motivations each Facebook user have, what made them click, share and like certain pages – even those not politically inclined.

Photograph: Cambridge Analytica

As you can see – the power of knowing behavioural influence data is supremely powerful. Many companies are training their models to be able to identify specific ‘look-a-like’ behavioural patterns, and this leads to a greater risk of your personally identifiable actionable insights (aka: all about you), being exposed when company data is leaked or compromised.

These companies don’t need to disclose if insights data is compromised in the same way they do if your personal information has been compromised. If leaks are disclosed to the public, the company also does not need to disclose what anonymization or transparency (if any) was involved in the modelling process.

Basic behavioural insights include:

– Which marketing channels a type of user is most likely to purchase via

– Which complimentary ancillary/upsells a user is most likely to purchase using propensity modelling

– Your favourite drink or meal on a flight

Advanced behavioural insights include:

– How to address a user in communication (Hello Name, Dear Name, etc..)

– Whether to target marketing communication directed to that user, or to the key person of influence in their buying circle (ie: target emails to the wife who may have ultimate influence over the husband who is seen as the frequent flyer)

– Your lucky numbers or other numbers which you have a strong affinity toward

KNOWING HOW TO MAKE A CONSUMER BUY YOUR PRODUCT, BY USING THE WHY A CONSUMER WILL BUY YOUR PRODUCT IS THE HOLY GRAIL OF MARKETING.

Getting to the HOW and WHY can be achieved after analysing key insights, which is extracted from, or the Dark Data are hidden within standard data sets, third party data, and consistent machine learning over time which looks for patterns in similar user types.

Should consumers be concerned their data has been exposed? Absolutely.

However, to understand the actual extent of the data breaches has on consumers, we need to know the full extent of every piece of data which was compromised.

Hotels and airlines ARE using your personal data to change your behaviour and drive specific transactional and non-transactional outcomes. While it can be a positive experience with highly personalised deals – it’s important to keep in mind that – you don’t own that data.

MMS • RSS

Article originally posted on InfoQ. Visit InfoQ

Transcript

Hey, everybody. Thanks for coming. I appreciate everybody coming to the after lunch slot. It’s always a risky one because everybody else is pretty full and ready to nap, you know. But hopefully, we have a pretty fun presentation in store for you.

So today I’m going to be talking about connecting, managing, observing and securing microservices and that’s a big mouthful, and obviously any single one of those topics is more than enough for an hour-long talk so we’re going to be doing kind of a whirlwind tour of some of the problem space, the shape of some of the solutions to help connect manage, observe and secure services and then we’re going to do a deep dive into Istio itself which is the particular service mesh that I work on.

Intro

So just a little bit about me. You know, I’m one of the core contributors at Istio. Just left to help start a company that’s building on top of the project now. And before that, I worked on the project at Google.

So our high-level agenda: What is the problem? What’s the shape of the solution? What are some service meshes that are around that you could use today and then we’ll talk a little bit about maybe when one might be more appropriate than another. And then we’ll do the deep dive into Istio itself and in the end we’ll have a nice demo to show some of the functionality that I’m talking about.

The Problem

So this is kind of the motivating problem statement for service meshes in general and for a lot of technology that’s cloud data today. Our shift to a modern architecture leaves us unable to connect monitor, manage or secure. That’s the fundamental problem. And so what do I mean by this modern distributed architecture? We mean that we’re moving away from monoliths and into services. So there’s a lot more points of contact. Whereas before with the monolith I might have one or two front doors to get into the system, when I have a set of services there’s doors everywhere into various things.

We are deploying them into more dynamic environments. This is both a really good thing and a very painful thing. So these dynamic, you know, fluid scaling environments that cloud providers can give us are incredible. If you heard the talk that was in the ballroom I guess two before mine, that was a perfect intro. Andrew [McVeigh] talked about a lot of the challenges of building microservices at scale. And these dynamic environments are a key piece of that, things like Kubernetes and Mesos and Nomad and other orchestrators. Let you take use of this flexible capacity but the problem is you have to architect your entire system around this change that’s now introduced. With old style architectures, I worked trying to oil pipe one, and we ran five services. We had a service-oriented architecture but I could walk into our server room and I could point to each of the servers that ran our services. Right? And that doesn’t work when compute scales. You can’t go point to the box that runs it.

And then the final piece of this and in my opinion, this is actually the thing that causes the most developer pain, is that our applications are now composed with the network. And the network is really the glue that sticks our applications together, whereas before in the world of monoliths, we maybe had one process, one giant binary that we could debug through. And really this is a failure of tooling and this is a large part of the reason in my opinion that it’s so hard to move to micro service-based architectures because the tools that we’re used to using to diagnose problems really don’t apply in the same way because they don’t know how to navigate the network. How do I trace a request across a set of 10 services? You know, that’s hard to do. How do I trace a call through 10 processes on a single machine, is hard enough today with our tooling, and now you make them distributed and it’s an incredible amount of complexity to add. But we have to take on that complexity so that we can increase our velocity, move faster and actually ship features, right. So we have to take on that pain to achieve our business goals.

Connect

So what exactly are the things that I’m talking about when I say connect, manage, monitor and secure? When we talk about connections, we want to make an application developer not think about the network, and that’s really hard when now the network is the thing that glues all of your application together. Right? And today if you go and look at dead services you’ll see a lot of patterns like who has written a FOR loop to do retries? Yes. So who has gotten it wrong and then DOSed another service? And fixing that is a pain, right, because you have to go redeploy your code, and redeploying code is risky and hard. That’s never a good thing. So we want things like resiliency, things like retry, circuit breaking, timeouts, I want to do lame ducking, I want to not talk to a back end that’s giving me bad responses. And really I shouldn’t have to build that into my application.

Second big piece of connectivity is service discovery. How do I even know the things that I’m talking to and where they live? You know, and today there are solutions like DNS that are widely used that come with a lot of problems again, because we have this dynamic environment. DNS caches are kind of our enemy. How many people have had problems with DNS caching that results in request going to bad services that should be out of rotation?

And that could be hard to fix. And similarly, we want load balancing everywhere, because that’s really a key to building a robust and resilient system is being able to shift load easily. And ideally, we would like our load balancing to be client side. I would like my clients to be smart enough to be able to pick the right destination, because that means that I could have more efficient network topologies, right. If I have to run a middle proxy that does load balancing, it means that it becomes a point in my network where all this traffic has to flow through, and I would really like to keep traffic point to point where I can keep nice, simple, efficient networks, if I can. And so the client side load balancing is a really valuable tool to help with that.

Monitoring

Next, we want to do monitoring. This is just what’s going on. This gets really to the heart of where I think most of our developer pain is today, which is I have this thing, I have this system and it’s got all these moving parts. What’s it like, what’s it doing? And there we need metrics and we need logs and ideally, we want traces too so that we can actually look at the path of any particular request through our system.

And it’s not enough just to have metrics and logging. You really need consistent metrics and logging with similar dimensions, with the same semantics for those dimensions everywhere because when we have metrics for example that are ad hoc per service, it becomes impossible to build a higher level system on top of those metrics. If you use something like a service mesh that can give uniform metrics everywhere, it becomes possible to do things like build alerting on top of those predefined metrics that your entire organization can use. Hey, I’m spinning up a new service. I need to wire up alerts for it. You go, “Oh, cool. Here’s the templates already, you know. Here, we already have our metrics. You’re ready to go.”

And it really reduces the effort required to spin up new services, the effort required to monitor existing ones. This is one of the key features of a service mesh that we’ll come back to which is just this consistency because a different system is handling these core requirements rather than every individual application doing it.

Manage

We want to be able to manage the traffic in our system, and we want to manage not just where it goes, where it gets routed to but how it does that and really we want to be able to apply policy to that traffic. I want to be able to look at L7 attributes of a request and decide whether or not my application is going to serve that request or not. And there’s a ton of different use cases there. So you can think about maybe some API gateway use cases around things like coding, rate limiting, off. You can even phrase authn/z, you know. Is the service allowed to talk to another service is a perfect example of policy that applies to an entire fleet that you can use a service mesh to help you implement.

And when I talk about traffic control a lot of the industry today does L4 load balancing and L4 traffic control. So we really want to move that up to be application aware. I want to be able to make load balancing decisions based on application load on the health of my actual service. Not necessarily how much CPU or RAM or whatever it’s using because those may or may not actually be correlated depending on what my use case is.

Secure

And then a final big piece that we need now and this gets back to some of this decomposing of the monolith into individual pieces. Security becomes a lot harder. So before, typically, I would have one entry point into my monolith, and we can lock down and firewall everything else, and network traffic is locked down and I can apply network policies at L3 and L4 to make sure that things can’t talk to each other that should. The problem is all of that is focused on the network identity of a workload. What is the IP:port pair, what is the machine that’s running it? That’s what all of our network security is used to dealing with. Except that I just said, “Hey, we’re in this new dynamic environment where things change all the time.” And that IP and port that’s hosting one application may not be hosting at the next. That’s a lot for existing networking tooling to keep up with. If we look at some of the early CNI implementations in Kubernetes as an example, they suffered all kinds of problems with crashing kernels and that kind of thing because it was doing IP tables to update which hosts on the network were allowed to talk to each other ones.

And that was kind of an abuse of that system to provide a valuable feature set and since then a lot of CNI plugins have moved away from that model, but fundamentally a lot of the network level tooling that we’re used to dealing with is not built to cope with the higher rate of change of the applications today.

So we want to be able to assign an identity that is tied to the application, not to the host, the thing that’s running on the network. I want my identity and I want the policy that I write about what two services are allowed to talk to each other for example, to be in terms of the services and not in terms of the IP address that happens to host that service right now.

And then with the goal with moving away from that reachability is authorization model, right. Kind of in vogue today is the zero trust networks and Google’s Beyond Corp. That’s kind of the stuff that I’m talking about here, when we can start to move identity out of the network and into some higher level construct that’s tied to an application.

The Service Mesh

So, the goal of a service mesh is to move those four key areas out of your applications, out of thick frameworks and into something else. And that something else depends on which implementation you pick. A common service message today that people use is just Envoy proxies, right. So we write up some config for how Envoy is going to proxy for my service, and we put an Envoy beside every instance. And you can get a lot of the traffic control, you can get a lot of the resiliency features that I talked about, the telemetry out of that system.

You could do something like Linkerd which is again another service mesh that works in a similar way to what Envoy does now, where you put a proxy beside every single workload, it intercepts all the network traffic, and allows you to provide this feature set. And we’ll go into depth about how that architecture works and then how those things happen.

And then Istio is the third service mesh that provides these features that I talked about. One of the other key goals that I didn’t talk about in those four is the consistency. I want metrics, I want security, I want policy – each of those four categories I want to be consistent across all of my services. I want to retry policy to be consistent. All of that. I would like to be able to control it in one place and this is a key enabler for velocity. When we add in a service mesh, we can delegate another team to handle a lot of these cross-cutting concerns and move them out of the realm of concern of an application developer.

So a single central team can do things like manage the traffic health and the network health of your infrastructure, and individual developers don’t need to worry about that. It’s a huge force multiplier. Central control is a huge force multiplier to let an organization do more by moving things out of the view of developers.

And then finally another key feature of all of these systems are fast to change. Change on the order of config updates, not binary pushes. And that’s a really, really key feature just for that DOS use case that I talked about. I have on multiple occasions DOSed another service with a bad retry loop. It’s really nice to be able to push config to change that and not have to go get a release in and cut a new binary.

Istio

So Istio is a platform that does that. It is a service mesh that implements a lot of this capability. And so let’s talk about kind of in detail how it works. So this is going to be our mental model. It’s real, it’s about as simple as it can be. A just wants to call B over the network. We don’t really have to care what. It can be HTTP rest request, it could be TCP bundle over TLS. It really doesn’t matter. It’s just a network call. If it’s a protocol that we can understand like HTTP for example, then we can get more interesting data about it to show the user and I’m going to demo a lot of this stuff. I’m going to demo a lot of the telemetry and that kind of thing in at the end of the talk and we’ll get to see some of what I’m talking about. But this is just our model.

How Istio Works

So how do we start to build this kind of mesh to get this functionality that I’ve talked about? The first thing that we’re going to do is put a proxy beside every single workload that’s deployed in our system. We call this a sidecar proxy. Istio uses Envoy as its proxy of choice. Envoy was specifically built with this use case in mind. So if we think about like a traditional nginx that we use for load balancing in our system, it has a very different set of requirements than a proxy that we’re going to put beside every single application. It’s a lot bigger, the rate of change is a lot slower, you’re handling substantially more traffic typically. You want to make a different set of tradeoffs somewhere when we’re building in this sidecar style model. We want a lighter weight proxy. Keeping the footprint small becomes very important. We want it to be highly dynamic because again that’s one of the big requirements of the system that we’re deploying into is that things change quickly. We need to be able to update the configuration of our proxies very quickly as well. And so Envoy was built by Matt Klein and the team at Lyft to address these particular use cases.

Secondly, then we need to start to get configuration into the system. So Istio has this component called Galley. It’s responsible for taking configuration, validating it and then distributing it to the other Istio components.

So we had these sidecars there. They actually need some config to do something. In our system, if we just have static sidecars that sit there it doesn’t give us a lot. So we deploy this Pilot component of Istio, which is responsible for understanding the topology of our deployment and what’s the network loop like, and pushing that information into the sidecar so they can actually do routing at runtime.

We want to perform policy at runtime like I mentioned. We want to be able to do things like service to service authentication. I want to do things like rate limiting. Ideally, I might even want to do things like end user off in Mixer. So that’s one of those things that invariably in every organization winds up being a library that you include. How do you handle your end user credentials? How do you authenticate them and how do you authorize them for access? Hopefully, all of your services do it the same way. And they probably use a library to do that. This is another key location where, hey, that’s logic that we can pull out and into our service mesh. Things that are horizontal that cut across our entire fleet, we can pull into our service mesh, implement at one time.

And if I have Mixer here, in this case, doing the off policy on the end user request, for example, I don’t need my application developers to worry about it. And this is one of the other kind of core features of a service mesh as opposed to other approaches to get this functionality, because service meshes are not necessarily new. There’s been things like Finagle that had been around for quite a while that Twitter uses. There’s Netflix and Hystrix and that family of things. Those are all very service mesh-like libraries and frameworks. The problem is they’re all language specific. Using a proxy to implement it, it being the network to the application lets you sidestep that language dependency so you can provide all this functionality in a language agnostic way.

And again, this is one of those things that helps boost developer productivity. It becomes one less hurdle to use a new language in your environment for example, because a lot of the core cross-cutting functionality which has traditionally been implemented as libraries can move out into a language agnostic format. Huge benefit.

And then finally there’s a component of Istio called Citadel that’s responsible for provisioning workload identity, that L7 identity that’s tied to the application. Citadel is responsible for provisioning that at runtime and that looks like a certificate. That’s an X509 cert and rotating it frequently.

So how does our request actually flow through the system? If we go back to the very beginning, A just wants to call B. We’ve set up all this machinery, these Envoy sidecars, the control plane is running static and A is used to call the B. So the first thing that happens is that locally the client sidecar traps that request. That can be done in a variety of ways but so far Istio typically uses IP tables to do this redirect. And so it traps all the traffic in and out. Envoy then inspects that request that looks at the metadata, the L7 request. If this is an HTTP call we’ll look at the host header to determine routing. And Envoy then takes this opportunity to make a client-side routing decision, and picks the B instance that we’re actually going to send the request to. And Pilot ahead of time programmed all this data into the Envoy. So any given Envoy in the context of a request knows all the other endpoints and can make an immediate decision and route. We don’t need to do DNS lookups, we’re not out of path. You can then immediately route.

So we pick a B. We forward our call to B. We don’t necessarily know that there’s a client-side Envoy or server side Envoy in this case. We don’t know that there’s a sidecar there or not. Part of the goal of the system is to be transparent. But in this case, we do have one that catches it on the other side.

Now receiving – this is where I want to apply policy. So this is actually in request path. I’m going to block. I’m going to go talk to Mixer and I’m going to say, “Hey, here’s this request that I just got. Here’s the bundle of the data about it. Do I let it through or not? Make a decision.” And this is the point where you can do things like implement your own authn/z. If you’re doing things like a header based token for off that’s easy enough to pull that out of the request and do authentication or authorization. You can do things like rate limiting here. Again, anything you might want central control over is a good fit for policy in Istio.

And Mixer makes a thumbs up, thumbs down decision. That’s really at the end of the day. Envoy says, “Do I let it through or not?” And Mixer just replies with “yes”, “no” and a cache key. So obviously it would be prohibitively expensive to call out to Mixer for every single request that comes into the system because that doubles the traffic in your system, that’s not feasible. And so, instead, it turns out that a lot of service-to-service communication, and even end-user-to-service communication has a lot of properties that make it very cacheable. Typically any given client has a very high temporal locality. If you’re going to access my API, you’re probably going to access it a whole bunch in a short amount of time and then you’re going to go away and not come back for a long time. And then you’re going to come back and access it a whole bunch.

Very admitable to caching and you can actually see cached rates, above 90%. So rather than doubling the traffic in your system by making these policy calls instead, you’re talking about a much smaller increase, maybe 10%.

So Mixer gives a thumbs up. We say, “Yes, cool. Let the request through.” And so the sidecar there will send the request back into the application that’s behind it. The application will do whatever business logic it needs to build its response. Maybe that’s calling other services down the graph, maybe it’s going to a database. Maybe it just has the answer in hand.

But it will send that response back. And then asynchronously and out of band, both the client and the server side sidecar will report back telemetry. And that’s awesome because it means that we get a complete picture of what happened from the client’s perspective and from the server’s perspective which is massive for debugging. It’s so frustrating, we typically only ever have the server side metrics. As somebody that’s producing a service, I always worked on back-end services myself. I always had my server-side metrics and there’s so many times the problem is either in the middle or on the client. Having a single system that gives us both sides of that is massive for debugging. It’s so awesome.

Architecture

So here’s a little bit nicer picture of the architecture that we just walked through with all of the components labeled. And again like I said, Pilot is for config to the sidecars, Mixer is for telemetry and for policy, Citadel is for identity and Galley is for configuring everything.

Demo

So with that we’re actually going to dive into a demo, and I’m going to just kind of show you some of what I’m talking about with the service mesh and we’ll see some of the telemetry, we’ll see some of the traffic control. So let me frame our demo. If anybody has played with Istio before, you’ve seen the bookinfo app. It is the canonical test application that we use for Istio. And this is what the deployment looks like. We have a product page that’s the UI that renders a book. It calls the detail service to get some details about the book, and it calls some version of the review service to get a review for that book.

Finally, two of the reviews calls the rating service. So this is what we’re just going to deploy. I’ll just do [inaudible] and apply in a second. Where we’re going with it is we’re going to use Istio to split it up and deploy it across clusters. So this is a use case that I’ve had quite a few different users talk about, which is we need to deploy it across availability zones as an HA requirement for example. That’s a pretty frequent one. I need to run in two availability zones as an HA setup and that’s a blocker for my merger and acquisition, for example.

So what we’re going to do is go from this setup to that setup with no errors. We should see a 100% 200s the entire time and it should be seamless, the application. We’re not going to touch the applications that are deployed at all.

So with luck, it’ll go smoothly. […] Let me go to my cheat sheet real quick and make sure that I’m set up correctly. So just real quick so that everybody can see what I’m doing. I’m just going to alias throughout this presentation. I’m going to use KA and KB because I’m going to be typing and to type coop control in the context is ridiculous. So KA goes to cluster A, KB goes to cluster B.

And then let’s go ahead and get Istio. Istio, I’ve already installed actually. Sorry you can never type when you’re on stage. And so just to prove that I’ve installed this ahead of time already. So this is just a stock Istio installed. This is actually the Istio demo script. One small change that I’ll touch on later is I do have a core DNS deployed and we’ll get into that. And KB is the same. So if I grab this, we can see the same and notice we have just this one external IP address. That’s our ingress into the remote cluster. So that’s our proxy that’s running ingress.

So let’s go ahead and deploy the book info in the cluster A. Again this is just the standard stock Istio looking for deployment and similarly … (This is a proof that you know the demo is live is that something breaks. Sorry. One second.) I tried to bypass some of my setup. So all I did was just run a little script that went and pulled the IP addresses that we’re going to need later for me, and wrote them into some files including … Sorry about that. So now let’s verify that our product page was actually deployed successfully. All right, so we’re deployed. We’re there. We have our ingress.

Sorry about this, guys. It’s fun debugging. Let me pull up the ingress. Actually it did work before, I promise. So we’re just going to do this by hand to verify, and then we can do the nuclear option which is just blow up the pod. (So sorry about that.) And then there’s one more little bit of setup that we’re going to do if I find my script. This is all because I didn’t correctly tear down my cluster last time. I’ll go into detail about what this is in a second but I’m just doing this to set up things.

We’re using the CW tool that we’re using is this a tool that produces Istio config for us. And we’ll dive in detail what it does. But this now gets everything working. So we have book info with reviews. Awesome. We’re at the start of the demo now. So let’s go ahead and start driving some traffic to the deployment. I’m going to show you some stats in a second. So all I’m going to do is just start a loop that’s just curling the websites, we get some traffic in the background. And then I am also going to go ahead and set up Grafana to run. So in the Istio demo deployments, Istio ships with a Grafana dashboard that lets us see all these consistent metrics that I talked about.

One more command. You can tell that this is a fresh demo. This is the first time giving this particular demo. Awesome. This one’s not on me. This is the port forward. Now we get our Grafana dashboards. So this is the stock Istio dashboard so if you’ve looked at Istio before you would see it. And here we can clearly see the set of services that we’ve deployed. We can see the small amount of traffic that we’re sending to it right now – about 10. And then we can go and look at any individual service that we have deployed. Again, this is the advantage of having these pre-canned metrics that are homogenous everywhere. I can do things like define one dashboard that plugs into those metrics that provides useful and interesting data like total request volume, the success rate, our latencies, these critical metrics for actually diagnosing the health of a service. Out of the box without having to add them to the application itself. And we can see both the client side and the server side. We don’t have any … sorry, I guess we do in this case.

So these dashboards, awesome, free, out of the box. We’re now in this state. So let’s go ahead and start to migrate things over. So if we’re moving to this end destination, step one is going to be just to migrate details over first. So we’ll go incrementally one service at a time. We’ll ship them over and the big thing that we want to watch, we can actually see our global success rate. This is aggregate any 500 across the entire system will trigger something there. So we expect that to stay at a 100 as we do our traffic shifting. And then let’s go ahead and start doing that. So the first thing that we’re going to do is deploy the detail service into our second cluster.

And if we get the service we see it’s deployed. Now let’s talk about the CW tool that I was going to demo. So Istio gives us the tools in hand to shift this stuff around basically playing some shell games with how names resolve. The problem is it’s the config to write this, to do these kinds of traffic shifts and Istio’s super tedious to write. It’s exactly the same every single time but it’s multiple config documents that you need to produce together. So the CW tool that we’re going to be using, it’s called Cardimapal, is something that my team built, and all it does is just generate the Istio config for a couple of different use cases. The reason I’m talking about it now is because in order to generate this config, we need a little bit of auxiliary data. So the first thing that we need is a representation of our clusters. So I mentioned before that we have A and B.

Here is our A and B clusters and in particular, these addresses are those IP addresses of the ingresses that I talked about earlier. So we’re going to communicate between our clusters over the internet via the ingress, and we can rely on Istio TLS to keep that secure. So Citadel provisions identities. Those identities are used to do mutual TLS between workloads. So we can over the internet just go in through the ingress and that’s fine. We sub the same route of trust ahead of time and so the workloads trust each other, and we can just do TLS all the way through. Not need to worry about setting up a VPN or any other kind of complicated setup that we might have to do otherwise.

The second thing that we need is a small representation of the service. Enough to be able to generate some configuration to talk to it. Here’s the name, the product page service, how we call it, the ports. And this backends bit here lines up with the clusters in A. So if I had product page deployed in B for example or I just deployed details into B, I can go ahead and add this as a backend.

So all I’m doing is updating this little model of our detail service and I’m saying, “Hey, it’s deployed both into cluster A and it’s deployed into cluster B. And it’s the details that default Kubernetes service name. That’s the actual address of the service in the cluster. And so now we can go and generate some of that tedious config to wire up our cluster so that this ingress works, details us here and then we’ll wire up some config on A side to let it know that cluster B exists.

So this time we’ll say, “Hey, Cardimapal generate config for cluster B and I care about the detail service.” So this just spat out a bunch of yammo. Let me pull it over somewhere where we can actually look and see what it does. So there’s three key pieces of config that we have here. The first is our gateway. How do we actually get into this detail service? And this just says, “Hey, run on the normal SEO gateway and use the details like global name.” This is going to be the name I expect a client to call with this global name to ingress.

I defined routing for that. I say, “Hey, by the way, at that gateway that we created, if you see these two hosts go ahead and just send it to the detail service.” I had a typo here in my services And then finally we have a service entry that just says, “Hey, by the way …”

By the way, this service entry, I’m going to define this name, these two names and just resolve them to the Kubernetes service really. So it’s just basically playing a shell game, creating a new name for our Kubernetes service. And our application already talks to this thing. And the reason that we want to do this that we want to decouple naming from the Kubernetes names is it allows us to do things like shift traffic between clusters. The problem is that Kubernetes names are scoped to a cluster. And so, if I immediately want to begin to do things across clusters, I need some different naming domain where I can’t get conflicts. And so that’s why we’re playing a little bit of a shell game with some of the names here. We’re using this global service rather than the full Kubernetes service name. And that’s also why we’re running core DNS in our clusters so that we can resolve these new names that we’re creating.

So let’s go ahead and do that. Let me verify that I actually saved this correctly so we don’t wind up with reviews on there like last time. Details, details everywhere. Ratings, ratings, reviews. So the same config that I just walked through. We’re just going to keep it and apply it. We didn’t create our namespace. Excellent. So now I can take this. I can prove to you that our ingress works real quick. So I’m going to curl the detail service and I’m going to set that host header. We have the same problem that we had in the other one so let’s just kill the pod real quick. Sorry, and again this is just a little bit of setup. It’s not typical that you have to go delete your ingress pod to get config to apply.

So we got a 404 which we would expect because we didn’t set our host header but as soon as we set our host header, suddenly we can do routing normally and we can get our details. Great. So we have set up this. Now we want to add our keyed link.

And the way that we’re going to do that is go update our representation. So I want to move details. I really don’t want to model details being in A. I want to shift it over to cluster B. So I’m going to go back to my model with my services. I just deleted it out and now we are only in cluster B. And we can see that we actually generate now a different set of config for cluster A. So I said, “Hey, details moved. The config to talk to details is now a little different.” It actually points to this IP address for the remote cluster. So we say, “Hey, if you want to resolve that details name go over to the royal cluster.”

So we can just go ahead and apply that. And then the last thing we have to do is you remember that virtual service that I showed you here? This is currently in our cluster and it says, “Hey, when you see details send it to the local service.” Clearly, I don’t want that anymore, because I just said, “Hey, no. I want to move it over to the remote.” So let’s go ahead and delete this virtual service. And this is just some Istio config and what we’ll see here is if we come and look at our cluster A we see that there’s traffic going in. If we look at cluster B we see that the only traffic was my couple curls. What we should see is when I remove this rule our traffic flop over because right now that rule says, “Keep the traffic local.”

And then let’s lead it in the right namespace. And so we just removed that config from cluster A. So now what we should see is traffic start is A still 100% success. This should still load. We’re still loading and we’re seeing reviews. And we see that traffic is actually starting to pick up. So we’re on our five minute delayed window for our metrics. So we can see the traffic picked up but our ops per second is still low. And we can dig into a little bit more detail here and we can see that in fact, traffic is picking up. And we can go back to our side that’s actually serving our UI and see that did it with actually no errors anywhere. So we didn’t drop any connections to anybody. We didn’t lose one of the user requests during that flop over. Everything just kind of flowed through the system.

So we’re running short on time now because, you know, it turns out the demo was a little bit more efficient when it works. I want to save some time for questions. So I apologize we didn’t get to dig in quite as much as I’d wanted to but hopefully we got to see a little bit of the telemetry out of the box that’s pretty useful. And we can see how we can apply pretty cool traffic shifts and make interesting things happen with just changing configuration. I didn’t have to touch any of those applications, we didn’t have to touch any of the code, it was all deployed and running the entire time and we were able to change it really without the applications noticing. And that’s a key feature for that philosophy. I want my ops team to be able to manage the cluster and my dev team not to have to care.

Questions and Answers

So questions? We got four minutes.

Man: So in your example, you were referring that saber A called Mixer to apply policies, and you said that there was no penalty you paid per request but then when invoke service B, there is again Envoy. So down the stream service there always calling Mixer to check enforcement?

Butcher: So the server side, Envoy will always call. You want policy to be enforced on the server side because you can’t trust the client applied policy. So we always hit on the server side. Logically, Mixer is called on every single request to a server but we cache. Does that answer the question?

Man 1: Yes.

Butcher: Perfect.

Man 2: In the instance you showed, is the same instance of Istio actually managing across two distinct Kubernetes clusters?

Butcher: That’s a great question. So and this gets into some of that Istio multi-cluster work. The answer is today what I demoed you does not actually. So these are two separate control playing instances with two separate config domains. In my opinion, that’s actually how you want to run Istio across clusters. So it really gets into what you mean by what’s a service mesh. The real answer is identity. These workloads can communicate with each other because they share a common identity domain. Because they have that common identity domain we can establish connections. It doesn’t really matter who’s configuring that. The fundamental piece is that there’s communication. And so we can have separate administrative domains. It’s still one mesh because we still have one set of identities.

Woman: So every time I make a call that Mixer is invoked. What keeps it from being the single point of failure?

Butcher: Yes, that’s a great question. So there are a couple of different things. So A, Envoys are doing caching. So you can think of Mixer in some senses as a centralized cache and then the Envoy as this kind of leaf cache notes. So the second piece is that Mixer itself can be horizontally scaled. So there’s not any one particular instance of it. There are many instances. I guess the short answer is Mixer can be a single point of failure if you configure things incorrectly, but in terms of actual scaling, it’s horizontally scaled so one instance going down is not going to kill anything. You can push bad policy that might cause a global outage and be a single point of failure. But outside of that, it tends to not be. So this architecture actually came out of Google. Google had a system of identical architecture for about the last four and a half years now. And actually what we’ve observed is that not only is it not a single point of failure, but it actually boost the client perceived availability of the backends that it’s in front of, because it acts as a distributed cache. And it turns out that it’s really, really easy to run a distributed cache at high availability and it’s really, really hard to run systems that make policy decisions at high availability.

So there are definitely failure modes in which it can be a single point of failure but in practice, we’ve actually observed it doing just the opposite and increasing the client perceived availability of a back end. And off is a perfect example. So today your off call -every single service should be calling your off service for every single request. And instead, you can reduce that by a factor of about 10X by having Mixer cache that and have that result reused. And that just works natively with how Mixer functions. It automatically does these caching of decisions.

See more presentations with transcripts

MMS • RSS

Article originally posted on Data Science Central. Visit Data Science Central

I thought I would follow on my first blog posting with a follow-up on a claim in the post that going returns followed a truncated Cauchy distribution in three ways. The first way was to describe a proof and empirical evidence to support it in a population study. The second was to discuss the consequences by performing simulations so that financial modelers using things such as the Fama-French, CAPM or APT would understand the full consequences of that decision. The third was to discuss financial model building and the verification and validation of financial software for equity securities.

At the end of the blog posting is a link to an article that contains both a proof and a population study to test the distribution returns in the proof.

The proof is relatively simple. Returns are the product of the ratio of prices times the ratio of quantities. If the quantities are held constant, it is just the ratio of prices. Auction theory provides strategies for actors to choose a bid. Stocks are sold in a double auction. If there is nothing that keeps the market from being in equilibrium, prices should be driven to the equilibrium over time. Because it is sold in a double auction, the rational behavior is to bid your appraised value, its expected value.

If there are enough potential bidders and sellers, the distribution of prices should converge to normality due to the central limit theorem as the number of actors becomes very large. If there are no dividends or liquidity costs, or if we can ignore them or account for them separately, the prices will be distributed normally around the current and future equilibrium prices.

Treating the equilibrium as (0,0) in the error space and integrating around that point results in a Cauchy distribution for returns. To test this assertion a population study of all end-of-day trades in the CRSP universe from 1925-2013 was performed. Annual returns were tested using the Bayesian posterior probability and Bayes factors for each model.

For those not used to Bayesian testing, the simplest way to think about it would be to test every single observation under the truncated normal or log-normal model versus the truncated Cauchy model. Is a specific observation more likely to come from one distribution or another? A probability is assigned to every trade. The normal model was excluded with essentially a probability of zero of being valid.

Bayesian probabilities are different from Frequentist p-values. Frequentist p-values are the probability of seeing the data you saw given a null hypothesis is true. A Bayesian probability does not assume a hypothesis is true; rather it assumes the data is valid and assigns a probability to each hypothesis that it is true. It would provide a probability that data is normally distributed after truncation, log-normally distributed, and Cauchy distributed after truncation. The probability that the data is normally distributed with truncation is less than one with eight million six hundred thousand zeros in front of it when compared to the probability it follows a truncated Cauchy distribution.

That does not mean the data follows a truncated Cauchy distribution, although visual inspection will show it is close; it does imply that a normal distribution is an unbelievably poor approximation. The log-normal was excluded as its posterior didn’t integrate to one because it appears that the likelihood function would only maximize if the variance were infinite. Essentially, the uniform distribution over the log-reals would be as good an estimator as the log-normal.

To understand the consequences, I created a simulation of one thousand samples with each sample having ten thousand observations. Both were drawn from a Cauchy distribution, and a simple regression was performed to describe the relationship of one variable to another.

The regression was performed twice. The method of ordinary least squares (OLS) was used as is common for models such as the CAPM or Fama-French. A Bayesian regression with a Cauchy likelihood was also created, and the maximum a postiori (MAP) estimator was found given a flat prior.

Theoretically, any model that minimizes a squared loss function should not converge to the correct solution, whether statistical or through artificial intelligence. Instead, it should slowly map out the population density, though with each estimator claiming to be the true center. The sampling distribution of the mean is identical to the sampling distribution of the population.

Adding data doesn’t add information. A sample size of one or ten million will result in the same level of statistical power.

SIMULATIONS

To see this, I mapped out one thousand point estimators using OLS and constructed a graph of it estimating its shape using kernel density estimation.

The sampling distribution of the Bayesian MAP estimator was also mapped and is seen here.

For this sample, the MAP estimator was 3,236,560.7 times more precise than the least squares estimator. The sample statistics for this case were

## ols_beta bayes_beta

## Minimum. : -1.3213 0.9996

## 1st Quarter.: 0.9969 0.9999

## Median : 1.0000 1.0000

## Mean : 1.0030 1.0000

## 3rd Quarter.: 1.0025 1.0001

## Maximum : 5.8004 1.0004

The range of the OLS estimates was 7.12 units wide while the MAP estimates had a range of 0.0008118. MCMC was not used for the Bayesian estimate because of the low dimensionality. Instead, a moving window was created that was designed to find the maximum value at a given scaling and to center the window on that point. The scale was then cut in half, and a fine screen was placed over the region. The window was then centered on the new maximum point, and the scaling was again halved for a total of twenty-one rescalings. While the estimator was biased, the bias is guaranteed to be less than 0.00000001 and so is meaningless for my purposes.

With a true value for the population parameter of one, the median of both estimators was correct, but the massive level of noise meant that the OLS estimator was often far away from the population parameter on a relative basis.

I constructed a joint sampling distribution to look at the quality of the sample. It appeared from the existence of islands of dense estimators that the sample chosen might not be a good sample, though we do not know if the real world is made up of good samples.

I zoomed in because I was concerned about what appeared to be little islands of probability.

To test the impact of a possibly unusual sample, I drew a new sample of the same size with a different seed.

The second sample was better behaved, though not so well behaved that a rational programmer would consider using least squares. The relative efficiency of the MAP over OLS was 366,981.6. In theory, the asymptotic relative efficiency of the MAP over OLS is infinite. The joint density was

The summary statistics are

## ols_beta bayes_beta

## Mininum : 0.1906 0.9994

## 1st Quarter: 0.9972 1.0000

## Median : 1.0000 1.0000

## Mean : 1.0004 1.0000

## 3rd Quarter: 1.0029 1.0001

## Maximum : 2.2482 1.0005

A somewhat related question is the behavior of an estimator given only one sample.

The first sample of second set resulted in OLS estimates using R’s LM function,

## ## Call:## lm(formula = y[, 1] ~ 0 + x[, 1])

## ## Residuals:##

Minimum 1Q Median 3Q Max

-1985 -1 0 1 83433

## ## Coefficients:## Estimate Std. Error t value Pr(>|t|) ##

x[, 1] 1.000882 0.008043 124.4 <2e-16 *** ## —

## Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ‘ 1## ##