Article originally posted on InfoQ.

Transcript

Nauck: I’ll talk about machine learning. First, before I start, I want to say something about what that is, or what I understand by it. So, here is one interpretation. It is about using data, obviously. So, it has relationships to analytics and data science, and it is, obviously, part of AI in some way. This is my little taxonomy of how I see things linking together. You have computer science, and that has subfields like AI and software engineering. Machine learning is typically considered to be a subfield of AI, but a lot of principles of software engineering apply in this area.

This is what I want to talk about today. It’s heavily used in data science. So, the difference between AI and data science is somewhat fluid if you like, but data science tries to understand what’s in data and tries to understand questions about data. But then it tries to use this to make decisions, and then we are back at AI, artificial intelligence, where it’s mostly about automating decision making.

We have a couple of definitions. AI means making machines intelligent, and that means they can somehow function appropriately in an environment, with foresight. Machine learning is a field that looks for algorithms that can automatically improve their performance, not through explicit programming, but by observing relevant data. That’s what machine learning typically means. And yes, I’ve thrown in data science as well for good measure: the scientific process of turning data into insight for making better decisions. So, that’s the area.

The Ethical Dimension of AI, ML and Data Science

If you have opened any newspaper, you must have seen the discussion around the ethical dimensions of artificial intelligence, machine learning or data science. Testing touches on that as well, because there are quite a few problems in that space, and I’m just listing two here. You use data, obviously, to do machine learning. Where does this data come from, and are you allowed to use it? Do you violate any privacy laws? Are you building models that you use to make decisions about people? If you do that, then the General Data Protection Regulation (GDPR) in the EU says that if you make a decision of any significant impact about an individual based on an algorithm or a machine, you have to be able to explain it to them. That means, in machine learning, a lot of models are already out of the door, because with certain types of models you can’t explain why a particular decision comes out.

Then the other big area is bias, which is an unfair under- or over-representation of subgroups in your data; racial bias and gender bias are the obvious ones. You may have heard that facial recognition systems are racially biased, and they are also gender-biased. If you look at tests that try to identify the gender of a person with a facial image recognition system, you find that white males are recognized most accurately and dark-skinned females least accurately. So, there’s a strong gender and racial bias in these systems. Where does this come from? How does this happen?

Another area of concern, maybe to you in software development too, is that people tend to use modules that they get from somewhere. You get a library, you get a piece of code, and you stick them together to build what you want to build. If you have the source code, at least you can look into it and read it. If you have standard code, you can apply your usual testing regime.

But what about stuff that has been generated by machine learning? If you get yourself a facial image recognition system from somewhere and stick it into your home security solution, and it then fails to recognize the owner and let them into the house, how can you be responsible for this component that you have included in your system? Do you know how it has been built, tested, validated? Is it free of bias? All of these things. So, especially in this domain, we will see components from different sources, with different provenance and quality, being glued together. How do you make sure that what you assemble is of good quality?

A Report from the Coalface: Naïve Bayes, from R to Spark ML

Here is an example of a team that used a machine learning algorithm to tackle a particular problem. They wanted to predict the propensity of a customer to call a helpline. They had data about customers who called helplines and about customers who didn’t, and they tried to use all the data they had about these customers to predict whether a customer like you would call a helpline in a particular timeframe. They used a particular machine learning model here, or a statistical model, called a Naive Bayes classifier. For the purpose of this example, it’s not important how that works, but they used a particular library. In data science and machine learning, you’ll typically find a lot of people using R or Python.

These guys used R, and this particular library can work with numerical data and categorical data, so numbers or strings, if you like. They built this model, and then they found out about the target system they had to deploy their model into. Unfortunately, they couldn’t use R; they had to use Spark. So they used another library, Spark ML, to try to rebuild it, and this particular library can only use categorical data, that is, strings represented as numbers. So, what they did is say, “Okay, we have strings in our data, and we have numbers. We leave the numbers alone, we just take the strings and convert them into numbers. Then we have only numbers, so we can use this library.” They used the library, and luckily they had negative numbers in their data, so the algorithm refused to work with it. There were luckily some tests in there; otherwise, they would have got some weird result and deployed it.
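A minimal sketch of the kind of input check that saved this team, written in Python rather than R or Spark. Spark ML’s documentation states that feature values for its multinomial Naive Bayes must be non-negative, because they are treated like counts. The helper `check_nb_features` below is hypothetical: it rejects negative, missing or non-finite values before any fitting happens. It cannot catch the deeper mistake of mapping strings to arbitrary integers, which passes any purely numeric check.

```python
import math
from typing import Optional, Sequence


def check_nb_features(rows: Sequence[Sequence[Optional[float]]]) -> None:
    """Raise ValueError if any feature value is missing, non-finite or negative.

    Hypothetical helper: a multinomial Naive Bayes treats features like counts,
    so these values are mechanically invalid and should stop the pipeline.
    """
    for i, row in enumerate(rows):
        for j, value in enumerate(row):
            if value is None or not math.isfinite(value):
                raise ValueError(f"row {i}, column {j}: missing or non-finite value {value!r}")
            if value < 0:
                raise ValueError(f"row {i}, column {j}: negative value {value!r}")


check_nb_features([[0, 1, 3.5], [2, 0, 0]])      # passes silently
try:
    check_nb_features([[1, -0.5]])                # e.g. an unscaled numeric column
except ValueError as err:
    print(err)                                    # row 0, column 1: negative value -0.5
```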

If you look into the documentation of the library in R, it says it works with a numeric matrix or data frame of categorical and/or numeric variables. So, this is what it can ingest and work with. If you look at the Spark ML library, this is what the documentation says. It is a bit less readable: the input has to be data, which is like an array or a list of labeled points, and a labeled point apparently is something that uses real numbers. The first number seems to be somewhat separate from the rest, and the rest seems to be in a vector. So, presumably, you are supposed to put zeros and ones in there. You can’t really tell from this sort of documentation what sort of data this algorithm can work with. If there is no testing inside the procedure to check what you feed into it, you might get all sorts of weird effects if you don’t understand what these methods do.

Some of them helpfully do this. Here’s an example, again from an R environment. Here we are trying to build a number of classifiers, and one of them complains and says, “It looks like one of your variables is constant.” When you do data analysis, constant data is useless because it doesn’t carry any information. So, it’s very helpful that the algorithm tells me that. It means there’s some testing happening inside that looks at the data going in and checks whether it makes sense for the algorithm to run. But you can’t rely on this. It doesn’t necessarily happen in every piece of code that you find in a machine learning library.
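Where a library does not run this check for you, it is easy to write yourself. A minimal Python sketch, assuming the data is held as a dict mapping column names to lists of values (a hypothetical layout chosen to keep the example dependency-free):

```python
from typing import Dict, List, Sequence


def constant_columns(columns: Dict[str, Sequence[object]]) -> List[str]:
    """Return the names of columns holding at most one distinct value.

    A constant column carries no information for a classifier, so it is worth
    flagging before any model is built. An empty column is also reported.
    Values must be hashable, since they are collected into a set.
    """
    return [name for name, values in columns.items() if len(set(values)) <= 1]


data = {
    "age": [34, 51, 29, 42],
    "country": ["UK", "UK", "UK", "UK"],   # constant: useless for modelling
    "called_helpline": [0, 1, 0, 1],
}
print(constant_columns(data))  # ['country']
```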

Machine Learning Can Work Really Well, But It Doesn’t Always

Machine learning usually works quite well, and you have seen in the media that there’s a lot of success in machine learning. Most of it these days is down to what’s called deep learning or deep networks, a technique that was already around in the 1980s and has really come to fruition now because we have lots of compute power and lots of big data with labels. Where does this data come from? You have helpfully provided it by uploading photos into the cloud and sticking labels on them. So, Google now knows what cats and dogs are because you have either clicked on images when you searched for images of a cat or a dog, or you have uploaded photos somewhere and labeled them.

This system here has learned to label images using that sort of data: photos and the labels associated with them. Then it tries to label new pictures, and it does quite a good job in the first columns. If you look at the top left one, it says “a person riding a motorcycle on a dirt road”; that’s quite good for labeling a picture. But, obviously, it’s wrong. It’s not a dirt road. It’s probably an off-road motorcycle race, which already gives you an idea that these things don’t really understand what they’re seeing. If we go further across, in the second column we see “two dogs play in the grass.” So, somehow it missed the third dog. A human wouldn’t make this mistake. If we go further to the right and look at the one in the second row, “a little girl in the pink hat is blowing bubbles,” part of the lady’s hat has been interpreted as a bubble. And if you look in the same row, far right, that’s the best one: “a refrigerator filled with lots of food and drinks.”

How does that happen? How does a system make such an obvious mistake? It’s because these systems don’t understand anything. They don’t understand what they’re looking at; for them, it’s just statistical patterns. Without testing and proper analysis, you have no idea what these things are actually finding. There are classic examples where these systems were trained to differentiate between wolves and huskies, and what they learned is that all the huskies are in pictures with snow. So, you have built a snow detector, not a classifier that tells wolves from huskies. But if all your data is of that sort, you have no way of finding that out. You have to become quite smart about how you test.

People say that machine learning is more alchemy than science. That is because of the hype around using things like deep networks. People are in a bit of a frenzy: What can I do with it? Where can I apply it? What cool stuff can I do with these things? What you want to have is reproducibility. If you train a model on some data and you get a result, then, like anything in science, you want it to be reproducible; that means somebody else can walk the same path and come to the same result. It doesn’t mean it’s correct, and you can’t really prove that it’s correct, but you can show that somebody else comes to the same result. In these systems, you sometimes have a lot of parameters to play with, people have an intuitive understanding of how to set them, they get a result, and then they stop. The question is, how good is this really? Can you show that what you’ve done is correct and useful?
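One small, concrete ingredient of reproducibility is making every random choice repeatable. A minimal Python sketch, assuming a simple shuffled train/test split: the split draws from its own seeded generator, so anyone re-running it with the same data and seed gets exactly the same partition. The seed value is arbitrary; in practice you would also record the data version and every parameter setting alongside it.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def train_test_split(items: Sequence[T], test_fraction: float,
                     seed: int) -> Tuple[List[T], List[T]]:
    """Shuffle with a dedicated, seeded RNG so the split can be reproduced exactly."""
    rng = random.Random(seed)          # local generator: unaffected by other code's randomness
    shuffled = list(items)
    rng.shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]   # (train, test)


# Same data and same seed give the same split, run after run.
a = train_test_split(range(100), 0.2, seed=42)
b = train_test_split(range(100), 0.2, seed=42)
print(a == b)  # True
```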

Challenges in Analytics

There are quite a few challenges in machine learning, and we are looking at a few of them here. Data provenance means: where does this data come from? That’s always the first question you should ask yourself. Where does the data come from? What has been done to the data? Is it really first-hand, or has somebody messed with it? Has it been transformed already? Interpreted? Has somebody put their stamp on it and changed it? Also, can you trust it? How has it been generated? Is it measurements from sensors? Then maybe you have some idea how good these sensors are. What is the error of the sensor? Has it been created by humans? Do they have an incentive to lie about the data? These are the kinds of questions you should ask yourself and test for.

Data quality is then more about whether the data is error-free. When the data was recorded, did errors creep in somehow? Is there missing data in there that shouldn’t be missing? Data bias we have talked about already: is some group over- or under-represented in the data? That is actually quite difficult to find, and even more difficult to get rid of. Keeping bias out of modeling is like keeping ants off your picnic: it will always creep back in. It can come in via the data or via the modeling, because every one of us has intuitive biases and ideas about what the world is like, and these go into the models and bias them. The way you select the data that you deem relevant for training will bias the model, and when you test your model, you will have biases about where it should perform and where the performance doesn’t matter so much. That puts bias into the models.
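The question “is there missing data in there that shouldn’t be missing?” can be written down as a test. A minimal Python sketch, assuming columns are stored as lists in a dict and missing values are represented as `None` (NaN handling is left out); the function and column names are hypothetical:

```python
from typing import Dict, Optional, Sequence


def missing_report(columns: Dict[str, Sequence[Optional[object]]],
                   required: Sequence[str]) -> Dict[str, int]:
    """Count missing values (None) per column; fail if a required column
    is absent or has any missing values."""
    absent = [name for name in required if name not in columns]
    if absent:
        raise ValueError(f"required columns not present: {absent}")
    counts = {name: sum(v is None for v in values) for name, values in columns.items()}
    incomplete = [name for name in required if counts[name] > 0]
    if incomplete:
        raise ValueError(f"required columns with missing values: {incomplete}")
    return counts


data = {"customer_id": [1, 2, 3], "tenure_months": [12, None, 40]}
print(missing_report(data, required=["customer_id"]))
# {'customer_id': 0, 'tenure_months': 1}
```

Columns that may legitimately have gaps are simply left out of `required`; they are still counted, so the report shows how much is missing.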

You can test for bias, and you can try to correct for bias, but it’s not trivial. Think about a loan application: do you want everybody to be evaluated based on their financial performance and financial indicators, or do you want loan rejections to be equal across gender groups, racial groups, age groups? That is a completely different question. These are different types of bias that you need to get your head around.
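To make the second option testable, you can compare outcome rates across groups. A minimal Python sketch, assuming decisions are available as (group, approved) pairs; it checks only one notion of fairness (equal approval rates across groups, sometimes called demographic parity), and choosing that criterion over others is exactly the judgment call described above. Group labels and counts are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def approval_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Approval rate per group from (group, approved) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    approved: Dict[str, int] = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparity_ratio(rates: Dict[str, float]) -> float:
    """Lowest group rate divided by highest; 1.0 means equal rates across groups."""
    highest = max(rates.values())
    return 1.0 if highest == 0 else min(rates.values()) / highest


decisions = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
rates = approval_rates(decisions)
print(rates)                    # {'A': 0.8, 'B': 0.5}
print(disparity_ratio(rates))   # roughly 0.625
```

A test would then assert that the ratio stays above some threshold you have agreed on for your domain.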

Model comprehension. We already talked about it. You may have to explain to somebody why a model gives you the answer that it gives you, and that’s not always possible. There are approaches for this; you can get libraries like LIME or SHAP that do this. But you have to think about what an explanation is. To people, an explanation is something meaningful in the context they’re talking about. When we give explanations about why we did something, we always make them up. An explanation is something you make up post hoc to communicate to somebody else why you did something. It’s very rare that you have a reasoning process in your head based on language, where you say, “Okay, what am I going to do? I’m going to do this, and this is why I’m going to do it.” Your decision making is usually much snappier, and you don’t reason it out for yourself. Maybe you do if you’re going to buy a car, but in the end, even then, decision making is typically an emotional process.

An explanation for a human is something that has a certain kind of quality. An explanation that you might get out of a statistical model might be something that is expressed in numbers, and weights, and combinations of variables, which somebody might not understand if they are not a statistician or mathematician. So that’s another tricky dimension. Then the ethical dimension. So, are you allowed to use the data? What kind of decisions are you making? How does it affect people? You may have to test for the effects of your model application as well and I’ll come back to this.

The Process of Building Models from Data

Here’s a picture of a process around data analytics, data science and machine learning; it’s pretty much all the same. You start with some business understanding, then you have to understand your data and prepare it, and then you get to the modeling. That’s the sexy bit. Everybody wants to work in this modeling box, but in reality, you spend 70% to 90% of your time in the data preparation stage. So, this is also where your test effort should be. Modeling is actually quite easy; the difficult bit is getting the data right for the modeling. Then there are the other stages: you obviously have to evaluate what you’ve built and then deploy it, where things can go wrong too.

There’s a perception problem. As I said, everybody wants to do modeling. If you’re thinking, “Oh, I’m working in machine learning,” this is the idea you have in your mind of what your work environment looks like, but actually, it looks like this. You spend most of your time trawling through data: trying to get hold of data, trying to get your head around it, figuring out what its quality is and what it means, and working out how to combine different types of data that sit in different operational systems. It is very messy, and all sorts of errors can creep in there.

When I talk about machine learning or data science to that community, I point to you guys and hold you up as shining beacons, because you’re software developers and you know all about testing. I say, “We have to learn from these guys. These guys test what they do; they do test-driven development.” So, how can we lift and shift this over to machine learning and data science? How do we know that our data is any good, that our model is any good, and that the decisions we take based on model outputs have the impact that we expect?

Follow Best Practice for AI, ML and Data Science: Test Everything!

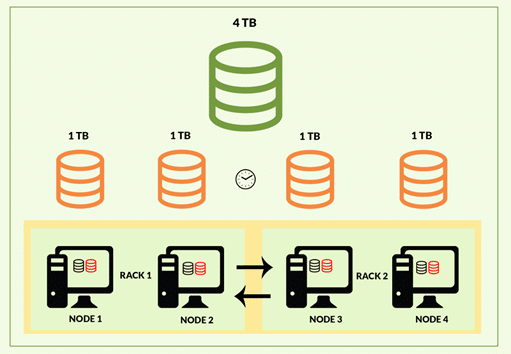

Here is a diagram that shows where you can test. It roughly shows the stages you go through when you put a model into deployment: you build a model from data, and you use it in some sort of application later. You start with the data repository. This is the first stage where you can test. Ideally, you prevent bad data from going into your repository; the earlier you start, the better. In databases, there are things like triggers; if you work with databases, you might know them. They are basically rules: you write down that if you put a row of data into this table, these are the conditions it has to meet before it can go in. Nobody is using this anymore, but it’s a possibility. You can write, say, that this column must always be positive, or that there must never be a missing value in this column. You can do this at the level of the data repository. If you know that somebody is doing this and says, “Yes, I’m testing that no bad stuff goes into my data repository,” then okay, that’s great: you have shifted the responsibility, and you can move on.
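A minimal sketch of rules like these at the repository level, using SQLite through Python’s standard `sqlite3` module. It uses declarative `CHECK` and `NOT NULL` column constraints rather than triggers; both express “conditions a row has to meet before it goes in”. The table and column names are hypothetical.

```python
import sqlite3

# In-memory database; the table and column names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE calls (
        customer_id INTEGER NOT NULL,
        duration_s  REAL    NOT NULL CHECK (duration_s > 0)
    )
""")
# CHECK on its own lets NULL through (a NULL result is not a violation),
# which is why the column also needs NOT NULL.

rejected = []
for row in [(1, 42.0), (2, -3.0), (3, None)]:
    try:
        conn.execute("INSERT INTO calls VALUES (?, ?)", row)
    except sqlite3.IntegrityError as err:
        rejected.append((row, str(err)))   # bad rows never reach the table

print(conn.execute("SELECT COUNT(*) FROM calls").fetchone()[0])  # 1
for row, reason in rejected:
    print("rejected", row, reason)
```

The exact wording of the constraint-failure messages depends on the SQLite version, so code should rely on the `IntegrityError` type rather than on the message text.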

The next stage is data wrangling. Data is never in the kind of shape that you can just put into a learning algorithm. If you learned your machine learning on Kaggle, where all the data is nicely prepared for you, there was a whole lot of pain beforehand that you’re not seeing. So, this is the preparation phase. You take the data and turn it into something else so you can use it in machine learning. You generate features out of it, and you can make a lot of mistakes in that space.

Often, this phase isn’t even documented. You magically arrive at a set of data and say, “Okay, this is what I use for machine learning.” Then you build your model, and if somebody wants to reproduce it, they ask, “How the hell did they arrive at these columns and this sort of data set?” They can’t replicate it. But obviously, from your point of view, you should understand that you’re writing software at this stage. Data wrangling means writing software. The simplest software you can write here is SQL queries: you transform the data in the repository into something else, and that is code. It should be versioned, and it should be tested.

Feature generation means you start combining columns. Instead of column A and column B, maybe A divided by B is a useful feature to have. Then you create something new. Again, even this A divided by B is software: you produce something new through code, by manipulating data, and that should be tested. Are you dividing by zero at some point? What does that do? Then you go into model building, and there’s a whole lot of testing around how you build a model, which I’ll come to in a minute. All of this should also be versioned, and you should keep track of it so you can replicate it. If you have to hand it over to somebody, they have to be able to pull the latest version from the repository and walk in your shoes.
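The A divided by B feature is a good example of feature code that deserves its own unit tests. A minimal Python sketch; returning `None` (missing) when B is zero is one possible convention chosen for illustration, and NaN or a separate indicator column would be alternatives. The point is that the choice is made explicitly and pinned down by a test.

```python
from typing import List, Optional, Sequence


def ratio_feature(a: Sequence[float], b: Sequence[float]) -> List[Optional[float]]:
    """Derived feature A / B.

    Division by zero is handled explicitly by returning None (missing)
    instead of raising ZeroDivisionError halfway through a pipeline.
    """
    if len(a) != len(b):
        raise ValueError("columns A and B must have the same length")
    return [x / y if y != 0 else None for x, y in zip(a, b)]


# Feature code gets unit tests like any other function.
assert ratio_feature([6, 1], [3, 4]) == [2.0, 0.25]
assert ratio_feature([5, 0], [0, 0]) == [None, None]
```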

Now we have a model that sits in a versioning system somewhere; when we deploy it, it should be pulled from there, so that we always deploy the latest version of the model. Machine learning people are not software developers, so they usually don’t understand these concepts. They don’t necessarily know what a software repository is and how you orchestrate these things. But you guys know this. Then you deploy it. You can test there, too: can the environment you deployed into handle the kind of traffic that comes in? Will it scale? These kinds of things can go wrong.

Then you go to application. The model is now sitting in some sort of operational process and producing decisions. Data with the same structure as the data you used to build it is going into the model, but time has moved on. The model is built from historic data and applied to future data; otherwise, it would be pointless. So, how do you know that the future data is the same as the data that you used to build your model? You need to check that the data is structurally still the same, and things can catch you out.

Let’s say you have something like product codes in your model. Companies change product codes once in a blue moon, so suddenly there might be something in there that the model has never seen. How will it react to it? Or there’s drift in your data: some numbers suddenly drift away from the interval they used to be in, becoming smaller or larger. Or there’s some sensor data, and somebody decided to update the sensor. Now it is calibrated differently, and the numbers have changed. Maybe the mean of the measurements is now different, or the standard deviation. Again, not something you would expect. So, you need to check these kinds of things.
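Both checks can be run on each new batch before it reaches the model. A minimal Python sketch: unseen category values are found by set difference, and a shift in the mean is measured in units of the training standard deviation. The threshold of 3, the product codes and the Fahrenheit-reporting sensor are all hypothetical illustrations.

```python
import math
import statistics
from typing import List, Sequence


def unseen_categories(train: Sequence[str], new: Sequence[str]) -> List[str]:
    """Category values (e.g. product codes) in new data that training never saw."""
    known = set(train)
    return sorted({v for v in new if v not in known})


def mean_shift(train: Sequence[float], new: Sequence[float]) -> float:
    """Shift of the new mean, in units of the training standard deviation."""
    sd = statistics.stdev(train)            # needs at least two training values
    diff = abs(statistics.mean(new) - statistics.mean(train))
    if sd == 0:
        return 0.0 if diff == 0 else math.inf
    return diff / sd


train_codes = ["P100", "P200", "P300"]
new_codes = ["P100", "P400", "P200", "P400"]
print(unseen_categories(train_codes, new_codes))  # ['P400']

train_temp = [20.1, 19.8, 20.4, 20.0, 19.7]   # Celsius
new_temp = [68.2, 67.9, 68.5]                  # hypothetical replacement sensor reporting Fahrenheit
if mean_shift(train_temp, new_temp) > 3:       # threshold is an arbitrary choice
    print("drift detected")
```

A change in spread can be checked the same way, by comparing the standard deviation of the new batch with the training one.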

Then you go to decision making. Again, you should test. If you use your model to make a decision, do you actually have the effect that you want to have? You can test this by keeping a control group, as you know from testing medicines. In medicine, when they test new drugs, they have a very strict regime for how to do this: double-blind tests. The doctor doesn’t know what sort of pill they are giving, and the patient doesn’t know what they are receiving. You can replicate this sort of regime in the decision-making process. You apply your new procedure to your treatment group, and with a control group you just do what you always did, or you do nothing. Then, in the end, you compare: is there a difference?
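To decide whether a difference between treatment and control is more than chance, one standard option (not something the talk prescribes) is a two-proportion z-test on the response rates. A minimal Python sketch using the normal approximation, which assumes reasonably large groups. The campaign counts are hypothetical.

```python
import math


def two_proportion_z(success_t: int, n_t: int, success_c: int, n_c: int) -> float:
    """z statistic for treatment-minus-control response rate, using the pooled
    rate under the null hypothesis that the decision procedure makes no difference."""
    pooled = (success_t + success_c) / (n_t + n_c)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_t + 1 / n_c))
    if se == 0:
        raise ValueError("all or none of the customers responded; z is undefined")
    return (success_t / n_t - success_c / n_c) / se


def two_sided_p(z: float) -> float:
    """Two-sided p-value under the standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2))."""
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical campaign: 120 of 1,000 treated customers responded vs 90 of 1,000 controls.
z = two_proportion_z(120, 1000, 90, 1000)
print(round(z, 2), two_sided_p(z))
```

Here z is about 2.19 and the two-sided p-value about 0.03, so a 12% vs 9% response rate on groups of this size is unlikely to be pure noise.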

Some companies are very good at this. Netflix does this on you all the time; they experiment on you. If you open your Netflix main screen, you’ll see some movies based on what you like, but the title images also differ: your friend might see a different set of images. They just try out what’s more interesting. These are the groups, and they test these treatments across the groups: which decision-making gives me a better response? And you can do this as well.

Testing Your Model: Cross-Validation

I’ll talk about two things: testing a model and testing your data. Testing a model, if you work in machine learning, is something that you know and have been trained to do: cross-validation. In a nutshell, when you build a model, you have a set of data, which is called your training data. You partition it into K equal parts, let’s say 10, and then you use one part to test, or validate, your model and the other nine parts to build it. You do this 10 times, so that each part is used once for testing. Ideally, you then repeat the whole procedure. Why are we doing this?

We want to understand how strongly the selection of the training data impacts the model built. So, what you do is, your training data is a kind of a sample of all the data that is potentially available and you somehow have created this set of training data, and you use it to build a model and then this model will be applied to all the other data that’s out there that you haven’t seen. You need to make sure that your training data is representative of that data that the model is going to see in the future.

So, if you don’t select the training data well, that will have an impact on how well the model performs. The only way to check this is by creating many models and seeing how different they are and how they perform, and this is what the cross-validation procedure is for. You partition the data into 10 subsets to check whether the selection of the data has an impact, and then you repeat this N times to check whether the partitioning itself has an effect, because everything should be random. In practice, people use K equals 10 or 5, and N equals one. But ideally, aim for K equals 10 and N greater than 1 to get a good idea of how well your model performs.
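The K-fold, N-repeat procedure just described can be sketched in a few lines of Python. This generator only produces row indices (a hypothetical helper, not any particular library’s API): each repetition reshuffles the rows, and within one repetition every row lands in exactly one test fold.

```python
import random
from typing import Iterator, List, Tuple


def repeated_kfold(n_rows: int, k: int = 10, n_repeats: int = 2,
                   seed: int = 0) -> Iterator[Tuple[int, int, List[int], List[int]]]:
    """Yield (repeat, fold, train_idx, test_idx) for N repetitions of K-fold CV.

    Reshuffling per repetition varies the partitioning itself; within a
    repetition the K test folds are disjoint and together cover every row.
    """
    rng = random.Random(seed)
    for r in range(n_repeats):
        idx = list(range(n_rows))
        rng.shuffle(idx)
        folds = [idx[i::k] for i in range(k)]     # K parts, sizes differ by at most one
        for f, test in enumerate(folds):
            test_set = set(test)
            train = [i for i in idx if i not in test_set]
            yield r, f, train, test


splits = list(repeated_kfold(n_rows=25, k=5, n_repeats=2, seed=1))
print(len(splits))  # 10
```

For each split you would fit on the training indices, score on the test indices, and keep both the training and the test score per fold, since the checks that follow compare the two.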

What are you looking for when you do this? You’re looking for the training performance to be better than the test performance, but only slightly. You look at the numbers that indicate the performance, and that’s what you want to see. And you want consistent figures across all folds. If something sticks out, where one fold is 90% correct and all the others are only 70% correct, that’s suspicious. If the training performance is much better than the test performance, you are overfitting: if you consistently get 90% performance in training but only 60% in test, there’s some overfitting happening, and you have to control your model parameters. If the test performance is much better than the training performance, then all the alarm bells should go off. That probably means that you have some data leakage: some information you shouldn’t have has gone into your test data. And if the figures vary a lot, then you probably don’t have enough data for the task that you’re trying to solve.
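These rules of thumb can be turned into automated checks on the per-fold scores, so a pipeline stops before a bad model moves on. A minimal Python sketch, assuming scores where higher is better (accuracy-like figures between 0 and 1). The thresholds `max_gap` and `max_spread` are illustrative defaults, not standard values.

```python
import statistics
from typing import List, Sequence


def diagnose_cv(train_scores: Sequence[float], test_scores: Sequence[float],
                max_gap: float = 0.05, max_spread: float = 0.05) -> List[str]:
    """Rules of thumb for per-fold cross-validation scores (higher is better)."""
    issues = []
    gap = statistics.mean(train_scores) - statistics.mean(test_scores)
    if gap > max_gap:
        issues.append(f"possible overfitting: train beats test by {gap:.2f}")
    elif gap < -max_gap:
        issues.append(f"test beats train by {-gap:.2f}: check for data leakage")
    if statistics.pstdev(test_scores) > max_spread:
        issues.append("test scores vary a lot across folds: possibly too little data")
    return issues


print(diagnose_cv([0.91, 0.90, 0.92], [0.61, 0.60, 0.62]))
# ['possible overfitting: train beats test by 0.30']
```

An empty list means none of the rules fired; a test in the pipeline can simply assert that.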

You also want to look at the model that you’re building. Sometimes that’s possible, sometimes it’s not. But if you can look at the model structure, that is, what the model actually looks like once it has been created, does it look consistent across all the folds? The typical example would be a decision tree, which you can look at: you can plot it out or write it down as rules. Or, if you have automatic feature selection in your algorithm, you can check whether the same features are selected in every fold, or whether one fold takes these variables and another fold takes those. These are the kinds of things you can look for. If this differs a lot, then probably you don’t have enough data, or the data selection strongly influences the model built, which would be the case if you don’t have enough data. So, you have to go back to basics and check: Is the data right? Is the model right? Is the model too complex for the task that I’m trying to solve? Things like this, you have to look into.
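One way to put a number on “are the same features selected again and again?” is to average the Jaccard similarity (intersection over union) of the feature sets chosen in each fold. The talk does not name a measure; Jaccard is one simple choice, used here as an illustrative sketch. The feature names are hypothetical.

```python
from itertools import combinations
from typing import List, Set


def mean_pairwise_jaccard(selected: List[Set[str]]) -> float:
    """Average Jaccard similarity of the feature sets chosen in each fold.

    1.0 means every fold picked exactly the same features; values near 0 mean
    the selection depends strongly on which rows went into the fold.
    """
    def jaccard(a: Set[str], b: Set[str]) -> float:
        union = a | b
        return len(a & b) / len(union) if union else 1.0

    pairs = list(combinations(selected, 2))
    if not pairs:
        return 1.0
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


folds = [{"age", "tenure", "region"}, {"age", "tenure", "region"}, {"age", "tenure", "plan"}]
print(round(mean_pairwise_jaccard(folds), 3))  # 0.667
```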

What’s the most misused term in machine learning and data science?

Participant 1: Big data.

Nauck: Yes. Good one. Any other takers? That was big data. No? This one: accuracy. Accuracy is a bad word because it hides so much; it hides so many sins in machine learning and data science. If your target class is only 1% of your data, your model can be 99% correct, 99% accurate, just by saying that nobody is in the target class. Yes, you have a 99% accurate model, but it’s 100% pointless. So, avoid using accuracy. It doesn’t give you enough information.

If you’re in machine learning, print this out and nail it over your bed. These are the terms you need to work with. You need to understand that this is for binary classifiers only. What types of errors are you making? What are the false positives? The false negatives? You need to understand the impact of these errors in your decision domain, you want to understand things like sensitivity and specificity, and you want to understand what the base rate in your data is. There’s a very good Wikipedia page: if you Google “confusion matrix wiki,” you’ll find exactly that. So, that’s very handy to keep available when you do machine learning.
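The 99%-accurate-but-pointless model is easy to reproduce with these terms. A minimal Python sketch that computes the binary confusion-matrix counts and the rates mentioned above, then applies them to a hypothetical data set with a 1% target class and a “model” that always predicts the negative class:

```python
from typing import Dict, Sequence


def confusion_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Binary confusion-matrix counts and derived rates (1 = positive class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    n = tp + tn + fp + fn
    return {
        "tp": tp, "tn": tn, "fp": fp, "fn": fn,
        "accuracy": (tp + tn) / n,
        "sensitivity": tp / (tp + fn) if tp + fn else float("nan"),  # true positive rate
        "specificity": tn / (tn + fp) if tn + fp else float("nan"),  # true negative rate
        "base_rate": (tp + fn) / n,                                    # share of actual positives
    }


# 1% positives and a "model" that always says no.
y_true = [1] * 10 + [0] * 990
y_pred = [0] * 1000
m = confusion_metrics(y_true, y_pred)
print(m["accuracy"], m["sensitivity"], m["specificity"])  # 0.99 0.0 1.0
```

Accuracy alone looks excellent; a sensitivity of zero shows the model never finds a single member of the target class.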

Test Your Data!

Now, testing your data. As I said, you spend 70% to 90% of your time massaging your data to get it into shape for a model. So, your test effort should be spent in that domain as well, and it’s also the most difficult part to test. You could actually say that everything you do in machine learning is either using data or generating data, so everything is about testing data. Even the cross-validation I talked about generates data: the statistical performance of your models. So, you can test this. If you want to make sure that your training performance is always better than the test performance, but that the difference isn’t too big, that is a rule you can test for.

So you can write a lot of tests on the output of a cross-validation to check that your model is actually any good before you continue on your pipeline. And this is testing the data that comes out of the cross-validation. But the data that you work with, you should test as well. So, what you do before you do any kind of modeling in machine learning, you go through something that we call exploratory data analysis, and it’s the part where you try to get your head around the data that you’re working with, and you do a lot of visualization at this stage, you plot stuff, you compute statistical features, means, variances, tests, all sorts of stuff. Just to try and understand what is this data telling me? Where does it come from? Is it any good? What’s the quality, bias? All of these kinds of things. So, you learn something about your data, and you can write this down as tests.

We have a few examples. I use R a lot, so these examples are from R. There’s a library called assertr. What you can do is you can write assertions in your code, and, I don’t know if you guys use assertions in your own code, typically something that is used at compile time or maybe even at runtime. This one here is used at runtime, and you use it to write tests about the data. The data I’m playing with here is data about flights and flight delays, flights out of New York. There’s an example data set which is available in R, and I’m looking for a column here that is called delayed. What I have here is a pipeline where I pipe a data table called my data through a number of operators. Actually, what I want to compute is I want to see how many delays I have in this data. What’s the ratio of the delays in my data?

I have these two verify commands in here. The first one says, “Verify delayed is equal to one or delayed is equal to zero,” which is a useful thing to have if you want to build a binary classifier where the positive class is indicated by a one and the negative class by a zero. Ideally, you don’t want any other numbers to turn up there, and you don’t want any missing values.

The other thing I’m verifying here is that at least 50% of the rows in my data are delayed. Because one and zero are my indicators for delayed, I can just sum up the column and divide by the number of rows in my data set to get the ratio. If all of that goes through, then this pipeline produces the output I want: the number of delayed flights in my data and the ratio, which it tells me is 86%. It’s probably skewed and I’m not too happy with it, but for the sake of it, we’ll continue.

If it fails, you see something like this: there’s an error, and the execution has stopped. This is what you want. Your code should throw an exception if the data that you feed into it is not what it expects. You especially want to do this when you apply your model later in an operational system, because it’s always bad to make a decision based on wrong data. Ideally, you want a model that says, “Oh, I’ve never seen that. I don’t know what to do,” and tells you that, instead of, “Don’t know, but I’ll give you a one as an output because this is the closest thing I can find.” This is your way of enforcing “I don’t know what to do with this,” and that’s a very useful situation to be in when you use models. It tells you that the data is not what you expect, but not exactly where it goes wrong. Still, this kind of verification is very useful as a generic check on the data.
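For readers working in Python, here is a minimal sketch of the same two checks and the same stop-on-failure behaviour. It does not use assertr’s API. The helpers `verify` and `delay_summary` are hypothetical, and the rows are assumed to be dicts with a `delayed` key.

```python
from typing import Dict, Optional, Sequence


def verify(condition: bool, message: str) -> None:
    """Stop the pipeline with an exception when a data check fails.

    An explicit raise is used instead of `assert`, which Python skips under -O.
    """
    if not condition:
        raise AssertionError(f"verification failed: {message}")


def delay_summary(rows: Sequence[Dict[str, Optional[int]]]) -> Dict[str, float]:
    verify(len(rows) > 0, "no rows")
    delayed = [row.get("delayed") for row in rows]
    # Check 1: the label is strictly 0 or 1 (no other codes, no missing values).
    verify(all(d in (0, 1) for d in delayed), "delayed must be 0 or 1")
    n_delayed = sum(delayed)
    ratio = n_delayed / len(rows)
    # Check 2: at least 50% of rows are delayed, the threshold used in the talk.
    verify(ratio >= 0.5, "at least 50% of rows should be delayed")
    return {"n_delayed": n_delayed, "ratio": ratio}


rows = [{"delayed": 1}] * 6 + [{"delayed": 0}]
print(delay_summary(rows))   # six delayed rows out of seven pass both checks
```

A row such as `{"delayed": 2}` or one without the key stops the run with an `AssertionError` instead of producing a number.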

You can also try to find the culprit, which is useful in the first stages, when you are trying to get your head around the data. Here I’m using an assertion that says a particular column should be positive, or actually, since the keyword “everything” here means all the columns, all of them should be positive. They should be between zero and infinity, and this is what this predicate checks. If that goes through, I get my result. You can see it doesn’t go through, and I get a report of where this is not true: it’s the dew point variable, which has negative values. The data set has information about weather, flights, and so on, and dew point can actually be negative. So that’s fine. I learned something about the data, and now I can change my assertions to say the dew point column is okay if it’s negative.
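Finding the culprit means reporting which columns and rows break the bound, not just that something failed. The sketch below applies a “between zero and infinity” check to every column, with an exemption list for columns like dew point. This is plain Python, not assertr. The helper name and the `exempt` parameter are hypothetical, and the weather values in the usage are invented.

```python
import math


def find_out_of_bounds(rows, lower=0.0, upper=math.inf, exempt=()):
    """Return {column: [row indices]} for values outside [lower, upper], skipping exempt columns."""
    culprits = {}
    for i, row in enumerate(rows):
        for column, value in row.items():
            if column in exempt:
                continue
            # NaN fails every comparison, so it is reported as out of bounds too.
            if not (lower <= value <= upper):
                culprits.setdefault(column, []).append(i)
    return culprits


weather = [{"temp": 39.0, "dewp": 26.1}, {"temp": 30.0, "dewp": -9.9}]
print(find_out_of_bounds(weather))                   # prints {'dewp': [1]}
print(find_out_of_bounds(weather, exempt=("dewp",)))  # prints {}
```

Keeping the exemption explicit records the lesson learned about the data (negative dew points are legitimate) next to the check itself.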

Sometimes you need to test something that you have to compute first, and there are operators for this too. This one here, insist, says that wind speed and wind gust should be within three standard deviations. To do this, you first take your data and calculate the mean and the standard deviation of the column. Then you can test whether each individual row is within three standard deviations or not. It shows you all the ones that are not, and those are potential outliers. Again, this is useful in the first phase, when you are getting your head around the data.
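The two-step logic of the insist check can be sketched in Python as follows: first compute the column statistics, then test each row against them. `outside_n_sds` is a hypothetical helper, and the wind-speed readings are invented. One caveat is easy to miss. A single extreme value also inflates the standard deviation. With ten or fewer values, no point can lie more than three sample standard deviations from the mean, so the check only starts to bite on larger columns.

```python
import statistics


def outside_n_sds(values, n=3.0):
    """Return indices of values more than n sample standard deviations from the column mean."""
    # Step 1: compute the statistics from the data itself.
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample standard deviation; needs at least two values
    # Step 2: test each row against them.
    return [i for i, v in enumerate(values) if abs(v - mean) > n * sd]


wind_speed = [10.0] * 20 + [100.0]  # invented readings with one extreme value
print(outside_n_sds(wind_speed))     # prints [20]
```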

There are a lot of packages, and you can find several of those. The one that I used here is assertr. If you’re working with R, you probably know the name Hadley Wickham; his package is more about runtime assertions for code. But there are many you can play with. If you’re more of a Python person, there is a package called TDDA, test-driven data analysis, which I would recommend having a look at. It’s very cool stuff that can even derive constraints or assertions on data automatically. It can look at the data and say, “Oh, I think this column contains only positive numbers,” or something like that. That’s the tricky bit. Generally, there’s no support to help you write these test cases. You have to do this yourself, and that’s one of the problems in this domain. There is not a lot of tooling that helps people do this, and that’s why it typically doesn’t get done much.
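To make the idea of deriving constraints from data concrete, here is a toy Python sketch. It infers per-column minimum, maximum, and whether missing values occur from reference data, then checks new data against those constraints. This is not TDDA’s API or algorithm. The function names are hypothetical, and the column names and values are invented. Constraints discovered this way are deliberately strict and still need human review, just like the dew point case; for example, a negative departure delay may simply mean an early departure.

```python
def discover_constraints(rows):
    """Infer per-column constraints (min, max, whether missing values occur) from reference rows."""
    constraints = {}
    for column in sorted({c for row in rows for c in row}):
        values = [row.get(column) for row in rows]
        present = [v for v in values if v is not None]
        if not present:
            continue  # nothing observed, so no bounds to infer
        constraints[column] = {
            "min": min(present),
            "max": max(present),
            "allow_missing": len(present) < len(values),
        }
    return constraints


def verify_constraints(rows, constraints):
    """Return human-readable violations of previously discovered constraints."""
    violations = []
    for i, row in enumerate(rows):
        for column, c in constraints.items():
            value = row.get(column)
            if value is None:
                if not c["allow_missing"]:
                    violations.append(f"row {i}: {column} is missing")
            elif not (c["min"] <= value <= c["max"]):
                violations.append(f"row {i}: {column}={value} outside [{c['min']}, {c['max']}]")
    return violations


reference = [{"dep_delay": 0, "distance": 200}, {"dep_delay": 15, "distance": 1000}]
new_batch = [{"dep_delay": 5, "distance": 500}, {"dep_delay": -3}]
print(verify_constraints(new_batch, discover_constraints(reference)))
# prints ['row 1: dep_delay=-3 outside [0, 15]', 'row 1: distance is missing']
```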

Research Trends in Data Science: Supporting Tools

There are some tools out there that try to fill this particular gap. On data quality, you get things like Quilt and TopNotch that do data quality checking or data versioning. Data versioning is also interesting. If you used your data for machine learning and you replicate the whole experiment next month when you have new data, what is the difference between the datasets? Versioning your data is quite a cool idea, though obviously quite a challenge if you’re talking about massive amounts of data. FeatureHub is built around the idea that you can work across teams. When you develop machine learning models, you may have thousands of columns to go through and turn into features, and you do this in a team. How do you record these features, keep them available, and share them across teams? FeatureHub is trying to look at this. And when you build models, you have to keep track of what you’re doing as well, and things like ModelDB and MLflow look at this.
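The question “what is the difference between the datasets?” can be illustrated with content hashing. The sketch below fingerprints a dataset independently of row order and counts the records added and removed between two versions. It is a hypothetical illustration, not how Quilt or TopNotch work. At scale, you would not want to re-hash everything on every run, which is part of the challenge mentioned above.

```python
import hashlib
import json
from collections import Counter


def _canonical(row):
    # Serialise a record with sorted keys so equal records always produce equal strings.
    return json.dumps(row, sort_keys=True)


def dataset_fingerprint(rows):
    """Order-independent SHA-256 fingerprint of a list of JSON-serialisable records."""
    row_hashes = sorted(hashlib.sha256(_canonical(r).encode("utf-8")).hexdigest() for r in rows)
    return hashlib.sha256("".join(row_hashes).encode("utf-8")).hexdigest()


def diff_versions(old_rows, new_rows):
    """Count records added and removed between two versions (duplicates are respected)."""
    old, new = Counter(map(_canonical, old_rows)), Counter(map(_canonical, new_rows))
    return {"added": sum((new - old).values()), "removed": sum((old - new).values())}


last_month = [{"flight": 1, "delayed": 1}, {"flight": 2, "delayed": 0}]
this_month = [{"flight": 1, "delayed": 1}, {"flight": 3, "delayed": 1}]
print(diff_versions(last_month, this_month))  # prints {'added': 1, 'removed': 1}
```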

There’s also a whole other area of governance around models. When you have models in production, you need to check how they perform, and you may have to explain to somebody why they make certain decisions, so you need a governance layer around them. For this, you want certain kinds of APIs. You want to ask a model: Who built you? What sort of data did they use? How many decisions have you made? What have you been built for? Especially in image recognition, what do people do? They reuse deep networks. They take a network that has learned to detect cats and dogs, chop off the top and the bottom layer, and then use it elsewhere. They retrain it on their own domain, building on what it was trained for before.

But maybe somebody said, “You can’t do this. If you use this model, you can only use it to recognize cats and dogs. Don’t use it to diagnose cancer from x-ray images,” or something like that. It is important to keep track of restrictions like these when you have a model, and these approaches look in this direction. We have a whole working group at BT that looks at model governance and tries to develop standards across industry for it.
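The governance questions above can be pictured as a small API on the model itself. The following is a hypothetical sketch, not an existing standard or library. It is a wrapper that records who built the model, what data it used, and what it is for, counts its decisions, and refuses uses it was not approved for, such as taking a cats-and-dogs model to x-ray diagnosis. All field and use names are assumptions.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class GovernedModel:
    """Wraps a predict function with metadata that answers basic governance questions."""

    predict_fn: Callable[[Any], Any]
    built_by: str            # who built you?
    training_data: str       # what sort of data did they use?
    intended_use: str        # what have you been built for?
    permitted_uses: frozenset = frozenset()
    decisions_made: int = 0  # how many decisions have you made?

    def predict(self, x, use):
        # Refuse uses the model was not approved for, e.g. x-ray diagnosis for a pet classifier.
        if use not in self.permitted_uses:
            raise PermissionError(f"not approved for {use!r}; intended use: {self.intended_use}")
        self.decisions_made += 1
        return self.predict_fn(x)

    def describe(self):
        return {
            "built_by": self.built_by,
            "training_data": self.training_data,
            "intended_use": self.intended_use,
            "decisions_made": self.decisions_made,
        }


pets = GovernedModel(lambda x: "cat" if x > 0 else "dog", built_by="vision team",
                     training_data="labelled pet photos", intended_use="recognising cats and dogs",
                     permitted_uses=frozenset({"pet-recognition"}))
pets.predict(0.7, use="pet-recognition")    # returns "cat"
# pets.predict(0.7, use="x-ray-diagnosis")  # would raise PermissionError
```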

Future of AI: Explainable AI (XAI)

The future, where I think things have to go, is explainability. Explainable AI is one of the interesting keywords in the scene. This is the idea that you can ask a model, “Why are you telling me this?” or, “What sort of data have you seen in the past that makes you give me this decision?” This is really tricky to do. It’s not easy, but it is something we have to get to. We need to be able to have this sort of conversation with a model and ask it questions so that we can develop trust in it.
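One simple, partial way to answer “what sort of data have you seen in the past that makes you give me this decision?” is an example-based explanation: show the training examples closest to the query. The sketch below uses Euclidean distance in raw feature space. This is an assumption. As the Q&A below points out for images, closeness in raw features need not reflect what a model actually relies on, so this illustrates the idea rather than offering a general XAI method. The helper name and data are hypothetical.

```python
import math


def explain_by_examples(query, training_set, k=3):
    """Return the k training examples nearest to `query` by Euclidean distance.

    training_set is a list of (features, label) pairs; features are tuples of floats.
    """
    return sorted(training_set, key=lambda item: math.dist(query, item[0]))[:k]


training_set = [((0.0, 0.0), 0), ((1.0, 1.0), 1), ((5.0, 5.0), 1), ((0.2, 0.1), 0)]
print(explain_by_examples((0.1, 0.0), training_set, k=2))
# prints [((0.0, 0.0), 0), ((0.2, 0.1), 0)]
```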

So, we need to be able to trust that it has been built in a proper way and that all the tests have been done. When you buy something from a vendor who says, “Here’s my facial image recognition system,” how do you know they have built it in the proper way? If you have certain kinds of quality gates for your own software and your own machine learning, how do you know that the people you buy from have the same? How can you check this? These kinds of things could be introduced through explainability, and model-based design could help ensure them. Think of explainability by design, similar to security by design or privacy by design.

This is what I wanted to say. I hope you guys found it interesting and I’m happy to take questions. I don’t know how it works, but there should be microphones coming around I think.

Questions & Answers

Participant 2: A very interesting talk. One of the things that is used to correct fundamental misconceptions in the academic world, as I understand it, not being within it, is other people challenging the original results and using that attempt to reproduce as a way to discover problems. Is there any way to reproduce that kind of argumentative tension inside an organization, or is that not the right way to do it?

Nauck: Yes. What we try to do is replicate this with some kind of peer review. If one of my guys builds a machine learning model, then somebody else has to check it. That brings in the reproducibility idea: Where is the data that you used? What are the steps you went through, and what is the result that you have? Then the reviewer tries to get to the same result. In academia, you find more and more that people are asked to publish not only the code but also the data they used, so that somebody can take it and replicate the results. You can do this inside your own team or organization.
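The reviewer questions (where is the data, what were the steps, what was the result) can be captured in a run manifest that a colleague replays. Here is a hypothetical Python sketch under simple assumptions. The “pipeline” is a toy seeded shuffle and split with a placeholder metric, the `steps` list is recorded for the reviewer to read but not executed, and exact equality of results presumes the same code and Python version.

```python
import hashlib
import json
import random


def data_sha256(rows):
    # Fingerprint the exact data used, so a reviewer can confirm they have the same input.
    return hashlib.sha256(json.dumps(rows, sort_keys=True).encode("utf-8")).hexdigest()


def run_pipeline(rows, seed):
    """Toy pipeline: seeded shuffle, 80/20 split, then a placeholder metric on the held-out part."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    held_out = shuffled[int(0.8 * len(shuffled)):]
    return sum(r["delayed"] for r in held_out) / len(held_out)


def make_manifest(rows, seed):
    # Record which data was used, which steps ran (documentation only), and the result.
    return {"data_sha256": data_sha256(rows), "seed": seed,
            "steps": ["shuffle", "split_80_20", "held_out_positive_rate"],
            "result": run_pipeline(rows, seed)}


def reproduce(manifest, rows):
    """Reviewer side: rerun from the manifest and report whether the result matches."""
    if data_sha256(rows) != manifest["data_sha256"]:
        return False, "data differs from the original run"
    result = run_pipeline(rows, manifest["seed"])
    if result != manifest["result"]:
        return False, f"got {result}, recorded {manifest['result']}"
    return True, "reproduced"


rows = [{"delayed": i % 2} for i in range(10)]
manifest = make_manifest(rows, seed=42)
print(reproduce(manifest, rows))  # prints (True, 'reproduced')
```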

Participant 3: Thank you for your very interesting and thought-provoking presentation. I have a question about a few of the slides that you had in there. For instance, you had the image recognition problem, and you used an example about huskies and wolves.

Nauck: This one?

Participant 3: Yes, this one. Huskies and wolves are easy for a human to tell apart, because it’s already built into us to see, through our eyes, that things are in a certain order. If they were presented as a vector of 2 million pixel values, they would be very difficult for a human too. Humans build their vision in layers upon layers of information, and they check it. The problem here lies in the fact that the models interpolate or extrapolate beyond their area of expertise, and they fail. Is there a way to identify when a model is exceeding its boundaries in interpolation or extrapolation?

Nauck: Yes, very good question, and very difficult to answer, especially in image recognition, where you have potentially got an infinite number of images that can be entered into a system. These systems don’t see or understand the image; they don’t look for concepts in an image like we would. If you were in the previous talk of the track, Martin showed the attention hotspots: where humans look at an image, and where the algorithms look. They have nothing to do with each other. It’s important to realize this is only statistical pattern recognition, as you say. So, if you want to build a system where you can be sure what it is doing, you need to think about combining different concepts.

For example, let’s say you have a camera in a car that is supposed to look at the road and identify only whether the road is free or not, which is a similar challenge to this one. Then how do you guarantee that the image actually shows a road? These image processing systems can be fooled. You could have an image that looks the same to you and me, but one pixel has changed, and the system classifies it completely differently. You can find examples like this. So, this is really, really challenging.

What you would typically do when you test inputs to a system is say, “Okay, this comes from a particular domain,” so I can check that the input comes from that domain. Is it within certain intervals, or whatever? You don’t really have this here, because you don’t have concepts; you just have pixels. You can think of different ways of looking at images. There could be the old-fashioned type that does image segmentation, and you use this as well and then check, “Okay, the system here says that’s a road, but have I seen a white line in the middle, and have I seen two black stripes on either side of it?” Or things like that. You can try these things, but this is, I would say, in its infancy.

Participant 3: So, you suggest that there is a certain kind of unit testing for machine vision or machine learning? This actually takes me to the diagram a few slides after this. Yes, this one. Here, step three is feature generation and step four is model building. Say the model building creates a machine learning model, and this machine learning model is used as a feature in the next model, so the models build on each other. Now I have a system that is not tested the way you showed with your code examples; the system performs statistically. Sometimes it’s correct, and sometimes it’s not, depending on the input. That would require something like testing using distributions. Is there any literature on this, or any work that has used models upon models upon models to create this kind of stack of machine learning systems?

Nauck: Again, great question. I haven’t seen any.

Participant 3: None?

Nauck: No. The typical testing that you see in machine learning is all around cross-validation: come up with a model for which you can guarantee a certain performance. That’s the idea. But looking at what type of errors a model makes, what that means in the application context, and how I can detect these errors when I use it somewhere: I have not seen any work on this.
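For reference, here is a minimal sketch of the k-fold cross-validation mentioned above. It also pools the (actual, predicted) pairs across folds, which gives a first handle on what type of errors a model makes. In an application, a false negative (a delayed flight predicted as on time) may cost something quite different from a false positive. The threshold “learner” is a hypothetical toy, the data is invented, and everything uses only the Python standard library.

```python
import random
from collections import Counter


def k_fold_indices(n, k, seed=0):
    """Shuffle range(n) with a fixed seed and split it into k folds of near-equal size."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def cross_validate(xs, ys, fit, k=5, seed=0):
    """Return (accuracy, Counter of (actual, predicted) pairs) pooled over k folds."""
    confusion = Counter()
    for test_idx in k_fold_indices(len(xs), k, seed):
        held_out = set(test_idx)
        train = [(x, y) for i, (x, y) in enumerate(zip(xs, ys)) if i not in held_out]
        model = fit([x for x, _ in train], [y for _, y in train])
        for i in test_idx:
            confusion[(ys[i], model(xs[i]))] += 1
    correct = sum(count for (actual, predicted), count in confusion.items() if actual == predicted)
    return correct / len(xs), confusion


def fit_threshold(train_x, train_y):
    """Hypothetical toy learner: threshold halfway between the two class means."""
    pos = [x for x, y in zip(train_x, train_y) if y == 1]
    neg = [x for x, y in zip(train_x, train_y) if y == 0]
    threshold = (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2
    return lambda x: 1 if x >= threshold else 0


xs = [1, 2, 3, 4, 5, 101, 102, 103, 104, 105]
ys = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
accuracy, confusion = cross_validate(xs, ys, fit_threshold)
print(accuracy)  # prints 1.0
```

In the `confusion` counter, keys `(1, 0)` are false negatives and `(0, 1)` are false positives. Reading these separately, rather than only the single accuracy figure, is a small step toward the error analysis the answer says is missing.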
