Month: October 2020

MMS • RSS

Article originally posted on Data Science Central. Visit Data Science Central

Obtaining good quality data can be a tough task. An organization may face quality issues when integrating data sets from various applications or departments or when entering data manually.

Here are some of the things a company can do to improve the quality of the information it collects:

1. Data Governance plan

A good data governance plan should not only cover ownership, classifications, sharing, and sensitivity levels but also follow up with procedural details that outline your data quality goals. It should also identify all the personnel involved in the process and their roles and, more importantly, define a process for working through and resolving issues.

Data governance can be thought of as the process of ensuring that there are data curators who are looking at the information being ingested into the organization and that there are processes in place to keep that data internally consistent, making it easier for consumers of that data to get access to it in the forms that they need.

2. Data Quality Guidance

You should also have a clear guide to use when separating good data from bad data. You will have to calibrate your automated data quality system with this information, so you need to have it laid out beforehand. This step also involves validating the data so that, before it can be further processed, there is a level of surety about the data and an estimate about how much work it will take to make sure that data meets minimal standards.
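As a minimal sketch of how such guidance might be turned into an automated check, the Python below applies a few rules to incoming records and estimates the share that is usable. The field names (`customer_id`, `email`, `age`) and the rules themselves are illustrative assumptions, not taken from any particular data quality product.

```python
import re

# Hypothetical rules: each maps a field name to a predicate that is True for "good" values.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

RULES = {
    "customer_id": lambda v: isinstance(v, int) and v > 0,
    "email": lambda v: isinstance(v, str) and EMAIL_PATTERN.match(v) is not None,
    "age": lambda v: isinstance(v, int) and 0 <= v <= 120,
}


def validate(record):
    """Return the names of the fields in `record` that violate a rule."""
    return [field for field, is_valid in RULES.items()
            if not is_valid(record.get(field))]


def quality_report(records):
    """Split records into good and bad, and compute the share that passes."""
    good, bad = [], []
    for record in records:
        failures = validate(record)
        if failures:
            bad.append((record, failures))
        else:
            good.append(record)
    share_good = len(good) / len(records) if records else 0.0
    return good, bad, share_good


records = [
    {"customer_id": 1, "email": "ann@example.com", "age": 34},
    {"customer_id": 2, "email": "not-an-email", "age": 51},
    {"customer_id": -3, "email": "bob@example.com", "age": 200},
]
good, bad, share_good = quality_report(records)
```

Keeping the rules in one table makes it straightforward to recalibrate the check when the guidance changes, and the list of failed fields per record gives a rough estimate of how much cleanup work lies ahead.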

3. Data Cleansing Process

Data correction is the whole point of looking for flaws in your datasets. Organizations need to provide guidance on what to do with specific forms of bad data and identify what is critical and common across all organizational data silos. Cleansing data manually is cumbersome because, as the business shifts, new strategies dictate changes to the data and the underlying processes. Data quality guidance and data cleansing are frequently done together, not only to make sure that the data is consistent but also to raise flags when data is inadequate for the needs of the organization.

4. Clear Data Lineage

With data flowing in from different departments and digital systems, you need a clear understanding of data lineage: how an attribute is transformed as it moves from system to system. That understanding builds trust and confidence in the data. Data lineage (also known as provenance) is metadata that indicates where the data came from, how it has been transformed over time, and who, ultimately, is responsible for that data.
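One way to picture lineage metadata is as a small record attached to each attribute. The sketch below is an assumption about how such a record could look; the class names, field names, and example systems (`crm`, `etl`, `warehouse`) are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class LineageStep:
    system: str          # system that performed the transformation
    operation: str       # short description of what was done
    timestamp: datetime  # when the transformation happened


@dataclass
class LineageRecord:
    attribute: str  # the attribute being tracked
    source: str     # where the data came from
    owner: str      # who is ultimately responsible for it
    steps: list = field(default_factory=list)

    def add_step(self, system, operation):
        """Append one transformation to the attribute's history."""
        self.steps.append(LineageStep(system, operation, datetime.now(timezone.utc)))

    def path(self):
        """Return the systems the attribute has passed through, in order."""
        return [self.source] + [step.system for step in self.steps]


record = LineageRecord(attribute="customer_email", source="crm", owner="sales-data-steward")
record.add_step("etl", "lowercased and trimmed whitespace")
record.add_step("warehouse", "joined to customer dimension")
```

Here `record.path()` answers the "where did this come from" question, while `record.owner` answers "who is responsible".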

5. Data Catalog and Documentation

Improving data quality is a long-term process that you can streamline by drawing on both anticipated issues and past findings. By documenting every detected problem, along with the associated data quality score, in the data catalog, you reduce the risk of repeating mistakes and strengthen your data quality regime over time. Data catalogs are also increasingly tied into semantification, the process of extracting meaning, relationships, and dimensional analysis from the incoming (or ingested) data.

There is simply too much data out there to incorporate all of it into your business intelligence strategy. Data volumes are building up even more with the introduction of new digital systems and the increasing spread of the internet. For any organization that wants to keep up with the times, that translates to a need for more personnel, from data curators and data stewards to data scientists and data engineers. Luckily, today’s technology and AI/ML innovation allow even the least tech-savvy individuals to contribute to data management with ease. Organizations should leverage these analytics-augmented data quality and data management platforms to realize immediate ROI rather than wait through longer implementation cycles.

MMS • Sergio De Simone

Article originally posted on InfoQ. Visit InfoQ

Just AI Conversational Framework (JAICF) provides a Kotlin-based DSL to enable the creation of conversational user interfaces. JAICF works with popular voice and text conversation platforms as well as different NLU engines. InfoQ has spoken with Just AI’s Solution Owner, Vitaliy Gorbachev.

By Sergio De Simone

Cybersecurity Experts Discuss Company Misconception of The Cloud and More in Roundtable Discussion

MMS • RSS

Article originally posted on Data Science Central. Visit Data Science Central

Industry experts from TikTok, Microsoft, and more talk latest trends on cybersecurity & public policy.

Enterprise Ireland, Ireland’s trade and innovation agency, hosted a virtual Cyber Security & Public Policy panel discussion with several industry-leading experts. The roundtable discussion allowed cybersecurity executives from leading organizations to come together and discuss The Nexus of Cyber Security and Public Policy.

The panel included Roland Cloutier, the Global Chief Security Officer of TikTok; Ann Johnson, the CVP of Business Development – Security, Compliance & Identity at Microsoft; Richard Browne, the Director of Ireland’s National Cyber Security Centre; and Melissa Hathaway, the President of Hathaway Global Strategies LLC, who formerly spearheaded the Cyberspace Policy Review for President Barack Obama and led the Comprehensive National Cyber Security Initiative (CNCI) for President George W. Bush.

Panelists discussed the European Cloud and the misconception companies have of complete safety and security when migrating to the Cloud and whether it is a good move for a company versus a big mistake. Each panelist also brought valuable perspective and experience to the table on other discussion topics including cyber security’s recent rapid growth and changes; the difference between U.S. and EU policies and regulations; who holds the responsibility for protecting consumer data and privacy; and more.

“As more nations and states continue to improve upon cybersecurity regulations, the conversation between those developing policy and those implementing it within the industry becomes more important,” said Aoife O’Leary, Vice President of Digital Technologies, Enterprise Ireland. “We were thrilled to bring together this panel from both sides of the conversation and continue to highlight the importance of these discussions for both Enterprise Ireland portfolio companies and North American executives and thought leaders.”

This panel discussion was the second of three events in Enterprise Ireland’s Cyber Demo Day 2020 series, inclusive of over 60 leading Irish cyber companies, public policy leaders, and cyber executives from many of the largest organizations in North America and Ireland.

To view a recording of the Cyber Security & Public Policy Panel Discussion from September 23rd, please click here.

###

About Enterprise Ireland

Enterprise Ireland is the Irish State agency that works with Irish enterprises to help them start, grow, innovate, and win export sales in global markets. Enterprise Ireland partners with entrepreneurs, Irish businesses, and the research and investment communities to develop Ireland’s international trade, innovation, leadership, and competitiveness. For more information on Enterprise Ireland, please visit https://enterprise-ireland.com/en/.

Article: Q&A on The Book AO, Concepts and Patterns of 21-st Century Agile Organizations

MMS • Ben Linders Pierre Neis

Article originally posted on InfoQ. Visit InfoQ

The book AO, concepts and patterns of 21-st century agile organizations by Pierre Neis explores the concept of designing systems to allow for agile behaviour. It provides patterns to establish agile organizations that are able to respond to 21-st century challenges.

By Ben Linders, Pierre Neis

MMS • RSS

Article originally posted on Data Science Central. Visit Data Science Central

What Is Fintech?

“Fintech” describes the new technology integrated into various spheres to improve and automate all aspects of financial services provided to individuals and companies. Initially, this word was used for the tech behind the back-end systems of big banks and other organizations. Now it covers a wide spectrum of finance-related innovations in multiple industries, from education to cryptocurrencies and investment management.

While traditional financial institutions offer a bundle of services, fintech focuses on streamlining individual offerings, making them affordable, often one-click experiences for users. This impact can be described with the word “disruption”: to be competitive, banks and other conventional establishments now have no choice but to change entrenched practices through cooperation with fintech startups. A vivid example is Visa’s partnership with Ingo Money to accelerate the process of digital payments. Despite the slowdown related to the Covid-19 epidemic, the fintech industry will recover momentum and continue to change the face of the finance world.

Fintech users

Fintech users fall into four main categories, grouped below under two models. Trends such as mobile banking, big data, and the unbundling of financial services will create an opportunity for all of them to interact in novel ways:

- B2B – banks and their business clients

- B2C – small enterprises and individual consumers

The main target group for consumer-oriented fintech is millennials – young, ready to embrace digital transformation, and accumulating wealth.

What needs do they have? According to the Credit Karma survey, 85% of millennials in the USA suffer from burnout and have no energy to think about managing their personal finances. Therefore, any apps that automate and streamline these processes have a good chance of becoming popular. They need an affordable personal financial assistant that can do the following 24/7:

- Analyze spending behaviors, recurrent payments, bills, debts

- Present an overview of their current financial situation

- Provide coaching and improve financial literacy

What they expect to achieve:

- Stop overspending (avoid late bills, do smart shopping with price comparison, cancel unnecessary subscriptions, etc.)

- Develop saving habits, get better organized

- Invest money (analyze deposit conditions in different banks, form an investment portfolio, etc.)

The fintech industry offers many solutions that can meet all these goals, not only on an individual but also on a national scale. However, in many countries there is still a high percentage of unbanked people, who have no form of bank account. According to the World Bank report, this number was 1.7 billion people in 2017. Mistrust of new technologies, poverty, and financial illiteracy are the obstacles that keep this group from tapping into the huge potential of fintech. Therefore, businesses and governments must direct inclusion efforts towards this audience, as all stakeholders will benefit. Affordable and easy-to-get fintech services customized for this huge group of first-time users are likely to be a big trend in the future.

Big Data, AI, ML in Fintech

According to an Accenture report, AI integration will boost corporate profits in many industries, including fintech, by almost 40% by 2035, which equals a staggering $14 trillion. Without a doubt, Big Data technologies, such as Streaming Analytics, In-memory computing, Artificial Intelligence, and Machine Learning, will be the powerhouse behind numerous business objectives that banks, credit unions, and other institutions strive to achieve:

- Aggregate and interpret massive amounts of structured and unstructured data in real-time.

- With the help of predictive analytics, make accurate future forecasts, identify potential problems (e.g., credit scoring, investment risks)

- Build optimal strategies based on analytical reports

- Facilitate decision-making

- Segment clients for more personalized offers and thus increase retention.

- Detect suspicious behavior, prevent identity fraud and other types of cybercrime, make transactions more secure with such technologies as face and voice recognition.

- Find and extend new borrower pools among the no-file/thin-file segment, widely represented by Gen Z (the successors of millennials), who lack or have a short credit history.

- Automate low-value tasks (e.g., such back-office operations as internal technical requests)

- Cut operational expenses by streamlining processes (e.g., image recognition algorithms for scanning, parsing documents, and taking further actions based on regulations) and reducing man-hours.

- Considerably improve client experience with conversational user interfaces, available 24/7, and capable of resolving any issues instantly. Conversational banking is used by many big banks worldwide; some companies integrate financial chatbots for processing payments in social media.

Neobanks

Digital or internet-only banks do not have brick-and-mortar branches and operate exclusively online. The word neobank became widely used in 2017 and referred to two types of app-based institutions: those that provided financial services with their own banking license and those partnering with traditional banks. The inconvenience of wasting time in lines and on paperwork is the reason why bank visits are predicted to fall to just four a year by 2022. Neobanks, e.g., Revolut, Digibank, FirstDirect, offer a wide range of services (global payments and P2P transfers, virtual cards for contactless transactions, operations with cryptocurrencies, etc.), and the fees are lower than with traditional banks. Clients get support through in-app chat. Among the challenges associated with digital banking are higher susceptibility to fraud and lower trustworthiness due to the lack of a physical address. In the US, the development of neobanks faced regulatory obstacles. However, the situation is changing for the better.

Smart contracts

A smart contract is software that allows automatic execution and control of agreements between buyers and sellers. How does it work? If two parties want to agree on a transaction, they no longer need a paper document and a lawyer. They sign the agreement digitally with cryptographic keys. The document itself is encoded in a tamper-proof manner. The role of witnesses is performed by a decentralized blockchain network of computing devices that receive copies of the contract, and the code guarantees the fulfillment of its provisions, with all transactions transparent, trackable, and irreversible. This sky-high level of reliability and security makes any fintech operation possible anywhere in the world, at any time. The parties to the contract can be anonymous, and there is no need for other authorities to regulate or enforce its implementation.

Open banking

Open banking is a system that allows third parties to access the data of bank and non-bank financial institutions through APIs (application programming interfaces) to create a network. Third-party service providers, such as tech startups, aggregate these data through apps, with user consent, and apply them to identify, for instance, the best financial products, such as a savings account with the highest interest rate. Networked accounts will allow banks to accurately calculate mortgage risks and offer the best terms to low-risk clients. Open banking will also help small companies save time with online accounting and will play an important role in fraud detection. Services like Mint require users to provide credentials for each account, although this practice has security risks, and data processing is not always accurate. APIs are a better option as they allow direct data sharing without access to the login and password. Consumer security is still compromised, and this is one of the main reasons why the open banking trend hasn’t taken off yet. Many banks worldwide cannot provide open APIs of sufficient quality to meet existing regulatory standards. There are still a lot of blind spots, including those related to technology. However, open banking is a promising trend. The Accenture report offers many interesting insights.

Blockchain and cryptocurrencies

Blockchain, the distributed ledger technology that underlies many cryptocurrencies, will continue to transform the face of global finance, with the US and China being global adoption leaders. The most valuable feature of a blockchain database is that data cannot be altered or deleted once it has been written. This high level of security makes it well suited to big data apps across various sectors, including healthcare, insurance, energy, and banking, especially those dealing with confidential information. Although the technology is still in the early stages of its development and will eventually become more suited to the needs of fintech, there are already innovative Blockchain-based solutions both from giants, like Microsoft and IBM, and from numerous startups. The philosophy of decentralized finance has already given rise to a variety of peer-to-peer financing platforms and will be the source of new cryptocurrencies, perhaps even national ones. Blockchain considerably accelerates transactions between banks through secure servers, and banks use it to build smart contracts. The technology is also growing in popularity with consumers. Since 2009, when Bitcoin was created, the number of Blockchain wallet users has reached 52 million. A wallet adds a layer of security known as “tokenization”: payment information is sent to vendors as tokens to associate the transaction with the right account.
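The claim that written data cannot be altered rests on each block committing to the hash of the block before it. The following is a minimal single-machine sketch of that idea using Python's `hashlib`; it is not how any specific blockchain is implemented, it leaves out consensus and networking entirely, and the transaction fields are made up for illustration.

```python
import hashlib
import json


def block_hash(index, data, previous_hash):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps({"index": index, "data": data, "prev": previous_hash},
                         sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain, data):
    """Add a block that commits to the block before it."""
    previous_hash = chain[-1]["hash"] if chain else "0" * 64
    index = len(chain)
    chain.append({
        "index": index,
        "data": data,
        "prev": previous_hash,
        "hash": block_hash(index, data, previous_hash),
    })


def is_valid(chain):
    """Recompute every hash; an edit to any written block is detected."""
    for i, block in enumerate(chain):
        expected_prev = chain[i - 1]["hash"] if i > 0 else "0" * 64
        if block["prev"] != expected_prev:
            return False
        if block["hash"] != block_hash(block["index"], block["data"], block["prev"]):
            return False
    return True


ledger = []
append_block(ledger, {"from": "alice", "to": "bob", "amount": 10})
append_block(ledger, {"from": "bob", "to": "carol", "amount": 4})
valid_before = is_valid(ledger)

ledger[0]["data"]["amount"] = 1000  # tamper with an already-written block
valid_after = is_valid(ledger)
```

In this sketch the tampering is not prevented but detected: the stored hash of the first block no longer matches its contents. In a distributed network, copies held by other participants would then reject the altered chain.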

Regtech

Regtech, or regulation technology, is represented by a group of companies, e.g., IdentityMind Global, Suade, Passfort, Fund Recs, providing AI-based SaaS solutions to help businesses comply with regulatory processes. These companies process complex financial data and combine them with information on previous regulatory failures to detect potential risks and design powerful analytical tools. Finance is a conservative industry, heavily regulated by the government. As the number of technology companies providing financial services increases, problems associated with compliance with such regulations also multiply. For instance, process automation makes fintech systems vulnerable to hacker attacks, which can cause serious damage. Responsibility for such security breaches and misuse of sensitive data, prevention of money laundering, and fraud are the main issues that concern state institutions, service providers, and consumers. There will be over 2.6 billion biometric users of payment systems by 2023, so the regtech application area is huge.

In the EU, PSD2 and SCA aim to regulate payments and their providers. Although these legal acts create some regulatory obstacles for fintech innovations, the European Commission also proposes a series of alleviating changes, for instance, eliminating paper documents for consumers. In the US, fintech companies must comply with outdated financial legislation. The silver lining is the new FedNow service for instantaneous payments, which is likely to be launched in 2023–2024 and will provide a ready public infrastructure.

Insurtech

The insurance industry, like many others, needs streamlining to become more efficient and cost-effective and to meet the demands of the time. Insurtech companies are exploring new possibilities through a new generation of smart apps: ultra-customization of policies, behavior-based dynamic premium pricing that draws on data from Internet-enabled devices such as GPS navigators and fitness activity trackers, AI brokerages, on-demand insurance for micro-events, and so on. As we mentioned before, the insurance business is also subject to strict government regulations, and it requires close cooperation between traditional insurers and startups to make a breakthrough that will benefit everyone.

***

Originally published here – https://blackthorn-vision.com/blog/fintech-trends-AI-smart-contracts-neobanks-insuretech-regtech-open-banking-and-blockchain.

MMS • RSS

Article originally posted on Data Science Central. Visit Data Science Central

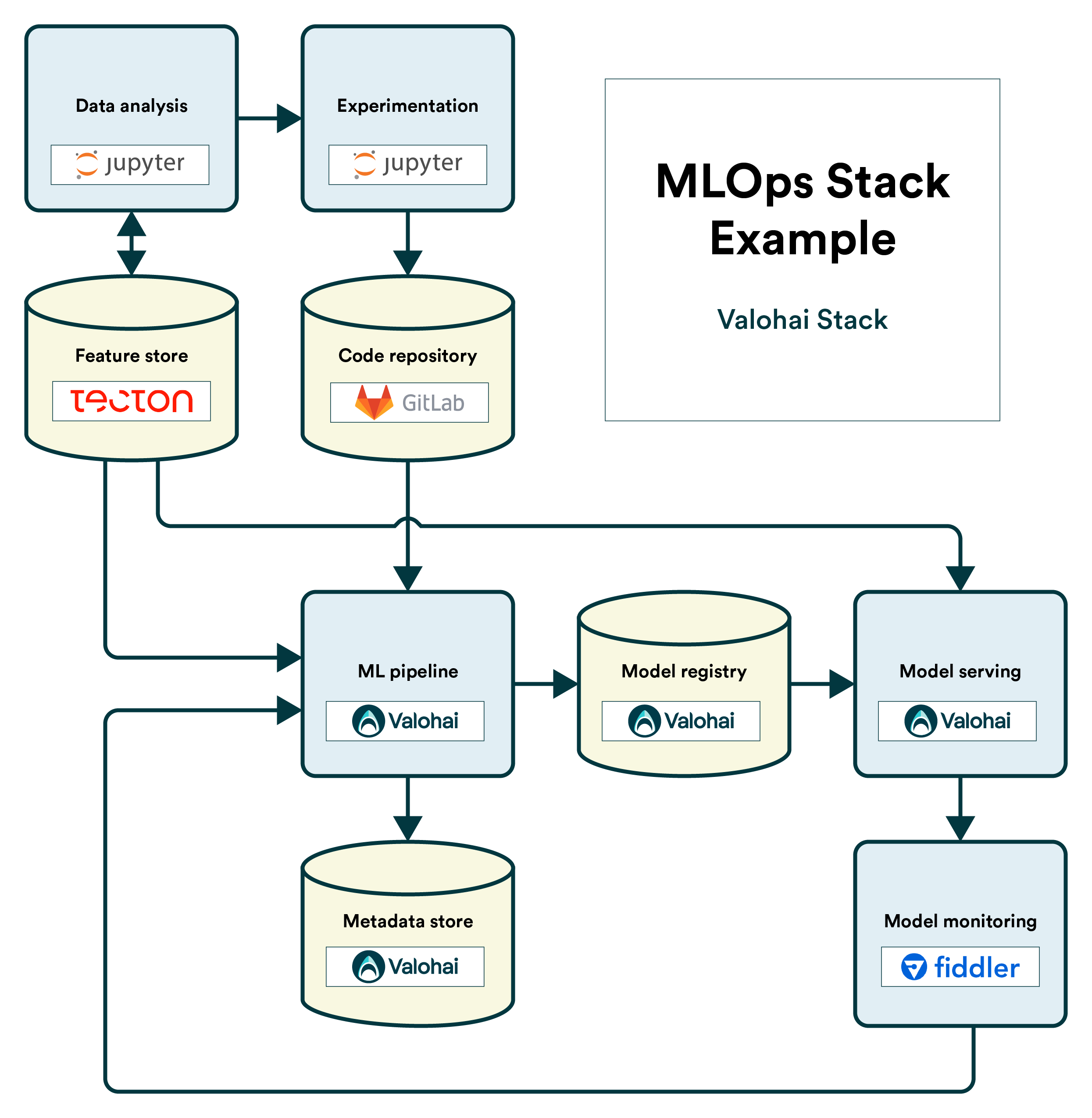

What is MLOps (briefly)

MLOps is a set of best practices that revolve around making machine learning in production more seamless. The purpose is to bridge the gap between experimentation and production with key principles to make machine learning reproducible, collaborative, and continuous.

MLOps is not dependent on a single technology or platform. However, technologies play a significant role in practical implementations, similarly to how adopting Scrum often culminates in setting up and onboarding the whole team to e.g. JIRA.

What is the MLOps Stack?

To make it easier to consider what tools your organization could use to adopt MLOps, we’ve made a simple template that breaks down a machine learning workflow into components. This template allows you to consider where you need tooling.


As a machine learning practitioner, you’ll have a lot of choices in technologies. First, some technologies cover multiple components, while others are more singularly focused. Second, some tools are open-source and can be implemented freely, while others are proprietary but save you the implementation effort.

No single stack works for everyone, and you need to consider your use-case carefully. For example, your requirements for model monitoring might be much more complex if you are working in the financial or medical industry.

The MLOps Stack template is loosely based on Google’s article on MLOps and continuous delivery, but we tried to simplify the workflow to a more manageable abstraction level. There are nine components in the stack which have varying requirements depending on your specific use case.

| Component | Requirements | Selected tooling |
|---|---|---|
| Data analysis | E.g. Must support Python/R, can run locally | |
| Experimentation | | |
| Feature store | | |
| Code repository | | |
| ML pipeline | | |
| Metadata store | | |
| Model registry | | |
| Model serving | | |
| Model monitoring | | |
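For teams that prefer to track the template in code rather than in a PDF, the table could be mirrored as a plain data structure. This is only a sketch of one possible representation; the tool name `example-notebook-tool` is a placeholder, and `missing_tooling` is a hypothetical helper, not part of the template.

```python
# The nine components of the template. "requirements" and "tool" mirror the
# table's second and third columns; None marks a component with no tool chosen.
# The tool name used below is a placeholder for illustration only.
stack = {
    "Data analysis": {"requirements": "Must support Python/R, can run locally",
                      "tool": "example-notebook-tool"},
    "Experimentation": {"requirements": "", "tool": "example-notebook-tool"},
    "Feature store": {"requirements": "", "tool": None},
    "Code repository": {"requirements": "", "tool": None},
    "ML pipeline": {"requirements": "", "tool": None},
    "Metadata store": {"requirements": "", "tool": None},
    "Model registry": {"requirements": "", "tool": None},
    "Model serving": {"requirements": "", "tool": None},
    "Model monitoring": {"requirements": "", "tool": None},
}


def missing_tooling(stack):
    """Return the components that still have no selected tool, in template order."""
    return [name for name, entry in stack.items() if entry["tool"] is None]


gaps = missing_tooling(stack)
```

Listing the gaps this way shows at a glance where tooling decisions are still open.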

An Example MLOps Stack

As an example, we’ve put together a technology stack containing our MLOps platform, Valohai, and some of our favorite tools that complement it, including:

- JupyterHub / Jupyter Notebook for data analysis and experimentation

- Tecton for feature stores

- GitLab for code repositories

- Fiddler Labs for model monitoring

- Valohai for training pipelines, model serving, and associated stores

Get Started

You might want to start by placing the tools you’re already using and work from there. The MLOps Stack template is free to download here.

If you are interested in learning more about MLOps, consider our other related content.

Originally published at https://valohai.com.

MMS • Renato Losio

Article originally posted on InfoQ. Visit InfoQ

HashiCorp has recently announced the public preview of the HashiCorp Vault AWS Lambda Extension. The new service is based on the recently launched AWS Lambda Extensions API and allows a serverless application to securely retrieve secrets from HashiCorp Vault without making the Lambda functions Vault-aware.

By Renato Losio

MMS • RSS

Article originally posted on Data Science Central. Visit Data Science Central

Machine learning is an important skill to have today. But acquiring the skill set can take some time, especially when the path to it is scattered. The points below cover a wide range of topics and should give anyone starting from scratch a very good start. Learners should not limit themselves to this set of skills, however: machine learning is an ever-expanding field, and keeping abreast of the latest developments is always beneficial for scaling new heights in it.

- Programming knowledge

- Applied Mathematics

- Data Modeling and Evaluation

- Machine Learning Algorithms

- Neural Network Architecture

1. PROGRAMMING KNOWLEDGE

The very essence of machine learning is coding (unless you are building something with drag-and-drop tools that does not require much customization): cleaning the data, building the models, and validating them. Good programming skills, along with knowledge of best practices, always help. You might be using Java-based or other object-oriented programming. But irrespective of what learners use, debugging, writing efficient user-defined functions and for loops, and exploiting the inherent properties of data structures pay off in the long run. A good understanding of the following will help:

- Computer Science Fundamentals and Programming

- Software Engineering and System Design

- Machine Learning Algorithms and Libraries

- Distributed computing

- Unix

2. APPLIED MATHEMATICS

Knowledge of mathematics and related skills will always be beneficial for understanding the theoretical concepts behind machine learning algorithms. Statistics, calculus, coordinate geometry, probability, permutations, and combinations come in very handy, although learners do not have to work through the mathematics by hand. Libraries and programming languages help with some of it, but these topics are very useful for understanding the underlying principles. Some of the useful mathematical concepts are listed below.

2.1. Linear Algebra

The following linear algebra skills can be very useful:

- Principal Component Analysis (PCA)

- Singular Value Decomposition (SVD)

- Symmetric Matrices

- Matrix Operations

- Eigenvalues & Eigenvectors

- Vector Spaces and Norms
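Several of these topics meet in one small computation. The first principal component in PCA is the dominant eigenvector of the data's covariance matrix, and power iteration is one simple way to approximate it. The sketch below uses only the standard library; the 2×2 symmetric matrix is an arbitrary example, and in practice a numerical library would normally be used instead.

```python
import math


def mat_vec(matrix, vector):
    """Multiply a square matrix (list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]


def norm(vector):
    """Euclidean (L2) norm of a vector."""
    return math.sqrt(sum(x * x for x in vector))


def power_iteration(matrix, iterations=100):
    """Approximate the dominant eigenvalue and eigenvector of a symmetric matrix."""
    vector = [1.0] * len(matrix)
    for _ in range(iterations):
        product = mat_vec(matrix, vector)
        length = norm(product)
        vector = [x / length for x in product]
    # Rayleigh quotient v^T A v (v has unit length) estimates the eigenvalue.
    eigenvalue = sum(v * w for v, w in zip(vector, mat_vec(matrix, vector)))
    return eigenvalue, vector


A = [[4.0, 1.0],
     [1.0, 3.0]]
eigenvalue, eigenvector = power_iteration(A)
```

For this matrix the exact dominant eigenvalue is (7 + √5) / 2, so the result can be checked by hand against the characteristic polynomial.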

2.2. Probability Theory and Statistics

There are a lot of probability-based algorithms in machine learning, and knowledge of probability becomes useful in such cases. The following topics in probability are good to know:

- Probability Rules

- Bayes’ Theorem

- Variance and Expectation

- Conditional and Joint Distributions

- Standard Distributions (Bernoulli, Binomial, Multinomial, Uniform and Gaussian)

- Moment Generating Functions, Maximum Likelihood Estimation (MLE)

- Prior and Posterior

- Maximum a Posteriori Estimation (MAP)

- Sampling Methods
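Two of the topics above, Bayes' theorem and maximum likelihood estimation, fit in a few lines of Python. The numbers are illustrative assumptions (a 1% base rate, 95% sensitivity, 5% false positives), not figures from any study.

```python
def posterior(prior, likelihood, false_positive_rate):
    """P(condition | positive test) via Bayes' theorem."""
    evidence = likelihood * prior + false_positive_rate * (1 - prior)
    return likelihood * prior / evidence


def bernoulli_mle(samples):
    """The MLE of a Bernoulli parameter is the mean of the 0/1 outcomes."""
    return sum(samples) / len(samples)


# Illustrative numbers: 1% base rate, 95% sensitivity, 5% false positives.
p = posterior(prior=0.01, likelihood=0.95, false_positive_rate=0.05)

# Eight coin-flip style observations, five of which are successes.
theta = bernoulli_mle([1, 0, 1, 1, 0, 1, 1, 0])
```

With these inputs the posterior is 0.0095 / 0.059, roughly 0.16, which shows how a low prior keeps the posterior modest even for a fairly accurate test. The MLE is simply 5/8.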

3. BUILDING MODELS AND EVALUATION

In the world of machine learning, there is no single algorithm that can be identified well in advance and used to build the model. Irrespective of whether the task is classification, regression, or unsupervised learning, a host of techniques needs to be tried before deciding on the best one for a given set of data points. Of course, with time and experience, modelers do develop a sense of which techniques are likely to work better than the rest, but that depends on the situation.

Finalizing the model always leads to interpreting its output, and a lot of technical terms are involved in this part that can decide the direction of interpretation. Developers therefore need to put as much stress on model interpretation as on model selection; doing so puts them in a better position to evaluate models and suggest changes. Model validation is comparatively easy and well-defined for supervised learning, but for unsupervised learning, learners need to tread carefully when choosing the hows and whens of model evaluation.

The following concepts related to model validation are very useful to know in order to be a better judge of models:

- Components of the confusion matrix

- Logarithmic Loss

- Confusion Matrix

- Area under Curve

- F1 Score

- Mean Absolute Error

- Mean Squared Error

- Rand index

- Silhouette score
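Most of the supervised metrics listed above are short formulas. The sketch below implements a few of them with the standard library so the definitions are visible; the labels are made-up examples, and a library such as scikit-learn would normally be used in practice.

```python
import math


def confusion_counts(y_true, y_pred):
    """Return (tp, fp, fn, tn) for binary labels where 1 is the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return tp, fp, fn, tn


def f1_score(y_true, y_pred):
    """Harmonic mean of precision and recall."""
    tp, fp, fn, _ = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def log_loss(y_true, probabilities, eps=1e-15):
    """Mean negative log-likelihood of the true labels."""
    total = 0.0
    for t, p in zip(y_true, probabilities):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)


def mean_absolute_error(y_true, y_pred):
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mean_squared_error(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


y_true = [1, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 0]
counts = confusion_counts(y_true, y_pred)
f1 = f1_score(y_true, y_pred)
```

The four counts are the components of the confusion matrix; precision, recall, and F1 are all derived from them, while MAE and MSE apply to regression outputs instead.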

4. MACHINE LEARNING ALGORITHMS

A machine learning engineer may not have to explicitly apply complex concepts of calculus and probability, because built-in libraries (irrespective of the platform or programming language being used) help simplify things. Libraries are aplenty, be it for data cleansing and wrangling, building models, or model evaluation. Knowing every one of them on any platform is almost impossible and, more often than not, not beneficial.

However, there is a set of libraries that is used day in and day out for tasks related to machine learning, natural language processing, or deep learning. Getting familiar with them leads to an advantageous position and faster development time. The following skills and libraries are useful:

- Exposure to packages and APIs such as scikit-learn, Theano, Spark MLlib, H2O, TensorFlow

- Expertise in models such as decision trees, nearest neighbor, neural net, support vector machine, and a knack for deciding which one fits the best

- Deciding and choosing hyperparameters that affect the learning model and the outcome

- Familiarity and understanding of concepts such as gradient descent, convex optimization, quadratic programming, partial differential equations

- Underlying working principle of techniques like random forests, support vector machines (SVMs), Naive Bayes Classifiers, etc helps drive the model building process faster
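Gradient descent, mentioned above, is easiest to see on a one-dimensional function. The sketch below minimizes f(x) = (x − 3)², chosen purely for illustration; `learning_rate` and `steps` are examples of the hyperparameters that affect how, and whether, the procedure converges.

```python
def gradient_descent(gradient, start, learning_rate=0.1, steps=200):
    """Repeatedly step against the gradient of a one-dimensional function."""
    x = start
    for _ in range(steps):
        x -= learning_rate * gradient(x)
    return x


# Minimize f(x) = (x - 3)^2, whose gradient is 2 * (x - 3).
minimum = gradient_descent(lambda x: 2 * (x - 3), start=0.0)
```

With a learning rate of 0.1, each step shrinks the distance to the minimum at x = 3 by a factor of 0.8. For this particular function, a learning rate above 1.0 would make the iterates diverge instead.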


5. NEURAL NETWORK ARCHITECTURE

Understanding the working principle of neural networks takes time, as it is different terrain within AI, especially if one considers neural nets to be an extension of machine learning techniques. That said, it is quite possible to gain a very good understanding by spending some time with them, getting to know the underlying principles, and working with them. The architecture of neural nets takes a lot of inspiration from the human brain, and hence many of its terms are derived from biology. Neural nets form the very essence of deep learning. Depending on the architecture of a neural net, we will have a shallow or a deep model. Computational complexity grows with the depth of the architecture. But deeper models evidently have an edge when it comes to solving complex problems or problems with higher input dimensions. Their effect on model performance can seem almost magical when compared with traditional machine learning algorithms. Hence it is always better to have some initial understanding of the following, so that over time learners can transition smoothly:

- The architecture of neural nets

- Single-layer perceptron

- Backpropagation and Forward propagation

- Activation functions

- Supervised Deep Learning techniques

- Unsupervised Deep Learning techniques
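The single-layer perceptron in the list above is small enough to write out in full. The sketch below trains one on logical AND with the classic perceptron learning rule and a step activation; the learning rate and epoch count are arbitrary choices for this toy problem.

```python
def step(value):
    """Step activation: fire (1) when the weighted sum is non-negative."""
    return 1 if value >= 0 else 0


def predict(weights, bias, inputs):
    """Forward pass: weighted sum of the inputs plus bias, then activation."""
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


def train_perceptron(samples, learning_rate=1.0, epochs=20):
    """Perceptron learning rule: nudge weights by the prediction error."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Logical AND is linearly separable, so a single-layer perceptron can learn it.
and_samples = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
weights, bias = train_perceptron(and_samples)
predictions = [predict(weights, bias, inputs) for inputs, _ in and_samples]
```

A single layer like this cannot learn a function that is not linearly separable, such as XOR, which is one motivation for multi-layer networks trained with backpropagation.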

Neural networks are an ever-growing field of study. The field is primarily divided into supervised and unsupervised techniques, much like machine learning in general. In deep learning (the basis of which is neural networks), supervised techniques are the most studied.


You can get more on the best machine learning course.

MMS • Sergio De Simone

Article originally posted on InfoQ. Visit InfoQ

Being written in C, the popular curl and libcurl tools, which are installed on some six billion devices worldwide, are exposed to well-known security problems arising from the use of a language that is not memory-safe. A new initiative now aims to provide a memory-safe HTTP/HTTPS backend for curl based on the Rust Hyper library.

By Sergio De Simone

MMS • RSS

Article originally posted on Data Science Central. Visit Data Science Central

Monday newsletter published by Data Science Central. Previous editions can be found here. The contribution flagged with a + is our selection for the picture of the week. To subscribe, follow this link.

Featured Resources and Technical Contributions

- So You Want to Write for Data Science Central

- Statistical Machine Learning in Python

- Approaches to Time Series Data with Weak Seasonality +

- Odds vs Probability vs Chance

- Free book – Cloud Native, Containers and Next-Gen Apps

- Best Big Data Hadoop Analytics Tools in 2021

- RPA Guide For Fintech Industry

- Applied Data Science with Python

- Question: Techniques to eliminate False Negatives

Featured Articles

- The implications of Huang’s law for the AI stack

- Digital Dreams – Analog Processes

- Waiting for Godot: Developing Competitive Differentiation

- Next Generation Chip Wars Heat Up as AMD Eyes Xilinx acquisition

- Edtech – the Future of E-learning Software

- Data Preparation Need Not Be Cumbersome Or Time Consuming

- How Artificial Intelligence Is Reshaping Small Businesses

- 5 Tried and Tested SaaS Marketing Strategies to Generate Leads

- How cognitive chatbots transform service desk interactions

- Data Science as a Service Industry: Overview and Growth Outlook

Picture of the Week

Source: article flagged with a +
