Month: February 2021

MMS • Steef-Jan Wiggers

Article originally posted on InfoQ. Visit InfoQ

Recently Google announced a partnership with Databricks to bring their fully-managed Apache Spark offering and data lake capabilities to Google Cloud. The offering will become available as Databricks on Google Cloud.

Databricks on Google Cloud will be tightly integrated with Google Cloud’s infrastructure and analytics capabilities. By integrating Databricks with Google Kubernetes Engine (GKE), customers will get a fully container-based Databricks runtime in the cloud. Furthermore, Databricks has an optimized connector with Google BigQuery that allows easy access to data in BigQuery directly via its Storage API for high-performance queries.

The integration of Databricks with Looker and support for SQL Analytics, along with an open API environment on Google Cloud, will give customers the ability to directly query the data lake, providing an entirely new visualization experience. And lastly, customers can deploy Databricks through the Google Marketplace with unified billing and one-click setup inside the Google Cloud console as soon as it becomes available.

According to the Google blog post by Kevin Ichhpurani, corporate vice president, Global Ecosystem at Google Cloud, customers can benefit from less overhead using the managed services in Google Cloud AI Platform when deploying models built on Databricks. Furthermore, they can pair Databricks with Google Cloud services to make the most of their data management and analytics investments. And finally, they save on infrastructure by having Databricks, Google Cloud, and additional analytics applications work side by side on one shared infrastructure.

Google follows Microsoft and AWS in offering Databricks on its cloud platform. Microsoft released Azure Databricks in 2018, and it is now available in 30 regions, including the recent addition of Azure China. Azure Databricks is also a first-party Microsoft Azure service, sold and supported directly by Microsoft. AWS offers Databricks on AWS in various regions, alongside its own managed Spark offering, EMR. By partnering with Google, Databricks is now the only unified data platform available on all three major cloud providers.

In a Google press release Thomas Kurian, CEO at Google Cloud, said:

We’re delighted to deliver Databricks’ lakehouse for AI and ML-driven analytics on Google Cloud. By combining Databricks’ capabilities in data engineering and analytics with Google Cloud’s global, secure network—and our expertise in analytics and delivering containerized applications—we can help companies transform their businesses through the power of data.

In addition, Ali Ghodsi, CEO and co-founder of Databricks, said in the same press release:

This is a pivotal milestone that underscores our commitment to enable customer flexibility and choice with a seamless experience across cloud platforms. We are thrilled to partner with Google Cloud and deliver on our shared vision of a simplified, open, and unified data platform that supports all analytics and AI use-cases that will empower our customers to innovate even faster.

Customers can currently sign up for the public preview of Databricks on Google Cloud, planned for March.

MMS • Arthur Casals

Article originally posted on InfoQ. Visit InfoQ

Last week, Microsoft released the first preview of .NET 6. The new version of the framework represents the final steps of the .NET unification plan that started with .NET 5, providing a cross-platform open-source platform for all things .NET. This first preview brings performance improvements and features such as WPF support for ARM64, support for Apple Silicon, and a redesigned thread pool. .NET 6 will be officially released in November, together with ASP.NET Core 6 and EF Core 6.

.NET 6 is the last step towards the long-expected .NET unification plan, aiming to provide a cross-platform, open-source framework for all things .NET. The new version of the framework includes exciting new projects such as a multi-platform UI toolkit built upon Xamarin, Blazor desktop apps, and “fast inner loop”, a set of improvements and functionalities focused on speeding up the build process (or – in some cases – skipping it altogether). According to Richard Lander, program manager at Microsoft for the .NET Team:

.NET 6 will enable you to build the apps that you want to build, for the platforms you want to target, and on the operating systems you want to use for development. […] If you are a desktop app developer, there are new opportunities for you to reach new users. If you are a mobile app developer, you will benefit from using the mainline .NET tools and APIs while targeting iOS and Android platforms. If you are a web or cloud developer, it will be easier to expose services to .NET mobile apps and share code with them.

Following Microsoft’s investment in ARM64, the first preview release of .NET 6 brings ARM64 support for Windows Presentation Foundation (WPF) and initial support for Apple Silicon ARM64 chips. According to Lander, Apple Silicon support is a key deliverable of .NET 6. The official release plans include both native and (x64) emulated builds for macOS. Other ARM64 performance improvements are also expected in the next previews, as part of the continued effort that started with .NET 5.

Another key feature in the preview release is the redesigned thread pool, re-implemented to enhance portability. Starting with .NET 6 Preview 1, the default thread pool is a managed implementation, which gives applications access to the same thread pool, with identical behavior, independent of the runtime being used. For the time being, you can still revert to the natively implemented runtime thread pool (the development team hasn’t yet decided how long it will be maintained).

Other features in this release include the adoption of System.CommandLine libraries by the .NET command-line interface (CLI) (enabling response files and directives), new Math APIs (with limited support for hardware acceleration), and better support for Windows Access Control Lists (ACLs). Performance improvements include enhancements to single-file apps, single-file signing on macOS, hardware-accelerated structs, dynamic profile-guided optimization (PGO), and Crossgen2 – a new iteration of the initial Crossgen tool for easier code generation and cross-generation development. Crossgen2 is a common dependency of many of the future container improvements planned in .NET 6.

An interesting characteristic of the .NET 6 development process – reflected in the present and future preview releases – is the adoption of an “open planning” process. This model enables you to see the direction the release is taking in terms of features and objectives, as well as to find opportunities to engage and contribute easily. At the same time, it enables the development team to collect feedback on each topic. All the current development topics – or “themes” – can be found here.

.NET 6 will be released in November 2021 as a Long Term Support (LTS) release. .NET 6 Preview 1 was tested on Visual Studio 16.9 Preview 4 and Visual Studio for Mac 8.9, and it can be downloaded here. The default .NET container images for .NET 6, starting with Preview 1, are based on Debian 11 (“bullseye”).

MMS • RSS

Article originally posted on Data Science Central. Visit Data Science Central



MMS • InfoQ

Article originally posted on InfoQ. Visit InfoQ

Data architecture is being disrupted, echoing the evolution of software architecture over the past decade. The changes coming to data engineering will look and sound familiar to those who have watched monoliths be broken up into microservices: DevOps to DataOps; API Gateway to Data Gateway; Service Mesh to Data Mesh. While this will have benefits in agility and productivity, it will come with a cost of understanding and supporting a next-generation data architecture.

Data engineers and software architects will benefit from the guidance of the experts in this eMag as they discuss various aspects of breaking down traditional silos that defined where data lived, how data systems were built and managed, and how data flows in and out of the system.

We would love to receive your feedback via editors@infoq.com or on Twitter about this eMag. I hope you have a great time reading it!

Free download

MMS • RSS

Article originally posted on Data Science Central. Visit Data Science Central

Artificial intelligence (AI) and machine learning have come a long way, both in terms of adoption across the broader technology landscape and the insurance industry specifically. That said, there is still much more territory to cover, helping integral employees like claims adjusters do their jobs better, faster and easier.

Data science is currently being used to uncover insights that claims representatives wouldn’t have found otherwise, which can be extremely valuable. Data science steps in to identify patterns within massive amounts of data that are too large for humans to comprehend on their own; machines can alert users to relevant, actionable insights that improve claim outcomes and facilitate operational efficiency.

Even at this basic level, organizations have to compile clean, complete datasets, which is easier said than done. They must ask sharp questions — questions formulated by knowing what the organization truly, explicitly wants to accomplish with AI and what users of AI systems are trying to find in existing data to get value. This means organizations have to know what problems they’re solving — no vague questions allowed. Additionally, companies must take a good look at the types of data they have access to, the quality of that data, and how an AI system might improve it. Expect this process to continue to be refined moving forward as companies attain a greater understanding of AI and what it can do.

AI is already being applied to help modernize and automate many claims-related tasks, which to this point, have been done largely on paper or scanned PDFs. As we look to the future, data science will push the insurance industry toward better digitization and improved methods of collecting and maintaining data. Insurtech will continue to mature, opening up numerous possibilities on what can be done with data.

Let’s look at some of the ways AI systems will evolve to move the insurance industry forward.

Models Will Undergo Continuous Monitoring To Eliminate Data Bias

AI will continue to advance as people become more attuned to issues of bias and explainability.

Organizations need to develop the means (or hire the right third-party vendor) to conduct continuous monitoring for bias that could creep into an AI system. When data scientists train a model, it can seem like it’s all going very well, but they might not realize the model is picking up on some bad signals, which then becomes a problem in the future. When the environment inevitably changes, that problem gets laid bare. By putting some form of continuous monitoring in place with an idea of what to expect, a system can catch potential problems before they become an issue for your customers.
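As a rough illustration of what continuous monitoring with an idea of what to expect could look like, the sketch below compares how often a model makes a positive prediction for each group in a batch and flags the batch when the gap exceeds a tolerance. The function names, the group labels, and the 0.2 tolerance are assumptions made for illustration, not part of any particular monitoring product, and a real system would track several fairness and drift metrics rather than this single one.

```python
from collections import defaultdict


def positive_rate_by_group(records):
    """Return the share of positive predictions for each group.

    `records` is an iterable of (group, prediction) pairs, where
    prediction is 1 (for example "likely to litigate") or 0.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, prediction in records:
        totals[group] += 1
        positives[group] += prediction
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(records):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = positive_rate_by_group(records)
    return max(rates.values()) - min(rates.values())


def within_tolerance(records, max_gap=0.2):
    """True when the batch looks as expected, False when it needs human review.

    max_gap is a hypothetical tolerance that a domain expert would set.
    """
    return parity_gap(records) <= max_gap


if __name__ == "__main__":
    batch = [("A", 1), ("A", 0), ("A", 0), ("A", 0),
             ("B", 1), ("B", 1), ("B", 1), ("B", 0)]
    print(positive_rate_by_group(batch))
    print("within tolerance:", within_tolerance(batch))
```

Running a check like this on every scoring batch, rather than once at training time, is what lets a shift in the environment surface before customers feel it.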

Right now, people are just doing basic QA, but it won’t be long before we see them harness sophisticated tools that let them do more on an end-to-end development cycle. These tools will help data scientists look for bias in models when they’re first developing them, making models more accurate and therefore more valuable over time.

Domain Expertise Will Matter Even More

Monitoring systems like these can become sensitive to disproportionate results. Organizations must therefore introduce domain knowledge of what is expected, so they can determine whether results are valid based on real experience. A machine is never going to be able to do everything on its own. Organizations will have to say, for example, “We don’t expect many claims to head to litigation based on this type of injury in a particular demographic.” Yes, AI can drill down to that level of specificity. Data scientists will have to be ready to look for cases where things start to go askew. To do that, systems, including even the best off-the-shelf toolkits, have to be adapted to a domain problem.

Data scientists are generally aware of what technology options are available to them. They may not be aware of the myriad factors that go into a claim, however. So, at most companies, the issue becomes: Can the data scientists understand whether or not the technologies they know and have access to are appropriate for the specific problems they’re trying to solve? Generally, the challenge organizations face when implementing data science solutions is the difference between what the technology offers and what the organization needs to learn.

Statistical methods, on which all of this is based, have their limitations. That’s why domain knowledge must be applied. I watched a conference presentation recently that perfectly illustrated this issue. The speaker said that if you train a deep learning system on a bunch of text and then you ask it the question, “What color are sheep?” it will tell you that sheep are black, and the reason is that even though we know as humans that most sheep are white, it’s not something we talk about. It is implicit in our knowledge. So, we can’t extract that kind of implicit knowledge from text, at least not without a lot of sophistication. There’s always going to have to be a human in the loop to correct these kinds of life biases to close that gap between what you can learn from data and what we actually know about the world. This happens by inviting domain expertise into the data science creation process.

We’re getting better and better at democratizing access to AI systems, but there will always be an art to implementing them — where the data scientists have to be close to the subject matter experts in order to understand the underlying data issues, what the outcome is supposed to be, and what the motivations are for those outcomes.

Unstructured Data Will Become More Important

There is so much data at insurance companies’ disposal, but we have only tapped into a small percentage of it — and we’ve yet to cultivate some of the most significant assets. The integration and analysis of unstructured data will enable this to happen as it becomes more accessible.

Case in point, natural language processing continues to mature. This means that instead of pulling information from structured fields, like a yes/no surgery flag that could be interpreted pretty quickly by reading claim notes, adjusters could receive a more holistic view of the claim, going beyond the structured data and finding more and more signals that would have otherwise escaped the adjuster’s attention.

Images also provide all types of exciting and insightful unstructured data. The interpretation of scanned documents is a necessary part of claims. Advanced AI systems that can handle unstructured data would be able to read them and incorporate relevant data into outputs for evaluation. Theoretically, even further in the future, adjusters could look at pictures from car accidents to ascertain the next steps and cost estimates.

Systems that can interpret unstructured data also will be able to extract information in terms of drugs, treatments and comorbidities from medical records. In claim notes, sentiment analysis will seek out patterns from across many claims to identify the ones that yield the most negative interactions with claimants so that early interventions can occur to influence claim outcomes. We are just scratching the surface on unstructured data, but it won’t be long before it makes a profound impact on insurtech.

Feedback Loops Will Improve

Ideally, good machine learning systems involve feedback loops. Human interaction with the machine should always improve the machine’s performance in some way. New situations will perpetually arise, requiring a smooth and unobtrusive way for humans to interact with machines.

For example, claims adjusters may review data outputs and determine that possibly this sentiment wasn’t actually negative, or they might learn that they missed extracting a drug. By letting the machine know what happens on the “real-world” side of things, machines learn and improve — and so do claims adjusters! To reach this level and to be able to continually improve data analysis and its applications, undergoing a continuous improvement loop, is where AI will ultimately shine. It empowers adjusters with rich, accurate knowledge, and with each interaction, the adjuster can inject a bit more “humanness” into the machine for even better results the next time.
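One way to picture such a feedback loop is a simple log of adjuster reviews that feeds the next round of training. The sketch below is only an assumption about how that could be structured: the `FeedbackLog` class, its method names, and the label values are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class FeedbackLog:
    """Collects adjuster reviews of model outputs for later retraining."""

    entries: list = field(default_factory=list)

    def record(self, note_id, model_label, adjuster_label):
        """Store what the model said and what the adjuster decided."""
        self.entries.append((note_id, model_label, adjuster_label))

    def corrections(self):
        """Reviews where the adjuster disagreed with the model."""
        return [entry for entry in self.entries if entry[1] != entry[2]]

    def agreement_rate(self):
        """Share of reviewed outputs accepted unchanged, or None if no reviews."""
        if not self.entries:
            return None
        agreed = len(self.entries) - len(self.corrections())
        return agreed / len(self.entries)

    def training_examples(self):
        """(note_id, label) pairs that treat the adjuster's label as ground truth."""
        return [(note_id, adjuster) for note_id, _, adjuster in self.entries]


if __name__ == "__main__":
    log = FeedbackLog()
    log.record("note-1", "negative", "negative")
    log.record("note-2", "negative", "neutral")   # adjuster overrides the model
    print(log.corrections())
    print(log.agreement_rate())
```

Tracking the agreement rate over time gives a cheap signal of whether each retraining round is actually improving the model.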

Companies are putting systems in place to do that today, but it will still take a while to achieve results in a meaningful way. Few organizations have reached this level of improvement at scale, except perhaps the Googles of the world, but progress in the insurance industry is being made each day. AI systems, with increasing human input, are becoming more integral all the time. Within the next five to 10 years, expect AI to fully transform how claims are settled. It’s a fascinating time, and I for one look forward to this data-rich future!

MMS • RSS

Article originally posted on Data Science Central. Visit Data Science Central

Debt levels are rising and borrowers are increasingly unable to pay off their debts. The COVID-19 pandemic has not helped, and there is a high risk of delinquency across all types of credit, from business loans to mortgages. Traditional strategies are no longer enough to collect debts and improve receivables.

Over the last decade or so, AI and machine learning have been disrupting debt collection. Companies are using advanced analytics, machine learning and behavioral science to fully automate their debt collection strategies. According to some estimates, the share of AI in FinTech alone is expected to reach about $35.4 billion in value by 2025.

Historical debt collection

Historically, debt collection has been reactive. Lenders try to recoup their losses after a borrower becomes delinquent. The risk models that are in use now don’t allow for early delinquency warnings as they are based on a limited set of data. They do not rely on numerical logic to develop a solution.

According to essay experts at an online assignment help service, one of the main blockers to improving the efficiency of collections is the use of obsolete processes. Collection methods are often intrusive and leave a negative impression. Even though using emails and SMS messages instead of phone calls may be a better way of reaching debtors, there is still a need to customize the process.

Debt collection has to go beyond asking customers to repay overdue installments and suggest a way out of the crisis. This is where AI and machine learning can come into play.

Timely warning for delinquency

AI and ML technologies can analyze a great quantity of data from many different sources. It is possible to process call time, the value of certain accounts, collection rates, call effectiveness and much more.

Machine learning is now enabling lenders to easily identify at-risk borrowers before they reach a point where they are unable to make payments. Machine learning accuracy constantly improves through retraining as new information comes to light and reveals new insights about delinquency risks.

Machine learning can recognize patterns that provide financial institutions a robust way of evaluating risks. This goes beyond the usual credit scores and other rough indicators. It can collate new data and update the metrics in real-time when conditions change, such as during a pandemic. This is not possible when using the risk analysis based on traditional methods.
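To make the idea of an early-warning model that keeps learning a little more concrete, here is a minimal sketch of a logistic-regression scorer that is updated one observed outcome at a time. It is an illustration under stated assumptions, not how any specific lender or product works: the class name, the two features (share of recent payments missed and credit utilization, both scaled to 0..1), the learning rate, and the 0.5 threshold are all hypothetical.

```python
import math


class DelinquencyModel:
    """Tiny logistic-regression scorer updated one observation at a time."""

    def __init__(self, n_features, learning_rate=0.5):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def risk(self, features):
        """Estimated probability (0..1) that the borrower becomes delinquent."""
        z = self.bias + sum(w * x for w, x in zip(self.weights, features))
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, features, became_delinquent):
        """One gradient step on a newly observed outcome (1 or 0)."""
        error = self.risk(features) - became_delinquent
        self.weights = [w - self.learning_rate * error * x
                        for w, x in zip(self.weights, features)]
        self.bias -= self.learning_rate * error


def at_risk(model, borrowers, threshold=0.5):
    """Return the ids of borrowers whose estimated risk exceeds the threshold."""
    return [borrower_id for borrower_id, features in borrowers.items()
            if model.risk(features) > threshold]


if __name__ == "__main__":
    model = DelinquencyModel(n_features=2)
    # Hypothetical history: ([missed_payment_share, utilization], outcome)
    history = [([1.0, 0.9], 1), ([0.8, 0.7], 1), ([0.0, 0.2], 0), ([0.1, 0.1], 0)]
    for _ in range(300):
        for features, outcome in history:
            model.update(features, outcome)
    print(at_risk(model, {"b1": [0.9, 0.8], "b2": [0.05, 0.1]}))
```

Because `update` takes one outcome at a time, the same call can be used as fresh repayment data arrives, which is the retraining behavior described above.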

Focus on at-risk clients

With a timely delinquency warning system, financial institutions are able to work with the clients who might fall behind on payments. A timely indication and accurate analysis enable them to prevent accounts from becoming delinquent. With timely analysis of issues, the debt collection department can adjust its method of collection according to what the data shows.

For instance, they can single out potential defaulters who take time to respond to messages and use predictive modeling to decide on the next course of action. For example, they may decide to offer various payment-related offers or some type of rebate to the potential defaulter to settle an account before it goes into the collection cycle. There’s a good chance of settlement, as the offer encourages the borrower to make a move.

Create nuanced borrower profiles

Traditional risk models assign borrowers to categories based on broad market sectors, but AI and machine learning are allowing the creation of better borrower profiles. They make it possible to highlight nuances within a particular economic sector. For example, in the current pandemic, certain businesses, such as retail stores and restaurants, find online shopping, delivery or take-away more viable than others do.

Economic restrictions and different locations where the virus is more severe also cause different effects in various industries and their related sectors. Considering these and numerous other key factors, it is possible to understand more about borrowers.

Through AI and ML, financial institutions are able to build more detailed customer profiles. They are able to recognize the borrowers who are more likely to approach the issue positively and try to settle the loans and which borrowers need an extended effort, such as modifying their payment terms or restructuring their loans.

With so much corporate and household debt, even small improvements in categorizing borrowers can generate decent returns. As AI keeps updating its algorithms and customer profiles move towards being more nuanced, lenders become better able to evaluate borrowers based on targeted or pre-laid-out characteristics instead of categorizing them on a broad traditional analysis.

Natural language processing (NLP) lets lenders ask a question in everyday language and get a response they understand. One of NLP’s uses in business is to allow lenders to refine their methods of categorizing borrowers. They can even determine what language to use when they communicate with specific account subsets.

Optimize strategies for better customer engagement

Direct calls on phones or structured emails are the old methods that lenders used to settle the loan issues with the customers. Lenders today can use an automated, omnichannel communication process. They can email, send text messages, use social media or mobile apps. There are many ways for lenders to reach out but they need to know the right method to put into action, when to get in touch with them and the type of approach that would help to resolve the issue more effectively.

The best debt collection software leveraging the power of AI and machine learning can recognize and present the best channel by which to reach the borrower and the best time of the day for communications to be sent. This increases the likelihood of a response and improves collection rates. AI can even analyze borrower call audio to give actionable insights on the way various scripts impact borrower responses and enable lenders to come up with increasingly nuanced, detailed scripts.
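As a simplified picture of how software might pick the best channel and time of day, the sketch below computes the historical response rate for each (channel, hour) pair and returns the pair with the highest rate. The function name, the tuple layout of the history, and the `min_attempts` cutoff are assumptions for illustration; commercial products would use richer models and far more signals.

```python
from collections import defaultdict


def best_contact_slot(history, min_attempts=2):
    """Pick the (channel, hour) pair with the highest past response rate.

    `history` is an iterable of (channel, hour, responded) tuples.
    Pairs with fewer than `min_attempts` attempts are ignored so that a
    single lucky contact does not dominate. Returns None if nothing qualifies.
    """
    attempts = defaultdict(int)
    responses = defaultdict(int)
    for channel, hour, responded in history:
        attempts[(channel, hour)] += 1
        if responded:
            responses[(channel, hour)] += 1

    rates = {slot: responses[slot] / attempts[slot]
             for slot in attempts if attempts[slot] >= min_attempts}
    if not rates:
        return None
    return max(rates, key=rates.get)


if __name__ == "__main__":
    past_contacts = [
        ("sms", 18, True), ("sms", 18, True), ("sms", 18, False),
        ("email", 9, True), ("email", 9, False), ("email", 9, False),
        ("call", 12, True),   # only one attempt, so it is ignored
    ]
    print(best_contact_slot(past_contacts))
```

The same function can be run per borrower or per borrower segment, depending on how much contact history is available.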

Debt collection strategies can now be structured on the social, demographic and economic data that corresponds to each debt account. By taking into consideration the age, profession, salary and social profile of a customer, lenders can estimate the chances of them paying their debt and use this data to tweak their approach.

AI debt collection software is able to create voices like humans and create a more personalized experience for debtors. Settling debts today is becoming easier and less painful than a collection process consisting of multiple phone calls at inappropriate times of the day.

AI is eradicating the need for guesswork and human biases in the collection process. It is automating the process in a logical way and at the same time enabling the development of a more customer-centric approach.

The bottom line

AI offers multiple opportunities to help drive improvements in collections with a more informed and customized approach. Of the firms already using AI, 40 percent are using it for collections. Lenders and borrowers alike are seeing the benefits of AI and machine learning in modernizing the debt collection process.

A greater ability to interact with and understand borrowers helps to reduce losses. Early warning of delinquency means lenders can effectively focus on at-risk clients. More proactive customer outreach is helping borrowers to better manage their debt, so they can avoid financial trouble and debt collection. Credit and collection organizations that embrace AI and machine learning will reap many benefits when they modernize their collection processes.

MMS • Clare Liguori

Article originally posted on InfoQ. Visit InfoQ

00:21 Introductions

00:21 Daniel Bryant: Hello, and welcome to the InfoQ podcast. I’m Daniel Bryant, News Manager here at InfoQ, and Director of Dev-Rel at Ambassador Labs. In this edition of the podcast, I had the pleasure of sitting down with Clare Liguori, principal software engineer at Amazon Web Services. I’ve followed Clare’s work for many years, having first seen her present live, at AWS re:Invent back in 2017. I’ve recently been enjoying reading the Amazon Builders’ Library article series published on their AWS website. Clare’s recent article here, titled “Automating Safe Hands-Off Deployments” caught my eye. The advice presented was fantastic and provided insight into how AWS implement continuous delivery and also provided pointers for the rest of us that aren’t quite operating at this scale yet. I was keen to dive deeper into the topic and jumped at the chance to chat with Clare.

01:02 Daniel Bryant: Before we start today’s podcast, I wanted to share with you details of our upcoming QCon Plus virtual event, taking place this May 17th to 28th. QCon Plus focuses on emerging software trends and practices from the world’s most innovative software professionals. All 16 tracks are curated by domain experts, with the goal of helping you focus on the topics that matter right now in software development. Tracks include: leading full-cycle engineering teams, modern data pipelines, and continuous delivery workflows and patterns. You’ll learn new ideas and insights from over 80 software practitioners at innovator and early adopter companies. The event runs over two weeks for a few hours per day, and you can experience technical talks, real-time interactive sessions, async learning, and optional workshops to help you learn about the emerging trends and validate your software roadmap. If you are a senior software engineer, architect, or team lead, and want to take your technical learning and personal development to a whole new level this year, join us at QCon Plus this May 17th through 28th. Visit qcon.plus for more information.

01:58 Daniel Bryant: Welcome to the InfoQ podcast, Clare.

02:00 Clare Liguori: Thanks. I’m excited to be here.

02:02 Daniel Bryant: Could you briefly introduce yourself for the listeners please?

02:04 Clare Liguori: Yeah. I’m Clare Liguori. I’m a principal engineer at AWS. I have been at Amazon Web Services for about six years now, and currently I focus on developer tooling for containers.

02:17 Based on your work at AWS, what do you think are the primary goals of continuous delivery?

02:17 Daniel Bryant: Now, I’ve seen you present many times on the stage, both live and watching via video as well, and I’ve read your blog post recently. That’s what got me super excited about chatting to you when I read the recent AWS blog posts. So we’re going to dive into that today. What do you think are the primary goals of continuous delivery?

02:32 Clare Liguori: I think for me, sort of as a continuous delivery user, as a developer, I think there’s two things. I think one is just developer productivity. Before I came to Amazon and in Amazon’s history as well, we haven’t always done continuous delivery, but without continuous delivery, you spend so much of your time as a developer, just managing deployments, managing builds, managing all of these individual little steps that you have to do, that really just take you away from what I want to be doing, which is writing code.

03:04 Clare Liguori: Then I think the other side of that is just human error that’s involved in doing all of these steps manually. It can really end up affecting your customers, right? You end up deploying the wrong package accidentally, or not rolling back fast enough manually. And so continuous delivery, it takes a lot of the human error and sort of the fear and the scariness for me, out of sending my code out to production.

03:29 Daniel Bryant: I like that a lot. I’ve been there running shell scripts, never a good look, right?

03:34 Clare Liguori: Exactly.

03:34 What does a typical continuous delivery pipeline look like at Amazon?

03:34 Daniel Bryant: What does a typical continuous delivery pipeline look like at Amazon?

03:36 Clare Liguori: At a high level, I like to describe it as four basic stages. One is going to be source. That can be anything from your application source code, your infrastructure as code, patches that need to be applied to your operating system. Really anything that you want to deploy out to production is a source and a trigger for the pipeline. The next stage is going to be build. So compiling your source code, running your unit tests, and then pre-production environments where you’re running all of your integration tests against real running code, and then finally going out to production.

04:13 Clare Liguori: One of the things that is great about the Amazon pipelines is that it’s very flexible for how we want to do those deployments. So one of the things that I talk about in the blog post is how we split up production to really reduce the risk of deploying out to production. Before I came to Amazon, one of my conceptions of pipelines was that deploying to production was really sort of a script, a shell script or a file that has a list of commands that need to run, and deploying to production was kind of an atomic thing. You have one change that starts running this script, and then nothing else can be deployed until that script is done.

04:52 Clare Liguori: At Amazon, we have such a large number of deployments that we split production into to reduce the risk of those deployments and do very, very small scope deployments out to customer workloads, that it’s super important that we don’t think of it as this atomic script. It’s actually more of a lot of parallel workflows going on that promote changes one to the other. And so production might look like literally 100 different deployments, but we’re not waiting for all 100 deployments to succeed for an individual change. Once that first deployment is done, the next deployment can go in. And so we can have actually 100 changes flowing through that pipeline through those 100 deployments. So that was one of the most unique things that I found in coming to Amazon and learning about how we do our pipelines.
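Editorial sketch: the difference between an atomic deployment script and changes flowing through many small production deployments can be shown with a toy simulation. This is not Amazon's pipeline tooling. The function names and the simplification that every deployment takes exactly one tick are assumptions made purely for illustration.

```python
def pipelined_ticks(changes, waves):
    """Ticks needed when each production wave handles one change at a time
    and a change is promoted as soon as the next wave is free."""
    slots = [None] * waves          # slots[i] is the change deploying in wave i
    queue = list(changes)
    finished = []
    ticks = 0
    while len(finished) < len(changes):
        # Start of tick: admit the next change if the first wave is free.
        if queue and slots[0] is None:
            slots[0] = queue.pop(0)
        ticks += 1                  # every occupied wave deploys during this tick
        # End of tick: the last wave completes, then earlier waves promote.
        if slots[-1] is not None:
            finished.append(slots[-1])
            slots[-1] = None
        for i in range(waves - 2, -1, -1):
            if slots[i] is not None and slots[i + 1] is None:
                slots[i + 1], slots[i] = slots[i], None
    return ticks


def atomic_ticks(changes, waves):
    """Ticks needed when one change must finish every wave before the next starts."""
    return len(changes) * waves


if __name__ == "__main__":
    changes = list(range(100))
    print("atomic script:", atomic_ticks(changes, 100))
    print("pipelined:", pipelined_ticks(changes, 100))
```

In this toy model, 100 changes moving through 100 deployments take 199 ticks when pipelined, against 10,000 when each change has to finish everything before the next one starts.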

05:41 How many pipelines does a typical service have at Amazon?

05:41 Daniel Bryant: I liked the way you sort of broke down the four stages there. So I’d love to go a bit deeper into each of those stages now. I think many folks, even if they’re not operating at Amazon scale, could definitely relate to those stages. So how many pipelines does a typical service have at Amazon? As I was reading the blog post, I know there’s a few. Does this help with the separation of concerns as well?

05:59 Clare Liguori: I would say the typical team, it is a lot of pipelines. For a particular microservice, you might have multiple different pipelines that are deploying different types of changes. One type of change is the application code, another type of change would be maybe the infrastructure as code, and any other kind of changes to production, like I mentioned before, even patches that need to be applied to the operating system. Some of those get combined into, we have a workflow system that lets you do multiple changes going out to production at the same time. But the key here is really the rollback behavior for us. For me, pipelines are all about helping out with human error and doing the right thing before a human even gets involved. And so rollbacks are super important to us.

06:50 Clare Liguori: With the pipelines being separated into these different types of changes, it’s really easy to roll back just that change that was going out. You made a change to just the CloudFormation template for your service, so you can just roll back that CloudFormation template.

07:04 Clare Liguori: But one of the things we’re starting to see and why some of these pipelines are getting combined now is that application code is also going out through infrastructure as code, especially with things like containers, with things like Lambda functions. A lot of that is modeled in infrastructure as code as well. And so the lines are starting to blur between application code and infrastructure as code. And so a little bit, we’re starting to get into combining those into the same pipeline, but being able to roll back sort of multiple related changes.

07:37 Clare Liguori: So if you have a DynamoDB table change and an ECS (Elastic Container Service) change going out at the same time, the workflow will deploy them in the right order and then roll them back in the right order. So it becomes a lot easier to sort of reason about how are these changes flowing out to production, but you still get that benefit of being able to roll back automatically the whole thing without a person ever having to get involved and make decisions about what that order should be.
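
A minimal sketch of that ordering idea follows, assuming the rollback order is simply the reverse of the deploy order and that a failed step leaves nothing behind to undo. The function name `deploy_with_rollback` and the `deploy`/`rollback` callables are hypothetical; the workflow system described in the interview is not shown here.

```python
def deploy_with_rollback(steps, deploy, rollback):
    """Deploy steps in order; on failure, roll back completed steps in reverse.

    steps: ordered list of change identifiers.
    deploy / rollback: callables taking one step (hypothetical hooks).
    Returns True if every step deployed, False if a rollback happened.
    """
    completed = []
    for step in steps:
        try:
            deploy(step)
        except Exception:
            # Undo the most recent change first, without asking a person
            # to decide the order.
            for finished in reversed(completed):
                rollback(finished)
            return False
        completed.append(step)
    return True
```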

08:06 How are code reviews undertaken at Amazon, and how do you balance the automation, e.g. linting versus human intuition?

08:06 Daniel Bryant: You mentioned that there’s the human balance and automation. How are code reviews undertaken at Amazon, and how do you balance the automation, e.g. linting, versus human intuition?

08:17 Clare Liguori: Every change that goes out to production at Amazon is code reviewed, and our pipelines actually enforce that, so they won’t let a change go out to production if it somehow got pushed into a repository and has not been code reviewed. I think one of the most important things about what we call full CD is that there are no human interactions after that change has been pushed into the source code repository before it gets deployed to production. And so really the last time that you have a person that’s looking at a change, evaluating a change is that code review. And so code review starts to take on multiple purposes.

08:59 Clare Liguori: One is you want to review it for just the performance aspects, the correctness aspects, is this code maintainable, but it’s also about is this safe to deploy to production? Are we making any backwards-incompatible changes that we need to change to be backward compatible? Or are we making any changes that we think are going to be performance regressions at high scale? Or is it instrumented enough so that we can tell when something’s going wrong? Do we have alarms on any new metrics that are being introduced? And so a lot of teams, that’s a lot to sort of have in your mind when you’re going through some of these code reviews, that’s a lot to evaluate. And so one of the things that I evangelize a bit on my teams is the use of checklists in order to think through all of those aspects of evaluating this code, when you’re looking at a code review.

09:52 How do you mock external services or dependencies on other Amazon components?

09:52 Daniel Bryant: How do you mock external services or dependencies on other Amazon components?

09:58 Clare Liguori: So this is an area which differs between whether we’re doing unit tests or whether we’re doing integration tests. Typically, in a unit test, our builds actually run in an environment that has no access to the network. We want to make sure that our builds are fully reproducible, and so a build would not be calling out to a live service because that could change the behavior of the build, and all of a sudden you can end up with very flaky unit tests running in your build. And so in unit tests, typically we would end up mocking, using, my favorite is Mockito for Java. We would end up mocking those other services, mocking out the AWS SDK that we’re using, and making some assumptions along the way about what is the behavior going to be of that service? What error code are they going to return to us for a particular input? Or what is the success code going to be when it comes back? What’s the response going to be?

10:57 Clare Liguori: The way that I see integration tests is really an opportunity to validate some of those assumptions against the real live service. And so integration tests almost never mock the dependency services. So if we’re calling DynamoDB, or S3, the service would actually call those production services in our pre-prod stages during integration tests, and really run through that actual full stack of getting the request, processing that request, storing it in the database, and returning a response. And so we get to validate what is the response from DynamoDB going to be for a request that comes from the integration test in a pre-prod environment.
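
As a rough illustration of the unit-test side of this, the sketch below mocks a dependency so the test needs no network. It uses Python's standard `unittest.mock` rather than Mockito, purely to keep the example self-contained. The `save_order` function, the shape of the client's `put_item` call, and the `ConnectionError` failure mode are all assumptions for the example, exactly the kind of assumptions an integration test against the live service would later validate.

```python
from unittest.mock import Mock


def save_order(table_client, order_id):
    """Hypothetical service code: store an order and report the outcome."""
    try:
        table_client.put_item(Item={"order_id": order_id})
    except ConnectionError:
        # Assumed failure mode of the dependency, not a documented one.
        return "retry-later"
    return "stored"


def test_save_order_success():
    client = Mock()  # stands in for the real SDK client; no network access
    assert save_order(client, "o-1") == "stored"
    client.put_item.assert_called_once_with(Item={"order_id": "o-1"})


def test_save_order_dependency_down():
    client = Mock()
    client.put_item.side_effect = ConnectionError("simulated outage")
    assert save_order(client, "o-2") == "retry-later"
```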

11:38 Could you explain the functionality and benefits of each of the pre-prod environments, please?

11:38 Daniel Bryant: In your blog post you mentioned the use of alpha, beta, and gamma pre production environments. Could you explain the functionality and benefits of each of the pre-prod environments, please?

11:47 Clare Liguori: I think looking at the number of pre-prod environments that we have, it’s really about building confidence in this change before it goes to production. And so what we tend to see with alpha, beta, and gamma is more and more testing as the pipeline promotes that change between environments, but also how stable those environments tend to be.

12:11 Daniel Bryant: Oh, okay. Interesting.

12:13 Clare Liguori: Typically, that first stage in the environment, it’s probably not going to be super stable. It’s going to be broken a lot by changes that are getting code reviewed, but missing some things and getting deployed out in the pipeline. But then as we move from alpha to beta, beta to gamma, gamma tends to be a bit more stable than alpha, right? So alpha is typically where we would run tests that are really scoped to the changes that are being deployed by that particular pipeline. What I mean by that is running some very simple, maybe smoke tests or synthetic traffic tests against just that microservice that was being deployed by that pipeline.

12:53 Clare Liguori: Then as we get into beta, beta starts to exercise really the full stack. These systems are lots of microservices that work together to provide something like an AWS service. And so typically, beta will be an environment that has all of these microservices in it, within a particular team’s service or API space. The test will actually exercise that full stack. They’ll go through the front end, call those front end APIs that we’re going to be exposing to AWS customers in production. And that ends up calling all the different backend services, and going through async workflows and all of that, to complete those requests. The integration tests really end up showing that yes, with this change, we haven’t broken anything upstream or downstream from that particular microservice.

13:42 Clare Liguori: Then gamma is really intended to be the most stable and is really intended to be as production-like as possible. This is where we start to really test out some of the deployment safety mechanisms we have in production to make sure that this change is actually going to be safe to deploy to production. It goes through all the same integration tests, but it also starts running things like monitoring canaries with synthetic traffic, that we also run against production. It runs through all of the same things like canary deployments, deploying that change out to the gamma fleet and making sure that that’s going to be successful.

14:21 Clare Liguori: We actually even alarm on it, at the same thresholds as in production. We make sure that that change is not going to trigger alarms in production before we actually get there, which is very nice. Our on-call engineers love that. We really try to make sure that even before that change gets to a single instance or a single customer workload, that we’ve tested it against something that’s as production-like as possible.

14:46 How do engineers design and build integration tests?

14:46 Daniel Bryant: I think you’ve covered this a little bit already, but I was quite keen to dive into how do engineers design and build integration tests? Now, I’ve often struggled with this, who owns an integration test that spans more than one service, say? So who is responsible? Is it the engineer making the changes, perhaps the bigger team you’ve mentioned, or is there a dedicated QA function?

15:05 Clare Liguori: So typically within AWS, there are very few QA groups. So really, we practice full stack ownership, I would call it, with our engineers on our service teams, that they are responsible for not only writing the application code, but thinking about how that change needs to be tested, thinking about how that change needs to be monitored in production, how it needs to be deployed so they build their pipelines, and then how that change needs to be operated. So they’re also on call for that change. And so integration tests are largely either written by their peers on their team or by themselves for the changes that they’re making.

15:48 Clare Liguori: I think one of the interesting things about integration tests in an environment like this, where there’s so many microservices, that it is very difficult to write a test that covers the entire surface area for a very complex service that might have hundreds of microservices behind it. One of the things that tends to happen is that you write integration tests for the services really that your team owns. And so one of the things that becomes important is understanding really what’s the interface, the APIs that this team owns, that we’re going to be surfacing to other teams? And making sure that we’re really testing that whole interface, that other teams might be hooking into, or customers might be calling if there are front end APIs for AWS customers, and then making sure that we have coverage around all of those, that we’re not introducing changes that are going to break those callers.

16:41 Do all services have to be backwards compatible at Amazon to some degree? And if so, how do you test for that backwards compatibility?

16:41 Daniel Bryant: I’m really curious around backwards compatibility because that is super hard again, from my experience, my Java days as well. Do all services have to be backwards compatible at Amazon to some degree? And if so, how do you test for that backwards compatibility?

16:55 Clare Liguori: One of the funny things that I tend to tell teams is, diamonds are forever. We’ve all heard that. But APIs are also forever.

17:03 Daniel Bryant: Yes. I love it.

17:03 Clare Liguori: When we release a public AWS API, we really stand by that for the long term. We want customers to be able to confidently build applications against these APIs, and know that those APIs are going to continue working over the long term. So all of our AWS APIs have a long history of being backwards compatible. But we do have to do testing to ensure that. And so that comes back to the integration tests are really documenting and testing, what is the behavior that we are exposing to our customers, and running through those same integration tests on every single change that goes out.

17:46 Clare Liguori: One question that I get a lot is, “Do you only test for the APIs that have changed?” No, we always run the same full integration test suite because you never know when a change is going to break or change the behavior of an API. Things like infrastructure as code changes could change the behavior of an API if you’re changing the storage layer or the routing layer. Lots of unexpected changes could end up changing the behavior of an API. And so all of these changes that are going out to production, regardless of which pipeline it is, run that same integration test suite.

18:21 Clare Liguori: Then one of the things that I’ve noticed that helps us with backward compatibility testing is canary deployments, so deploying out to what we call one-box deployments, deploying out to a single virtual machine, a single EC2 instance, or a single container, or a little small percentage of Lambda function invocations. Having that new change running really side-by-side with the old change, actually helps us a lot with backward compatibility because things like if we’re changing a NoSQL database schema, for example, that one can very easily bite you, because all of a sudden you’re writing this new schema that the old code can’t actually read. You definitely don’t want to be in that state in production, of course.

19:08 Clare Liguori: Going back to our gamma stages, also doing canary deployments, they are exercising both of those code paths, old and new, during that canary deployment. And so we’re able to get a little bit of backward compatibility testing just through canary deployments, which is fun.
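
The schema hazard mentioned above, where new code and old code read and write the same records during a one-box deployment, can be sketched as follows. This is an assumed, simplified record layout for illustration. The function names and the `email` and `schema_version` fields are hypothetical.

```python
def write_v2(name, email):
    """New code: adds a field but keeps every field the old reader needs."""
    return {"name": name, "email": email, "schema_version": 2}


def write_v1(name):
    """Old code: still running on most of the fleet during the canary."""
    return {"name": name}


def read_v1(record):
    """Old code: reads only the fields it knows and ignores the rest."""
    return record["name"]


def read_v2(record):
    """New code: tolerates old records that lack the new field."""
    return record["name"], record.get("email")
```

Because both versions run side by side during the canary, each reader has to cope with records from each writer. A change that renamed or removed `name` would fail in `read_v1` as soon as the one box started writing.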

19:24 Do you typically perform any load testing on a service before deploying it into prod?

19:24 Daniel Bryant: Something you mentioned there, the one box deploys, I’m kind of curious, do you typically perform any load testing on a service before deploying it into prod?

19:31 Clare Liguori: Load testing tends to be one of those things that depends on the team. We give teams a lot of freedom to do as much testing as they need to for the needs of their service. There are teams that do load testing in the pipeline as an approval step for one of the pre-production stages. If there are load tests that they can run that would find performance regressions at scale and things like that, some teams do that.

19:58 Clare Liguori: Other teams find it hard to build up sufficient load in a pre-production environment compared to the scale of some of our AWS regions and our customers. And so sometimes that’s not always possible. Sometimes it looks like, when we’re making a large architectural change, doing more of a one-off load test that really builds up the scale to what we call testing to break in a pre-production environment, where we’re trying to find that next bottleneck for this architectural change that we need to make. So, it really depends.

20:29 Clare Liguori: A lot of what we do at AWS is really, we give teams the freedom since they own that full stack, to make choices for their operational excellence for that. But generally, the sort of minimum bar would be unit tests, integration tests, and the monitoring canaries that are running against production.

20:47 Do you use canary releasing and feature flagging for all production deployments?

20:47 Daniel Bryant: Do you use canary releasing and feature flagging for all production deployments?

20:51 Clare Liguori: We use canary deployments fairly broadly across all of our applications. Canary deployments are interesting in that there are some changes that are just difficult to impossible to do as a canary deployment. The example that usually comes to mind is something like a database, or really any kind of infrastructure. So making a change to a load balancer is a little hard to do as a canary deployment in CloudFormation. But generally speaking, wherever we can, we do canary deployments in production. The reason for that is that it limits the potential impact of that change in production to a very, very small percentage of requests or customer workloads. Then during the canary deployment, once we’ve deployed to that what we call one box, we’ll let that bake there for a little bit.

21:43 Clare Liguori: One of the things that we tend to see is changes don’t always trigger alarms immediately. They often will trigger alarms maybe 30 minutes after, maybe an hour after. And by that point, if you’re doing a canary deployment and you deploy to this one EC2 instance, that might not take very long, and then you kind of deploy it out to the rest of the production fleet pretty quickly, and all of a sudden you’ve now rolled out this change that is triggering alarms to a much broader percentage of your fleet. So bake time for us has been a really important enhancement on traditional canary deployments in order to ensure that we’re finding those changes before they get out to a large percentage of our production capacity.
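
A minimal sketch of a canary deployment with bake time follows, assuming the pipeline polls an alarm a fixed number of times before promoting the change. All six parameters are hypothetical hooks supplied by the caller. The real bake durations and alarm checks are not described in the interview.

```python
def canary_with_bake(deploy_one_box, deploy_fleet, rollback, alarm_firing,
                     bake_checks, wait):
    """Deploy to one box, bake, then either roll back or deploy to the fleet.

    deploy_one_box / deploy_fleet / rollback: callables with no arguments.
    alarm_firing: callable returning True when an alarm is in alarm state.
    bake_checks: how many times to check the alarm during the bake period.
    wait: callable that pauses between checks (e.g. a sleep wrapper).
    Returns "rolled_back" or "deployed".
    """
    deploy_one_box()
    for _ in range(bake_checks):
        wait()
        if alarm_firing():
            # The alarm fired late, but the change only reached one box.
            rollback()
            return "rolled_back"
    deploy_fleet()
    return "deployed"
```

Without the bake loop, an alarm that fires 30 minutes after the one-box deployment would arrive after the change had already reached the rest of the fleet.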

22:27 How do you roll out a change across the entire AWS estate?

22:27 Daniel Bryant: So how do you roll out a change across the entire AWS estate? The blog post mentioned the use of waves, I think, and I’m sure I wasn’t super familiar with the term and listeners may not be as well. I’ve also got a follow-up question, I guess there, is do the alert thresholds change as more boxes and AZs, regions come online with this new change?

22:47 Clare Liguori: Rule number one in deployments in AWS is that we never want to cause a multi-region or multi-AZ impact from a change. Customers really rely on that isolation between regions. And so that really drives how we think about reducing the percentage of production capacity that we are deploying to, at any one time with a new change.

23:14 Clare Liguori: Typically, a team will break down those production deployments into individual region deployments, and then even further into individual zonal deployments, so that we’re never introducing a change to a lot of regions at a time, all at the beginning. Now, one of the challenges is that as we’ve scaled, we have a lot of zones, we have a lot of regions. Doing those one at a time can take a long time. And so we’re really having to balance here the speed at which we can deliver these changes to customers. Of course, we want to get new features out to customers as fast as possible, but also balancing the risk of deploying very, very quickly, globally across all of our regions and zones. And so waves help us to balance those two things.

24:05 Clare Liguori: Again, going back to this idea of building confidence in a change, as you start to roll it out, you start with a very, very small percentage of production capacity. Initially we start with the one box through the canary deployment, but we also start with just a single zone, out of all of the zones that we have globally. And then we roll it out to the rest of that single region. And then we’ll do one zone in another region, and then roll it out to the rest of that region. And between those two regions that we’ve done independently, we’ve built a lot of confidence in the change because that whole time we’ve been monitoring for any impact, any increased latency, any increased error rates that we’re seeing for API requests.

24:48 Clare Liguori: Then, we can start to parallelize a little bit more and more, as we get into what we call waves in the pipeline. We might do three regions at a time, individually picking an AZ from each of those regions and deploying it so that each of these individual deployments is still very small scoped. We do a canary deployment in each individual zone. And so we’re continually looking at how to have small, small scope deployments, while being able to parallelize some of it as we’re building confidence. By the end, we might be deploying to multiple regions at a time, but we’ve been through this whole process of really just building that confidence in changes.
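
The wave idea can be sketched as a small planning function. This is a simplified illustration under stated assumptions: the default wave sizes `(1, 1, 3)`, the choice to deploy a region's remaining zones together after its first zone, and the function name `plan_waves` are all hypothetical, and every region is assumed to have at least one zone.

```python
def plan_waves(regions, wave_sizes=(1, 1, 3)):
    """Group regions into waves; within a wave, one zone per region goes first.

    regions: dict mapping region name -> non-empty list of zone names.
    wave_sizes: number of regions per wave; the last size repeats for any
        regions left over.
    Returns a list of waves. Each wave is a list of steps, and each step is
    a list of (region, zone) deployments that may run in parallel.
    """
    names = list(regions)
    waves = []
    index = 0
    size_pos = 0
    while index < len(names):
        size = wave_sizes[min(size_pos, len(wave_sizes) - 1)]
        batch = names[index:index + size]
        # Step 1: a single zone in each region of this wave.
        first_zone_step = [(r, regions[r][0]) for r in batch]
        # Step 2: the remaining zones of those regions.
        remaining_step = [(r, z) for r in batch for z in regions[r][1:]]
        wave = [first_zone_step]
        if remaining_step:
            wave.append(remaining_step)
        waves.append(wave)
        index += size
        size_pos += 1
    return waves
```

With five regions and the default sizes, the first two regions are deployed one at a time and the last three share a wave, so early waves stay small while later ones trade some isolation for speed.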

25:29 Do any humans monitor a typical rollout?

25:29 Daniel Bryant: I like it. I’m definitely getting the confidence vibe and that makes a lot of sense. Do any humans monitor a typical rollout?

25:36 Clare Liguori: Not typically. And that is one of my favorite things about continuous deployment at Amazon, is that largely we kind of forget about changes once we have merged them, merged the pull requests at the beginning. And so there’s really no one that is watching these pipelines. We’re really letting the pipelines do the watching for us. Things like automatic monitoring by the pipelines, auto rollback by the pipelines become super important because there is no one sitting there waiting to click that roll back button. Right?

26:09 Clare Liguori: And so often what we find is that when an alarm goes off and the on-call gets engaged, usually if it’s a problem caused by a deployment, the pipeline is already rolling back that change before the on-call engineer has even logged in and started looking at what’s going on. And so it really helps us to work on being able to have as little risk as possible in these deployments as well, because we not only scope them to be very small percentages of production capacity, but they also get rolled back really quickly.

26:44 Do you think every organization can aspire to implementing these kinds of hands-off deployments?

26:44 Daniel Bryant: You mentioned that the pull request being the last moment where humans sort of review. Do you think everyone can aspire to implementing these kinds of hands-off deployments?

26:52 Clare Liguori: One of the important things to remember as I talk about how we do things at Amazon, is that we didn’t always have all of this built into our pipelines. This has been a pretty long journey for us. One of the things that we’ve done over time, and we’ve sort of arrived at this kind of complex pipelines when you look at them, is that it’s been an iteration over many years of learning about what changes are going out, what changes have caused impact in production, and how could we have prevented that? And so that’s led to sort of our discovery of canary deployments, looking at how do we also do canary deployments in pre-production? How can we find these problems before customers do? And so monitoring canaries help us to exercise all of those code paths, hopefully before customers do.

27:48 Clare Liguori: And so I think these are achievable really by anyone, but I think it’s important to start small and start to look at what are the biggest risks to production deployments for you, and how can you reduce that risk over time?

28:02 Have you got any advice for developers looking to build trust in their pipelines?

28:02 Daniel Bryant: Yeah. And that sort of perfectly leads onto my next question, because it can seem when folks look at companies like Amazon, it can seem quite a jump. How would you recommend, or have you got any advice for developers in building trust in their pipelines?

28:15 Clare Liguori: One of the things that we do naturally see at Amazon in new teams is kind of a distrust of the pipeline. But it’s interesting, it’s also sort of a distrust in their own tests, their own alarms and things like that. When you build a brand new service, you’re always worried, is the pager not going off because the service is doing well, or is the pager not going off because I’m missing an alarm somewhere that I should have enabled?

28:42 Clare Liguori: One of the things that’s pretty common when we’re building a brand new service is to actually add what we call manual approval steps to the pipeline to begin with. Just like you build confidence in changes that are going out to production, you also need to build confidence in your own tests, your own alarms, your own pipeline approval steps. So just putting that manual approval and having someone watch the pipeline for a while and see, what are those changes that are going out? Are they causing impact to production? Looking weekly at, what do the metrics look like and should we have alarmed on any of these spikes that are happening in the metrics weekly?

29:21 Clare Liguori: So really validating for yourself, what is the pipeline looking at, and would I have made the same choice, really, in deploying to production, can help to build that confidence in a new pipeline or a new service. And then over time, being able to remove that once you feel like you have sufficient test coverage, you have sufficient monitoring coverage, that you’re not as a person, adding value by sitting there watching the deployment happening.

29:47 Would you recommend that all folks move towards trying to build pipelines as code?

29:47 Daniel Bryant: Would you recommend that all folks move towards trying to build pipelines as code?

29:52 Clare Liguori: One of the things that I really like about pipelines as code is that similar to any kind of code review, it really drives a conversation about, is this the right change? So as you’re building up that pipeline, you can very naturally have conversations in a code review if it’s pipelines as code, about how you want to design your team’s pipelines, and whether it meets the bar for removing that manual approval step after launching that service, or whether we’re running the right set of tests, things like that.

30:24 Clare Liguori: One of the things that I did find when joining Amazon and starting to build out a lot of these pipelines is also that it’s very easy to forget some of these steps. There are a lot of steps in the pipeline. There’s a lot of pre-production environments, a lot of integration test steps. Across all of our regions and zones, there’s a lot of deployments going on. And so as the pipeline just gets more and more complex, you just want to click less buttons, in order to set these up and to have consistency across your pipeline. If you’ve got 10 different microservices that your team owns, you want those to be consistent.

31:00 Clare Liguori: With pipelines as code, especially with tools like the AWS Cloud Development Kit, where you get to use your favorite programming language and you get to use object-oriented design, and inheritance, what’s really common is to set up a base class that represents, this is how our team sets up pipelines, these are the steps that we run in the pipeline because we’re running the same integration tests in all of these pipelines across that full stack. And so that really helps to achieve some consistency across our team’s pipelines, which has been super helpful and a lot less painful than clicking all the buttons and trying to look at the pipelines to see if they’re consistent.
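
The base-class pattern can be sketched in plain Python. This deliberately does not use the AWS Cloud Development Kit API. The class names, stage names, and the `extra_approval_steps` hook are hypothetical and only illustrate how inheritance keeps a team's pipelines consistent.

```python
class TeamPipeline:
    """Hypothetical base class encoding one team's standard pipeline shape."""

    pre_prod_stages = ["alpha", "beta", "gamma"]

    def __init__(self, service_name):
        self.service_name = service_name

    def extra_approval_steps(self):
        """Subclasses override this to add service-specific checks."""
        return []

    def stages(self):
        """Return the ordered list of steps every pipeline on the team runs."""
        steps = ["source", "build"]
        for env in self.pre_prod_stages:
            steps.append(f"deploy:{env}")
            steps.append(f"integration-tests:{env}")
        steps.extend(self.extra_approval_steps())
        steps.append("deploy:prod")
        return steps


class NewServicePipeline(TeamPipeline):
    """A new service keeps a manual approval until the team trusts its alarms."""

    def extra_approval_steps(self):
        return ["manual-approval"]
```

Removing the manual approval later is then a one-line change to `NewServicePipeline` that goes through code review like any other change.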

31:36 Could you share guidance on how to make the iterative jumps towards full continuous delivery?

31:36 Daniel Bryant: Excellent. Yeah. I definitely like clicking buttons. I’ve been there for sure. Maybe this is a bit of a meta question, a bigger question, as a final question, but do you have any advice for how listeners should approach migrating to continuous delivery, continuous deployment, if they’re kind of coming from a place now where they’re literally clicking the buttons and that’s working, but it’s painful? Any guidance on how to make the iterative jumps towards full continuous delivery?

32:01 Clare Liguori: I think again, start small. I think you can break up pipelines into a lot of different steps. We have this sort of full continuous delivery across source, build, test, and production. What I see with even some AWS customers is kind of tackling a few of those steps at a time. It might just be continuous integration to start consistently building a Docker image when someone pushes something to the main branch. Or it might be consistently deploying out to a test environment and running integration tests, and getting a little confidence in your tests, working on test flakiness and things like that, and working on test coverage.

32:44 Clare Liguori: Then, getting to iterating further to production and starting to think about, how do you make these production deployments smaller scoped, and reliable, and monitored, and auto rollback. But I think it’s important to start small and just start with small steps, because it really is an iterative process. It’s been an iterative process for Amazon and something that we continue to look at. We continue to look kind of across AWS and look for any kind of customer impact that’s been caused by a deployment. What was the root cause and how could we have prevented it? And we think about new solutions that we could add to our pipelines and roll out across AWS. And so we’re continuing to iterate on our pipelines. I think they’re definitely not done yet, as we learn at higher and higher scale, how to prevent impact and reduce the risk of impact.

33:31 Daniel Bryant: That’s great. Many of us look up to Amazon. It’s nice to hear you’re still learning too, right? Because that gives us confidence that we can get there, which is great.

33:38 Clare Liguori: Yeah, absolutely.

33:39 Outro: where can listeners chat to you online?

33:39 Daniel Bryant: So if listeners want to reach out to you, Clare, where can they find you online?

33:42 Clare Liguori: I’m totally happy to answer any questions on Twitter. My handle is Clare_Liguori on Twitter. So totally happy to answer any questions about how we do pipelines at Amazon.

33:55 Daniel Bryant: Awesome. Well, thanks for your time today, Clare.

33:56 Clare Liguori: Thank you.

(Part 2 of 4) How to Modernize Enterprise Data and Analytics Platform – by Alaa Mahjoub, M.Sc. Eng.

MMS • RSS

Article originally posted on Data Science Central. Visit Data Science Central

In many cases, for an enterprise to build its digital business technology platform, it must modernize its traditional data and analytics architecture. A modern data and analytics platform should be built on services-based principles and architecture.

Part 1 provided a conceptual-level reference architecture of a traditional Data and Analytics (D&A) platform.

This part provides a conceptual-level reference architecture of a modern D&A platform.

Parts 3 and 4 will explain how these two reference architectures can be used to modernize an existing traditional D&A platform. This will be illustrated by providing an example of a Transmission System Operator (TSO) that modernizes its existing traditional D&A platform in order to build a cyber-physical grid for Energy Transition. However, the approaches used in this example can be leveraged as a toolkit to implement similar work in other vertical industries such as transportation, defence, petroleum, water utilities, and so forth.

Reference Architecture of Modern Data and Analytics Platform

The proposed reference architecture of a modern D&A platform environment is shown in Figure 1. This environment consists of: the modern D&A platform itself (which is denoted by the red rectangle at the left side of the figure), the data sources (at the bottom part of the figure) and the other four technology platforms that integrate with the D&A platform to make up the entire digital business technology platform (these platforms are shown in blue).

The reference architecture depicted in Figure 1 comprises several functional capabilities. From an enterprise architecture perspective, each of these functional capabilities includes a grouping of functionality and can be considered as an Architecture Building Block (ABB). These ABBs should be mapped (in a later stage of the design) into real products or specific custom developments (i.e. Solution Building Blocks).

Figure 1: Reference Architecture of a Modern D&A Platform Environment

Following is the description of the ABBs of the modern D&A platform reference architecture environment, classified by functionality:

- Audio: Data at rest audio files or data in motion audio streams

- BC Ledger: Data at rest blockchain ledger repositories or data in motion blockchain ledger streaming data

- Data at Rest Data Sources: Data sources of inactive data that is stored physically in any digital form (e.g. databases, data warehouses, data marts, spreadsheets, archives, tapes, off-site backups, mobile devices, etc.)

- Data in Motion Data Sources (Streaming): Data sources that generate sequences of data elements made available over time. Streaming data may be traversing a network or temporarily residing in computer memory to be read or updated.

- Docs: Documents such as PDF or MS Office files

- ECM: Enterprise Content Management Systems

- ERP: Enterprise Resource Planning Systems

- Image: Data at rest imaging files or data in motion imaging streams

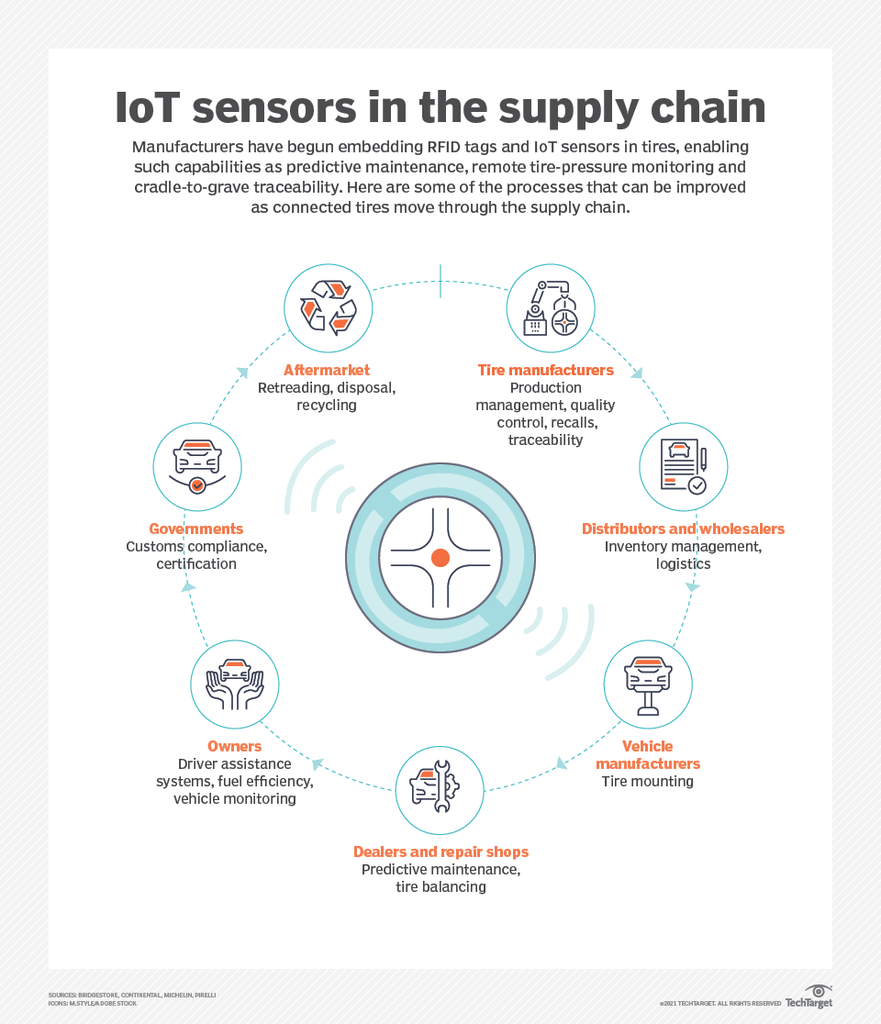

- IoT/OT Feeds: Data in motion streams fed from Internet of Things or operational technology real-time monitoring and control devices. The Internet of Things is the network of physical objects that contain embedded technology to communicate and sense or interact with their internal states or the external environment. Examples of operational technology real-time monitoring and control devices are the remote terminal units and programmable logic controllers.

- IT Logs: Data at rest logging files or data in motion logging streams. Logs record either events that occur while an operating system or other software runs, or messages exchanged between users of communication software.

- Mobile: Mobile devices such as smart phones, tablets and laptop computers.

- Public Data Marketplace: An online store where people or other organizations can buy / sell data. In general, the data in this marketplace may belong to the enterprise or to third parties.

- RDBMS: Relational Database Management Systems

- Social/Web: Social media platforms such as Facebook and Twitter or web sites that host data or contents

- SQL/NoSQL: SQL or NoSQL Databases.

- Text: Text data sources such as emails or CSV files.

- Video: Data at rest video files or data in motion video streams.

The Digital Business Platform Architectural Building Blocks

- The IT & OT Systems Platform: This platform runs the core business applications, back office applications, infrastructure applications, endpoint device applications and operational technology applications

- The Customer Experience Platform: This platform runs the customer- and citizen-facing applications such as customer portals and B2C and B2B applications

- The IoT Platform: This platform connects physical assets (Things) such as buses, taxis and traffic signals (for the transportation domain), power transformers and generators (for the electrical power and utility domain), or oil wellheads (for the oil and gas domain) for monitoring, optimization, control and monetization.

- The Ecosystems Platform: This platform supports the creation of, and connection to, external ecosystems, marketplaces and communities relevant to the enterprise business such as government agencies, smart cities, credit card networks, police, ambulance, etc.

- The D&A Platform: This platform contains the data management and analytics tools and applications

The Modern D&A Platform Architectural Building Blocks

The Data Fabric Layer

A data fabric is generally a custom-made design that provides reusable data services, pipelines, semantic tiers or APIs via a combination of data integration approaches (bulk/batch, message queue, virtualized, streams, events, replication or synchronization), in an orchestrated fashion. Data fabrics can be improved by adding dynamic schema recognition or even cost-based optimization approaches (and other augmented data management capabilities). As a data fabric becomes increasingly dynamic or even introduces ML capabilities, it evolves from a data fabric into a data mesh network. Data fabric informs and automates the design, integration and deployment of data objects regardless of deployment platforms or architectural approaches. Data fabric utilizes continuous analytics and AI/ML over all metadata assets (such as location metadata, data quality, frequency of access and lineage metadata, not just technical metadata) to provide actionable insights and recommendations on data management and integration design and deployment patterns. This approach results in faster, informed and, in some cases, completely automated data access and sharing. The data fabric requires various data management capabilities to be combined and used together. These include augmented data catalogs, semantic data enrichment, utilization of graphs for modelling and integration, and finally an insights layer that uses AI/ML toolkits over metadata graphs to provide actionable recommendations and automated data delivery. Following are the architectural building blocks of the data fabric:

The Data Hubs: A data hub is a logical architecture which enables data sharing by connecting producers of data (applications, processes and teams) with consumers of data (other applications, processes and teams). Endpoints interact with the data hub, provisioning data into it or receiving data from it, and the hub provides a point of mediation and governance, and visibility into how data is flowing across the enterprise. Each of the five platforms that make up the entire digital business platform may have one or more data hubs. In general, each of these platforms can exchange data with the other four platforms through their appropriate data hub(s).
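To make the mediation role of a data hub more concrete, here is a minimal in-memory sketch in Python. It is only an illustration under stated assumptions: the `DataHub` class, its `allow`, `subscribe` and `publish` methods, and the `erp`, `analytics` and `orders` names are all hypothetical and do not come from any product. A real hub would sit on top of messaging, API or integration middleware.

```python
from collections import defaultdict


class DataHub:
    """Toy data hub (hypothetical): mediates between producers and consumers."""

    def __init__(self):
        self._subscribers = defaultdict(list)       # topic -> [(consumer, handler)]
        self._allowed_producers = defaultdict(set)  # topic -> {producer names}
        self.flow_log = []                          # (producer, topic, consumer)

    def allow(self, producer, topic):
        """Governance rule: only registered producers may publish to a topic."""
        self._allowed_producers[topic].add(producer)

    def subscribe(self, consumer, topic, handler):
        """Register a consumer callback for a topic."""
        self._subscribers[topic].append((consumer, handler))

    def publish(self, producer, topic, record):
        """Deliver a record to every subscriber and log each data flow."""
        if producer not in self._allowed_producers[topic]:
            raise PermissionError(f"{producer} may not publish to {topic}")
        for consumer, handler in self._subscribers[topic]:
            handler(record)
            self.flow_log.append((producer, topic, consumer))
        return len(self._subscribers[topic])


hub = DataHub()
received = []
hub.allow("erp", "orders")
hub.subscribe("analytics", "orders", received.append)
hub.publish("erp", "orders", {"order_id": 1, "amount": 250.0})
```

In this sketch the `flow_log` list stands in for the visibility aspect, and the `allow` check stands in for governance. Producers and consumers never reference each other directly, only the hub.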

The Data Connectivity: Provides the ability to interact with data sources and targets of different types of data structure, whether they are hosted on-premises, on public cloud, intercloud, hybrid cloud, edge, or embedded systems

Active & Passive Metadata Management: Provides the ability to manage metadata through a set of technical capabilities such as business glossary & semantic graph, data lineage & impact analysis, data cataloguing & search, metadata ingestion and translation, modelling, taxonomies & ontology and semantic frameworks. A data catalog creates and maintains an inventory of data assets through the discovery, description and organization of distributed datasets. Data cataloguing provides context to enable data stewards, data/business analysts, data engineers, data scientists and other line of business data consumers to find and understand relevant datasets for the purpose of extracting business value. Modern machine-learning-augmented data catalogs automate various tedious tasks involved in data cataloging, including metadata discovery, ingestion, translation, enrichment and the creation of semantic relationships between metadata.
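The inventory-and-search idea behind a data catalog can be sketched in a few lines of Python. This is a simplified illustration, not a description of any catalog product: `DatasetEntry`, `DataCatalog` and the sample datasets are hypothetical names, and the machine-learning augmentation mentioned above is deliberately left out.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetEntry:
    """Descriptive metadata for one data asset (hypothetical structure)."""
    name: str
    description: str
    owner: str
    tags: set = field(default_factory=set)


class DataCatalog:
    """Toy catalog: keeps an inventory of datasets and supports keyword search."""

    def __init__(self):
        self._entries = {}

    def register(self, entry):
        self._entries[entry.name] = entry

    def search(self, keyword):
        """Return names of datasets whose name, description or tags match."""
        kw = keyword.lower()
        return sorted(
            e.name
            for e in self._entries.values()
            if kw in e.name.lower()
            or kw in e.description.lower()
            or kw in {t.lower() for t in e.tags}
        )


catalog = DataCatalog()
catalog.register(DatasetEntry(
    "grid_measurements", "Hourly load measurements from substations",
    "operations", {"scada", "timeseries"}))
catalog.register(DatasetEntry(
    "customer_master", "Master data for grid customers",
    "sales", {"mdm"}))

grid_related = catalog.search("grid")
scada_related = catalog.search("scada")
```

A data consumer would use `search` to discover candidate datasets and then read the description and owner to decide whether a dataset is relevant.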

Data Integration: Provides the ability to integrate the different types of data assets through a set of technical capabilities such as ETL & Textual ETL, bulk ingestion, APIs, data virtualization, data replication, concurrency control, message-oriented data movement, streaming (CEP & ESP), blockchain ledger pooling service, data transformation and master data management. Textual ETL is the technology that allows an organisation to read unstructured data and text in any format and in any language and to convert the text into a standard relational database (DB2, Oracle, Teradata, SQL Server et al.) where the text is in a useful, meaningful format. Textual ETL reads, integrates, and prepares unstructured data so that it is ready to go into those standard technologies. Once the unstructured data resides in any of them, standard analytical tools such as Business Objects, Cognos, MicroStrategy, SAS, Tableau and other analytical and BI visualization technologies can be used to access, analyse, and present the unstructured data. These new applications can look at and retrieve textual data and can address and highlight fundamental challenges of the corporation that have previously been unrealized.
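As a rough illustration of the textual ETL idea, the Python sketch below extracts two fields from free-text maintenance notes and loads them into a relational table that ordinary SQL can query. It is a deliberately simplified stand-in: the notes, the regular expression and the `maintenance_notes` table are invented for this example, SQLite replaces the enterprise databases named above, and real textual ETL tools handle far more varied text than one fixed pattern can.

```python
import re
import sqlite3

# Hypothetical unstructured input: free-text maintenance notes.
NOTES = [
    "2021-02-03 Transformer T-17 tripped after overheating.",
    "2021-02-05 Routine inspection of transformer T-04, no issues found.",
]

# Assumed pattern: an ISO date followed later by an asset id such as T-17.
PATTERN = re.compile(r"(?P<date>\d{4}-\d{2}-\d{2}).*?(?P<asset>T-\d+)")


def extract(note):
    """Extract (date, asset id, raw text) from a note, or None if no match."""
    m = PATTERN.search(note)
    if m is None:
        return None
    return (m.group("date"), m.group("asset"), note)


# Load the extracted rows into a relational table.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE maintenance_notes (note_date TEXT, asset_id TEXT, raw_text TEXT)"
)
rows = [r for r in map(extract, NOTES) if r is not None]
conn.executemany("INSERT INTO maintenance_notes VALUES (?, ?, ?)", rows)
conn.commit()

# Once relational, the text can be analysed with standard SQL.
tripped = conn.execute(
    "SELECT asset_id FROM maintenance_notes WHERE raw_text LIKE '%tripped%'"
).fetchall()
```

After loading, any BI or reporting tool that speaks SQL could query `maintenance_notes` in the same way as a structured source.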

Data Governance: Provides the ability to support the governance processes of the different types of data assets through a set of technical capabilities such as policy management, rules management, security, privacy, encryption, bring your own key (BYOK), information lifecycle management, discovering and documenting data sources, and regulatory and compliance management

Data Quality: Provides the ability to support the data quality processes of the different types of data assets through a set of technical capabilities such as data profiling, data monitoring, data standardization, data cleansing, data matching, data linking and merging, data enrichment, and data parsing and issue resolution
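Three of the data quality capabilities listed above (profiling, standardization and matching) can be illustrated with a small Python sketch. The functions, the `customers` records and the matching rule (exact equality after standardization) are assumptions chosen for brevity. Production tools typically use richer profiling statistics and fuzzy matching.

```python
def profile(records, field):
    """Data profiling: basic completeness and cardinality statistics."""
    values = [r.get(field) for r in records]
    non_null = [v for v in values if v not in (None, "")]
    return {
        "count": len(values),
        "missing": len(values) - len(non_null),
        "distinct": len(set(non_null)),
    }


def standardize(name):
    """Data standardization: trim, lower-case and collapse whitespace."""
    return " ".join(name.strip().lower().split())


def match(records, field):
    """Data matching: group record ids whose standardized values are equal."""
    groups = {}
    for r in records:
        value = r.get(field)
        if not value:
            continue
        groups.setdefault(standardize(value), []).append(r["id"])
    return {key: ids for key, ids in groups.items() if len(ids) > 1}


# Hypothetical customer records with a duplicate and a missing name.
customers = [
    {"id": 1, "name": "Acme  Energy"},
    {"id": 2, "name": "acme energy "},
    {"id": 3, "name": ""},
    {"id": 4, "name": "Nordic Grid"},
]

stats = profile(customers, "name")
duplicates = match(customers, "name")
```

Here profiling reports one missing name, and matching flags records 1 and 2 as likely duplicates that a later linking and merging step could consolidate.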

Augmented Data Management: Provides the ability to support and augment the data management processes of the different types of data assets through a set of technical capabilities such as ML & AI augmentation of main data management functions (e.g. data quality management, master data management, data integration, database management systems and metadata management), automated/augmented dataset feature generation, automated/augmented DataOps and data engineering (e.g. data preparation, code and configuration management, agile project management, continuous delivery, automated testing and orchestration), augmented data management performance tuning, data management workflow & collaboration as well as data administration & authorization intelligence

Data Lake: A data lake is a concept consisting of a collection of storage instances of various data assets. These assets are stored in a near-exact, or even exact, copy of the source format and are in addition to the originating data stores.

Logical DW/DV (Logical Data Warehouse/Data Vault): The logical data warehouse is a conceptual data management architecture for analytics that combines the strengths of traditional repository warehouses with alternative data management and access strategies (specifically, federation and distributed processing). It also includes dynamic optimization approaches and multiple use-case support. The traditional repository part of the logical DW can include architecture styles such as normalized schemas, data marts (DM), data vaults (DV), operational data stores (ODS) and OLAP cubes. The DV is a data integration architecture built around a detail-oriented database containing a uniquely linked set of normalized tables that support one or more functional areas of the business, with satellite tables to track historical changes.
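The way satellite tables track historical changes in a data vault can be sketched with SQLite from Python. This is a minimal illustration under assumptions: the `hub_customer` and `sat_customer_details` tables, their columns and the sample rows are hypothetical, and link tables (which relate hubs to each other) are omitted.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Hub: one row per business key.
CREATE TABLE hub_customer (
    customer_hk INTEGER PRIMARY KEY,
    customer_bk TEXT UNIQUE NOT NULL,
    load_date   TEXT NOT NULL
);
-- Satellite: descriptive attributes, one row per change over time.
CREATE TABLE sat_customer_details (
    customer_hk INTEGER NOT NULL REFERENCES hub_customer(customer_hk),
    load_date   TEXT NOT NULL,
    city        TEXT,
    PRIMARY KEY (customer_hk, load_date)
);
""")

conn.execute("INSERT INTO hub_customer VALUES (1, 'CUST-001', '2021-01-01')")
# A change of city is stored as a new satellite row, not as an update.
conn.executemany(
    "INSERT INTO sat_customer_details VALUES (?, ?, ?)",
    [(1, "2021-01-01", "Oslo"), (1, "2021-02-01", "Bergen")],
)

history = conn.execute(
    "SELECT load_date, city FROM sat_customer_details "
    "WHERE customer_hk = 1 ORDER BY load_date"
).fetchall()
current_city = history[-1][1]
```

Because changes are appended rather than overwritten, the full history remains queryable while the latest satellite row gives the current state.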

Private Data Marketplace: The private data marketplace (or enterprise data marketplace) is a company store for data, one that is accessible only to employees and invited guests. It is distinctly different from commercial and open markets specifically because it is a marketplace for enterprise data. An enterprise data marketplace is to data markets what a corporate intranet is to the broader Internet.

ML Models & Analytics Artefacts Repository: Repository for storing the different machine learning models and data versions.

Metadata Repository (Relational/Graph): Repository for storing active and passive metadata of the different data assets. An RDBMS or a graph database may be used to store this metadata.
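One reason a graph representation suits metadata is that lineage and impact analysis become simple graph traversals. The Python sketch below assumes a hypothetical lineage graph stored as an adjacency dictionary. The asset names are invented, and a real repository would hold this in a graph database or relational tables.

```python
from collections import deque

# Hypothetical lineage metadata: asset -> assets derived directly from it.
LINEAGE = {
    "scada_feed": ["raw_measurements"],
    "raw_measurements": ["cleaned_measurements"],
    "cleaned_measurements": ["load_forecast_model", "grid_dashboard"],
    "load_forecast_model": ["grid_dashboard"],
}


def downstream(asset):
    """Impact analysis: every asset affected by a change to `asset`."""
    seen = set()
    queue = deque([asset])
    while queue:
        for child in LINEAGE.get(queue.popleft(), []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return sorted(seen)


impacted = downstream("raw_measurements")
```

A breadth-first traversal like this answers the question "what breaks if this dataset changes?", and the reverse traversal would answer "where did this data come from?".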

Master Data Repository: A Repository for storing the master data assets.

Data Persistence Performance Engine (Disk, SSD, RAM, Persistent RAM, …): Used for data caching to speed up the data analysis. It augments the capabilities of accessing, integrating, transforming and loading data into a self-contained performance engine, with the ability to index data, manage data loads and refresh scheduling.

The Analytic Capabilities Layer

This layer includes the following analytical capabilities, which support building and running business intelligence, data science, machine learning and artificial intelligence applications:

AutoML and Conversational UI: Automated Machine Learning provides methods and processes to make Machine Learning available for non-Machine Learning experts, to improve efficiency of Machine Learning and to accelerate research on Machine Learning. Machine learning (ML) has achieved considerable successes in recent years and an ever-growing number of disciplines rely on it. However, this success crucially relies on human machine learning experts to perform the following tasks:

- Pre-process and clean the data

- Select and construct appropriate features

- Select an appropriate model family

- Optimize model hyperparameters

- Postprocess machine learning models

- Critically analyse the results obtained

As the complexity of these tasks is often beyond non-ML-experts, the rapid growth of machine learning applications has created a demand for off-the-shelf machine learning methods that can be used easily and without expert knowledge. We call the resulting research area that targets progressive automation of machine learning AutoML.
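Two of the tasks listed above, selecting a model family and optimizing hyperparameters, can be automated with a simple search loop. The pure-Python sketch below is a toy illustration and not how any particular AutoML library works: the two model families, the `SEARCH_SPACE` list and the synthetic data are assumptions, and the search is a brute-force sweep scored on a hold-out set.

```python
def mean_model(train, _param):
    """Model family 1: always predict the mean of the training targets."""
    avg = sum(y for _, y in train) / len(train)
    return lambda x: avg


def knn_model(train, k):
    """Model family 2: k-nearest-neighbour regression on one feature."""
    def predict(x):
        nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
        return sum(y for _, y in nearest) / len(nearest)
    return predict


# Hypothetical search space: (family name, builder, hyperparameter values).
SEARCH_SPACE = [
    ("mean", mean_model, [None]),
    ("knn", knn_model, [1, 3, 5]),
]


def mse(model, data):
    """Mean squared error of a model on (x, y) pairs."""
    return sum((model(x) - y) ** 2 for x, y in data) / len(data)


def auto_select(train, valid):
    """Try every family and hyperparameter; keep the lowest validation error."""
    best = None
    for name, build, params in SEARCH_SPACE:
        for p in params:
            score = mse(build(train, p), valid)
            if best is None or score < best[0]:
                best = (score, name, p)
    return best


# Synthetic data: y = 2x, split into training and validation halves.
data = [(x, 2.0 * x) for x in range(20)]
train, valid = data[::2], data[1::2]
score, family, param = auto_select(train, valid)
```

The user supplies only data. The loop chooses the family and hyperparameter, which is the essence of the automation described above. Practical systems replace the exhaustive sweep with smarter search strategies and also automate the other tasks in the list.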

Natural Language Query (NLQ): A query expressed by typing English, French or any other spoken language in a normal manner. For example, “how many sales reps sold more than a million dollars in any eastern state in January?” In order to allow for spoken queries, both a voice recognition system and natural language query software are required.

Natural Language Generation (NLG): A software process that transforms structured data into natural language. It can be used to produce long-form content for organizations to automate custom reports, as well as custom content for a web or mobile application. It can also be used to generate short blurbs of text in interactive conversations (a chatbot), which might even be read out by a text-to-speech system.
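The simplest form of NLG is template-based: structured values are slotted into sentence patterns chosen by rules. The Python sketch below shows that idea only. The `describe_sales` function, the record fields and the wording are hypothetical, and modern NLG systems are considerably more sophisticated than a single template.

```python
def describe_sales(record):
    """Turn one structured sales record into an English sentence."""
    change = record["sales"] - record["previous_sales"]
    pct = abs(change) / record["previous_sales"] * 100
    # Choose the verb phrase from the direction of the change.
    if change > 0:
        verb = f"rose {pct:.1f}% to"
    elif change < 0:
        verb = f"fell {pct:.1f}% to"
    else:
        verb = "were unchanged at"
    return (
        f"In {record['month']}, sales in {record['region']} "
        f"{verb} ${record['sales']:,}."
    )


sentence = describe_sales({
    "month": "January",
    "region": "the eastern states",
    "sales": 1_200_000,
    "previous_sales": 1_000_000,
})
```

Running the function over every row of a report table would yield an automatically written narrative, one sentence per row.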

Conversational GUIs: Graphical user interfaces that include features such as natural language query (NLQ), natural language generation (NLG) and chatbot-like interaction.

Integration with Third-party Analytics Platform: Provides the ability to integrate with third-party analytics platforms as a key BI fabric capability