Month: August 2022

MMS • Matthew Scullion

Article originally posted on InfoQ. Visit InfoQ

Transcript

Shane Hastie: Good day folks. This is Shane Hastie for the InfoQ Engineering Culture podcast. Today I’m sitting down literally across the world from Matthew Scullion. Matthew is the CEO of Matillion who do data stuff in the cloud. Matthew, welcome. Thanks for taking the time to talk to us today.

Introductions [00:25]

Matthew Scullion: Shane, it is such a pleasure to be here. Thanks for having us on the podcast. And you’re right, data stuff. We should perhaps get into that.

Shane Hastie: I look forward to talking about some of this data stuff. But before we get into that, probably a good starting point is, tell me a little bit about yourself. What’s your background? What brought you to where you are today?

Matthew Scullion: Oh gosh, okay. Well, as you said, Shane, Matthew Scullion. I’m CEO and co-founder of a software company called Matillion. I hail from Manchester, UK. So, that’s a long way away from you at the moment. It’s nice to have the world connected in this way. I’ve spent my whole career in software, really. I got started very young. I don’t know why, but I’m a little embarrassed about this now. I got involved in my first software startup when I was, I think, 17 years old, back in the late nineties, on the run-up to the millennium bug and also, importantly, as the internet was just starting to revolutionize business. And I’ve been working around B2B enterprise infrastructure software ever since.

And then, just over 10 years ago, I was lucky to co-found Matillion. We’re an ISV, which means a software company. So, you’re right, we do data stuff. We’re not a solutions company, so we don’t go in and deliver finished projects for companies. Rather, we make the technologies that customers and solution providers use to deliver data projects. And we founded that company in Manchester in 2011. Just myself, my co-founder Ed Thompson, our CTO at the time, and we were shortly thereafter joined by another co-founder, Peter McCord. Today, the company’s international. About 1500 customers around the world, mostly, in revenue terms certainly, large enterprise customers spread across well over 40 different countries, and about 600 Matillioners. Roughly half R and D, building out the platform, and half running the business and looking after our clients and things like that. I’m trying to think if there’s anything else at all interesting to say about me, Shane. I am, outside of work, lucky to be surrounded by beautiful ladies, my wife and my two daughters. And so, between those two things, Matillion and my family, that’s most of the interesting stuff to say about me, I think.

Shane Hastie: Wonderful. Thank you. So, the reason we got together to talk about this was a survey that you did looking at what’s happening in the data employment field, the data job markets, and in the use of business data. So, what were the interesting things that came out of that survey?

Surveying Data Teams [03:01]

Matthew Scullion: Thanks very much for asking about that, Shane. And you’re quite right, we did do a survey, and it was a survey of our own customers. We’re lucky to have quite a lot of large enterprise customers that use our technology. I mean, there’s hundreds of them. Western Union, Sony, Slack, National Grid, Peet’s Coffee, Cisco. It’s a long list of big companies that use Matillion software to make their data useful. And so, we can talk to those companies, and also ones that aren’t yet Matillion customers, about what they’ve got going on in data, wider in their enterprise architecture, and in fact, with their teams and their employment situations, to make sure we are doing the right things to make their lives better, I suppose. And we had some hypotheses based on our own experience. We have a large R and D team here at Matillion, and we had observations about what’s going on in the engineering talent market, of course, but also feedback from our customers and partners about why they use our technology and what they’ve got going on around data.

Our hypothesis, Shane, and the reason that Matillion exists as a company, really is, as I know, certainly you and probably every listener to this podcast will have noticed, data has become a pretty big deal, right? As we like to say, it’s the new commodity, the new oil, and every aspect of how we work, live and play today is being changed, we hope for the better, with the application of data. It’s happening now, everywhere, and really quickly. We can talk, if you want, about some of the reasons for that, but let’s just bank that for now. You’ve got this worldwide race to put data to work. And of course, what that means is there’s a constraint, or set of constraints, and many of those constraints are around people. Whilst we all like to talk about and think about the things that data can do for us, helping us understand and serve our companies’ customers better is one of the reasons why companies put data to work. Streamlining business processes, improving products, increasingly data becoming the products.

All these things are what organizations are trying to do, and we do that with analytics and data visualization, artificial intelligence and machine learning. But what’s spoken about a lot less is that before you can do any of that stuff, before you can build the core dashboard that informs an area of the business, what to do next with a high level of fidelity, before you can coach the AI model to help your business become smarter and more efficient, you have to make data useful. You have to refine it, a little bit like iron ore into steel. The world is awash with data, but data doesn’t start off useful in its raw state. It’s not born in a way where you can put it to work in analytics, AI, or machine learning. You have to refine it. And the world’s ability to do that refinement is highly constrained. The ways that we do it are quite primitive and slow. They’re the purview of a small number of highly skilled people.

Our thesis was that every organization would like to be able to do more analytics, AI and ML projects, but they have this kink in the hose pipe. There’s size nine boots stood on the hose pipe of useful data coming through, and we thought that was likely causing stress and constraint within enterprise data teams. So we did this survey to ask and to say, “Is it true? Do you struggle in this area?” And the answer was very much yes, Shane, and we got some really interesting feedback from that.

Shane Hastie: And what was that feedback?

60% of the work in data analytics is sourcing and preparing the data [06:42]

Matthew Scullion: So, we targeted the survey on a couple of areas. And first of all, we’re saying, “Well, look, this part of making data useful in order to unlock AI machine learning and analytics projects. It may well be constrained, but is it a big deal? How much of your time on a use case like that do you spend trying to do that sort of stuff?” And this, really, is the heart of the answer I think. If you’re not involved in this space, you might not realize. Typically it’s about 60%, according to this and previous survey results. 60% of the work of delivering an analytics, AI and machine learning use case isn’t in building the dashboard, isn’t in the data scientist defining and coaching the model. Isn’t in the fun stuff, therefore, Shane. The stuff that we think about and use. Rather it’s in the loading, joining together, refinement and embellishment of the data to take it from its raw material state, buried in source systems into something ready to be used in analytics.

Friction in the data analytics value stream is driving people away

So, any time a company is thinking about delivering a data use case, they have to think about, the majority of the work is going to be in refining the data to make it useful. And so, we then asked for more information about what that was like, and the survey results were pretty clear. 75% of the data teams that we surveyed, at least, reported to us that the ways that they were doing that were slowing them down, mostly because they were either using outdated technology to do that, pre-cloud technology repurposed to a post-cloud world, and that was slowing this down. Or because they were doing it in granular ways. The cloud, I think many of us think it’s quite mainstream, and it is, right? It is pretty mainstream. But it’s still quite early in this once-in-a-generation tectonic change in the way that we deliver enterprise infrastructure technology. It’s still quite early. And normally in technology revolutions, we start off doing things in quite manual ways. We code them at a fairly low level.

So, 75% of data teams believe that the ways that they’re doing migration of data, data integration and maintenance, are costing their organizations time, productivity and money. And that constraint also makes their lives less pleasant personally than they otherwise could be. Around 50% of our user respondents in this survey revealed this unpleasant picture, Shane, to be honest, of constant pressure and stress that comes with dealing with inefficient data integration. To put it simply, the business wants, needs and is asking for more than they’re capable of delivering, and that leads to 50% of these people feeding back that they feel under constant pressure and stress, experiencing burnout, and actually, this means that data professionals in such teams are looking for new roles and looking to go to areas with more manageable work-life balances.

So, yeah, it’s an interesting correlation between the desire of all organizations, really, to make themselves better using data, the boot on the hose pipe slowing down our ability to do that, meaning that data professionals are maxed out and unable to keep up with the demand. And that, in turn, therefore, leading to stress and difficulty in attracting and retaining talent into teams. Does that all make sense?

Shane Hastie: It does indeed. And, certainly, if I think back to my experience, the projects that were the toughest, it was generally pretty easy to get the software product built, but then to do the data integration or the data conversions as we did so often back then, and making that old data usable again, were very stressful and not fun. That’s still the case.

Data preparation and conversion is getting harder not easier [10:43]

Matthew Scullion: It’s still the case and worse to an order of magnitude because we have so many systems now. Separately, we also did a survey, probably need to work on a more interesting way of introducing that term, don’t I, Shane? But we talk to our clients all the time. And another data point we have is that in our enterprise customers, our larger businesses – so, this is typically businesses with, say, a revenue of 500 million US dollars or above. The average number of systems that they want to get data out of and put it to work in analytics projects, the average number is just north of a thousand different systems. Now, that’s not in a single use case, but it is across the organization. And each of those systems, of course, has got dozens or hundreds, in many cases thousands of data elements inside it. You look at a system like SAP, I think it has 80,000 different entities inside, and that would count as one system on my list of a thousand.

And in today’s world, even a company like Matillion, we’re a 600-person company. We have hundreds of modern SaaS applications that we use, and I’d be fairly willing to bet that we have a couple of new ones being created every day. So, the challenge is becoming harder and harder. And at the other side of the equation, the hunger, the need to deliver data projects is much, much more acute, as we race to change every aspect of how we work, live and play, for the better, using data. Organizations that can figure out an agile, productive, maintainable way of doing this at pace have a huge competitive advantage. It really is something that can be driven at the engineering and enterprise architecture and IT leadership level, because the decisions that we make there can give the business agility and speed as well as making people’s lives better in the way that we do it.

Shane Hastie: Let’s drill into this. What are some of the big decisions that organizations need to make at that level to support this, to make using data easier?

Architecture and data management policies need to change to make data more interoperable [12:44]

Matthew Scullion: Yeah, so I’m very much focused, as we’ve discussed already, on this part of using data, the making it useful. The refining it from iron ore into steel, before you then turn that steel into a bridge or ship or a building, right? So, in terms of building the dashboards or doing the data science, that’s not really my bag. But the bit that we focus on, which is the majority of the work, like I mentioned earlier, is getting the data into one place, the de-normalizing, flattening and joining together of that data. The embellishing it with metrics to make a single version of the truth, and make it useful. And then, the making sure that process happens fast enough, reliably, at scale, and can be maintained over time. That’s the bit that I focus in. So, I’m answering your question, Shane, through that lens, and in my belief, at least, to focus on that bit, because it’s not the bit that we think about, but it’s the majority of the work.
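As a rough illustration of the refinement steps described above (bringing data into one place, joining it, flattening it and embellishing it with metrics), here is a minimal Python sketch. The two source extracts, their field names and the `avg_order_value` metric are hypothetical and chosen only for illustration; they do not come from Matillion or from the survey.

```python
# Illustrative only: the tables, fields and metric are hypothetical.

# Raw "iron ore": two source extracts that share a customer_id key.
customers = [
    {"customer_id": 1, "name": "Acme", "region": "EMEA"},
    {"customer_id": 2, "name": "Globex", "region": "AMER"},
]
orders = [
    {"order_id": 10, "customer_id": 1, "amount": 120.0},
    {"order_id": 11, "customer_id": 1, "amount": 80.0},
    {"order_id": 12, "customer_id": 2, "amount": 50.0},
]


def refine(customers, orders):
    """Join orders to customers, flatten to one row per customer, add metrics."""
    by_id = {c["customer_id"]: c for c in customers}
    refined = {}
    for order in orders:
        customer = by_id[order["customer_id"]]  # join on the shared key
        row = refined.setdefault(
            customer["customer_id"],
            {
                "name": customer["name"],
                "region": customer["region"],
                "order_count": 0,
                "total_amount": 0.0,
            },
        )
        row["order_count"] += 1
        row["total_amount"] += order["amount"]
    # Embellish each flattened row with a derived metric.
    for row in refined.values():
        row["avg_order_value"] = row["total_amount"] / row["order_count"]
    return [refined[key] for key in sorted(refined)]


refined_rows = refine(customers, orders)
for row in refined_rows:
    print(row)
```

Even in this toy form, most of the code is loading, joining and shaping rather than analysis, which is consistent with the roughly 60% figure quoted earlier in the conversation.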

First of all, perhaps it would be useful to talk about how we typically do that today in the cloud, and people have been doing this stuff for 30 years, right? So, what’s accelerating the rate at which data is used and needs to be used is the cloud. The cloud’s provided this platform where we can, almost at the speed of thought, create limitlessly scalable data platforms and derive competitive advantage that improves the lives of our downstream customers. Once you’ve created that latent capacity, people want to use it, and therefore you have to use it. So, the number of data projects and the speed at which we can do them today, massively up and to the right because of the cloud. And then, we’ve spoken already about all the different source systems that have got your iron ore buried in.

So, in the cloud today, people typically do, for the most part, one of two different main ways to make data useful, to do data integration, to refine it from iron ore into steel. So, the first thing that they do, and this is very common in new technology, is that they make data useful in a very engineering-centric way. Great thing about coding, as you and I know well, is that you can do anything in code, right? And so, we do, particularly in earlier technology markets. We hand code making data useful. And there’s nothing wrong with that, and in some use cases, it’s, in fact, the right way to do it. There’s a range of different technologies that we can use, we might be doing it in SQL or dbt. We might be doing it using Spark and PySpark. We might even be coding in Java or whatever. But we’re using engineering skills to do this work. And that’s great, because A, we don’t need any other software to do it. B, engineers can do anything. It’s very precise.

But it does have a couple of major drawbacks when we are faced with the need to innovate with data in every aspect of how we work, live and play. And drawback number one is it’s the purview of a small number of people, comparatively, right? Engineering resources in almost every organization are scarce. And particularly in larger organizations, companies with many hundreds or many thousands of team members, the per capita headcount of engineers in a business that’s got 10,000 people, most of whom make movies or earth-moving equipment or sell drugs or whatever it is. It’s low, right? We’re a precious resource, us engineers. And because we’ve got this huge amount of work to do in data integration, we become a bottleneck.

The second thing is data integration just changes all the time. Any time I’ve ever seen someone use a dashboard, read a report, they’re like, “That’s great, and now I have another question.” And that means the data integration that supports that data use case immediately needs updating. So, you don’t just build something once, it’s permanently evolving. And so, at a personal level for the engineer, unless they want to sit there and maintain that data integration program forever, we need to think about that, and it’s not a one and done thing. And so, that then causes a problem because we have to ramp new skills onto the project. People don’t want to do that forever. They want to move on to different companies, different use cases, and sorry, if they don’t, ultimately they’ll probably move on to a different company because they’re bored. And as an organization, we need the ability to ramp new skills on there, and that’s difficult in code, because you’ve got to go and learn what someone else coded.

Pre-cloud tools and techniques do not work in the modern cloud-based environment

So, in the post-cloud world, in this early new mega trend, comparatively speaking, one of the ways that we make data useful is by hand-coding it, in effect. And that’s great because we can do it with precision, and engineers can do anything, but the downside is it’s the least productive way to do it. It’s the purview of a small number of valuable, but scarce people, and it’s hard to maintain in the long term. Now, the other way that people do this is that they use data integration technology that solves some of those problems, but that was built for the pre-cloud world. And that’s the other side of the coin that people face. They’re like, “Okay, well I don’t want to code this stuff. I learned this 20 years ago with my on-premise data warehouse and my on-premise data integration technology. I need this stuff to be maintainable. I need a wider audience of people to be able to participate. I’ll use my existing enterprise data integration technology, ETL technology, to do that.”

That’s a great approach, apart from the fact that pre-cloud technology isn’t architected to make best use of the modern cloud, public cloud platforms and hyperscalers like AWS, Azure and Google Cloud, nor the modern cloud data platforms like Snowflake, Databricks, Amazon Redshift, Google BigQuery, et al. And so, in that situation, you’ve gone to all the trouble of buying a Blu-ray player, but you’re watching it through a standard definition television, right? You’re using the modern underlying technology, but the way you’re accessing it is out of date. Architecturally, the way that we do things in the cloud is just different to how we did it with on-premises technology, and therefore it’s hard to square that circle.

It’s for these two reasons that today, many organizations struggle to make data useful fast enough, and why, in turn, therefore, that they’re in this lose-lose situation of the engineers are either stressed out and burnt out and stuck on projects that they want to move on from, or bored because they’re doing low-level data enrichment for weeks, months, or years, and not being able to get off it, as the business’ insatiable demand for useful data never goes away and they can’t keep up. Or, because they’re unable to serve the needs of the business and to change every aspect of how we work, live and play with data. Or honestly, Shane, probably both. It’s probably both of those things.

So our view, and this is why Matillion exists, is that you can square this circle. You can make data useful with productivity, and the way that you do it is by putting a technology layer in place, specifically designed to talk to these problems. And if that technology layer is going to be successful, we think it needs to have a couple of things that it exhibits. The first one is it needs to solve for this skills problem, and do that by making it essentially easier whilst not dumbing it down, and by making it easier, making a wider audience of people able to participate in making data useful. Now, we do that in Matillion by making our technology low-code, no-code, code optional. Matillion’s platform is a visual data integration platform, so you can dive in and visually load, transform, synchronize and orchestrate data.

That low-code, no-code environment can make a single engineer far more productive, but perhaps as, if not more importantly, it means it’s not just high-end engineers that can do this work. It can also be done by data professionals, maybe ETL guys, BI people, data scientists. Even tech-savvy business analysts, financiers and marketers. Anyone that understands what a row and a column is can pretty much use technology like Matillion. And the other thing that the low-code, no-code user experience really helps with is managing skills on projects. You can ramp someone onto a project that’s already been up and running much more easily, because you can understand what’s going on, because it’s a diagram. You can drop into something a year after it was last touched and make changes to it much, much more easily because it’s low-code, no-code.

Now, the average engineer, Shane, in my experience, often is skeptical about visual 4GL or low-code, no-code engineering, and I understand the reasons why. We’ve all tried to use these tools before. But, in the case of data, at least, it can be done. It’s a technically hard problem, it’s one that we’ve spent the last seven, eight years perfecting, but you can build a visual environment that creates the high-quality push down ELT instruction set to the underlying cloud data platform as well, if not perhaps even better than we could by hand, and certainly far faster. That pure ELT architecture, which means that we get the underlying cloud data platform to do the work of transforming data, giving us scalability and performance in our data integrations. That’s really important, and that can be done, and that’s certainly what we’ve done at Matillion.
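To make the push-down ELT idea concrete, here is a minimal Python sketch in which a small helper generates a SQL statement and the database engine, not the client program, performs the transformation. This is not Matillion's implementation: the in-memory SQLite database is only a stand-in for a cloud data platform, and `build_pushdown_sql`, the table names and the columns are hypothetical.

```python
import sqlite3

# Assumption: an in-memory SQLite database stands in for a cloud data platform.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE raw_orders (order_id INTEGER, region TEXT, amount REAL);
    INSERT INTO raw_orders VALUES
        (1, 'EMEA', 120.0),
        (2, 'EMEA', 80.0),
        (3, 'AMER', 50.0);
    """
)


def build_pushdown_sql(source, target, group_by, measure):
    """Generate one SQL statement that transforms data inside the database.

    Hypothetical helper: identifiers are interpolated directly, so it is
    only suitable for trusted, hard-coded names as in this sketch.
    """
    return (
        f"CREATE TABLE {target} AS "
        f"SELECT {group_by}, SUM({measure}) AS total_{measure}, "
        f"COUNT(*) AS row_count "
        f"FROM {source} GROUP BY {group_by}"
    )


sql = build_pushdown_sql("raw_orders", "orders_by_region", "region", "amount")
conn.execute(sql)  # the database engine does the aggregation, not Python

result = conn.execute(
    "SELECT region, total_amount, row_count FROM orders_by_region ORDER BY region"
).fetchall()
print(sql)
print(result)
```

The raw rows never leave the database: only the generated statement is sent to the engine, so the transformation scales with the platform rather than with the tool that issued it. That is the property the ELT architecture described above relies on.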

The skills challenges are most apparent in large organisations

The other criteria I’ll just touch on quickly. The people that suffer with this skills challenge the most are larger businesses. Smaller businesses that are really putting data to work tend to be either technology businesses or technology-enabled businesses, which probably means they’re younger and therefore have fewer source systems with data in. A higher percentage of their team are engineering team members. They’re more digitally native. And so, the problem’s slightly less pronounced for that kind of tech startup style company. But if you’re a global 8,000, manufacturing, retail, life sciences, public sector, financial services, whatever type company, then your primary business is doing something else, and this is something that you need to do as a part of it. The problem for you is super acute.

And so, the second criteria that a technology that’s going to solve this problem has to have is it has to work well for the enterprise, and that’s the other thing that Matillion does. So, we’re data integration for the cloud and for the enterprise, and that means that we scale to very large use cases and have all the right security and permissions technology. But it’s also things like auditability, maintainability, integration to software development life-cycle management, and code repositories and all that sort of good stuff, so that you can treat data integration in the same way that you treat building software, with proper, agile processes, proper DevOps, or as we call them in the data space, data-ops processes, in use behind the scenes.

These challenges are not new – the industry has faced them with each technology shift

So, that’s the challenge. And finally, if you don’t mind me rounding out on this point, Shane, it’s like, we’ve all lived through this before. Nothing’s new in IT. The example I always go back to is one from, I was going to say the beginning of my career. I’d be exaggerating my age slightly there, actually. It’s more like it’s from the beginning of my life. But the PC revolution is something I always think about. When PCs first came in, the people that used them were enthusiasts and engineers because they arrived in a box of components that you had to solder together. And then, you had to write code to make them do anything. And that’s the same with every technology revolution. And that’s where we’re up to with data today. And then later, visual operating systems abstracted the backend complexity of the hardware and underlying software, and allowed a wider audience of people to get involved, and then, suddenly, everyone in the world used PCs. And now, we don’t really think about PCs anymore. It’s just a screen in our pocket or our laptop bag.

That’s what will happen, and is happening, with data. We’ve been in the solder it together and write code stage, but we will never be able to keep up with the world’s insatiable need and desire to make data useful by doing it that way. We have to get more people into the pass rush, and that’s certainly what we at Matillion are trying to do, which suits everyone. It means engineers can focus on the unique problems that only they can solve. It means business people closer to the business problems can self-serve, and in a democratized way, make the data useful that they need to understand their customers better and drive business improvement.

Shane Hastie: Some really interesting stuff in there. Just coming around a little bit, this is the Engineering Culture podcast. In our conversations before we started recording, you mentioned that Matillion has a strong culture, and that you do quite a lot to maintain and support that. What’s needed to build and maintain a great culture in an engineering-centric organization?

Maintaining a collaborative culture [25:44]

Matthew Scullion: Thanks for asking about that, Shane, and you’re right. People that are unlucky enough to get cornered by me at cocktail parties will know that I like to do nothing more than bang on about culture. It’s important to me. I believe that it’s important to any organization trying to be high performance and change the world like, certainly, we are here in Matillion. I often say a line when I’m talking to the company, that the most important thing in Matillion, and I have to be careful with this one, because it could be misinterpreted. The most important thing in Matillion, it’s not even our product platform, which is so important to us and our customers. It’s not our shiny investors. Matillion was lucky to become a unicorn stage company last year, I think we’ve raised about 300 million bucks in venture capital so far from some of the most prestigious investors in the world, who we value greatly, but they’re not the most important thing.

It’s not even, Shane, now this is the bit I have to be careful saying, it’s not even our customers in a way. We only exist to make the lives of our customers better. But the most important thing at Matillion is our team, because it’s our team that make those customers’ lives better, that build those products, that attract those investors. The team in any organization is the most important thing, in my opinion. And teams live in a culture. And if that culture’s good, then that team will perform better, and ultimately do a better job at delighting its customers, building its products, whatever they do. So, we really believe that at Matillion. We always have, actually. The very first thing that I did on the first day of Matillion, all the way back in January of 2011, which seems like a long, long time ago now, is I wrote down the Matillion values. There’s six of them today. I don’t think I had six on the first day. I think I embellished the list of it afterwards. But we wrote down the Matillion values, these values being the foundations that this culture sits on top of.

If we talk to engineering culture specifically, I’ve either been an engineer or been working with or managing engineers my whole career. So, 25 years now, I suppose, managing or being in engineering management. And the things that I think are the case about engineering culture is, first of all, engineering is fundamentally a creative business. We invent new, fun stuff every day. And so, thing number one that you’ve got to do for engineers is keep it interesting, right? There’s got to be interesting, stimulating work to do. This is partly what we heard in that data survey a few minutes ago, right? If you’re making data useful through code, might be interesting for the first few days, but for the next five years, maintaining it’s not very interesting. It gets boring, stressful, and you churn out the company. You’ve got to keep engineers stimulated, give them technically interesting problems.

But also, and this next one applies to all parts of the organization. You’ve got to give them a culture, you’ve got to give each other a culture, where we can do our best work. Where we’re intellectually safe to do our best work. Where we treat each other with integrity and kindness. Where we are all aligned to delivering on shared goals, where we all know what those same shared goals are, and where we trust each other in a particular way. That particular way of trusting each other, it’s trusting that we have the same shared goal, because that means if you say to me, “Hey, Matthew, I think you are approaching this in the wrong way,” then I know that you’re only saying that to me because you have the same shared goal as I do. And therefore, I’m happy that you’re saying it to me. In fact, if you didn’t say it to me, you’d be helping me fail.

So, trust in shared goals, the kind of intellectual safety born from respect and integrity. And then, finally, the interest and stimulation. To me, those are all central to providing a resonant culture for perhaps all team members in an organization, but certainly engineers to work in. We think it’s a huge competitive advantage to have a strong, healthy culture. We think it’s the advantage that’s allowed us, in part, but materially so, to be well on the way to building a consequential, generational organization that’s making the world’s data useful. Yes, as you can tell, it’s something I feel very passionate about.

Shane Hastie: Thank you very much. A lot of good stuff there. If people want to continue the conversation, where do they find you?

Matthew Scullion: Well, me personally, you can find me on Twitter, @MatthewScullion. On LinkedIn, just hit Matthew Scullion Matillion, you’ll find me on there. Company-wise, please do go ahead and visit us at matillion.com. All our software is very easy to consume. It’s all cloud-native, so you can try it out free of charge, click it and launch it in a few minutes, and we’d love to see you there. And Shane, it’s been such a pleasure being on the podcast today. Thank you for having me.

Virtual Machine Threat Detection in Google Security Command Center Now Generally Available

MMS • Steef-Jan Wiggers

Article originally posted on InfoQ. Visit InfoQ

Google Cloud recently announced the general availability (GA) of Virtual Machine Threat Detection (VMTD) as a built-in service in Security Command Center Premium, which can detect if hackers attempt to mine cryptocurrency in a company’s cloud environment.

The capability of the Security Command Center is a part of the vision the company has regarding invisible security. Earlier, VMTD was released in public preview and received adoption from users around the world, according to the company. Moreover, since the service’s initial release, the company has added several new features like more frequent scanning across many instances.

Customers can easily enable VMTD by checking a box in their Security Command Center Premium settings. Subsequently, the service can detect if the customers’ cloud environment contains malware that hijacks infrastructure resources to mine cryptocurrency. Furthermore, the service provides technical information about the malware to help administrators block it.

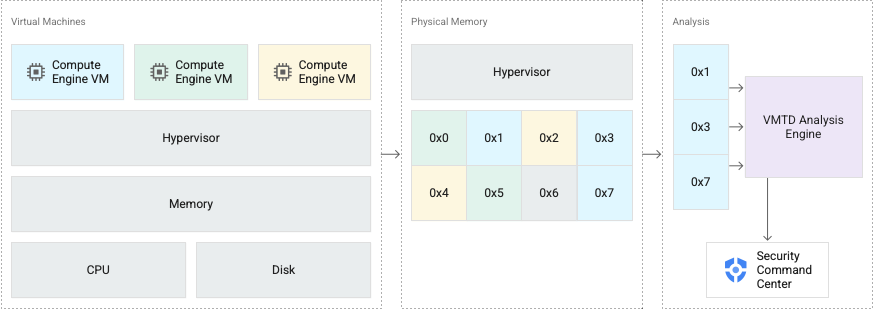

VM Threat Detection is built into Google Cloud’s hypervisor, a secure platform that creates and manages all Compute Engine VMs. Under the hood, the service scans enabled Compute Engine projects and VM instances to detect unwanted applications, such as cryptocurrency mining software running in VMs. And the analysis engine ingests metadata from VM guest memory and writes findings to Security Command Center.

Unlike traditional cybersecurity products, VMTD does not rely on software agents to detect malware. Attackers cannot disable it, whereas they can potentially disable agents. Furthermore, setup is less time-consuming, particularly when there are a large number of instances.

According to a Google Cloud blog post, the company intends to expand VMTD to cover more cybersecurity use cases in the future. Timothy Peacock, a senior product manager, stated:

In the future, we plan on further improving VMTD’s understanding of Linux kernels to detect additional advanced attacks and report live telemetry to our customers. With its unique position as an outside-the-instance observer, VMTD can detect rootkits and bootkits, attacks that tamper with kernel integrity, and otherwise blind the kernel and traditional endpoint detection and response technology (EDR) to their presence.

Lastly, the pricing details of the Security Command Center are available on the pricing page, and more details are on the documentation landing page.

MMS • RSS

Posted on mongodb google news. Visit mongodb google news

WINTON GROUP Ltd lowered its stake in shares of MongoDB, Inc. (NASDAQ:MDB – Get Rating) by 19.1% in the first quarter, according to the company in its most recent Form 13F filing with the Securities & Exchange Commission. The firm owned 535 shares of the company’s stock after selling 126 shares during the period. WINTON GROUP Ltd’s holdings in MongoDB were worth $237,000 as of its most recent filing with the Securities & Exchange Commission.

Other hedge funds also recently bought and sold shares of the company. Confluence Wealth Services Inc. purchased a new stake in MongoDB during the fourth quarter worth $25,000. Bank of New Hampshire purchased a new stake in MongoDB during the first quarter worth $25,000. Covestor Ltd purchased a new stake in MongoDB during the fourth quarter worth $43,000. Cullen Frost Bankers Inc. purchased a new stake in shares of MongoDB during the 1st quarter valued at $44,000. Finally, Montag A & Associates Inc. lifted its holdings in shares of MongoDB by 200.0% during the 4th quarter. Montag A & Associates Inc. now owns 108 shares of the company’s stock valued at $57,000 after purchasing an additional 72 shares during the last quarter. Hedge funds and other institutional investors own 88.70% of the company’s stock.

Analyst Ratings Changes

Several analysts have recently commented on the company. Piper Sandler decreased their price objective on MongoDB from $430.00 to $375.00 and set an “overweight” rating for the company in a report on Monday, July 18th. Morgan Stanley decreased their price objective on MongoDB from $378.00 to $368.00 and set an “overweight” rating for the company in a report on Thursday, June 2nd. Oppenheimer decreased their price objective on MongoDB from $490.00 to $400.00 and set an “outperform” rating for the company in a report on Thursday, June 2nd. Stifel Nicolaus decreased their price objective on MongoDB from $425.00 to $340.00 in a report on Thursday, June 2nd. Finally, Redburn Partners assumed coverage on MongoDB in a report on Wednesday, June 29th. They set a “sell” rating and a $190.00 price objective for the company. One equities research analyst has rated the stock with a sell rating and seventeen have given a buy rating to the stock. According to data from MarketBeat, MongoDB currently has an average rating of “Moderate Buy” and a consensus target price of $414.78.

Insider Activity

In other news, CTO Mark Porter sold 1,520 shares of the firm’s stock in a transaction dated Tuesday, August 2nd. The stock was sold at an average price of $325.00, for a total transaction of $494,000.00. Following the sale, the chief technology officer now directly owns 29,121 shares in the company, valued at approximately $9,464,325. The transaction was disclosed in a legal filing with the Securities & Exchange Commission, which is available through this hyperlink. In other news, Director Dwight A. Merriman sold 14,090 shares of the firm’s stock in a transaction dated Monday, August 1st. The stock was sold at an average price of $312.06, for a total transaction of $4,396,925.40. Following the sale, the director now directly owns 1,322,954 shares in the company, valued at approximately $412,841,025.24. The transaction was disclosed in a legal filing with the Securities & Exchange Commission, which is available through this hyperlink. Insiders have sold 43,795 shares of company stock worth $12,357,981 in the last ninety days. Company insiders own 5.70% of the company’s stock.

MongoDB Price Performance

Shares of MDB opened at $353.57 on Monday. The business’s 50-day moving average is $314.31 and its two-hundred day moving average is $331.88. The company has a market capitalization of $24.08 billion, a P/E ratio of -73.05 and a beta of 0.96. The company has a debt-to-equity ratio of 1.69, a quick ratio of 4.16 and a current ratio of 4.16. MongoDB, Inc. has a fifty-two week low of $213.39 and a fifty-two week high of $590.00.

MongoDB (NASDAQ:MDB – Get Rating) last announced its quarterly earnings results on Wednesday, June 1st. The company reported ($1.15) earnings per share for the quarter, topping the consensus estimate of ($1.34) by $0.19. The company had revenue of $285.45 million for the quarter, compared to the consensus estimate of $267.10 million. MongoDB had a negative return on equity of 45.56% and a negative net margin of 32.75%. The firm’s revenue for the quarter was up 57.1% compared to the same quarter last year. During the same period last year, the firm earned ($0.98) EPS. Research analysts predict that MongoDB, Inc. will post -5.08 earnings per share for the current year.

MongoDB Profile

MongoDB, Inc provides general purpose database platform worldwide. The company offers MongoDB Enterprise Advanced, a commercial database server for enterprise customers to run in the cloud, on-premise, or in a hybrid environment; MongoDB Atlas, a hosted multi-cloud database-as-a-service solution; and Community Server, a free-to-download version of its database, which includes the functionality that developers need to get started with MongoDB.


MMS • RSS

Posted on mongodb google news. Visit mongodb google news

WINTON GROUP Ltd trimmed its holdings in MongoDB, Inc. (NASDAQ:MDB – Get Rating) by 19.1% in the first quarter, Holdings Channel.com reports. The institutional investor owned 535 shares of the company’s stock after selling 126 shares during the quarter. WINTON GROUP Ltd’s holdings in MongoDB were worth $237,000 as of its most recent filing with the SEC.

A number of other institutional investors and hedge funds have also made changes to their positions in the business. Jennison Associates LLC purchased a new stake in shares of MongoDB in the first quarter valued at about $113,395,000. TD Asset Management Inc. increased its stake in shares of MongoDB by 18.3% during the first quarter. TD Asset Management Inc. now owns 621,217 shares of the company’s stock worth $275,566,000 after acquiring an additional 96,217 shares during the period. Aaron Wealth Advisors LLC acquired a new position in shares of MongoDB during the first quarter worth about $43,000. Steel Peak Wealth Management LLC increased its stake in shares of MongoDB by 60.3% during the first quarter. Steel Peak Wealth Management LLC now owns 1,751 shares of the company’s stock worth $777,000 after acquiring an additional 659 shares during the period. Finally, Tcwp LLC acquired a new position in shares of MongoDB during the first quarter worth about $52,000. 88.70% of the stock is owned by hedge funds and other institutional investors.

MongoDB Stock Down 2.8%

MDB stock opened at $353.57 on Monday. The company’s 50 day simple moving average is $314.31 and its 200-day simple moving average is $331.88. The firm has a market capitalization of $24.08 billion, a PE ratio of -73.05 and a beta of 0.96. MongoDB, Inc. has a 12 month low of $213.39 and a 12 month high of $590.00. The company has a debt-to-equity ratio of 1.69, a current ratio of 4.16 and a quick ratio of 4.16.

MongoDB (NASDAQ:MDB – Get Rating) last released its quarterly earnings results on Wednesday, June 1st. The company reported ($1.15) EPS for the quarter, beating the consensus estimate of ($1.34) by $0.19. The business had revenue of $285.45 million for the quarter, compared to analyst estimates of $267.10 million. MongoDB had a negative net margin of 32.75% and a negative return on equity of 45.56%. The company’s quarterly revenue was up 57.1% on a year-over-year basis. During the same period in the prior year, the business earned ($0.98) earnings per share. Equities analysts expect that MongoDB, Inc. will post -5.08 earnings per share for the current fiscal year.

Wall Street Analysts Weigh In

MDB has been the topic of a number of recent analyst reports. UBS Group boosted their price target on shares of MongoDB from $315.00 to $345.00 and gave the stock a “buy” rating in a research note on Wednesday, June 8th. Royal Bank of Canada boosted their price target on shares of MongoDB from $325.00 to $375.00 in a research note on Thursday, June 9th. Citigroup boosted their price target on shares of MongoDB from $425.00 to $450.00 in a research note on Monday, August 22nd. Barclays upped their target price on shares of MongoDB from $338.00 to $438.00 and gave the stock an “overweight” rating in a research report on Tuesday, August 16th. Finally, Robert W. Baird started coverage on shares of MongoDB in a research report on Tuesday, July 12th. They set an “outperform” rating and a $360.00 target price on the stock. One investment analyst has rated the stock with a sell rating and seventeen have assigned a buy rating to the company’s stock. According to MarketBeat.com, MongoDB has an average rating of “Moderate Buy” and an average price target of $414.78.

Insider Activity at MongoDB

In related news, CEO Dev Ittycheria sold 4,991 shares of the company’s stock in a transaction that occurred on Tuesday, July 5th. The shares were sold at an average price of $264.45, for a total transaction of $1,319,869.95. Following the transaction, the chief executive officer now owns 199,753 shares of the company’s stock, valued at $52,824,680.85. The sale was disclosed in a legal filing with the Securities & Exchange Commission, which is accessible through this link. In other MongoDB news, CRO Cedric Pech sold 350 shares of the stock in a transaction that occurred on Tuesday, July 5th. The shares were sold at an average price of $264.46, for a total transaction of $92,561.00. Following the transaction, the executive now owns 45,785 shares of the company’s stock, valued at $12,108,301.10. The transaction was disclosed in a legal filing with the SEC, which is accessible through the SEC website. Over the last 90 days, insiders have sold 43,795 shares of company stock valued at $12,357,981. Company insiders own 5.70% of the company’s stock.

MongoDB Company Profile

MongoDB, Inc provides general purpose database platform worldwide. The company offers MongoDB Enterprise Advanced, a commercial database server for enterprise customers to run in the cloud, on-premise, or in a hybrid environment; MongoDB Atlas, a hosted multi-cloud database-as-a-service solution; and Community Server, a free-to-download version of its database, which includes the functionality that developers need to get started with MongoDB.


MMS • Doris Xin

Article originally posted on InfoQ. Visit InfoQ

Key Takeaways

- Automating the ML pipeline could put machine learning into the hands of more people, including those who do not have years of data science training and a data center at their disposal. Unfortunately, true end-to-end automation is not yet available.

- AutoML is currently most frequently used in hyperparameter tuning and model selection. The failure of AutoML to be end-to-end can actually cut into the efficiency improvements; moving aspects of the project between platforms and processes is time-consuming, risks mistakes, and takes up the mindshare of the operator.

- AutoML systems should seek to balance human control with automation: focusing on the best opportunities for automation and allowing humans to perform the parts that are most suited to humans.

- The collaboration between humans and automation tooling can be better than either one working alone. By combining the manual and automated strategies, practitioners can increase confidence and trust in the final product.

- ML practitioners are the most open to the automation of the mechanical engineering tasks, such as data pipeline building, model serving, and model monitoring, involved in ML operations (MLOps). The focus of AutoML should be on these tasks since they are high-leverage and their automation has a much lower chance of introducing bias.

The current conversation about automated machine learning (AutoML) is a blend of hope and frustration.

Automation to improve machine learning projects comes from a noble goal. By streamlining development, ML projects can be put in the hands of more people, including those who do not have years of data science training and a data center at their disposal.

End-to-end automation, while it may be promised by some providers, is not available yet. There are capabilities in AutoML, particularly in modeling tasks, that practitioners from novice to advanced data scientists are using today to enhance their work. However, seeing how automation and human operators interact, it may never be optimal for most data science projects to completely automate the process. In addition, AutoML tools struggle to address fairness, explainability, and bias—arguably the central issue in artificial intelligence (AI) and ML today. AutoML systems should emphasize ways to decrease the amount of time data engineers and ML practitioners spend on mechanistic tasks and interact well with practitioners of different skill levels.

Along with my colleagues Eva Yiwei Wu, Doris Jung-Lin Lee, Niloufar Salehi, and Aditya Parameswaran, I presented a paper at the ACM CHI Conference on Human Factors in Computing Systems studying how AutoML fits into machine learning workflows. The paper details an in-depth qualitative study of sixteen AutoML users ranging from hobbyists to industry researchers across a diverse set of domains.

Questions asked aimed to uncover their experiences working with and without AutoML tools and their perceptions of AutoML tools so that we could ultimately gain a better understanding of AutoML user needs; AutoML tool strengths / weaknesses; and insights into the respective roles of the human developer and automation in ML development.

The many faces of AutoML

The idea of automating some elements of a machine learning project is sensible. Many data scientists have had the dream of democratizing data science through an easy-to-use process that puts those capabilities in more hands. Today’s AutoML solutions fall into three categories: cloud provider solutions, commercial platforms, and open source projects.

Cloud provider solutions

The hosted compute resources of Google Cloud AutoML, Microsoft Azure Automated ML, and Amazon SageMaker Autopilot provide all the familiar benefits of cloud solutions: integration with the cloud data ecosystem for more end-to-end support, pay-as-you-go pricing models, low barrier to entry, and no infrastructure concerns. They also bring with them common cloud service frustrations a project may face: lack of customizability, vendor lock-in, and an opaque process. They all offer no-code solutions that appeal to the citizen scientist or innovator. But for the more advanced user, the lack of transparency is problematic for many scenarios; as of this writing, only Amazon SageMaker Autopilot allows the user to export code and intermediate results. Many users find themselves frustrated by the lack of control over model selection and limited visibility into the models utilized.

It is a familiar story with the Google, Amazon, and Microsoft solutions in other categories; cloud products seek to provide as much of an end-to-end solution as possible and an easy-to-use experience. The design tradeoff is low visibility and fewer customization options. For example, Google AutoML only provides the final model; it fails to yield the training code or any intermediate results during training. Microsoft Azure gives much more information about the training process itself, but it does not give the code for the training models.

Commercial platforms

The most frequently discussed commercial AutoML products are DataRobot and H2O Driverless AI. The companies behind these products are seeking to provide end-to-end AI and ML platforms for business users as well as data scientists, particularly those using on-premises compute. They focus on operationalizing the model: launching the application or data results for use.

Open source projects

There are a lot of open source projects in the machine learning space. Some of the more well-known are Auto-sklearn, AutoKeras, and TPOT. The open source process allows for the best of data science and development to come together. However, these projects often lack the post-processing and deployment help that is a primary focus of commercial platforms.

What AutoML brings to a project

Those we surveyed had frustrations, but they were still able to use AutoML tools to make their work more fruitful. In particular, AutoML tools are used in the modeling tasks. Although most AutoML providers stated that their product is “end-to-end,” the pre-processing and post-processing phases are not greatly impacted by AutoML.

The data collection, data tagging, and data wrangling of pre-processing are still tedious, manual processes. There are utilities that provide some time savings and aid in simple feature engineering, but overall, most practitioners do not make use of AutoML as they prepare data.

In post-processing, AutoML offerings have some deployment capabilities. But deployment is famously a problematic interaction between MLOps and DevOps in need of automation. Take, for example, one of the most common post-processing tasks: generating reports and sharing results. While cloud-hosted AutoML tools are able to auto-generate reports and visualizations, our findings show that users are still adopting manual approaches to modify default reports. The second most common post-processing task is deploying models. Automated deployment was only afforded to users of hosted AutoML tools, and limitations still existed for security or end user experience considerations.

The failure of AutoML to be end-to-end can actually cut into the efficiency improvements; moving aspects of the project between platforms and processes is time-consuming, risks mistakes, and takes up the mindshare of the operator.

AutoML is most frequently and enthusiastically used in hyperparameter tuning and model selection.

Hyperparameter tuning and model selection

When possible configurations quickly explode to billions, as they do in hyperparameters for many projects, automation is a welcome aid. AutoML tools can try possibilities and score them to accelerate the process and improve outcomes. This was the first AutoML feature to become available. Perhaps its maturity is why it is so popular. Eleven of the 16 users we interviewed used AutoML hyperparameter-tuning capabilities.

Improving key foundations of the modeling phase saves a lot of time. Users did find that the AutoML solution could not be completely left alone to the task. This is a theme with AutoML features; knowledge gained from the human’s prior experience and the ability to understand context can, if the user interface allows, eliminate some dead ends. By limiting the scope of the AutoML process, a human significantly reduces the cost of the step.
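The effect of an operator narrowing the search can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular AutoML tool works: the search space, the `toy_score` function, and the helper names `count_configs`, `restrict`, and `random_search` are all hypothetical, and the scoring function is only a stand-in for a real train-and-validate step.

```python
import math
import random

# Hypothetical search space; the parameter names and values are illustrative.
FULL_SPACE = {
    "learning_rate": [0.001, 0.003, 0.01, 0.03, 0.1, 0.3],
    "max_depth": [2, 4, 6, 8, 10, 12],
    "n_estimators": [50, 100, 200, 400, 800],
    "subsample": [0.5, 0.6, 0.7, 0.8, 0.9, 1.0],
}


def count_configs(space):
    """Number of distinct configurations in a grid-style search space."""
    return math.prod(len(values) for values in space.values())


def restrict(space, **narrowed_values):
    """Return a copy of the space with some dimensions narrowed by the operator."""
    restricted = dict(space)
    restricted.update(narrowed_values)
    return restricted


def toy_score(config):
    """Stand-in for training and validating a model (assumption, not a real metric)."""
    return -(abs(config["learning_rate"] - 0.03) + abs(config["max_depth"] - 6))


def random_search(space, score_fn, n_trials, seed=0):
    """Sample configurations at random and keep the best-scoring one."""
    rng = random.Random(seed)
    best_config, best_score = None, float("-inf")
    for _ in range(n_trials):
        config = {name: rng.choice(values) for name, values in space.items()}
        score = score_fn(config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


# The operator uses prior experience to rule out extreme values up front.
narrow_space = restrict(
    FULL_SPACE,
    learning_rate=[0.01, 0.03, 0.1],
    max_depth=[4, 6, 8],
)
best_config, best_score = random_search(narrow_space, toy_score, n_trials=20)
```

In this toy space, narrowing two of the four dimensions cuts the number of configurations from 1,080 to 270, so the same trial budget covers a much larger share of the remaining candidates. Real spaces are far larger, which is why the human's ability to prune dead ends matters.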

AutoML assistance to model selection can aid the experienced ML practitioner in overcoming their own assumptions by quickly testing other models. One practitioner in our survey called the AutoML model selection process “the no assumptions approach” that overcame their own familiarity and preferences.

AutoML for model selection is a more efficient way of developing and testing models. It also improves, though perhaps not dramatically, the effectiveness of model development.

The potential drawback is that, left unchecked, model selection is very resource heavy, encompassing ingesting, transforming, and validating data distributions. It is essential to identify models that are not viable for deployment; otherwise, valuable compute resources are wasted, which can lead to crashing pipelines. Many AutoML tools don’t understand the resources available to them, which causes system failures. The human operator can pare down the possible models, but this practice does fly in the face of the idea of overcoming operator assumptions. The scientist or operator has an important role to play in discerning which experiments are worth providing to the AutoML system and which are a waste of time.
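The paring-down step described above could look something like the following sketch. It assumes the operator has rough, hand-supplied estimates of each candidate's memory and training time; the `Candidate` class, its fields, and `split_by_budget` are hypothetical names introduced for illustration and do not correspond to any AutoML product's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """A candidate model family with operator-supplied resource estimates."""
    name: str
    est_memory_gb: float      # rough estimate, assumed to come from the operator
    est_train_minutes: float  # rough estimate, assumed to come from the operator


def split_by_budget(candidates, memory_budget_gb, time_budget_minutes):
    """Separate candidates that fit the available resources from those that do not."""
    kept, skipped = [], []
    for candidate in candidates:
        fits = (
            candidate.est_memory_gb <= memory_budget_gb
            and candidate.est_train_minutes <= time_budget_minutes
        )
        (kept if fits else skipped).append(candidate)
    return kept, skipped


candidates = [
    Candidate("linear_model", est_memory_gb=1.0, est_train_minutes=5.0),
    Candidate("gradient_boosted_trees", est_memory_gb=8.0, est_train_minutes=45.0),
    Candidate("large_neural_net", est_memory_gb=64.0, est_train_minutes=600.0),
]

# Only candidates that fit a 16 GB, 60 minute budget are handed to the search.
kept, skipped = split_by_budget(candidates, memory_budget_gb=16.0, time_budget_minutes=60.0)
```

Returning the skipped candidates alongside the kept ones leaves a record of what was excluded and why, which partly offsets the concern that pre-filtering reintroduces operator assumptions.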

Informing manual development

Despite any marketing or names that suggest an AutoML platform is automatic, that is not what users experience. There is nothing entirely push-button, and the human operator is still called upon to make decisions. However, the tool and the human working together can be better than either one working alone.

By combining the manual and automated strategies, practitioners can increase confidence and trust in the final product. Practitioners shared that running the automated process validated that they were on the right track. They also like to use it for rapid prototyping. It works the other way as well; by performing a manual process in parallel with the AutoML process, practitioners have built trust in their AutoML pipeline by showing that its results are akin to a manual effort.

Reproducibility

Data scientists do tend to be somewhat artisanal. Since an automated process can be reproduced and can standardize code, organizations can have a more enduring repository of ML and AI projects even as staff turns over. New models can be created more rapidly, and teams can compare models in an apples-to-apples format.

In context: The big questions of responsible AI

At this point in the growth of AI, all practitioners should be considering bias and fairness. It is an ethical and social impetus. Documenting efforts to minimize bias is important in building trust with clients, users, and the public.

The key concept of explainability is how we as practitioners earn user trust. While AutoML providers are making efforts of their own to build in anti-bias processes and explainability, there is a long way to go. They have not effectively addressed explainability. Being able to reveal the model and identify the source of suspect outcomes helps build trust and, should there be a problem, identify a repair. Simply having a human involved does not assure that there is no bias. However, without visibility, the likelihood of bias grows. Transparency mechanisms like visualization increase user understanding and trust in AutoML, but they do not suffice for trust and understanding between humans and the tool-built model. Humans need agency to establish the level of understanding required to trust AutoML.

In our interviews, we discovered that practitioners switch to completely manual development to have the user agency, interpretability, and trust expected for projects.

Human-automation partnership

A contemporary end-to-end AutoML system is by necessity highly complex. Its users come from different backgrounds and have diverse goals but are all likely doing difficult work under a lot of pressure. AutoML can be a significant force multiplier for ML projects, as we learned in our interviews.

While the term “automated ML” might imply a push-button, one-stop-shop nature, ML practitioners are valuable contributors and supervisors to AutoML. Humans improve the efficiency, effectiveness and safety of AutoML tools by instructing, advising and safeguarding them. With this in mind, the research study also identified guidelines to consider for successful human-automation partnerships:

- Focus on automating the parts of the process that consume valuable data engineering time. Currently there are only 2.5 data engineer job applicants for every data engineer position (compare this to 4.76 candidates per data scientist job listing or 10.8 candidates per web developer job listing according to DICE 2020 Tech Job Report); there is much demand and little supply for this skill set.

- Establish trust with users as well as agency for those users. When AutoML systems deprive users of visibility and control, those systems cannot be a part of real-world uses where they can have an impact.

- Develop user experience and user interfaces that adapt for user proficiency. The trend for AutoML systems is to develop more capabilities for novice and low-code users. Although some users have the ability to export raw model-training code, it is often a distraction from their workflow, leading to wasted time exploring low-impact code. Further, when novices are afforded the option to see raw model-training code it requires them to self-select the appropriate modality (information and customizability provided to the user). Matching expert users with the “novice modality” causes frustration due to lack of control. In the other direction, novices get distracted by things they don’t understand.

- Provide an interactive, multi-touch exploration for more advanced users. Iteration is the habitual and necessary work style of ML practitioners, and the tool should aid in the process rather than interrupt it.

- Let the platform be a translator and shared truth for varied stakeholders. The conversations between business users and ML practitioners would greatly benefit from a platform and shared vocabulary. Providing a trusted space for conversation would remove that burden that frequently falls to the ML engineers.

AutoML systems should seek to balance human control with automation: focusing on the best opportunities for automation and allowing humans to perform the parts that are most suited to humans, namely those that draw on contextual awareness and domain expertise.

Is AutoML ready?

As a collection of tools, AutoML capabilities have proven value but need to be vetted more thoroughly. Segments of these products can help the data scientist improve outcomes and efficiencies. AutoML can provide rapid prototyping. Citizen scientists, innovators, and organizations with fewer resources can employ machine learning. However, there is not an effective end-to-end AutoML solution available now.

Are we focusing on the right things in the automation of ML today? Should it be hyperparameter tuning or data engineering? Are the expectations appropriate?

In our survey, we found that practitioners are much more open to automating the mechanical parts of ML development and deployment to accelerate the iteration speed for ML models. However, AutoML systems seem to focus on the processes over which the users want to have the most agency. The issues around bias in particular suggest that AutoML providers and practitioners should be aware of the deficiencies of AutoML for model development.

The tasks that are more amenable to automation are involved in machine learning operations (MLOps). They include tasks such as data pipeline building, model serving, and model monitoring. What’s different about these tasks is that they help the users build models rather than remove the user from the model building process (which, as we noted earlier, is bad for transparency and bias reasons).

MLOps is a nascent field that requires repetitive engineering work and uses tooling and procedures that are relatively ill-defined. With a talent shortage for data engineering (on average, it takes 18 months to fill a job position), MLOps represents a tremendous opportunity for automation and standardization.

So rather than focus on AutoML, the future of automation for ML and AI rests in the ability for us to realize the potential of AutoMLOps.

Java News Roundup: JReleaser 1.2, Spring Batch, PrimeFaces, Quarkus, JobRunr, Apache Beam

MMS • Michael Redlich

Article originally posted on InfoQ. Visit InfoQ

It was very quiet for Java news during the week of August 22nd, 2022, featuring news from JDK 19, JDK 20, Spring Batch 5.0.0-M5, Quarkus 2.11.3, JReleaser 1.2.0, PrimeFaces 12.0.0-RC3, JobRunr 5.1.8, Apache Beam 2.41.0 and Apache Johnzon 1.2.19.

JDK 19

JDK 19 remains in its release candidate phase with the anticipated GA release on September 20, 2022. The release notes include links to documents such as the complete API specification and an annotated API specification comparing the differences between JDK 18 (Build 36) and JDK 19 (Build 36). InfoQ will follow up with a more detailed news story.

JDK 20

Build 12 of the JDK 20 early-access builds was also made available this past week, featuring updates from Build 11 that include fixes to various issues. Further details on this build may be found in the release notes.

For JDK 19 and JDK 20, developers are encouraged to report bugs via the Java Bug Database.

Spring Framework

After a very busy previous week, it was a very quiet week for the Spring team.

On the road to Spring Batch 5.0.0, the fifth milestone release was made available with updates that include: removing the autowiring of jobs in JobLauncherTestUtils class; a migration to JUnit Jupiter; and improvements in documentation. This release also features dependency upgrades to Spring Framework 6.0.0-M5, Spring Data 2022.0.0-M5, Spring Integration 6.0.0-M4, Spring AMQP 3.0.0-M3, Spring for Apache Kafka 3.0.0-M5, Micrometer 1.10.0-M4 and Hibernate 6.1.2.Final. And finally, Spring Batch 5.0.0-M5 introduces two deprecations, namely: the Hibernate ItemReader and ItemWriter interfaces for cursor/paging are now deprecated in favor of using the ones based on the Jakarta Persistence specification; and the AssertFile utility class was also deprecated due to the discovery of two static methods in JUnit that provide the same functionality. More details on this release may be found in the release notes.

Quarkus

Red Hat has released Quarkus 2.11.3.Final that ships with a comprehensive fix for CVE-2022-2466, a vulnerability discovered in the SmallRye GraphQL server extension in which server requests were not properly terminated. There were also dependency upgrades to mariadb-java-client 3.0.7, postgresql 42.4.1 and 42.4.2 and mysql-connector-java 8.0.30. Further details on this release may be found in the release notes.

JReleaser

Version 1.2.0 of JReleaser, a Java utility that streamlines creating project releases, has been made available featuring: support for Flatpak as a packager; the ability for basedir to be a named template; support for a message file on Twitter via Twitter4J, with each line treated as a separate message and empty or blank lines skipped; and an option to configure unused custom launchers, as it was discovered via the logs that the --add-launcher argument was not being passed. There were also numerous dependency upgrades such as: jsonschema 4.26.0, github-api 1.308, slf4j 2.0.0, aws-java-sdk 1.12.270 and 1.12.290 and jsoup 1.15.3. More details on this release may be found in the changelog.

PrimeFaces

On the road to PrimeFaces 12.0.0, the third release candidate has been made available featuring: a fix for the AutoComplete component not working on Apache MyFaces; a new showMinMaxRange attribute to allow navigation past min/max dates with a default value of true; and a new showSelectAll attribute to the DataTable component to display the “select all checkbox” inside the column’s header. Further details may be found in the list of issues.

JobRunr

Ronald Dehuysser, founder and primary developer of JobRunr, a utility to perform background processing in Java, has released version 5.1.8 that features the ability to turn off metrics for background job servers.

Apache Software Foundation

Apache Beam 2.41.0 has been released featuring numerous bug fixes and support for the KV class for the Python RunInference transform for Java. More details on this release may be found in the release notes and a more in-depth introduction to Apache Beam may be found in this InfoQ technical article.

Version 1.2.19 of Apache Johnzon, a project that fully implements the JSR 353, Java API for JSON Processing (JSON-P), and JSR 367, Java API for JSON Binding (JSON-B) specifications, has been released featuring: basic support of enumerations in the PojoGenerator class; adding JSON-Schema to onEnum callback; ensure an import of JsonbProperty when enumerations use it; and expose the toJavaName() method to subclasses in the PojoGenerator class. Further details on this release may be found in the changelog.

MMS • RSS

Posted on mongodb google news. Visit mongodb google news

MongoDB, Inc. (NASDAQ:MDB – Get Rating) has been assigned an average rating of “Moderate Buy” from the nineteen ratings firms that are presently covering the stock, MarketBeat reports. One equities research analyst has rated the stock with a sell recommendation and fourteen have issued a buy recommendation on the company. The average 12-month price objective among analysts that have covered the stock in the last year is $414.78.

Several equities research analysts have recently weighed in on MDB shares. Canaccord Genuity Group lowered their price objective on MongoDB from $400.00 to $300.00 in a research note on Thursday, June 2nd. Barclays upped their price objective on MongoDB from $338.00 to $438.00 and gave the company an “overweight” rating in a research note on Tuesday, August 16th. Needham & Company LLC boosted their target price on MongoDB from $310.00 to $350.00 and gave the stock a “buy” rating in a report on Friday, June 10th. Redburn Partners started coverage on MongoDB in a report on Wednesday, June 29th. They issued a “sell” rating and a $190.00 target price on the stock. Finally, Stifel Nicolaus reduced their target price on MongoDB from $425.00 to $340.00 in a report on Thursday, June 2nd.

Insider Buying and Selling at MongoDB

In other MongoDB news, Director Dwight A. Merriman sold 3,000 shares of the company’s stock in a transaction on Wednesday, June 1st. The shares were sold at an average price of $251.74, for a total transaction of $755,220.00. Following the completion of the sale, the director now owns 544,896 shares in the company, valued at approximately $137,172,119.04. The sale was disclosed in a filing with the Securities & Exchange Commission. In related news, Director Dwight A. Merriman sold 629 shares of the company’s stock in a transaction on Tuesday, June 28th. The shares were sold at an average price of $292.64, for a total value of $184,070.56. Following the completion of the sale, the director now owns 1,322,755 shares in the company, valued at approximately $387,091,023.20. The transaction was also disclosed in a filing with the SEC. In the last 90 days, insiders sold 43,795 shares of company stock worth $12,357,981. Insiders own 5.70% of the company’s stock.

Hedge Funds Weigh In On MongoDB

Large investors have recently added to or reduced their stakes in the company. Confluence Wealth Services Inc. bought a new position in MongoDB during the 4th quarter worth about $25,000. Bank of New Hampshire bought a new position in MongoDB during the 1st quarter worth about $25,000. John W. Brooker & Co. CPAs bought a new position in MongoDB during the 2nd quarter worth about $26,000. Prentice Wealth Management LLC bought a new position in MongoDB during the 2nd quarter worth about $26,000. Finally, Venture Visionary Partners LLC bought a new position in MongoDB during the 2nd quarter worth about $28,000. Institutional investors own 88.70% of the company’s stock.

MongoDB Trading Down 2.8%

Shares of MDB opened at $353.57 on Monday. MongoDB has a 12-month low of $213.39 and a 12-month high of $590.00. The company has a quick ratio of 4.16, a current ratio of 4.16 and a debt-to-equity ratio of 1.69. The firm has a market capitalization of $24.08 billion, a price-to-earnings ratio of -73.05 and a beta of 0.96. The stock’s 50-day moving average price is $314.31 and its 200-day moving average price is $331.88.

MongoDB (NASDAQ:MDB – Get Rating) last released its quarterly earnings data on Wednesday, June 1st. The company reported ($1.15) earnings per share for the quarter, beating the consensus estimate of ($1.34) by $0.19. MongoDB had a negative return on equity of 45.56% and a negative net margin of 32.75%. The company had revenue of $285.45 million for the quarter, compared to analyst estimates of $267.10 million. During the same period in the prior year, the company posted ($0.98) EPS. The company’s revenue was up 57.1% compared to the same quarter last year. As a group, research analysts predict that MongoDB will post -5.08 earnings per share for the current year.

MongoDB Company Profile

MongoDB, Inc. provides a general-purpose database platform worldwide. The company offers MongoDB Enterprise Advanced, a commercial database server for enterprise customers to run in the cloud, on-premise, or in a hybrid environment; MongoDB Atlas, a hosted multi-cloud database-as-a-service solution; and Community Server, a free-to-download version of its database, which includes the functionality that developers need to get started with MongoDB.


MMS • RSS

Posted on mongodb google news. Visit mongodb google news

MongoDB, Inc. (NASDAQ:MDB – Get Rating) has received a consensus rating of “Moderate Buy” from the nineteen ratings firms that are presently covering the firm, Marketbeat Ratings reports. One research analyst has rated the stock with a sell recommendation and fourteen have assigned a buy recommendation to the company. The average 1 year price target among brokerages that have covered the stock in the last year is $414.78.

A number of research analysts recently weighed in on the company. Piper Sandler dropped their price objective on MongoDB from $430.00 to $375.00 and set an “overweight” rating on the stock in a report on Monday, July 18th. UBS Group upped their price objective on MongoDB from $315.00 to $345.00 and gave the stock a “buy” rating in a report on Wednesday, June 8th. Canaccord Genuity Group dropped their price objective on MongoDB from $400.00 to $300.00 in a report on Thursday, June 2nd. Morgan Stanley dropped their price objective on MongoDB from $378.00 to $368.00 and set an “overweight” rating on the stock in a report on Thursday, June 2nd. Finally, Stifel Nicolaus dropped their price objective on MongoDB from $425.00 to $340.00 in a report on Thursday, June 2nd.

Insiders Place Their Bets

In other MongoDB news, CRO Cedric Pech sold 350 shares of the company’s stock in a transaction dated Tuesday, July 5th. The stock was sold at an average price of $264.46, for a total transaction of $92,561.00. Following the completion of the sale, the executive now directly owns 45,785 shares in the company, valued at $12,108,301.10. The sale was disclosed in a legal filing with the Securities & Exchange Commission. Also, Director Dwight A. Merriman sold 3,000 shares of the stock in a transaction dated Wednesday, June 1st. The shares were sold at an average price of $251.74, for a total value of $755,220.00. Following the completion of the sale, the director now owns 544,896 shares of the company’s stock, valued at approximately $137,172,119.04. This sale was also disclosed in a filing with the SEC. Insiders sold 43,795 shares of company stock worth $12,357,981 over the last three months. Insiders own 5.70% of the stock.

Institutional Investors Weigh In On MongoDB

A number of hedge funds have recently bought and sold shares of the business. Commerce Bank grew its stake in MongoDB by 1.7% during the 4th quarter. Commerce Bank now owns 1,413 shares of the company’s stock valued at $747,000 after purchasing an additional 24 shares in the last quarter. Total Clarity Wealth Management Inc. lifted its holdings in MongoDB by 6.9% during the 1st quarter. Total Clarity Wealth Management Inc. now owns 465 shares of the company’s stock worth $206,000 after buying an additional 30 shares during the last quarter. Profund Advisors LLC lifted its holdings in MongoDB by 5.2% during the 4th quarter. Profund Advisors LLC now owns 647 shares of the company’s stock worth $342,000 after buying an additional 32 shares during the last quarter. Ieq Capital LLC lifted its holdings in MongoDB by 2.3% during the 1st quarter. Ieq Capital LLC now owns 1,485 shares of the company’s stock worth $659,000 after buying an additional 34 shares during the last quarter. Finally, Wedbush Securities Inc. lifted its holdings in MongoDB by 1.8% during the 1st quarter. Wedbush Securities Inc. now owns 2,253 shares of the company’s stock worth $999,000 after buying an additional 40 shares during the last quarter. Institutional investors and hedge funds own 88.70% of the company’s stock.

MongoDB Price Performance

Shares of NASDAQ:MDB opened at $353.57 on Monday. The company has a current ratio of 4.16, a quick ratio of 4.16 and a debt-to-equity ratio of 1.69. MongoDB has a 12-month low of $213.39 and a 12-month high of $590.00. The stock has a 50-day moving average of $314.31 and a 200-day moving average of $331.88. The stock has a market cap of $24.08 billion, a P/E ratio of -73.05 and a beta of 0.96.